Does your current testing approach match the speed and complexity of modern software development? Today, shipping apps with as few bugs as possible is a necessity.

With the AI and ML combination, dev and QA teams can reinvent the way they do testing and drastically cut down on testing effort while maintaining high software quality.

Did you know that GenAI-based tools will write 70% of software tests by 2028, according to IDC, and that the use of AI in software testing helps reduce test design and execution effort by 30%, according to Capgemini?

This article dives into what AI in software testing is and why to use artificial intelligence in QA testing – and offers actionable tips to level up your entire testing process and be in sync with the current AI software engineering practices.

Role of AI in Software Testing

Artificial intelligence and machine learning algorithms in software testing enhance all stages of the Software Testing Life Cycle (STLC). The adoption of AI in Quality Assurance continues to rise because it boosts productivity while automating processes and enhancing test accuracy.

The traditional testing approach depends on manual test script development, while an AI-powered system learns from data to make intelligent decisions.

As a result, AI test automation tools are a good fit for identifying critical code areas, recommending test cases, and generating them automatically. What’s more, these tools adapt to user interface modifications without requiring regular updates: they can still detect and test interface elements even when buttons move or their labels change. This is especially relevant for visual testing and codeless tools.

The current software testing industry heavily depends on AI to speed up operations while improving test quality. The artificial intelligence system helps create tests and run them while analyzing results and adapting to new changes through learned knowledge.

With AI in software testing, you can increase testing speed, build smarter and more scalable solutions, and reduce the need for test maintenance. Once AI is integrated, teams release software faster and with more confidence, combining their own effort with artificial intelligence capabilities.

What is AI Testing?

When we talk about AI testing, we mean the use of Artificial Intelligence (AI) and Machine Learning (ML) technologies in the testing process to improve its speed, accuracy, and efficiency. Both are becoming essential in modern QA strategies.

In contrast to classical testing, AI-based approaches analyze testing data and previous test cycles intelligently, support test case selection and prioritization, detect UI inconsistencies, and much more. Smart software testing solutions, such as predictive analytics, pattern recognition, and self-healing scripts, improve overall software quality.

Manual Software Testing VS AI in Software Testing

It is no secret that the traditional software testing process requires significant time and effort: QA and testing teams manually design test cases, update them after every code change, and often simulate real user interactions inadequately.

Of course, teams can write automated scripts for some components, but those scripts also require continuous adjustment. AI in software testing has changed the situation and made testing more reliable, efficient, and effective (provided that teams follow the right approach and use the right AI testing tools).

Thanks to AI, teams can automate many repetitive and mundane tasks with less risk, identify and predict software defects more accurately, and shorten test cycles while improving the quality of their products. Furthermore, it helps them make adjustments before deployment and predict areas that are likely to fail, reducing the chances of human error and overlooked issues.

| Manual Testing | AI Testing |
| --- | --- |
| The process is time-consuming and requires a lot of human effort. | AI-based tests save time and money and make the product development process faster. |
| Testing cycles with QA engineers are longer and less efficient. | Automated processes speed up test execution. |
| Manual test runs are less productive. | Automated test cases run with minimal human involvement and higher productivity. |
| High accuracy can’t be guaranteed because of the chance of human error. | Smart automation of testing activities leads to better test accuracy. |
| Not all testing scenarios can be considered, resulting in lower test coverage. | Creation of various test scenarios increases test coverage. |
| Parallel testing is costly, requiring significant human resources and time. | Parallel tests are supported with lower resource use and costs. |
| Regression testing is slower and often selective (tests are prioritized) due to time constraints. | Regression testing is more comprehensive and faster. |

💡 Summary

Manual testing focuses on human insight and intuition, while AI in testing brings speed, adaptability, and data-driven intelligence to the QA process.

🧠 When to Use What?

→ Manual Testing: Best for exploratory testing, usability evaluation, edge cases, or when AI setup is not justified.

→ AI Testing: Ideal for repetitive tasks, large-scale regression, risk prediction, and accelerating Agile and CI/CD workflows.

Let’s dive deeper into the use cases of how AI is used in real testing workflows.

Current Landscape: How to Use AI in Software Testing?

Below are popular use cases for applying generative AI in software testing:

✨ Test generation & Accelerated testing

Testers face a long and tiresome process of creating test scenarios. Thanks to gen AI in software testing, this process has changed. Now, AI-based tools can be applied for the generation of tests.

They analyze your codebase, requirements, user acceptance criteria, and past bugs to automatically create new tests. These tests cover a wide array of scenarios, catch edge cases that human testers might miss, and accelerate the testing process.

✨ Low-code testing | No-code testing

The combination of low-code and no-code testing with artificial intelligence allows testing teams to create and execute tests quickly, with fewer people and less time. Even non-technical team members can actively participate in test automation, and the resulting faster feedback loops contribute to more stable software releases.

✨ Test data generation

With AI test data generation, QA teams can get new data that mimics aspects of an original real-world dataset to test applications, develop features, and even train ML/AI models. It helps them achieve better test results as well as better AI model predictability and performance.

AI can automate the generation of test data in several ways:

→ To create datasets that cover a wide range of scenarios, user behaviors, and varied inputs.

→ To produce anonymized data that keeps key features but contains no personally identifiable information.

→ To generate test data that closely reproduces user actions and situations.

→ To create data for rare and complex testing scenarios which are difficult to capture with real-world data alone.
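The ideas in the list above can be sketched in a few lines of Python. This is only an illustration, not how any particular AI tool works: plain seeded random sampling stands in for a trained generative model, and the function name `make_synthetic_users`, the record fields, and the `example.test` domain are assumptions made for this example.

```python
import random
import string


def make_synthetic_users(count, seed=0):
    """Generate fake user records that contain no real personal data."""
    rng = random.Random(seed)  # seeded so every test run gets the same data
    users = []
    for i in range(count):
        name = "".join(rng.choices(string.ascii_lowercase, k=8))
        users.append({
            "id": i + 1,
            "username": name,
            "email": f"{name}@example.test",  # reserved test domain, not a real inbox
            "age": rng.randint(18, 90),
        })
    # Hand-picked rare cases that random sampling is unlikely to produce
    users.append({"id": count + 1, "username": "", "email": "", "age": 0})
    users.append({"id": count + 2, "username": "x" * 256, "email": "a@b", "age": 150})
    return users


users = make_synthetic_users(3, seed=42)
```

Because the generator is seeded, a failing test can be reproduced with exactly the same records, which is harder to guarantee with data copied from production.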

✨ Test report generation

Artificial intelligence reduces the time spent on manual report creation. With AI-based algorithms, you can automate various aspects of report creation and quickly build test reports that help teams do the following:

- Investigate the failure reasons after the completion of tests.

- Visualize test results and provide the visual indicators of test performance.

- Configure simple and understandable reports for your teams.

- Analyze the roots of failure and suggest possible solutions for resolution.
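To make the reporting tasks above more concrete, here is a minimal sketch that condenses raw test results into a summary with the most frequent failure causes. The `summarize` function and the shape of the result records (`name`, `status`, `error`) are hypothetical; a real AI-based reporter would go further and cluster similar error messages or suggest fixes.

```python
from collections import Counter


def summarize(results):
    """Condense raw test results into totals and the most common failure causes."""
    failed = [r for r in results if r["status"] == "failed"]
    causes = Counter(r["error"] for r in failed)
    return {
        "total": len(results),
        "passed": len(results) - len(failed),
        "failed": len(failed),
        "top_causes": causes.most_common(3),  # most frequent error types first
    }


results = [
    {"name": "test_login", "status": "passed", "error": None},
    {"name": "test_cart", "status": "failed", "error": "TimeoutError"},
    {"name": "test_search", "status": "failed", "error": "TimeoutError"},
    {"name": "test_profile", "status": "failed", "error": "AssertionError"},
    {"name": "test_logout", "status": "passed", "error": None},
]
summary = summarize(results)
```

Here two of the three failures share the same cause, which points the team at a timeout problem before anyone reads individual logs.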

✨ Bug Analysis & Predictive Defects

Based on past test data and pattern recognition, AI-based tools can predict which areas of the code are likely to fail. This helps testers concentrate their efforts on high-risk areas and boosts the chances of detecting defects early in the automation testing process. Thanks to predictive defect analytics, test case prioritization and bug identification are becoming quicker and more efficient.
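A very simple stand-in for such a prediction is to rank files by how often their past changes turned out to be bug fixes. The sketch below assumes two hypothetical inputs, `change_log` and `bug_fix_log`, each a list of file names taken from version control history; real tools would train a model on many more signals.

```python
from collections import Counter


def defect_risk(change_log, bug_fix_log):
    """Rank files by the share of their past changes that were bug fixes."""
    changes = Counter(change_log)
    fixes = Counter(bug_fix_log)  # a missing file counts as zero fixes
    scores = {path: fixes[path] / total for path, total in changes.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


change_log = ["cart.py", "cart.py", "cart.py", "cart.py",
              "login.py", "login.py", "search.py"]
bug_fix_log = ["cart.py", "cart.py", "cart.py", "login.py"]
ranking = defect_risk(change_log, bug_fix_log)
# ranking starts with ("cart.py", 0.75): three of its four changes were fixes
```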

✨ Risk-based testing

Risk-based testing focuses on areas that pose the greatest risk to the business and the user experience. With AI-based tools, teams can calculate a “risk score” for each feature or workflow and increase test coverage where the score is high. AI helps them prioritize testing efforts based on potential risks and balance the use of resources, concentrating on areas with the greatest potential impact.
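One common way to express a risk score, used here purely as an assumption, is likelihood of failure multiplied by business impact, each rated on a 1 to 5 scale. The feature names and ratings below are made up for illustration; in an AI-based tool the ratings would come from models rather than from a hand-written table.

```python
def risk_score(likelihood, impact):
    """Classic risk matrix: likelihood of failure times business impact."""
    return likelihood * impact


features = {
    "checkout": {"likelihood": 4, "impact": 5},
    "profile page": {"likelihood": 2, "impact": 2},
    "search": {"likelihood": 3, "impact": 3},
}

# Test the riskiest features first
prioritized = sorted(features, key=lambda name: risk_score(**features[name]), reverse=True)
```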

Why Teams Need AI in Software Test Automation

Without fear of oversimplifying, the biggest challenge that testing teams face is automating repetitive testing tasks that require a lot of time to perform. However, AI in testing solves more than this one problem: it can handle other, no less pressing issues. Let’s discover why teams need AI in software test automation:

- Teams need artificial intelligence to automate similar workflows and orchestrate the testing process.

- Teams need artificial intelligence to highlight which test cases to execute after a feature’s code changes, to make sure that nothing breaks in the app before its release.

- Teams need artificial intelligence to know what test scripts to update after changes in the UI.

- Teams need artificial intelligence to know what feature or functionality requires immediate attention.

- Teams need artificial intelligence to expand test coverage by revealing edge cases and to allocate testing resources efficiently.

- Teams need artificial intelligence to reduce delays in regression testing and find possibilities to speed up testing.

However, it is important to mention that artificial intelligence in testing cannot deal with situations not included in the training data or replace human judgment.

AI in Software Testing Life Cycle

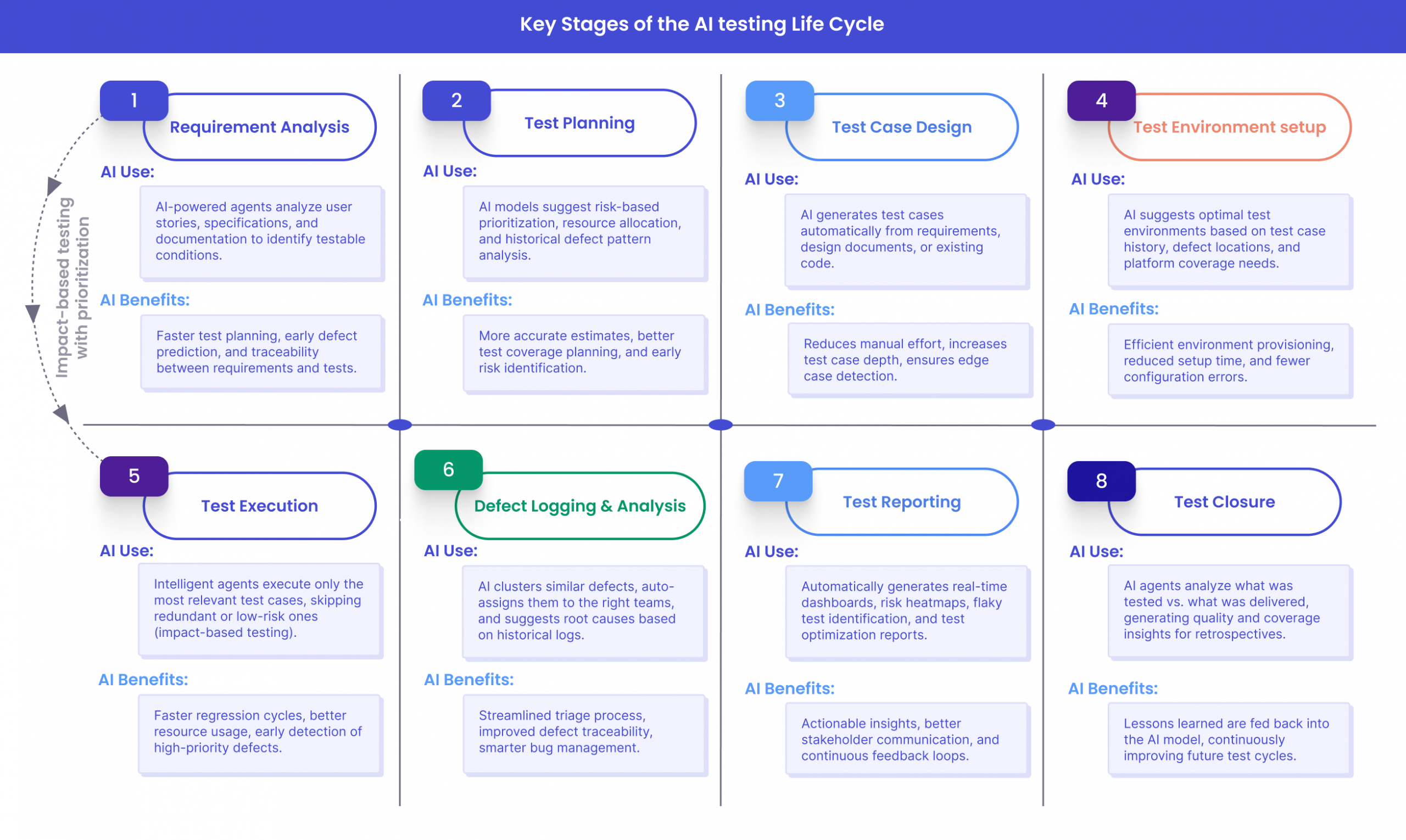

Artificial intelligence can be integrated into the key stages of the testing lifecycle – planning, design, execution, and optimisation. Below, you can find more information about each stage:

#1: Test Planning

With artificial intelligence, the requirement documentation, user stories, and specifications for the testing process can be processed in seconds. Based on the information it gathers from them, AI can convert them into testable scenarios.

With this approach, teams can reduce the chance of errors during test creation and cut the manual effort required to analyze large volumes of documentation and identify inconsistencies in the earlier phases of the development cycle.

Also, AI-based algorithms can go through the historical project data, predict high-risk areas of the application that are more prone to failure, and redirect testing efforts accordingly.

#2: Test Design

Using AI, teams can automatically create tests based on requirements and user behaviors, and get suggestions for areas of the application that require further testing. With accurate and varied test data, teams can also make sure that the tests reflect the situations that occur in real-world scenarios. Artificial intelligence can also use that data for variability testing and for compliance testing around GDPR, which helps you stay compliant with user privacy and security requirements.

#3: Test Execution

The goal of AI at this stage is to minimize the time required for test execution and improve real-time decision-making about testing strategy. Teams can integrate AI to create AI-driven tests that automatically detect UI changes and update the locators within the tests, which makes tests more scalable and dependable. Furthermore, teams can apply AI to work out the optimal execution strategy: which tests to run and on which platform or environment, taking into account previous testing results and current changes within the application infrastructure.

#4: Smart bug triaging

If bugs are not recorded, mapped, and reported properly, the time and effort needed to identify the root cause and fix them is much higher. Thanks to AI-based natural language processing techniques, teams can handle bug triage intelligently.

Artificial intelligence can automate the creation, update, and follow-up of bug reports and give you a full picture of your tests’ performance. By spotting flaky tests and using historical data, it identifies the best tests for the task instead of wasting resources on unnecessary testing.
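One triage task that text analysis can help with is spotting duplicate bug reports. The sketch below uses word-overlap (Jaccard) similarity as a deliberately simple stand-in for real natural language processing; the function names, the sample reports, and the 0.5 threshold are all assumptions for illustration.

```python
import re


def tokens(text):
    """Lowercase the text and split it into a set of words and numbers."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a, b):
    """Share of words the two texts have in common (0.0 to 1.0)."""
    ta, tb = tokens(a), tokens(b)
    if not ta and not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


def find_duplicates(new_report, existing_reports, threshold=0.5):
    """Return existing reports that look like the same bug as the new one."""
    return [r for r in existing_reports if jaccard(new_report, r) >= threshold]


existing = [
    "Checkout button does not respond on mobile",
    "Search results page loads slowly",
]
new = "Checkout button does not respond on mobile Safari"
duplicates = find_duplicates(new, existing)
```

Word overlap misses paraphrases ("unresponsive" versus "does not respond"), which is exactly the gap that embedding-based NLP models are meant to close.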

#5: Self-healing tests

Traditional automated testing requires extensive time to maintain scripts because of UI updates and functional changes. The testing scripts frequently fail and need human intervention for updates when working in environments with dynamic development. AI-based algorithms can be utilized for autonomous issue detection, precise test case generation, and software change adaptation without requiring human involvement.
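The core idea behind self-healing can be sketched without any browser: when the primary locator no longer matches, fall back to the element that shares the most remembered attributes. Everything here is hypothetical, including the `find_element` helper and the list-of-dicts page model; real tools rely on richer signals and learned models.

```python
def find_element(dom, locator):
    """Return (element, healed): match by id first, then by closest attributes."""
    for element in dom:
        if element.get("id") == locator["id"]:
            return element, False  # found directly, no healing needed

    def similarity(element):
        # Count how many remembered attributes still match this element
        return sum(1 for key, value in locator["attributes"].items()
                   if element.get(key) == value)

    best = max(dom, key=similarity, default=None)
    if best is None or similarity(best) == 0:
        raise LookupError(f"no element resembles {locator['id']!r}")
    return best, True  # healed: the caller should store the new id


dom = [
    {"id": "nav-home", "tag": "a", "text": "Home"},
    {"id": "btn-submit-v2", "tag": "button", "text": "Place order", "class": "primary"},
]
locator = {
    "id": "btn-submit",  # stale id from before the UI change
    "attributes": {"tag": "button", "text": "Place order", "class": "primary"},
}
element, healed = find_element(dom, locator)
```

Returning the `healed` flag matters: a silently healed locator can hide a real regression, so healed matches are worth logging for human review.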

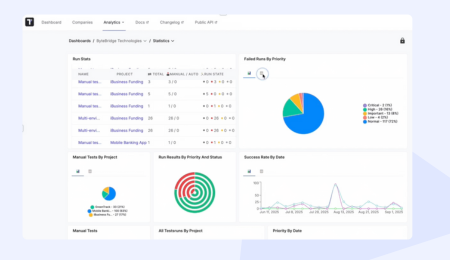

#6: Test Reporting

With AI-powered reporting, testing teams can generate actionable testing dashboards with detailed information and recommendations. It also speeds up defect triaging and helps teams define a resolution strategy to fix bugs in less time before the software system is deployed. In the long run, it improves visibility across multiple teams and enables them to make faster decisions, shortening both the feedback loops and the production cycle.

#7: Test Execution Optimization (Maintenance)

AI-powered systems that learn from past executions and user interfaces help teams identify flaky or low-value tests and recommend whether to remove or refactor them in order to meet the requirements. Thanks to AI, teams can trace failures back to code changes, infrastructure issues, or integration errors and reduce the number of troubleshooting steps.

Extra Software Testing Tasks That Test Management AI Solves

Flaky test detection

As your test suite grows, flaky tests become a common problem for many development and QA teams. If left unchecked, they lead to false positives (tests that fail but shouldn’t) or false negatives (tests that pass but shouldn’t). Thanks to AI-based tools, teams can identify and score flaky tests, and then decide which tests to re-run or skip and which failures mean the code needs fixing.
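A simple heuristic for scoring flakiness, shown here as a sketch rather than as any tool's actual algorithm, is to look for tests that both pass and fail on the same commit: the code did not change, so the outcome should not either. The `flakiness_scores` function and the `(test, commit, passed)` record format are assumptions for this example.

```python
from collections import defaultdict


def flakiness_scores(runs):
    """Per test, the share of commits on which it both passed and failed."""
    outcomes = defaultdict(lambda: defaultdict(set))
    for test, commit, passed in runs:
        outcomes[test][commit].add(passed)
    scores = {}
    for test, by_commit in outcomes.items():
        mixed = sum(1 for results in by_commit.values() if len(results) == 2)
        scores[test] = mixed / len(by_commit)
    return scores


runs = [
    ("test_login", "abc1", True), ("test_login", "abc1", False),
    ("test_login", "abc2", True), ("test_login", "abc2", True),
    ("test_cart", "abc1", False), ("test_cart", "abc1", False),
    ("test_cart", "abc2", True),
]
scores = flakiness_scores(runs)
```

In this data `test_login` is flaky on one of its two commits, while `test_cart` failed consistently on `abc1` and scores zero, which suggests a real defect that was later fixed rather than flakiness.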

Code coverage analysis

In testing, code coverage quantifies how much of the source code is exercised when the test suite runs. By measuring what percentage of the code is executed during those tests, teams can judge how effective the testing strategy is.

High code coverage indicates that a larger portion of the code has been exercised by tests. With the integration of AI, teams can get a full coverage review, have the app code analyzed thoroughly, and receive suggested tests that can bring test coverage above 95%. This reduces the likelihood of defects escaping into production due to insufficient tests.
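The percentage itself is simple arithmetic: executed lines divided by executable lines. The sketch below works on plain line numbers and uses hypothetical helper names; in practice a coverage tool collects these numbers, and an AI assistant would then propose tests for the lines reported as uncovered.

```python
def line_coverage(executable_lines, executed_lines):
    """Percentage of executable lines that ran at least once during the tests."""
    executable = set(executable_lines)
    if not executable:
        return 0.0  # nothing to cover, so report zero rather than divide by zero
    covered = executable & set(executed_lines)
    return 100.0 * len(covered) / len(executable)


def uncovered(executable_lines, executed_lines):
    """Line numbers that no test reached: candidates for new tests."""
    return sorted(set(executable_lines) - set(executed_lines))


executable = [1, 2, 3, 5, 8, 9, 10, 12]
executed = [1, 2, 3, 5, 8, 12]
percentage = line_coverage(executable, executed)  # 6 of 8 lines, so 75.0
missing = uncovered(executable, executed)         # lines 9 and 10
```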

Regression automation

With AI-based regression testing tools, teams can adapt to changes in scripts and prioritize tests. Artificial intelligence can manage large numbers of regression tests by automatically detecting changes and identifying areas that are likely to be most affected by new updates. By analyzing defect patterns, user behavior, and historical data, artificial intelligence helps identify risk-prone areas and provides thorough testing of critical functionalities, saving manual effort and accelerating test cycles.
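The change-detection part can be illustrated with a basic impact map: run only the regression tests that touch files changed in the latest update. The `select_tests` function and the `coverage_map` structure are assumptions for this sketch; AI-based tools typically build and refine such a map automatically and add risk weighting on top.

```python
def select_tests(changed_files, coverage_map):
    """Pick the tests that exercise at least one changed file.

    coverage_map maps a test name to the set of source files it exercises.
    """
    changed = set(changed_files)
    return sorted(test for test, files in coverage_map.items() if files & changed)


coverage_map = {
    "test_checkout": {"cart.py", "payment.py"},
    "test_login": {"auth.py"},
    "test_search": {"search.py", "index.py"},
}
selected = select_tests(["payment.py", "index.py"], coverage_map)
```

With these inputs only `test_checkout` and `test_search` are selected, so `test_login` can be skipped for this change.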

Test orchestration

With orchestration in place, teams can perform several rounds of testing within an extremely limited amount of time and achieve the desired levels of quality. AI-based test orchestration optimizes the selection of tests and intelligently prioritizes the right ones for execution based on code changes and risk, rather than simply running everything.

With its help, teams can dynamically manage the execution of tests across diverse environments and validate the reports for successes/failures, including the report on smoke testing and performance testing, and configure the right capacity of resources needed.

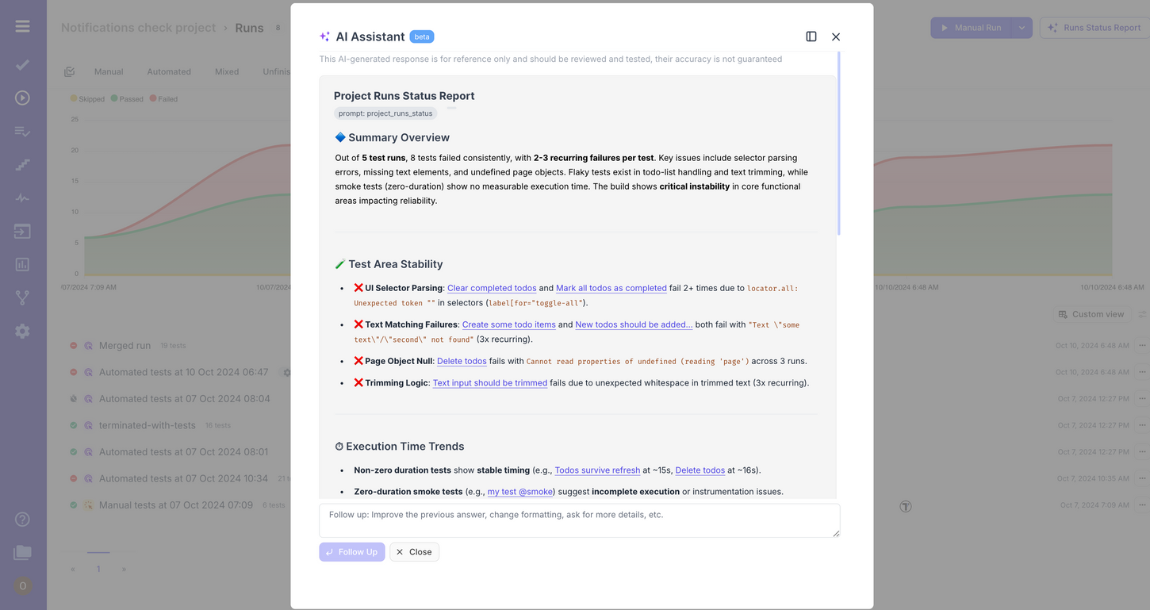

For example, the AI Testing Assistant from Testomat.io can help QA teams decide whether a project is ready for release or assess its quality.

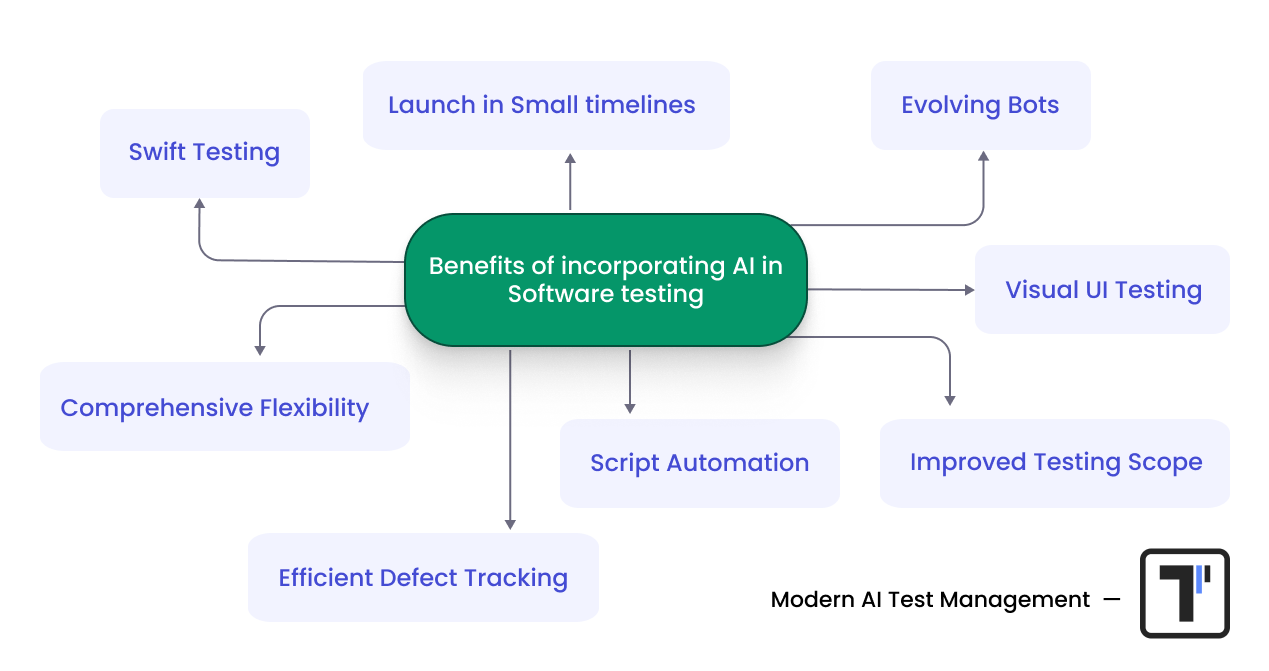

Benefits of AI in Software Testing

AI brings significant improvements to how software is tested, especially in modern Agile, shift-left, and fast-paced CI/CD, DevOps, and TestOps workflows. Below are the key benefits of incorporating AI:

- Visual AI Verification. With AI, teams can recognize patterns and images that help to detect visual errors in apps through visual testing, which helps make sure that all the visual elements are displayed properly.

- Up-To-Date Tests. As the app grows, it changes as well, so tests should also be updated. Instead of teams spending hours updating broken test scripts, artificial intelligence can automatically adjust tests to fit the latest version of your application.

- Improved Accuracy and Coverage. By scanning large amounts of data, AI finds patterns and highlights areas that require more attention. It also measures how much of the application is tested and reduces the risk of bugs before production.

- Automation of Repetitive Tasks. Artificial intelligence is helpful when it comes to the automation of repetitive tasks and lets teams focus on the things that need human attention, like exploratory testing.

- Faster Execution of Tests. Thanks to AI in software testing, tests can be executed 24/7, which leads to faster feedback and quicker development cycles.

- Reduced Human Error. Manual testing can lead to mistakes. AI does the same work without losing focus and reduces bugs caused by missed steps or overlooked details.

Challenges of AI in software testing

Below, we are going to explore the challenges of AI in testing that development and QA teams face when implementing it:

- AI is highly dependent on data and requires quality data to be trained on for producing correct and unbiased recommendations.

- Devs and QA teams need to constantly monitor and validate the output generated by AI, because even a small error may break existing, working unit tests.

- Devs and QA teams face difficulties in explaining AI-driven decisions and must deal with the risk of biased AI models.

- It is important to mention that AI is not a full replacement for human testers, but a help for them in automating repetitive tasks, speeding up test execution, and improving accuracy.

- AI implementation requires significant initial setup and continuous learning and updates.

- Training is complex and computationally expensive in the initial phase.

Tips for Implementing AI in Software Testing

Below, you can find some information you need to know to successfully implement AI in testing:

✅ Define Goals

To get started with AI implementation, you shouldn’t forget about setting testing goals. All these questions should be asked and answered from the very beginning:

- Do you need to increase test coverage or reduce test execution time?

- Do you need help deciding on software quality or release readiness?

- Do you need to speed up bug triaging?

✅ Choose the Right AI Tool

Taking into account your quality assurance objectives, you need to assess project demands and choose an AI tool that fits your needs and development environment. During the selection process, also weigh the usability, scalability, and integration capabilities of each AI test automation framework.

✅ Prepare High-Quality Training Data

You need to remember that AI testing success depends on training data quality. For the AI to start providing accurate outcomes, it should be trained on quality datasets which go through iterative data refining steps. You need to establish data policies, standards, and metrics that define how data is to be treated at your organization. Also, you shouldn’t forget to implement data audits, which reveal poorly populated fields, data format inconsistencies, duplicated entries, inaccuracies in data, missing data, and outdated entries to make sure the training data remains high quality.
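A data audit of the kind described above can start very small. The sketch below checks a list of records for empty required fields and exact duplicates; the `audit_records` function and the bug-report fields are hypothetical, and a real audit would also cover formats, accuracy, and outdated entries.

```python
def audit_records(records, required_fields):
    """Report records with empty required fields and exact duplicate records."""
    missing = []      # (record index, list of empty field names)
    duplicates = []   # indexes of records that repeat an earlier one
    seen = set()
    for index, record in enumerate(records):
        empty = [f for f in required_fields if record.get(f) in (None, "")]
        if empty:
            missing.append((index, empty))
        key = tuple(record.get(f) for f in required_fields)
        if key in seen:
            duplicates.append(index)
        seen.add(key)
    return {"missing": missing, "duplicates": duplicates}


records = [
    {"title": "Login fails", "severity": "high"},
    {"title": "Login fails", "severity": "high"},
    {"title": "Slow search", "severity": ""},
]
report = audit_records(records, ["title", "severity"])
```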

✅ Incorporate Metrics for AI assessment

You need to establish meaningful success criteria and performance benchmarks aligned with real-world expectations for AI in software testing. With statistical methods and metrics, you can measure the reliability of AI model predictions and its results. Also, you can incorporate human judgment for evaluating AI effectiveness.
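Two widely used metrics for judging predictions are precision (how many flagged items were really defective) and recall (how many defective items were flagged). The sketch below applies them to a hypothetical list of files that an AI tool marked as risky; the file names are invented for illustration.

```python
def precision_recall(predicted, actual):
    """Compare predicted defective items with the ones that really were defective."""
    predicted, actual = set(predicted), set(actual)
    true_positives = len(predicted & actual)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(actual) if actual else 0.0
    return precision, recall


predicted_risky = ["cart.py", "payment.py", "search.py", "auth.py"]
actually_defective = ["cart.py", "payment.py", "profile.py"]
precision, recall = precision_recall(predicted_risky, actually_defective)
# precision is 0.5 (2 of 4 flagged files were defective); recall is 2 of 3
```

Low precision means testers waste time on false alarms, while low recall means defects slip through, so both numbers are worth tracking over time.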

✅ Continuous Monitoring and Improvement

For better results, you need to continuously analyze AI testing results and find areas for improvement, audit training data, and adjust artificial intelligence parameters to keep AI as efficient and flexible for software testing as possible.

Wrapping up: Are you ready for AI and software testing?

It is crucial to remember that there is no “one-size-fits-all” solution anywhere, even in testing. Before implementing AI for software testing, assessing artificial intelligence readiness in your organisation is essential. All the current testing processes, team capabilities, and specific QA challenges should be investigated.

Furthermore, you need to discover areas of weakness where AI can help, choose the right tool to address them, and then start integrating it into your process. If you need any help with AI in testing software, our team understands the AI life cycle and is equipped with the AI-based tool you need for an effective and fast AI software testing process.

Frequently asked questions

How is AI used in software testing?

AI is used to automate test case generation, predict high-risk areas in code, identify bugs faster, and optimize test coverage. It enhances traditional testing with intelligent insights and continuous learning.

What are the benefits of AI-powered software testing?

AI testing improves speed, accuracy, and efficiency. It reduces manual effort, helps detect defects earlier, and supports continuous integration by adapting to code changes automatically.

Which tools use AI for software testing?

Popular AI-driven testing tools include Testomat.io, Testim, Applitools, Functionize, and Mabl. These platforms use machine learning to enhance UI testing, visual validation, and test maintenance.