The AI Testing Agent is an intelligent assistant built directly into the testing workflow at every stage. On the backend, the agent relies on different AI providers, such as OpenAI, Anthropic, AzureAI and SCIM. The default provider is Groq, a company founded by a group of former Google engineers, but you may choose your preferred one. This gives you flexibility in leveraging AI capabilities while complying with your security and data-sharing policies and staying within your budget.

The implemented Retrieval-Augmented Generation (RAG) approach allows the testomat.io AI agent to combine real project data with generic industry knowledge and deliver accurate, context-specific responses and actions, helping you create, manage and understand tests faster than ever before.
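
As a rough illustration of the retrieval step in a RAG approach, the Python sketch below ranks project snippets by word overlap with the question and puts the best matches in front of the prompt. It is not testomat.io's implementation: the function names (`retrieve`, `build_prompt`), the bag-of-words cosine scoring, and the prompt layout are all assumptions made for this example, and real systems typically use embedding-based search instead.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k documents most similar to the question."""
    q_vec = Counter(tokenize(question))
    ranked = sorted(
        documents,
        key=lambda doc: cosine(q_vec, Counter(tokenize(doc))),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question: str, documents: list[str], top_k: int = 2) -> str:
    """Combine retrieved project context with the user's question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, top_k))
    return f"Project context:\n{context}\n\nQuestion: {question}"
```

The resulting prompt would then be sent to whichever AI provider is configured, so the model answers with the project's own data in view rather than from general knowledge alone.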

How does the AI agent assistant work?

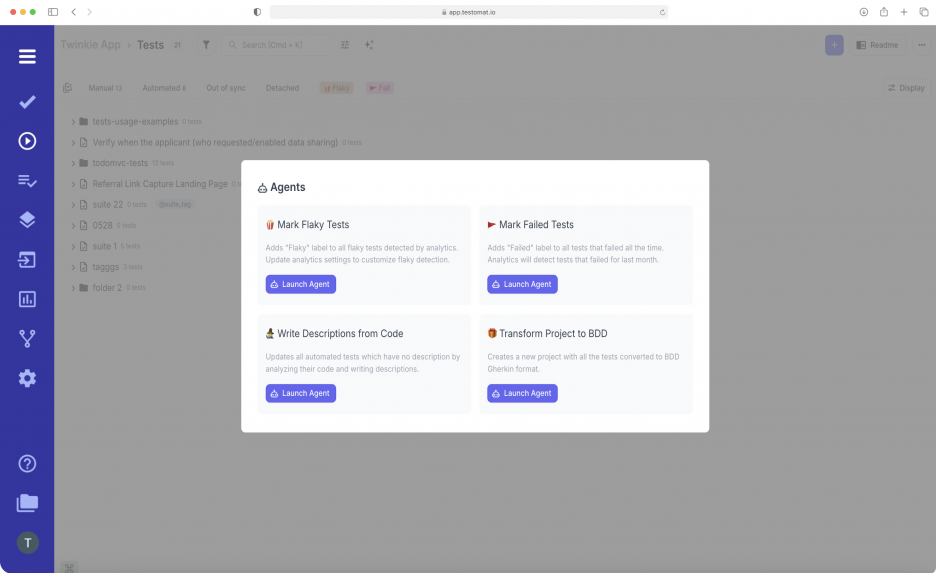

The AI agent acts as your ever-present testing copilot, guiding you through a UI dialogue window. You can use it whenever you wish. To get started, simply click the AI star button, which appears in different parts of the project: for instance, at the level of test plans, test suites and folders, as well as in test result reports. Our team is constantly working to expand the AI agent's presence across your project.

Currently, the AI Testing Agent supports these major tasks:

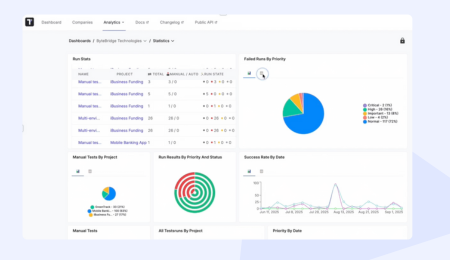

- Mark Flaky test Assistant identifies and labels unstable (flaky) tests within your project, making them easy to spot. Note that flaky test detection is also available on the Analytics dashboard. There, you can control how flaky tests are calculated by setting the minimum and maximum pass rate in Analytics Settings to determine what counts as flaky.

- Mark Failed test Assistant — intelligently flags and labels tests that have failed in recent runs, helping QA teams quickly identify, prioritize, and triage issues in large or complex test suites.

- Run Statistics Analysis gives a general overview of the completed test run, helping stakeholders get acquainted with the test results at a glance.

- Write descriptions from code — automatically generates descriptions for automated tests that currently lack them. The AI agent analyzes each test’s code and creates meaningful descriptions to improve readability and documentation across your project. This significantly reduces the manual effort needed to document existing tests and helps teams quickly understand test coverage without digging into implementation details.

- Deep Analyze Agent is an advanced AI tool designed to give you a comprehensive overview of your project’s testing health. Instead of you manually compiling reports from multiple runs, the Agent does it automatically. It scans tests, assesses run statuses and test coverage, and provides insights into stability and release readiness. The Deep Analyze Agent works at different project levels, for instance, Analyze Suites. You can also set the data gathering period in the settings.

- Transform Project to BDD AI agent converts traditional tests into BDD format using Gherkin syntax without manual rewriting, making BDD adoption seamless and efficient. The logic is quite simple: the title becomes the Scenario, the preconditions become Given steps, and the expected results become Then steps.
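
The title, preconditions and expected results mapping described for the BDD transformation can be sketched in Python as follows. This is only an illustration under stated assumptions, not the agent's actual code: the `ClassicTest` structure and the `to_gherkin` function are hypothetical, and chaining additional items with `And` is an assumption the text above does not confirm.

```python
from dataclasses import dataclass, field


@dataclass
class ClassicTest:
    """Hypothetical shape of a traditional (non-BDD) test case."""
    title: str
    preconditions: list[str] = field(default_factory=list)
    expected_results: list[str] = field(default_factory=list)


def _steps(keyword: str, items: list[str]) -> list[str]:
    """First item gets the keyword; later items are chained with 'And' (assumption)."""
    return [
        f"    {keyword if index == 0 else 'And'} {item}"
        for index, item in enumerate(items)
    ]


def to_gherkin(test: ClassicTest) -> str:
    """Map title -> Scenario, preconditions -> Given, expected results -> Then."""
    lines = [f"  Scenario: {test.title}"]
    lines += _steps("Given", test.preconditions)
    lines += _steps("Then", test.expected_results)
    return "\n".join(lines)
```

For example, a test titled "User logs in" with one precondition and one expected result would come out as a `Scenario:` line followed by one `Given` step and one `Then` step.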

So, study the information that the smart AI assistant suggests in the window, or ask whatever you wish in natural language and receive an answer within a few seconds. You can accept the answer or, if it does not satisfy you, follow up with a new request.
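
To make the minimum and maximum pass rate setting mentioned for flaky test detection more concrete, here is a small Python sketch. It assumes a test counts as flaky when its pass rate across recent runs falls between the two thresholds, bounds included. The function names, the default values of 10% and 90%, and the inclusive comparison are illustrative assumptions, and the actual calculation in Analytics Settings may differ.

```python
def pass_rate(results: list[bool]) -> float:
    """Percentage of passed runs; results holds True for pass, False for fail."""
    if not results:
        raise ValueError("at least one run result is required")
    return 100.0 * sum(results) / len(results)


def is_flaky(
    results: list[bool],
    min_pass_rate: float = 10.0,  # assumed default, not a documented value
    max_pass_rate: float = 90.0,  # assumed default, not a documented value
) -> bool:
    """A test is treated as flaky if its pass rate lies within [min, max]."""
    if not results:
        return False  # no history, nothing to classify
    return min_pass_rate <= pass_rate(results) <= max_pass_rate
```

Under these assumptions, a test that always passes or always fails falls outside the band and is not marked flaky, while one that passes three runs out of four is.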