Test execution is the process of running the tests written by testers to verify whether the developed code, functions, or modules of an application meet the expected results defined by the client and business requirements. Test execution is one of the phases of the Software Testing Life Cycle (STLC), which in turn is an integral part of the Software Development Life Cycle (SDLC).

In the test execution process, QA testers usually write test scenarios and then execute a set of test cases and test scripts, or run automated tests. Errors found this way point to issues that need to be fixed in the code. If test execution succeeds, the application is ready for the deployment phase once the deployment environment has been properly set up. The test execution process is intricate yet important, and it requires meticulous attention to detail.

Importance of the test execution process:

- The project runs efficiently: by performing the test execution we can ensure that the project runs smoothly and efficiently.

- Application competency: test execution plays an important role in the demonstration of the application’s competency in the market.

- Requirements are correctly collected: It makes sure that the requirements are collected correctly in project documentation and incorporated correctly in design and code architecture.

- Application implemented correctly: Test execution checks whether the software application is built according to the pre-defined requirements and works properly.

Reducing test execution time is one of the primary goals of modern Agile teams when maintaining test scripts. We take our customers’ wishes into account, which is why we have included the Execute test cases feature in our TMS, with extensive capabilities for launching manual and automated tests. Test execution through intelligent test management tools saves time and increases testing efficiency and software quality.

How to work with the test execution feature?

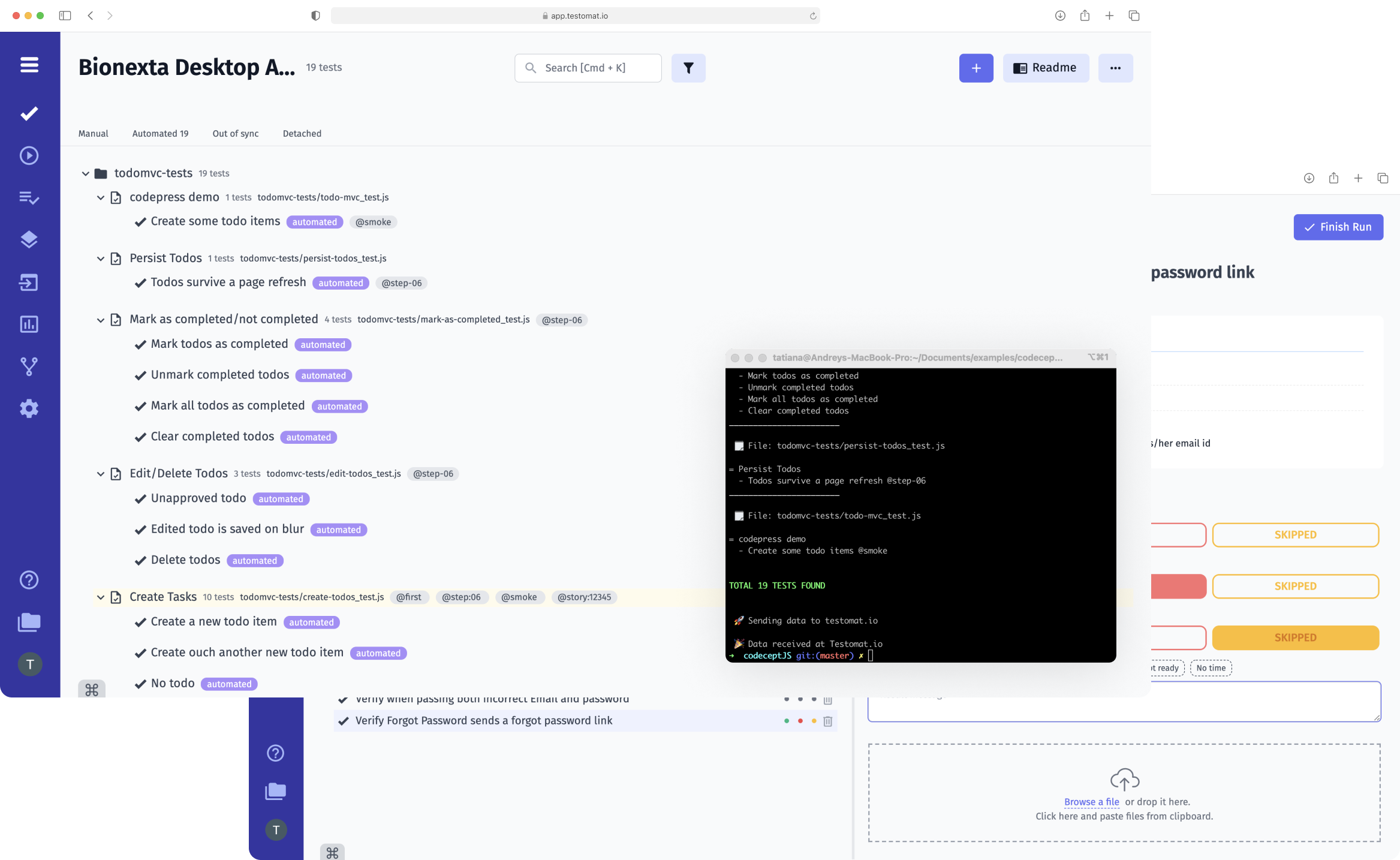

When you open any project in testomat.io test management, you will see many folders with tests that need to be passed. The tests can be manual or automated. A tester runs manual tests by hand and, upon completion, assigns a Passed/Failed/Skipped status and leaves a comment. Automated tests, if they are created in any test automation framework, are run from the terminal using Advanced Reporter.

Let’s look at the Execute test cases function using the example of a Mixed Run, a test execution phase that contains both types of tests. In this case, the steps are as follows:

- Go to the Runs page.

- Click the menu button in the upper right corner and select Mixed Run.

- Run all tests or a separate Test Plan (as implementation of your test strategy).

- Select the desired CI service (e.g., Azure) and test environment (browser and OS) from the drop-down lists.

- Assign a name to the test run.

- Click Launch.

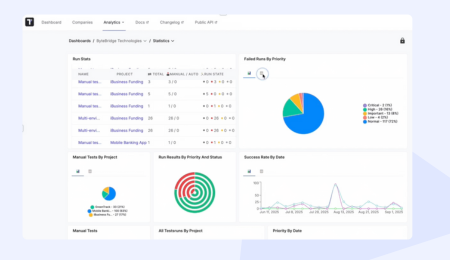

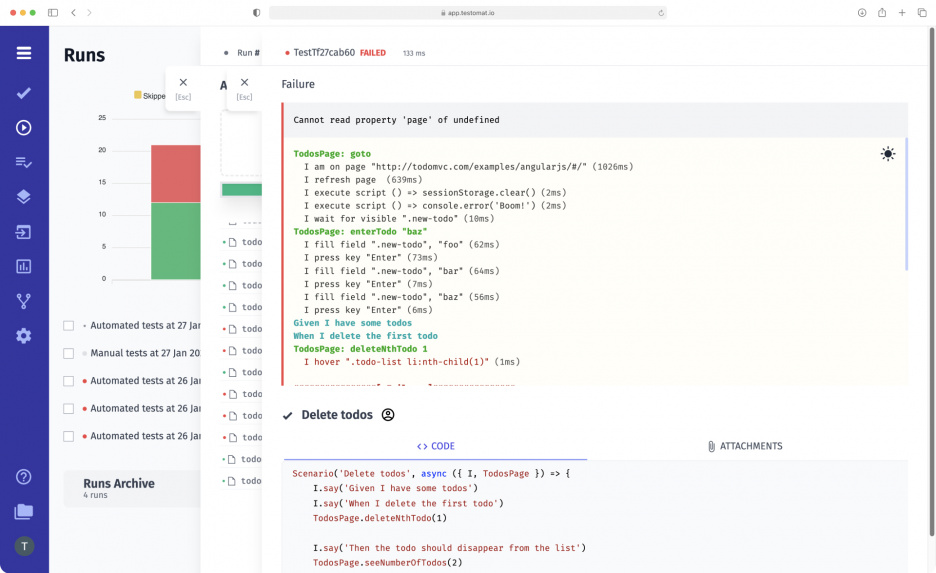

After executing the test cases, you can validate the overall results as well as individual test cases in the report. This is the closure stage of test execution. Check whether the executed test cases achieved the expected results, review test artifacts, measure test performance with Analytics, and mark each test result as Passed, Failed, or Skipped, or add your own notes when the actual result is inconclusive. If any test case failed or did not satisfy its conditions, report the defect to the development team so they can review the code; you can create issues on the fly in a bug tracking tool such as Jira or Linear. You can see all tests with defects there or in the Analytics dashboard, which also makes it easy to pick out Not Run, In progress, Partially executed, Never run, Flaky, and Slowest tests.

🔴 Please note: If there are only manual tests in the test plan, the system will immediately turn the Test Run into a manual one, which means users will see the tests that need to be passed in Manual Test Execution mode.

Additional features when using the Execute test cases function

After testing has started, you will land on the page where testing is actually performed. It offers a range of convenient functions. You can:

- Edit test plans before starting a test or even while running a test.

- Filter tests in the logical group by their status, @tags, labels, environments, etc.

- Change the presentation of tests. They can be shown as a flat list or as a tree nested in suites.

- Enable multi-select mode and select multiple tests.

- Assign a status to several tests at once.

When you execute test cases, our test management system offers the following:

- Test parametrization – lets you define variables for test data. During execution, each parameter value creates a separate test, and these tests are executed in parallel.

- Progress bar – visually shows the current ratio of tests in different statuses. This is very convenient for managers. For example, when launching large-scale testing before a release, managers need to understand how many tests have passed, failed, or been skipped and how many have not been run yet. Just click on the bar, and the tests that need attention first will be filtered. This is an indispensable feature on projects with a lot of tests.

- Priority filtering – this feature is very important because almost all projects use test prioritization. Tests are assigned a level of importance: Low, Normal, or High. Again, this is convenient for managers, who can see what status the High priority tests are in. If any of them fail, the release is delayed until the defect is fixed. This makes it possible to make predictions and plan the team’s work.

- Toggle description – the Execute test cases function allows you to run tests in Checklist mode, i.e., without displaying descriptions. If you need to see them, just click the Toggle description button, and you will immediately see the description and test steps.

- Comment field – under the buttons for assigning a status to tests, there is a comment field. The system provides templates of frequently used comments, so the user does not need to type them manually every time. In addition, you can add your own templates. This saves a lot of time.

- Modifying execution variables – to make changes to a test run, you can add test run participants or increase the number of tests during testing. This is convenient when you realize after testing has started that you need to test additional functionality. With this feature, you don’t need to create a new test run but can edit an existing one.

- Customizable Run Retention Period in Project Settings – allows fine-tuning of how long historical records are kept according to specific project requirements. By default, our system automatically purges runs older than 90 days on a daily basis. Conversely, you can manually archive test runs that you no longer need.
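
As a rough illustration of the progress bar and priority filtering ideas from the list above, the sketch below tallies the share of tests in each status and checks whether any High priority test has failed. The `ResultRecord` class, the status and priority strings, and both helper functions are hypothetical names chosen for this example; they are not part of the Testomat.io API.

```python
from collections import Counter
from dataclasses import dataclass


# Hypothetical record for one test in a run (an assumption for this sketch).
@dataclass
class ResultRecord:
    title: str
    status: str    # "passed", "failed", "skipped" or "not_run"
    priority: str  # "low", "normal" or "high"


def status_ratios(results):
    """Return the share of tests in each status, as a progress bar would show."""
    total = len(results)
    if total == 0:
        return {}
    counts = Counter(r.status for r in results)
    return {status: count / total for status, count in counts.items()}


def release_blocked(results):
    """Hold the release back if any High priority test has failed."""
    return any(r.priority == "high" and r.status == "failed" for r in results)


run = [
    ResultRecord("Login with valid user", "passed", "high"),
    ResultRecord("Checkout with saved card", "failed", "high"),
    ResultRecord("Change avatar", "skipped", "low"),
    ResultRecord("Export invoice", "not_run", "normal"),
]

print(status_ratios(run))    # each of the four statuses accounts for 0.25
print(release_blocked(run))  # True: a High priority test failed
```

Keeping the ratio calculation separate from the release check mirrors the two questions a manager typically asks: how far along the run is, and whether anything critical is failing.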

At the end of the test run, the user needs to click the Finish button, after which all data will be saved, and a test execution status Report will be generated. Thus, the Execute test cases feature allows you to efficiently manage test cases, run manual and automated tests simultaneously, and track their results. This saves time for the QA team and allows you to release a high-quality product to the market faster.

Ways to Perform Test Execution

The testing team can choose from the following methods to carry out test execution:

- Run test cases – the simplest approach to running test cases; it can be combined with other artifacts such as test plans, test suites, test environments, etc.

- Run BDD test – our users can execute Behavior-Driven Development (BDD) tests within the TCMS. This feature facilitates the automation of tests written in a BDD format and helps bring the BDD approach into the testing workflow.

- Run checklist or test suite – allows you to create checklists of test tasks, i.e., to document your actions without describing them in detail. When you start a test run, you can choose in which mode to do it. If you set the switch to Checklist mode, all test case descriptions will be hidden. This is very useful in situations where testers constantly run the same tests (for example, smoke testing) and do not want to be distracted by a screen with descriptions that are familiar to them.

- Run Groups – help you organize test runs by context depending on your needs. Runs can be grouped by sprint, release, or functional decomposition, or you can define your own way to structure test results.

- Sequential or parallel test execution – test case collections can be executed sequentially or in parallel. Sequential execution is useful when a later test case depends on the success of an earlier one.

- Multi-environment Run – shows on which platform or operating system the test cases have been executed, e.g., Windows, macOS, a mobile OS, etc.

- Test execution by step – if the test has prescribed steps, not just a description, you can assign a status to each step. These statuses are saved until the end of the test run, and the manager can see exactly where the error occurred. As a result, it becomes clear which piece of functionality needs a defect to be opened and fixed.

- CI/CD execution – allows you to run automated tests, manual tests, or both types of tests simultaneously in Mixed Run mode. When working with automated tests, the user can go to the CI service in use and see what stage the test is at. In this case, all the statuses of automated tests will be displayed in Testomat.io.

- Rerun automated tests manually – sometimes, to check particularly important test scripts or when the results of an automated test are unreliable, you may need to run automated tests manually. To do this, just select the test you want and click Relaunch manually.

- Mixed Run – allows merging both manual and automated tests into the same run and consolidating all results in one Run Report to see the full picture of your testing. If your team uses both automated and manual tests in its quality assurance flow, this feature helps you optimize the testing process considerably.

- Rerun failed tests – in addition to relaunching individual automated tests, you can also rerun only the tests that failed. To do this, select the desired tests and check the Relaunch Failed on CI checkbox. The system will re-run the failed tests and display their results.

- Run tests from Jira (User Story Execution) – a special testing environment to simplify the collaboration process. Our Jira plugin includes built-in test execution capabilities, enabling non-technical individuals such as Business Analysts (BAs), Project Managers (PMs), or Quality Assurance (QA) professionals to execute test cases.

- Fast-Forward Test Execution – once a tester adds the result of a test case within a test run, the system automatically moves to the next test case. This eliminates the need for manual navigation and reduces the time spent on repetitive actions, allowing testers to keep a steady testing pace.

- Re-testing (Regression testing runs) – as the name suggests, re-testing is the process of testing the modules or the entire product again to ensure that newly made changes to the code or newly developed modules or functions do not break the application.

- Generate test results without execution – generating test results from non-executed test cases helps to track test coverage.
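
The difference between sequential and parallel execution mentioned in the list above can be sketched as follows. The test functions and the `run_sequential` and `run_parallel` helpers are made up for illustration and do not reflect how Testomat.io schedules tests. The sequential runner assumes each test depends on the previous one and skips the rest after a failure, while the parallel runner assumes the tests are independent.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical test steps: each returns True on success and False on failure.
def create_account():
    return True


def log_in():
    return False  # simulated failure


def place_order():
    return True


def run_sequential(tests):
    """Run tests in order; once one fails, mark the remaining ones as skipped."""
    results = {}
    blocked = False
    for test in tests:
        if blocked:
            results[test.__name__] = "skipped"
            continue
        passed = test()
        results[test.__name__] = "passed" if passed else "failed"
        blocked = not passed
    return results


def run_parallel(tests, workers=3):
    """Run independent tests concurrently and collect every result."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        outcomes = list(pool.map(lambda t: t(), tests))
    return {t.__name__: "passed" if ok else "failed" for t, ok in zip(tests, outcomes)}


tests = [create_account, log_in, place_order]
print(run_sequential(tests))  # place_order is skipped because log_in failed
print(run_parallel(tests))    # all three tests run regardless of each other
```

Parallel execution finishes sooner when tests are independent, but it cannot express "do not bother with the order flow if login is broken", which is the case sequential execution handles.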

Other test management features that make using Execute test cases even more efficient:

- Jira integration – the Jira Plugin allows you to set up two-way integration of the TMS with the bug tracking system. Thanks to this function, you can run test scripts directly from Jira. Moreover, even non-technical experts can do this.

- Report on current status – you can see the progress of the test run by grouping the tests by suite or viewing them as a list, as well as by filtering them by status.

- Manual Completion of Automated Test Runs

- Analytics – the Test Runs page displays key analytical metrics, including the number of tests in each test run, test execution progress as a bar, Passed/Failed/Skipped test ratios, etc.

- Artifacts – test artifacts include video recordings, screenshots, data reports, etc. They are very helpful because they document errors and the results of past test executions, and they provide information about what needs to be done in future test executions.

- Stack traces – a stack trace is a report of the chain of function calls that were active when an error occurred; it shows where in the code the failure happened.

- Code view for automated tests – displays the code fragment where an error was detected after an unsuccessful run, which is helpful when debugging automated tests.

- Reset Test Result During Test Execution – a convenient way to make on-the-fly adjustments during test execution, ensuring that the test status accurately reflects the current testing conditions and that the integrity of the test run is preserved.

- Track Changes in Ongoing Test Runs – this view displays newly added, modified, or re-assigned tests, so testers do not miss important information, avoid confusion, and keep test execution aligned with the latest project changes.

- Copy test runs – enables users to duplicate existing test runs within our software platform. Replicating test scenarios this way allows quick iteration of testing efforts and saves time.

- Compare test run – thanks to this feature the QA team can see the test results for different test runs side-by-side.

- Run History – run and track test execution, without limiting how these runs are archived within releases.

- Defect mapping – allows users to systematically track and manage defects identified during test execution. It supports defect management, prioritization, and resolution by providing context on where and how each defect was discovered during the test execution process.
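
To make the idea behind comparing test runs more concrete, here is a minimal sketch that lists the tests whose status differs between two runs. It assumes each run is a plain dictionary mapping a test title to its status; the `compare_runs` function and the sample data are hypothetical and only illustrate the concept, not the way the feature is built in Testomat.io.

```python
def compare_runs(previous, current):
    """Return tests whose status differs between two runs.

    Each run is a dict mapping test title to status. A test that is
    missing from one of the runs is reported with the status "absent".
    """
    changes = {}
    for title in sorted(set(previous) | set(current)):
        before = previous.get(title, "absent")
        after = current.get(title, "absent")
        if before != after:
            changes[title] = (before, after)
    return changes


# Sample data invented for this example.
run_monday = {"Login": "passed", "Checkout": "failed", "Search": "passed"}
run_tuesday = {"Login": "passed", "Checkout": "passed", "Profile": "skipped"}

for title, (before, after) in compare_runs(run_monday, run_tuesday).items():
    print(f"{title}: {before} -> {after}")
```

Tests with an unchanged status are left out, so the output focuses on what actually moved between the two runs, including tests that were added or removed.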