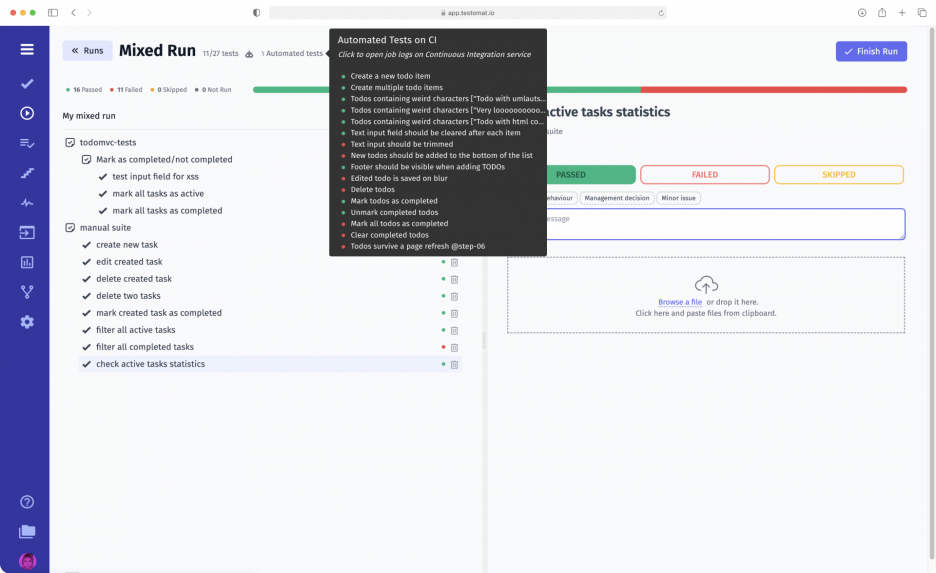

Mixed Run lets you launch manual and automated tests simultaneously, with flexible configuration, status tracking, real-time analytics, and comprehensive reports on one screen for evaluating the results.

When and how to combine manual and automated testing?

Software development lifecycles can last a year or more, and the number of test cases can grow into the thousands. In this case, routine work can take weeks or even months in total, which delays releases and adds cost. How can you accelerate this process? We have a solution for you.

Mixed Run by testomat.io is designed to streamline simultaneous manual and automated testing by combining and storing all test results in one place. Forget about “manual testing vs. automated testing” issues and use them together!

How to automate manual test cases with testomat.io

Mixed Run is a testomat.io feature available to every test team, regardless of project size. Its main advantage is the ability to build and run a test plan that uses both manual and automated tests in the correct order. If you still think that some test cases cannot be automated and that this makes things difficult for the manual testing team, Mixed Run is for you!

What does Mixed Run open up for you, and why is manual testing important in parallel with automated testing?

- Flexibility of setting up a test plan: tests can be selected or fixed to run with effective filters in just a few clicks.

- Parallelism of manual and automated software testing: no specialist is needed to perform routine actions to run tests in the system, and manual tests can be executed while automated tasks run on CI.

- Concise display of the test plan: for the convenience of testers, tests from the plan are displayed in the format of a checklist only by name and tags, without visualization of scripts and attachments.

- Tracking the run history of a combination of tests: view regression results with information on the outcome of the last 5 runs.
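To make the idea of a per-test run history concrete, here is a minimal sketch in Python. It is not testomat.io's implementation or API: the `RunHistory` class, its methods, and the test title are hypothetical names used only for illustration. It assumes each result is a plain status string and keeps only the five most recent results per test.

```python
from collections import defaultdict, deque

HISTORY_SIZE = 5  # the last 5 runs, as described in the text


class RunHistory:
    """Keeps the most recent results per test (hypothetical helper)."""

    def __init__(self, size=HISTORY_SIZE):
        # A bounded deque silently drops the oldest result when full.
        self._results = defaultdict(lambda: deque(maxlen=size))

    def record(self, test_title, status):
        """Store the status of one run for the given test."""
        self._results[test_title].append(status)

    def last_runs(self, test_title):
        """Return the stored statuses, oldest first."""
        return list(self._results[test_title])

    def success_rate(self, test_title):
        """Share of stored runs that passed, or None if nothing is stored."""
        runs = self._results[test_title]
        if not runs:
            return None
        return sum(1 for status in runs if status == "passed") / len(runs)


history = RunHistory()
for status in ["passed", "failed", "passed", "passed", "failed", "passed"]:
    history.record("Checkout with saved card", status)

print(history.last_runs("Checkout with saved card"))
print(history.success_rate("Checkout with saved card"))
```

Six results are recorded here, so the oldest one falls out of the window and the success rate is computed over the remaining five.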

How Mixed Run works, and does manual testing require coding in testomat.io?

- Create automated tests or test trees. To do this, enter a title and description, and add a code template and attachments if required.

- Add manual tests or test trees. To do this, follow the same steps as for automated tests.

- Create a new combined test plan. To do this, go to the plans section and, when adding tests, mark the ones you need in the checklist, both manual and automated. Give the test plan a description, and attach queries and tags.

- Start a Mixed Run. To do this, go to the launch section and select the appropriate tab in the upper right. In the window, check the runtime, enter the name for the manual tests, and select the integration service for automated tests. Select one of the previously created test plans or add a new scenario.

- Track progress in real-time. View test status data from the tree on an interactive heat map, with the ability to search results for each individual test name or tag.

- Handle problems directly in the heat map. Add notes to each test, submit it as a new issue to Jira, or link it to existing issues.

- View and share the full report with the team. Discuss tasks and development process by viewing failed, passed, and skipped tests on one screen.
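The steps above end with one report that covers manual and automated tests together. The following Python sketch shows how such a combined summary could be computed. It assumes a simplified data model: `MixedRunResult`, `summarize`, and the sample test titles are hypothetical and do not reflect testomat.io's actual data format or API.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class MixedRunResult:
    """One test outcome in a combined run (hypothetical structure)."""
    title: str
    kind: str    # "manual" or "automated"
    status: str  # "passed", "failed", or "skipped"


def summarize(results):
    """Count statuses overall and separately for each kind of test."""
    overall = Counter(result.status for result in results)
    by_kind = {}
    for result in results:
        by_kind.setdefault(result.kind, Counter())[result.status] += 1
    return overall, by_kind


results = [
    MixedRunResult("Login with valid credentials", "automated", "passed"),
    MixedRunResult("Checkout with saved card", "automated", "failed"),
    MixedRunResult("Visual check of invoice layout", "manual", "passed"),
    MixedRunResult("Accessibility review of signup form", "manual", "skipped"),
]

overall, by_kind = summarize(results)
print(dict(overall))
for kind, counts in by_kind.items():
    print(kind, dict(counts))
```

Keeping the manual/automated distinction as a field on each result, rather than in two separate lists, is what lets a single pass produce both the overall totals and the per-kind breakdown.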

Mixed Run in Jira integration

Set up the Jira Plugin and run the testing process in parallel for manual and CI tasks right from your project management system. Create, edit, and run different types of tests in manual and automatic modes. Attach tests to an issue, create bundles, and mark test trees, all in one place for the convenience of the whole team.

We built bi-directional communication and minimized the interface by hiding scripts and test descriptions. Thanks to this, you can manage the testing process directly from Jira in just a couple of clicks, without switching between tools. You can also view the results in the system in real time and post a full report on test plan execution.

Let’s look briefly at the test management features that work alongside Mixed Run:

- Tags for manual and automated tests – a project can have hundreds or thousands of test cases and issues, so it is essential to build the right test tree and test plans for each task. We made test plan construction several times simpler by letting you search for the right tests by tag. When creating a test, it is enough to write a tag in the title using “@”. The test will be highlighted in the query group, so you can combine several manual and automated tests under one tag. Later, through search, you can easily find the ones you need and quickly mark them as mandatory in the plan.

- Real-time report – executing a combination of tests often takes several hours or even days, but your development team expects initial results as soon as possible. By viewing the Real-time report, you can get results during test execution: how many tests have been run, and how many of them passed, failed, or were skipped. This gives you a general picture of the gaps in the product.

- Heat map report – data visualization plays a critical role in the perception of information. In the public report, we paid special attention to the charts so that you can understand at a glance how the test plan went. With the Heat map, any member of your software testing team can immediately assess how many tests were run and how many passed or failed. Each block of tests is expandable and easy to understand, since we have hidden the scripts and attachments but left the main thing. The tester can share the report in Jira, via mail, or in Microsoft Teams. This report format also makes it easy to visually compare the results of the same type of test coverage launched at different times, without in-depth detailing.

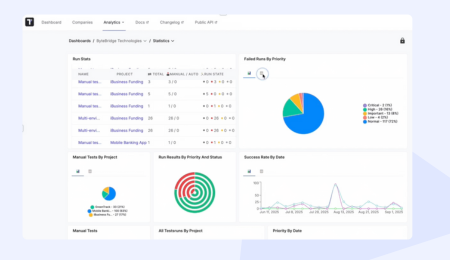

- In-depth analytics – our tool allows even junior-level specialists to work with testing. To support this, the report section displays analytics for each Mixed Run. In this block, you can see which tests are flaky and which are the slowest. This helps you improve scripts or plans in the future without wasting time on weak points in queries.
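The tagging convention from the list above, where a tag is written in the test title using “@”, can be illustrated with a short Python sketch. This is not how testomat.io parses titles internally: the `extract_tags` and `select_by_tag` helpers and the sample titles are hypothetical, and the sketch assumes a tag consists of letters, digits, underscores, or hyphens.

```python
import re

# Assumption: a tag starts with "@" that does not follow a word character,
# so an email address such as user@example.com is not treated as a tag.
TAG_PATTERN = re.compile(r"(?<!\w)@([\w-]+)")


def extract_tags(title):
    """Return the set of tags written in a test title."""
    return set(TAG_PATTERN.findall(title))


def select_by_tag(titles, tag):
    """Return titles carrying the given tag, in their original order."""
    return [title for title in titles if tag in extract_tags(title)]


titles = [
    "Login with valid credentials @smoke @auth",  # e.g. an automated test
    "Reset password by email @auth",              # e.g. a manual test
    "Export report to PDF @regression",
]

auth_tests = select_by_tag(titles, "auth")
smoke_tests = select_by_tag(titles, "smoke")
print(auth_tests)
print(smoke_tests)
```

Because the tag lives in the title itself, the same filter applies to manual and automated tests alike, which is what makes it possible to collect both kinds into one plan with a single search.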