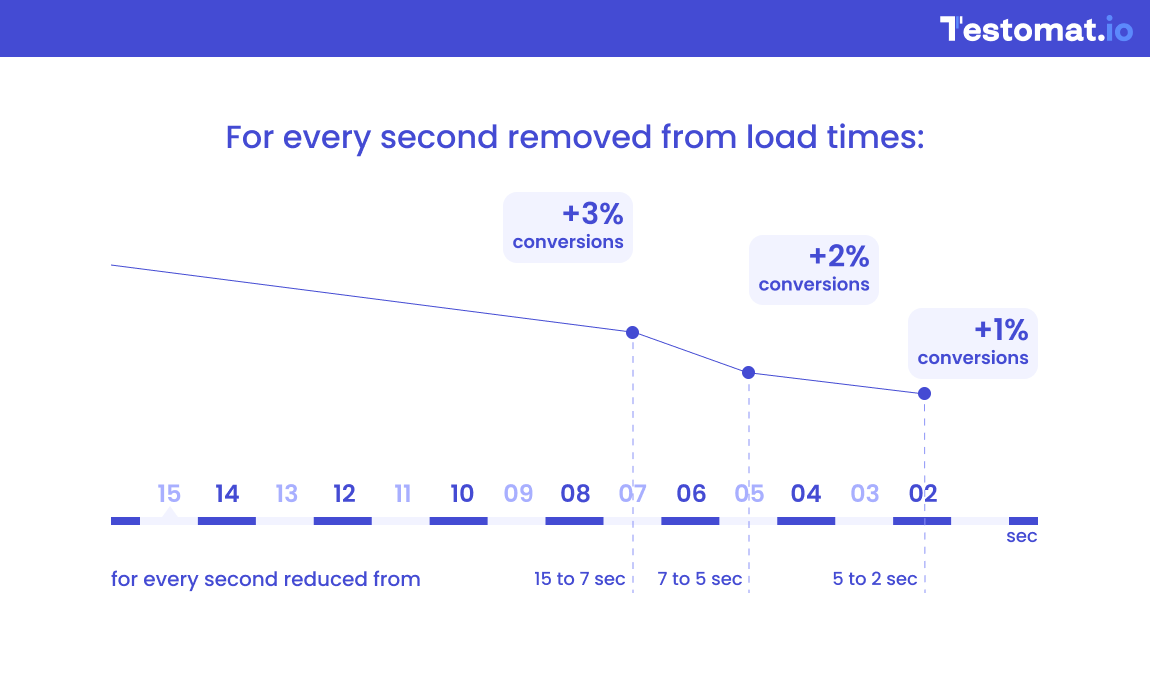

Every software user knows those annoying moments of waiting ages for a site to load or an app to launch, or of a site that suddenly freezes and ignores clicks and taps. If the response time is too long, you are likely to close it and try another solution.

However, if you own an internet-driven business, a system that responds poorly to users’ commands is more than an awkward nuisance. It can cost you a pretty penny: 53% of people abandon a site that takes over three seconds to load. If you launch an e-commerce site, make sure it can handle a large number of users during special occasions and holidays.

The most reliable recipe for eliminating performance issues and preventing performance degradation is thorough, out-and-out performance testing.

This article explains the essence of performance testing, outlines its use cases, explores various types of performance testing with examples, helps you choose among them, suggests a list of test automation tools, provides a roadmap for conducting performance tests, and highlights common mistakes greenhorns make during the procedure.

Performance Testing in a Nutshell

Performance testing is a key non-functional software testing method that evaluates a solution’s responsiveness, stability, scalability, and speed under various network conditions, data volumes, and user loads.

When properly planned and implemented, performance tests allow software creators and owners to:

- Expose performance bottlenecks

- Identify potential issues and points of failure

- Minimize downtime risks

- Ensure latency and load time benchmarks are met

- Optimize system performance under heavy user traffic

- Improve user experience

- Enhance the solution’s scalability

- Evaluate how well the system recovers from failures

- Validate the reliability, stability, and security of the software in different scenarios (like peak traffic, a DDoS attack, or sustained usage) and across different environments
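Goals such as meeting latency benchmarks only become measurable once raw results are summarized. Below is a minimal sketch. It assumes each request was recorded as a (latency in ms, success flag) pair during a run of known duration. The function names and the nearest-rank percentile method are illustrative choices, not the output format of any particular tool.

```python
import math
from typing import Iterable


def percentile(sorted_values: list[float], pct: float) -> float:
    """Nearest-rank percentile of an already sorted, non-empty list."""
    if not sorted_values:
        raise ValueError("no samples")
    # Smallest value such that at least pct% of samples are at or below it.
    rank = max(1, math.ceil(pct / 100 * len(sorted_values)))
    return sorted_values[rank - 1]


def summarize(samples: Iterable[tuple[float, bool]], duration_s: float) -> dict[str, float]:
    """Summarize (latency_ms, succeeded) samples collected over duration_s seconds."""
    samples = list(samples)
    if not samples:
        raise ValueError("no samples")
    latencies = sorted(latency for latency, _ in samples)
    failures = sum(1 for _, ok in samples if not ok)
    return {
        "requests": len(samples),
        "throughput_rps": len(samples) / duration_s,
        "error_rate": failures / len(samples),
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "max_ms": latencies[-1],
    }


report = summarize([(120, True), (95, True), (480, False), (130, True)], duration_s=2.0)
print(report)
```

With only four samples, the p95 value lands on the slowest request (480 ms). This is why percentile-based benchmarks need large sample counts to be meaningful.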

Performance testing is often treated as a QA routine at the end of the software development process. Yet certain events during and after the SDLC make performance testing vital.

When Should You Conduct Performance Testing?

Which performance testing types you need depends on the solution you are building. For example, if you are crafting a gaming app, you should run various types of performance tests covering data, databases, servers, and networks. You should also verify that the app works well on devices with different screen sizes, renders visuals properly, and seamlessly handles multiplayer interactions between concurrent users.

Typically, performance tests are conducted continuously if you follow the Agile methodology, and at least once if you use the waterfall approach to the SDLC. In addition, performance testing is advisable:

- at early development stages to identify possible issues

- after adding new features

- after significant updates

- prior to major releases

- before the anticipated traffic spikes or user quantity expansion

- regularly in environments identical to the production environment

Best practice is to run performance checks in automated mode. Which types of tests are used in automated performance testing?

Dissecting the Types of Performance Testing in Software Testing

Each type of performance testing is honed to check a different aspect of the solution’s functioning. Here is a roster of the types of performance testing with examples.

1. Load Testing

It assesses the system’s ability to handle the expected amount of traffic or user interactions without slowing down or, God forbid, crashing. Load tests give you scalability insights, minimize the solution’s downtime, and mitigate data loss risks, consequently saving time and money.

Example: Simulate 10,000 virtual users browsing your e-store and simultaneously adding goods to carts.
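A real run with 10,000 virtual users calls for a dedicated tool. The core idea still fits in a few lines: many concurrent virtual users repeatedly execute a scenario while each iteration's latency is recorded. This sketch uses Python's standard thread pool. `browse_and_add_to_cart` is a hypothetical stand-in for the real HTTP calls to the e-store, and the user and iteration counts are deliberately tiny.

```python
import random
import time
from concurrent.futures import ThreadPoolExecutor


def browse_and_add_to_cart() -> bool:
    """Hypothetical scenario stub: replace with real requests to the e-store."""
    time.sleep(random.uniform(0.001, 0.005))  # simulated server latency
    return True


def virtual_user(iterations: int) -> list[tuple[float, bool]]:
    """One virtual user running the scenario repeatedly, timing each iteration."""
    results = []
    for _ in range(iterations):
        start = time.perf_counter()
        try:
            ok = browse_and_add_to_cart()
        except Exception:
            ok = False  # an exception counts as a failed request
        results.append(((time.perf_counter() - start) * 1000, ok))
    return results


def run_load_test(users: int, iterations: int) -> list[tuple[float, bool]]:
    """Run `users` concurrent virtual users and collect (latency_ms, ok) samples."""
    with ThreadPoolExecutor(max_workers=users) as pool:
        futures = [pool.submit(virtual_user, iterations) for _ in range(users)]
        return [sample for f in futures for sample in f.result()]


samples = run_load_test(users=20, iterations=5)
print(len(samples), "samples collected")
```

Threads are adequate for a few dozen simulated users making I/O-bound calls. Dedicated tools such as those compared below use more efficient concurrency models to reach tens of thousands of virtual users.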

2. Stress Testing

Unlike load tests, which deal with expected loads during normal usage, a stress test aims to detect the system’s limits by subjecting it to extreme conditions. When properly performed, this type of performance test exposes the solution’s weak points, improves its reliability under stress, and helps ensure regulatory compliance.

Example: Apply a sudden surge of 20,000 shoppers at a flash sale event.
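Why push past the expected load at all? A simple queueing model shows how sharply latency degrades near capacity. In an M/M/1 queue (one server, random arrivals, random service times), the mean response time is 1 / (μ - λ), where μ is the service rate and λ is the arrival rate. Once λ reaches μ, the queue grows without bound. Real systems are not M/M/1 queues, so treat this sketch as intuition rather than a prediction. The 1,000 requests-per-second capacity is an assumption.

```python
import math


def mm1_response_time(arrival_rate: float, service_rate: float) -> float:
    """Mean time in system (queueing + service) for an M/M/1 queue, in seconds.

    Returns infinity when arrivals meet or exceed capacity: the queue is unstable.
    """
    if arrival_rate >= service_rate:
        return math.inf
    return 1.0 / (service_rate - arrival_rate)


# Assumed capacity: the server completes 1,000 requests per second.
CAPACITY_RPS = 1000.0

for load in (500, 800, 900, 950, 990, 1000, 1200):
    r_ms = mm1_response_time(load, CAPACITY_RPS) * 1000
    print(f"{load:>5} req/s -> {r_ms:8.1f} ms")
```

In this model, latency doubles between 90% and 95% utilization, and at 99% it is 50 times the half-load figure. Behavior this nonlinear is exactly what stress tests are meant to expose.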

3. Scalability Testing

This technique assesses the product’s capability to cope with a gradually increasing number of users and/or transactions. Scalability tests showcase the system’s growth potential, reduce OPEX, and improve user experience.

Example: Add virtual users incrementally to track how response times change and determine the server’s load capacity.
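One way to read the results of an incremental run is scaling efficiency: the throughput gain divided by the user-count gain. An efficiency near 1.0 means throughput grows in step with users. A falling value signals that some resource is saturating. The measurements and the 0.8 threshold below are made-up illustrations, not recommended values.

```python
from typing import Optional

Step = tuple[int, float]  # (virtual users, throughput in requests/s)


def scaling_efficiency(baseline: Step, point: Step) -> float:
    """Throughput gain divided by user gain, relative to the baseline step."""
    base_users, base_tput = baseline
    users, tput = point
    return (tput / base_tput) / (users / base_users)


def first_saturated_step(steps: list[Step], threshold: float = 0.8) -> Optional[Step]:
    """First step whose efficiency vs. the first step falls below threshold, if any."""
    baseline = steps[0]
    for step in steps[1:]:
        if scaling_efficiency(baseline, step) < threshold:
            return step
    return None


# Hypothetical measurements from an incremental run.
measured = [(100, 480.0), (200, 950.0), (400, 1850.0), (800, 2600.0), (1600, 2700.0)]
print(first_saturated_step(measured))  # -> (800, 2600.0)
```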

4. Endurance Testing

Also called soak testing, this technique enables QA teams to detect memory leaks and performance degradation when the system operates over an extended period. Thanks to soak tests, you can reveal issues that may not manifest themselves during shorter load or stress testing procedures.

Example: Run a fintech app for a month under sustained usage to assess its long-term stability and basic performance metrics.
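During a soak test, a memory leak often shows up as a steady upward trend in memory usage rather than a sudden failure. A minimal way to flag it is to fit a least-squares line to periodic memory samples and check the slope. The hourly sampling interval, the sample values, and the 1 MB/hour tolerance are assumptions for illustration.

```python
def slope(xs: list[float], ys: list[float]) -> float:
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired samples")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var


def looks_like_leak(hours: list[float], memory_mb: list[float],
                    tolerance_mb_per_hour: float = 1.0) -> bool:
    """Flag a leak when memory grows faster than the tolerated rate."""
    return slope(hours, memory_mb) > tolerance_mb_per_hour


# Hypothetical hourly samples of the app's resident memory (MB).
hours = [0, 1, 2, 3, 4, 5, 6]
stable = [512, 515, 511, 514, 512, 513, 512]
leaking = [512, 530, 547, 566, 583, 601, 618]

print(looks_like_leak(hours, stable), looks_like_leak(hours, leaking))
```

A trend test like this ignores short-lived fluctuations such as garbage-collection sawtooth patterns. Over a month-long run, that robustness is what you want.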

5. Spike Testing

Both stress and spike tests simulate sudden increases in traffic, yet their aims differ. Spike tests reveal how the system handles, and especially recovers from, traffic surges caused by promotional events, viral social media campaigns, or product launches. They forestall system-wide failures, optimize resource utilization, and improve the solution’s uptime.

Example: Simulate a 5x or 10x traffic surge on Black Friday or Cyber Monday for an e-commerce platform.
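A spike test needs two things. The first is a load profile that jumps abruptly from baseline to a multiple of it. The second is a way to measure how long the system takes to recover once the surge passes. The sketch below covers both under stated assumptions: load is expressed as virtual users per one-minute interval, and "recovered" means the p95 latency is back within 20% of its pre-spike level. Both the tolerance and the latency series are hypothetical.

```python
from typing import Optional


def spike_profile(baseline: int, multiplier: int,
                  before: int, during: int, after: int) -> list[int]:
    """Virtual users per interval: steady baseline, an abrupt spike, then baseline again."""
    return [baseline] * before + [baseline * multiplier] * during + [baseline] * after


def recovery_intervals(p95_ms: list[float], spike_end: int, baseline_ms: float,
                       tolerance: float = 0.2) -> Optional[int]:
    """Intervals after the spike ends until p95 returns within tolerance of baseline.

    Returns None if latency never recovers within the observed window.
    """
    limit = baseline_ms * (1 + tolerance)
    for i in range(spike_end, len(p95_ms)):
        if p95_ms[i] <= limit:
            return i - spike_end
    return None


profile = spike_profile(baseline=1_000, multiplier=10, before=5, during=3, after=10)
# Hypothetical p95 latencies (ms) recorded for each interval of that profile.
observed = [200, 210, 205, 198, 202, 900, 1400, 1600,
            1100, 700, 380, 235, 215, 205, 200, 199, 201, 200]
print(recovery_intervals(observed, spike_end=8, baseline_ms=200))  # -> 3
```

This check stops at the first interval back under the limit. In practice you may want latency to stay there for several consecutive intervals before declaring recovery.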

6. Volume Testing

Unlike the other types of performance testing, this one is data-oriented, making it crucial for databases and data-driven software. It ensures the system remains functional and fast even when a large amount of data is ingested. Volume testing helps minimize the risk of data loss under heavy data loads and verify high data throughput.

Example: Check the system’s performance and query execution times when importing millions of records into its database.
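Volume tests often reveal that a query that is instant on a small dataset slows down as tables grow, typically because an index is missing. The sketch below uses Python's built-in `sqlite3` with an in-memory database as a stand-in for a production database. The `orders` table, the row count (scaled down from millions), and the query are hypothetical, and absolute timings will vary by machine.

```python
import random
import sqlite3
import time


def build_orders(conn: sqlite3.Connection, rows: int) -> None:
    """Create and bulk-load a hypothetical orders table."""
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
    rng = random.Random(42)  # fixed seed for repeatable data
    conn.executemany(
        "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
        ((rng.randrange(10_000), rng.uniform(5, 500)) for _ in range(rows)),
    )
    conn.commit()


def time_query(conn: sqlite3.Connection, customer_id: int) -> tuple[int, float]:
    """Return (matching rows, elapsed ms) for a per-customer lookup."""
    start = time.perf_counter()
    count = conn.execute(
        "SELECT COUNT(*) FROM orders WHERE customer_id = ?", (customer_id,)
    ).fetchone()[0]
    return count, (time.perf_counter() - start) * 1000


conn = sqlite3.connect(":memory:")
build_orders(conn, rows=200_000)
print("full scan:", time_query(conn, 1234))
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
print("indexed:  ", time_query(conn, 1234))
```

Running the same check at several data sizes shows whether query time grows roughly linearly (a scan) or stays nearly flat (an index lookup).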

7. Peak Testing

Its overarching goal is to identify the maximum load a system can withstand and understand what happens if this threshold is crossed. Peak tests help determine the solution’s maximum capacity and minimize the possibility of crashes.

Example: Monitor an e-store’s throughput and response time when a maximum number of virtual users simultaneously browse it, add items to the cart, and pay for the purchase.
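The interactive response time law from queueing theory links the numbers you monitor during a peak test. With N concurrent users, throughput X, and average think time Z between a user's requests, the average response time is R = N / X - Z. This gives a quick sanity check on a peak run. The figures below are hypothetical.

```python
def response_time_law(users: int, throughput_rps: float, think_time_s: float) -> float:
    """Average response time R = N / X - Z (interactive response time law), in seconds."""
    return users / throughput_rps - think_time_s


def expected_throughput(users: int, response_time_s: float, think_time_s: float) -> float:
    """Rearranged form X = N / (R + Z): the throughput N users can generate."""
    return users / (response_time_s + think_time_s)


# Hypothetical peak run: 5,000 virtual users, 5 s think time, 900 completed requests/s.
r = response_time_law(5_000, 900.0, 5.0)
print(f"implied average response time: {r:.2f} s")  # -> 0.56 s
```

If the response times your tool reports differ strongly from the value the law implies, the load generator itself may be the bottleneck.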

8. Resilience Testing

This technique assesses a system’s capability to withstand disruptions and resume normal functioning after they occur. It helps QA personnel identify single points of failure and proactively eliminate them, thus mitigating downtime risks and improving disaster recovery.

Example: For a fintech platform, simulate a shutdown of a database server during a money transfer or other transaction to see if users face service interruption, the data remains safe, and the system bounces back fast.
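Resilience tests often check that clients retry safely when a dependency fails. The toy sketch below injects a database outage into a hypothetical in-memory ledger and verifies two properties from the example above. First, the transfer eventually succeeds once the database comes back. Second, a resubmitted transfer is never applied twice, thanks to an idempotency key. All class and function names are invented for illustration; a real resilience test would inject faults into actual infrastructure.

```python
import time


class DatabaseUnavailable(Exception):
    """Raised by the fake ledger while it is 'down'."""


class FlakyLedger:
    """Hypothetical in-memory ledger that fails its first `outage_calls` writes."""

    def __init__(self, outage_calls: int):
        self.outage_calls = outage_calls
        self.balances = {"alice": 100, "bob": 0}
        self.applied_keys: set[str] = set()

    def transfer(self, key: str, src: str, dst: str, amount: int) -> None:
        if self.outage_calls > 0:
            self.outage_calls -= 1
            raise DatabaseUnavailable("simulated outage")
        if key in self.applied_keys:
            return  # idempotent: a repeated request is not applied twice
        self.balances[src] -= amount
        self.balances[dst] += amount
        self.applied_keys.add(key)


def transfer_with_retry(ledger: FlakyLedger, key: str, src: str, dst: str, amount: int,
                        attempts: int = 5, base_delay_s: float = 0.01) -> int:
    """Retry on outages with exponential backoff; return the attempt that succeeded."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            ledger.transfer(key, src, dst, amount)
            return attempt
        except DatabaseUnavailable:
            if attempt == attempts:
                raise  # give up: surface the outage to the caller
            time.sleep(base_delay_s * 2 ** (attempt - 1))  # 10 ms, 20 ms, 40 ms, ...
    raise RuntimeError("unreachable")


ledger = FlakyLedger(outage_calls=2)
used = transfer_with_retry(ledger, "tx-1", "alice", "bob", 30)
# A duplicate submission of the same transfer must not move money again.
transfer_with_retry(ledger, "tx-1", "alice", "bob", 30)
print(used, ledger.balances)  # -> 3 {'alice': 70, 'bob': 30}
```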

9. Breakpoint Testing

The method aims to identify the breaking point – a moment when the system fails. It allows you to determine the conditions under which the solution becomes unresponsive or unstable, thus enhancing capacity planning and preventing downtime in advance.

Example: Gradually increase the number of concurrent users on a video streaming platform to find the point at which streaming quality plummets or buffering becomes excessive.
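If health degrades monotonically as users are added, you can locate the breaking point by bisecting the user count, which takes far fewer runs than a linear sweep. In this sketch, `is_healthy` is a hypothetical callback: it would run a short test at a given concurrency and report whether buffering and quality stayed within limits. Here it is faked with an assumed threshold of 7,300 users.

```python
from typing import Callable


def find_breaking_point(is_healthy: Callable[[int], bool], low: int, high: int) -> int:
    """Largest user count in [low, high] for which is_healthy returns True.

    Assumes health is monotonic (healthy below some threshold, unhealthy above)
    and that the system is healthy at `low`.
    """
    if not is_healthy(low):
        raise ValueError("system is already unhealthy at the lower bound")
    while low < high:
        mid = (low + high + 1) // 2  # round up so the loop always makes progress
        if is_healthy(mid):
            low = mid
        else:
            high = mid - 1
    return low


calls = []


def fake_is_healthy(users: int) -> bool:
    """Stand-in for a real short test run; pretends quality collapses above 7,300 users."""
    calls.append(users)
    return users <= 7_300


print(find_breaking_point(fake_is_healthy, low=100, high=50_000), "runs:", len(calls))
```

Real measurements are noisy near the threshold, so each probe may need to be repeated before you trust its verdict.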

Now that you know which types of tests are used in automated performance testing, you may wonder how you can select the best technique.

Choosing the Proper Types of Performance Tests

Stick to this checklist when choosing which test types to apply.

- Identify your solution’s critical needs. Do you prioritize handling heavy loads, absorbing rapid traffic spikes, building for resilience, or dealing with surges in data volume?

- Analyze the user base. Do you expect it to grow significantly, or will it remain relatively stable?

- Assess system failure risks. Do you expect it to run continuously (like gaming apps or streaming platforms) or does its functioning involve a sequence of distinct steps?

- Consider different scenarios. What are the solution’s typical use cases, and what are their possible implications?

- Envisage continuous adjustment and monitoring. Performance tests aren’t one-time efforts. Treat them as elements of a comprehensive testing strategy that should be revisited whenever the solution undergoes a significant overhaul.

- Apply the right tools. Each tool serves a specific purpose, so choose one that aligns with your system’s vital parameters.
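The first checklist item can be condensed into a simple lookup from priorities to the test types described earlier. This is only a starting point that encodes this article's descriptions. The priority labels are our own illustrative names, and real test plans usually combine several types.

```python
# Illustrative priority labels mapped to the test types described above.
TEST_TYPES_BY_PRIORITY = {
    "heavy_load": ["load", "peak", "stress"],
    "traffic_spikes": ["spike", "stress"],
    "user_growth": ["scalability", "breakpoint"],
    "continuous_operation": ["endurance"],
    "large_data_volumes": ["volume"],
    "resilience": ["resilience"],
}


def recommend(priorities: list[str]) -> list[str]:
    """Return de-duplicated test types for the given priorities, in first-seen order."""
    unknown = [p for p in priorities if p not in TEST_TYPES_BY_PRIORITY]
    if unknown:
        raise KeyError(f"unknown priorities: {unknown}")
    seen: dict[str, None] = {}  # dicts preserve insertion order
    for p in priorities:
        for test_type in TEST_TYPES_BY_PRIORITY[p]:
            seen.setdefault(test_type, None)
    return list(seen)


print(recommend(["traffic_spikes", "user_growth"]))
# -> ['spike', 'stress', 'scalability', 'breakpoint']
```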

Performance Test Automation Tools: A Detailed Comparison

Let’s have a look at specialized software that streamlines and facilitates performance testing.

| Tool | Pricing | Pluses | Minuses |
| --- | --- | --- | --- |
| Apache JMeter | Free | Open-source, large user community, highly customizable | Limited GUI capabilities, steep learning curve |
| k6 | Free | Open-source, easy CI/CD pipeline integration, command-line execution | Limited reporting capabilities and few plugins |
| Gatling | Free | Open-source, easy CI/CD pipeline integration, high performance | Limited intuitiveness, code-based scripting (Scala, Java, or Kotlin) as a prerequisite |
| Locust | Free | Open-source, developer-friendly, flexible, resource-efficient | Limited built-in reporting capabilities, problematic handling of non-HTTP protocols |
| BlazeMeter | Both free and paid plans are available | Cloud-based, integrates with browser plugin recorder, flexible pricing | Limited customer support, inadequate customization, potential integration bottlenecks |
| Artillery | Free | Open-source, easy to use, flexible for testing backend apps, microservices, and APIs | Resource consumption limitations and questionable accuracy at high loads |
| NeoLoad | Commercial | Easy CI/CD pipeline integration, user-friendly interface, realistic load simulation | High cost, limitations in protocol support, potential complexity for advanced features |
| LoadRunner | Commercial | Robust reporting, comprehensive feature roster | High cost, considerable resource intensity, steep learning curve |
| LoadNinja | Two-week free trial, paid subscriptions after that | Cloud-based, easy to use, real-browser testing, no scripting required | High cost, limited customization, poorly suited to complex testing scenarios |

Today, AI-driven testing tools (like Testomat.io, Functionize, Mabl, or Dynatrace) are making a robust entry into the high-tech realm, enabling testing teams to significantly accelerate test generation and execution. However, humans remain ultimately responsible for planning and running every type of performance testing.

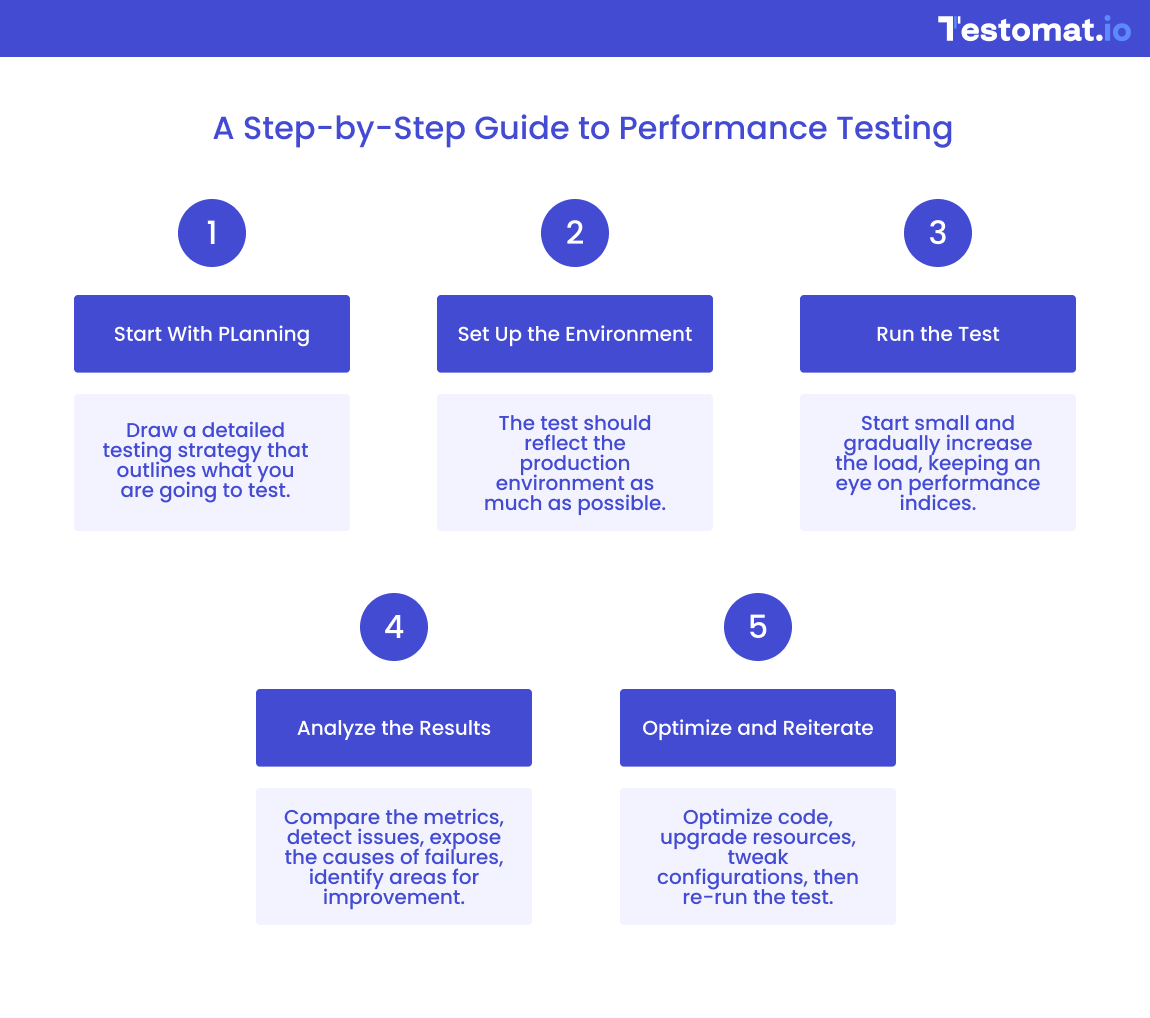

How to Conduct Performance Testing: A Step-by-Step Guide

Specializing in testing services, Optimum Solutions Sp. z o.o. recommends following this straightforward performance testing roadmap.

Step 1. Start With Planning

Draw up a detailed testing strategy that answers questions such as these, among others: which aspects you are going to test, which types of performance testing you will use, which key metrics you will leverage to measure the results, and which tools fit the testing’s scope and objectives.

Step 2. Set Up the Environment

The test environment should mirror the production environment as closely as possible, and test inputs should resemble the data your solution will deal with in the real world. You should also have monitoring tools in place to track test execution and results.

Step 3. Run the Test

Start small and gradually increase the load, keeping an eye on performance indicators (response time, error rate, etc.). Capture all results in a detailed log for deeper post-test analysis.
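The "start small, then increase" advice can be scripted as a staged ramp. It logs every stage and aborts early once errors exceed a limit, so a failing run doesn't waste hours. `run_stage` is a hypothetical hook that would drive your load tool at a given concurrency and return the p95 latency and error rate. The stage sizes, the fake results, and the 5% abort threshold are assumptions.

```python
import csv
import io
from typing import Callable, TextIO

StageResult = tuple[float, float]  # (p95 latency in ms, error rate from 0 to 1)


def staged_ramp(run_stage: Callable[[int], StageResult], stages: list[int],
                log: TextIO, max_error_rate: float = 0.05) -> int:
    """Run increasing load stages, log each to CSV, stop when errors exceed the limit.

    Returns the number of stages executed.
    """
    writer = csv.writer(log)
    writer.writerow(["users", "p95_ms", "error_rate"])
    for i, users in enumerate(stages, start=1):
        p95_ms, error_rate = run_stage(users)
        writer.writerow([users, f"{p95_ms:.1f}", f"{error_rate:.4f}"])
        if error_rate > max_error_rate:
            return i  # abort: heavier stages would only fail harder
    return len(stages)


def fake_stage(users: int) -> StageResult:
    """Hypothetical stand-in: latency grows with load, errors appear at 2,000 users."""
    return 100 + users * 0.2, (0.0 if users < 2_000 else 0.08)


buffer = io.StringIO()  # in practice: open("ramp_log.csv", "w", newline="")
executed = staged_ramp(fake_stage, [250, 500, 1_000, 2_000, 4_000], buffer)
print(executed)  # -> 4
print(buffer.getvalue())
```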

Step 4. Analyze the Results

This analysis involves comparing the collected metrics against the expected benchmarks, detecting trends indicative of issues, exposing the root causes of failures, and identifying areas for improvement.
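Comparing results against expected benchmarks is easy to automate, and an automated comparison can also serve as a pass/fail gate in a CI pipeline. This sketch assumes metrics arrive as a dictionary (for example, from a summary step) and that every benchmark is an upper bound. The metric names and limits are hypothetical.

```python
def check_benchmarks(metrics: dict[str, float], limits: dict[str, float]) -> list[str]:
    """Return a human-readable violation for every metric above its upper limit."""
    violations = []
    for name, limit in limits.items():
        if name not in metrics:
            violations.append(f"{name}: not measured")
        elif metrics[name] > limit:
            violations.append(f"{name}: {metrics[name]:g} exceeds limit {limit:g}")
    return violations


# Hypothetical service-level targets and one run's results.
limits = {"p95_ms": 800, "error_rate": 0.01, "cpu_utilization": 0.75}
run = {"p95_ms": 950, "error_rate": 0.004, "cpu_utilization": 0.71}

problems = check_benchmarks(run, limits)
for p in problems:
    print(p)  # -> p95_ms: 950 exceeds limit 800
# In CI, exiting non-zero when `problems` is non-empty would fail the job.
```

Reporting a missing metric as a violation is a deliberate choice. A silently absent measurement should not pass the gate.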

Step 5. Optimize and Reiterate

After understanding the reasons for problems, introduce remedial changes (optimize code, upgrade resources, tweak configurations, etc.). Then, re-run the test to make sure the adjustments you implemented had a positive impact on the targeted performance aspects.
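Re-running the test only pays off if you compare runs consistently. Below is a minimal sketch of such a comparison. It classifies each metric as improved, regressed, or unchanged relative to the baseline run. Lower is treated as better, except for metrics listed as higher-is-better (such as throughput). The metric names and the 5% noise margin are assumptions.

```python
def compare_runs(before: dict[str, float], after: dict[str, float],
                 higher_is_better: frozenset[str] = frozenset({"throughput_rps"}),
                 noise: float = 0.05) -> dict[str, str]:
    """Classify each metric present in both runs as improved, regressed, or unchanged."""
    verdicts = {}
    for name in sorted(before.keys() & after.keys()):
        b, a = before[name], after[name]
        # Positive gain means "better", whichever direction that is for this metric.
        gain = (a - b) if name in higher_is_better else (b - a)
        if b != 0 and abs(gain) / abs(b) <= noise:
            verdicts[name] = "unchanged"  # within the assumed run-to-run noise
        elif gain > 0:
            verdicts[name] = "improved"
        elif gain < 0:
            verdicts[name] = "regressed"
        else:
            verdicts[name] = "unchanged"
    return verdicts


# Hypothetical results before and after an optimization.
before = {"p95_ms": 950, "error_rate": 0.004, "throughput_rps": 820}
after = {"p95_ms": 610, "error_rate": 0.004, "throughput_rps": 845}
print(compare_runs(before, after))
# -> {'error_rate': 'unchanged', 'p95_ms': 'improved', 'throughput_rps': 'unchanged'}
```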

While conducting any of the aforementioned types of performance testing, you should watch out for typical mistakes.

Common Performance Testing Mistakes to Avoid

Inexperienced testers very often overlook certain details that can ultimately ruin the entire process. What are they?

- Underestimating the cost. Meticulously recreating production conditions is no chump change, so make sure you have sufficient funding for performance testing.

- Lack of planning. Crafting use case scenarios and load patterns requires thorough preliminary planning, without which the success of the procedure is dubious.

- Disregarding data quality. Inconsistent and irrelevant data leveraged for testing can distort the results.

- Technological mismatch. Testing tools should play well with a particular tech stack; otherwise, you will waste a lot of time trying to align them.

- Starting to test late in the SDLC. You can launch tests as soon as a unit is built. Relegating performance testing to later stages increases the number of errors and glitches that need to be corrected.

- Disregarding scalability. Always plan for the future and envision the potential growth of the system’s user audience.

- Creating unrealistic tests. Tests you run should simulate the real-world usage scenarios as much as possible and involve operating systems, devices, and browsers with which the solution will work.

- Forgetting about the user. You should always adopt a user’s perspective while gauging the importance of various performance metrics.

As you can see, preparing and conducting an efficient performance test is a challenging task that should be entrusted to experienced specialists in the domain, wielding first-rate testing tools. Contact Testomat.io and schedule a consultation with our experts.

Key Takeaways

Performance testing is an umbrella term that encompasses a range of techniques used to verify whether software meets basic performance indicators (response time, latency, throughput, stability, resource utilization, and more). It should be conducted after each milestone in the SDLC and in several post-launch situations, such as after adding new features, after introducing updates, and before anticipated surges in the number of users or queries.

What are the types of performance testing? They include load, stress, scalability, endurance (aka soak), spike, volume, peak, resilience, and breakpoint testing. To obtain good testing results and a high-performing solution, select the proper test types, opt for the relevant automation tools, follow a straightforward testing process, avoid the most frequent mistakes, and hire competent vendors to assist you with planning and conducting the procedure.

Frequently Asked Questions

How many types of performance testing are there?

This article covers nine main types: load, stress, scalability, endurance (soak), spike, volume, peak, resilience, and breakpoint testing. Each evaluates different aspects of system behavior under various conditions to ensure stability and speed.

What is an example of performance testing?

An example is simulating thousands of users accessing a website simultaneously to see how response times and server stability hold under heavy load.

What is QA performance testing?

QA performance testing ensures an application’s speed, responsiveness, and stability meet defined benchmarks before release. It helps uncover bottlenecks, scalability limits, and user experience issues under real-world usage.

What is the best tool for performance testing?

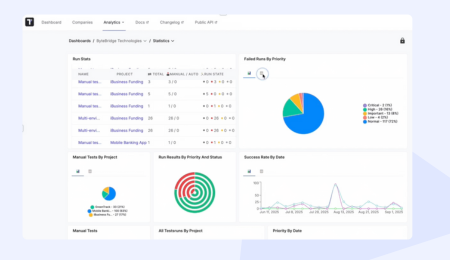

Testomat.io is a modern test management platform that supports performance testing workflows, integrates seamlessly with automation tools, and provides real-time analytics for informed QA decisions.