Emphasising the deep industry heritage and innovation that date back to early hardware testing and manufacturing, the speaker connects historical milestones to modern developments in AI assurance. He underlines the growing importance of regulatory frameworks and trustworthy AI systems, reflecting ongoing efforts to ensure safety, fairness, and accountability as AI becomes a critical part of the technological landscape.

Key Takeaways

- AI integration in testing has evolved from rule-based methods to generative and agentic AI platforms.

- Industry heritage informs current advancements and the need for trustworthy AI practices.

- Regulatory standards aim to balance innovation with safety and ethical considerations.

Evolution of Automation and AI in Testing

Test automation traces back to the iterative build-and-test cycles that gained momentum in the mid-20th century. Initial efforts focused on hardware manufacturing, progressively improving the production of test equipment and enabling breakthroughs such as stereo sound and radio frequency (RF) signal testing.

These foundations paved the way for using automation to enhance reliability and efficiency in software and hardware test processes.

Progress in Model-Driven Testing Techniques

In the 2010s, model-based testing began to be combined with machine learning algorithms. Early platforms used models to generate test cases automatically and to predict the next steps in a test sequence, before transformers became mainstream.

This marked a shift towards integrating traditional AI methods with automated processes, improving test generation and coverage.
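As an illustration of model-based test generation, here is a minimal sketch, assuming the system under test can be described as a small finite-state model. The login model, its state and action names, and the functions `shortest_paths` and `transition_cover` are hypothetical, not taken from the talk. The sketch also leaves out the machine-learning layer that predicts likely next steps; it only shows the core idea of deriving test cases from a model, here by covering every transition once.

```python
from collections import deque
from typing import Dict, List

# Hypothetical model of a login screen: state -> {action: next_state}
MODEL: Dict[str, Dict[str, str]] = {
    "logged_out": {"enter_valid": "logged_in", "enter_invalid": "error"},
    "error": {"retry": "logged_out"},
    "logged_in": {"logout": "logged_out"},
}


def shortest_paths(model: Dict[str, Dict[str, str]], start: str) -> Dict[str, List[str]]:
    """Breadth-first search: shortest action sequence from `start` to each reachable state."""
    paths: Dict[str, List[str]] = {start: []}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        for action, nxt in model.get(state, {}).items():
            if nxt not in paths:
                paths[nxt] = paths[state] + [action]
                queue.append(nxt)
    return paths


def transition_cover(model: Dict[str, Dict[str, str]], start: str) -> List[List[str]]:
    """One test case per transition: drive to the source state, then fire the action."""
    paths = shortest_paths(model, start)
    tests = []
    for state, actions in model.items():
        if state not in paths:
            continue  # unreachable from start, so no test can exercise it
        for action in actions:
            tests.append(paths[state] + [action])
    return tests


if __name__ == "__main__":
    for case in transition_cover(MODEL, "logged_out"):
        print(" -> ".join(case))
```

For this model the generator yields four sequences, one per transition: `enter_valid`, `enter_invalid`, `enter_invalid -> retry`, and `enter_valid -> logout`. A learning component of the kind described in the talk could then rank or extend such sequences; that part is not shown.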

Emergence of Generative AI Testing Solutions

By 2022, generative AI platforms had begun to transform test automation through natural-language-driven code generation and execution. Teams combining AI research expertise from multiple global centers developed systems that use large action and vision models for more advanced autonomous testing.

Recent innovations emphasize AI assurance principles, aiming to build trust and governance into these automated testing frameworks.

Agentic AI Explained

Agentic AI refers to systems designed to perform tasks independently by making decisions and taking actions without continuous human intervention. These AI agents integrate automation with cognitive capabilities to operate in complex environments.

This approach extends beyond simple automation, enabling AI to interact with software and hardware ecosystems through purposeful, goal-directed behaviors.
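The goal-directed behavior described above can be reduced to an observe, decide, act loop. The following sketch assumes a toy checkout application (`ToyApp`) and a rule-based `rule_policy` standing in for the model that would make decisions in a real agentic platform; both are hypothetical. The step budget and the early stop when the policy has no action are illustrative safeguards, not a description of any particular product.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class ToyApp:
    """Hypothetical system under test: a three-screen checkout flow."""
    screen: str = "cart"
    log: List[str] = field(default_factory=list)

    def observe(self) -> str:
        return self.screen

    def act(self, action: str) -> None:
        transitions = {
            ("cart", "click_checkout"): "payment",
            ("payment", "submit_card"): "confirmation",
        }
        self.log.append(action)
        # Actions that do not apply on the current screen leave it unchanged
        self.screen = transitions.get((self.screen, action), self.screen)


def rule_policy(observation: str) -> Optional[str]:
    """Stand-in for the decision-making model; returns None when it has no action."""
    return {"cart": "click_checkout", "payment": "submit_card"}.get(observation)


def run_agent(app: ToyApp, policy: Callable[[str], Optional[str]],
              goal: str, max_steps: int = 10) -> bool:
    """Observe, decide, act until the goal screen is reached or the step budget runs out."""
    for _ in range(max_steps):
        observation = app.observe()
        if observation == goal:
            return True
        action = policy(observation)
        if action is None:
            return False  # no sensible action: stop and hand over to a human
        app.act(action)
    return app.observe() == goal


if __name__ == "__main__":
    app = ToyApp()
    print(run_agent(app, rule_policy, "confirmation"), app.log)
```

Swapping `rule_policy` for a call to a language or vision model is what would make the loop "agentic" in the sense used here; the loop structure itself stays the same.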

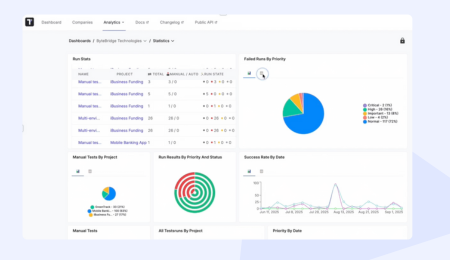

Applications in Automated Software Validation

Agentic AI is increasingly applied to software testing, where it generates and executes test cases autonomously. By using AI models to decide what to test, these systems reduce the manual effort required and improve test coverage.

They can create tests from specifications and even from natural-language descriptions, accelerating validation while maintaining accuracy and consistency.
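Generating tests from a specification can be illustrated with boundary-value analysis, a classic technique chosen here only as an example. This sketch assumes the specification has already been reduced to an inclusive numeric range per input field; the `SPEC` format, the field names, and the functions `boundary_cases` and `run_cases` are hypothetical. Turning natural-language input into such a structured spec would need a language model and is outside this sketch.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical structured spec: field name -> inclusive (min, max) range
SPEC: Dict[str, Tuple[int, int]] = {"age": (18, 65), "quantity": (1, 99)}

Case = Tuple[str, int, bool]  # (field, input value, expected to be accepted?)


def boundary_cases(spec: Dict[str, Tuple[int, int]]) -> List[Case]:
    """Boundary-value analysis: values just below, on, and just above each limit."""
    cases: List[Case] = []
    for name, (lo, hi) in spec.items():
        for value in (lo - 1, lo, hi, hi + 1):
            cases.append((name, value, lo <= value <= hi))
    return cases


def run_cases(validator: Callable[[str, int], bool], cases: List[Case]) -> List[Case]:
    """Return the cases where the system's verdict disagrees with the spec."""
    return [case for case in cases if validator(case[0], case[1]) != case[2]]


if __name__ == "__main__":
    def buggy_validator(name: str, value: int) -> bool:
        lo, hi = SPEC[name]
        return lo <= value < hi  # deliberate bug: should be <= hi

    print(run_cases(buggy_validator, boundary_cases(SPEC)))
```

Against the deliberately buggy validator, the generated cases expose exactly the two values that sit on an upper limit, `("age", 65, True)` and `("quantity", 99, True)`, which is the kind of defect boundary tests are designed to catch.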

Role of Large Action and Vision Models

Large-scale models that understand both actions and visual inputs play a critical role in agentic AI platforms. These models allow AI to interpret complex data types, such as images or UI elements, and decide appropriate responses.

The combination of large action and vision models enables more sophisticated interaction with digital environments, supporting tasks from code generation to visual test automation.

AI Assurance and Trustworthiness

AI assurance involves validating that AI systems operate as intended, maintaining reliability and predictability. It addresses the need to build confidence by ensuring systems are designed, tested, and monitored to perform safely under various conditions.

The process includes continuous validation, risk management, and adapting to new challenges posed by advanced AI technologies like large language and vision models.
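Continuous validation can start as simply as tracking a deployed model's accuracy on labelled outcomes and flagging drops. The sketch below is one hypothetical way to do that; the class name, the window size, and the 0.9 default threshold are illustrative assumptions rather than values from any standard, and a real assurance process would monitor far more than accuracy.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Tracks accuracy over the last `window` labelled predictions and flags drops."""

    def __init__(self, window: int = 100, threshold: float = 0.9) -> None:
        # True where the prediction matched the ground truth; old entries fall off
        self.results: deque = deque(maxlen=window)
        self.threshold = threshold

    def record(self, prediction, actual) -> None:
        self.results.append(prediction == actual)

    def accuracy(self) -> float:
        # 0.0 when nothing has been recorded yet
        return sum(self.results) / max(len(self.results), 1)

    def healthy(self) -> bool:
        # Withhold judgement until the window is full, to avoid alarms on tiny samples
        if len(self.results) < self.results.maxlen:
            return True
        return self.accuracy() >= self.threshold


if __name__ == "__main__":
    monitor = RollingAccuracyMonitor(window=4, threshold=0.75)
    for prediction, actual in [(1, 1), (1, 1), (1, 0), (0, 1)]:
        monitor.record(prediction, actual)
    print(monitor.accuracy(), monitor.healthy())  # 0.5 False
```

The fixed-size window means the monitor reacts to recent behavior rather than to the model's lifetime average, which is the point of validating continuously instead of once before release.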

Evolving AI Rules and Frameworks

Governments and international organizations are creating regulations to guide the responsible development and deployment of AI. Policies focus on managing risks while fostering innovation in AI.

Key regulatory efforts emphasize transparency, accountability, and adherence to standards that reduce harm and promote ethical use. These frameworks aim to harmonize global practices around AI governance.

Core Principles for Safe and Fair AI

Safe and fair AI relies on foundational principles such as:

- Safety: Ensuring AI systems do not cause unintended harm.

- Fairness: Preventing bias and promoting equitable outcomes.

- Transparency: Making AI decision processes understandable.

- Accountability: Establishing responsibility for AI behaviors.

Organizations that adopt these principles engage in proactive risk mitigation and self-regulation to maintain trust. Standards such as ISO/IEC TR 29119-11 and ISO/IEC 42001 help formalize these practices.

Industry Heritage and Impact

He traces the roots of key players in the testing industry back to their founding in 1939. Early innovations included test equipment used by companies such as Disney, along with pioneering work in audio and radio frequency testing. This work established iterative hardware development practices long before agile methodologies existed, creating a continuous lineage that links to modern testing practice.

Breakthroughs in Automation and AI Technologies

He details key technological advances, starting with model-based testing combined with machine learning from 2015 onward. In 2017, solutions emerged that generated predictive test sequences using frameworks such as TensorFlow, before the Transformer era. By 2022, his team had introduced what he describes as the first generative AI platform capable of automatic code generation and natural-language-driven automation.

Evolution of Testing Methods Through Emerging Paradigms

The speaker emphasizes how cognitive engineering concepts, discussed as early as 2017, have shifted testing approaches. These include digital twins, brain-computer interfaces, and AI assurance frameworks. Regulatory efforts, such as those driven by the UK government and the EU, aim to establish trust and safety standards through mechanisms such as ISO certification, shaping future assurance strategies for AI-infused testing environments.

| Aspect | Description |
|---|---|
| Early Contribution | Iterative hardware testing since 1939 |
| AI Integration | Model-based and machine learning-driven testing |
| Regulatory Influence | AI assurance standards and ISO frameworks |
| Future Direction | Agentic AI platforms and cognitive automation |

Regulatory Frameworks and Standards

ISO/IEC TR 29119-11 provides guidelines specifically for testing AI-based systems. It describes how AI testing should be conducted to address qualities such as safety, fairness, and transparency. The guidance offers a structured approach to identifying risks and establishing trustworthiness in AI-driven products, emphasizing measurable and repeatable assessment methods.

Requirements of ISO/IEC 42001

ISO/IEC 42001 specifies requirements for organizations that establish and maintain an AI management system. Its core requirements cover ongoing monitoring, risk mitigation, and ethical considerations. The standard encourages organizations to integrate AI governance frameworks that align with both legal compliance and social responsibility.

The Bletchley Declaration on AI Safety

The Bletchley Declaration is a collaborative agreement to promote safe and trustworthy AI, signed by governments at the AI Safety Summit held at Bletchley Park in November 2023, with industry leaders also taking part in the discussions. It highlights the need for international cooperation and appropriate regulation of AI development and deployment. The declaration supports a multi-stakeholder approach to creating trustworthy AI that mitigates harm and fosters public confidence.

AI in the Broader Technological Landscape

AI increasingly influences artistic and creative fields that were previously considered less vulnerable to automation. Generative AI now affects musicians, visual artists, and other creatives, raising questions about the changing nature of creative work. The shift also carries a resource cost, as some AI models consume significantly more energy than traditional methods.

Foundations for Integrating an AI-Driven Workforce

Developing infrastructure to support the integration of AI into the workforce is critical. Large-scale AI systems rely on extensive server farms and complex data centers designed to run automation continuously and support workforce optimization. Investment in such facilities underpins efforts to replace or augment human work with AI, particularly in government and industry.

Emerging Directions in AI-Powered Testing

Advancements in AI are reshaping software and hardware testing practices. By leveraging models that combine natural language processing, machine learning, and vision capabilities, automated test generation and execution have grown more sophisticated. These developments enable more efficient and comprehensive assessment processes, driving greater assurance in AI-infused systems.

Building for the Future

He emphasizes the role of cognitive engineering in AI, highlighting concepts like digital twins and brain-computer interfaces. These innovations aim to enhance how systems interpret and respond to complex data, enabling more intelligent automation tailored to human cognitive processes.

New Horizons for Software Testers

Advancements in AI present fresh possibilities for testers, shifting the focus toward automated test generation and intelligent validation. Utilizing tools powered by machine learning and natural language processing, testers can create more comprehensive test cases faster, improving efficiency and coverage.

Establishing Reliability in Advanced AI

Trustworthiness becomes critical as AI systems grow more complex. He stresses the importance of frameworks and standards, such as ISO guidelines, designed to ensure safety, fairness, and risk mitigation. These governance efforts underpin the development of AI systems that users can rely on.