QA and testing teams often struggle to meet the demands of modern software delivery. In a traditional testing process, it is hard to balance high-quality releases with effective bug detection along the way.

As a result, poorly developed software products can ruin the user experience and damage the company’s name and reputation.

The new trend of applying AI agents in testing can minimize human errors, make the QA teams more productive, and dramatically increase test coverage. In the article below, you can find out what AI-based agent testing is, discover types of AI agents in software testing and their core components, learn how to test with AI agents, and much more.

What is an AI Agent?

An artificial intelligence agent, or AI agent for short, can be anything from a small program to a complex system that uses artificial intelligence technology to complete tasks and meet user needs. Thanks to its ability to reason logically and understand context, an AI-backed assistant can make decisions, learn, and respond to changes on the fly. In most cases, AI agents or AI-powered assistants are characterized by the following:

- They can carry out repetitive or expert tasks and can take over much of a QA team’s routine workload.

- They can function autonomously to attain defined goals, often without constant human intervention.

- They can be fully integrated into organizational workflows.

What is AI Agent Testing?

When we talk about AI testing agents or assistants, we mean smart systems that apply artificial intelligence (AI) and machine learning (ML) to perform or assist in software testing tasks.

They replicate the work of human testers, including test creation, execution, and maintenance, with limited manual involvement, operating under specific parameters that the specialists define. AI-powered assistants are helpful in the following situations:

- Anyone on the team, even without technical expertise, can use them to create and maintain stable test scripts in plain English.

- They can automatically adjust, fix, and update tests in response to system changes, requiring less effort from human testers.

- They can suggest how to improve your tests.

- They can automatically run manually created test cases with minimal direct control from QA specialists.

Types of AI Agents in Software Testing

Below, you can find information about widely known categories of AI agents in software testing, based on their roles and capabilities:

✨ Simple Reflex QA Agent

Reflex Agents use if-then rules or pattern recognition. They follow specified instructions or fixed parameters with rule-based logic and base their decisions on current information. As the most basic type, these AI agents perform tasks based on direct responses to environmental conditions, which include diverse OSs and web browsers, network connections, structured and unstructured data formats, poorly documented APIs, and user traffic. For example, a task for a simple reflex agent can be to detect basic failures (e.g., 404s, missing elements), log errors, and take screenshots when it detects an error message on the screen.
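To make the if-then idea concrete, here is a minimal sketch of a simple reflex agent. The `PageState` fields and the action names are hypothetical, chosen only for illustration; a real agent would read this information from a browser or an HTTP client.

```python
from dataclasses import dataclass, field


@dataclass
class PageState:
    """Hypothetical snapshot of what the agent currently observes."""
    status_code: int
    visible_text: str
    missing_elements: list[str] = field(default_factory=list)


def reflex_agent(state: PageState) -> list[str]:
    """Map the current observation to actions using fixed if-then rules."""
    actions = []
    if state.status_code == 404:
        actions.append("log_error:page_not_found")
    if state.missing_elements:
        actions.append("log_error:missing_elements")
    if "error" in state.visible_text.lower():
        actions.append("take_screenshot")
    return actions


print(reflex_agent(PageState(404, "Error: page not found")))
```

Note that the agent keeps no memory: the same observation always produces the same actions.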

✨ Model-based Reflex Test Agent

These agents are intelligent enough to act on new data not just directly, but with an understanding of the context in which it is presented. They keep an internal model of the system, consider the broader context, and only then respond to new inputs, so they can simulate user flows or business logic. Model-based reflex agents are used for more complex testing tasks because their decisions are based on what they remember and know about the situation around them.

For example, this type of agent remembers past login attempts. If a user fails to log in several times, it will attempt a Forgot Password process or alert about a potential account lockout instead of just logging an error.
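The login example can be sketched as follows. This is only an illustration: the class name, the threshold of three attempts, and the returned action strings are assumptions, not part of any specific tool.

```python
from collections import defaultdict


class LoginMonitorAgent:
    """Model-based reflex agent: decisions depend on remembered state."""

    def __init__(self, lockout_threshold: int = 3):
        self.lockout_threshold = lockout_threshold  # assumed value
        self.failed_attempts = defaultdict(int)     # internal model of the world

    def observe(self, user: str, login_succeeded: bool) -> str:
        if login_succeeded:
            self.failed_attempts[user] = 0
            return "continue"
        self.failed_attempts[user] += 1
        if self.failed_attempts[user] >= self.lockout_threshold:
            # Enough failures remembered: react to the pattern, not the single event.
            return "start_forgot_password_flow"
        return "log_error"


agent = LoginMonitorAgent()
for outcome in (False, False, False):
    print(agent.observe("alice", outcome))
```

The third failed attempt triggers a different action than the first two, even though the observation itself is identical.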

✨ Goal-based Agents

Goal-based agents are sometimes also described as rule-based agents because they follow a set of rules to achieve concrete goals. They choose the best tactics or strategy and can use search and planning algorithms to reach those goals. For example, if an agent’s goal is to find every unique error or warning, it can create test scripts that target only unforeseen errors, which decreases re-testing effort.

✨ Utility-based AI testing Agent

These agents are capable of making informed decisions. They can analyze complex issues and select the most effective options for the actions. When predicting what might happen for each action, they rate how good or useful each possible outcome is and finally choose the action most likely to give the best result.

For example, instead of just running all tests like Goal-based agents or reacting to immediate errors like simple reflex agents, these ones include a utility function, which allows them to rate situations and skip some less critical ones to find high-severity bugs.
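A utility function of this kind might look like the sketch below. The formula (failure probability times severity, divided by run time) and all field names are assumptions made for illustration; real tools may weigh these factors differently.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    failure_probability: float  # estimated chance the test reveals a bug (0..1)
    severity: int               # impact of a bug in this area (1..5)
    duration_min: float         # expected run time in minutes


def utility(test: Candidate) -> float:
    """Expected bug-finding value per minute of execution."""
    return test.failure_probability * test.severity / test.duration_min


def select_tests(candidates: list[Candidate], budget_min: float) -> list[str]:
    """Greedily pick the highest-utility tests that fit into the time budget."""
    chosen, used = [], 0.0
    for test in sorted(candidates, key=utility, reverse=True):
        if used + test.duration_min <= budget_min:
            chosen.append(test.name)
            used += test.duration_min
    return chosen


suite = [
    Candidate("checkout", 0.3, 5, 10),
    Candidate("profile_avatar", 0.1, 1, 5),
    Candidate("login", 0.2, 5, 2),
]
print(select_tests(suite, budget_min=12))
```

With a 12-minute budget, the low-severity avatar test is skipped in favor of the login and checkout tests.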

✨ Learning Agents

These assistants can use past experience and learn from past mistakes or code updates. Based on feedback and data, they adapt and improve over time. For example, QA teams can use learning agents to optimize regression testing. AI-powered assistants learn over time from previous bugs and codebase changes, prioritising areas with frequent failures and focusing the team’s attention on what matters most.
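One simple way to picture this kind of learning is a running failure score per application area, updated after every test run. The sketch below uses an exponential moving average; the class name and the learning rate of 0.5 are assumptions for illustration, and production tools typically rely on far richer models.

```python
class FailureRateLearner:
    """Keeps a running failure score per area and ranks areas by it."""

    def __init__(self, learning_rate: float = 0.5):
        self.learning_rate = learning_rate  # how strongly new results outweigh old ones
        self.scores: dict[str, float] = {}

    def record(self, area: str, failed: bool) -> None:
        old = self.scores.get(area, 0.0)
        outcome = 1.0 if failed else 0.0
        # Move the score a fraction of the way toward the latest outcome.
        self.scores[area] = old + self.learning_rate * (outcome - old)

    def priorities(self) -> list[str]:
        """Areas ordered from most to least failure-prone."""
        return sorted(self.scores, key=self.scores.get, reverse=True)


learner = FailureRateLearner()
for area, failed in [("checkout", True), ("checkout", True),
                     ("search", True), ("search", False)]:
    learner.record(area, failed)
print(learner.priorities())
```

Areas that keep failing drift toward a score of 1 and rise to the top of the regression queue, while areas that recover sink back down.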

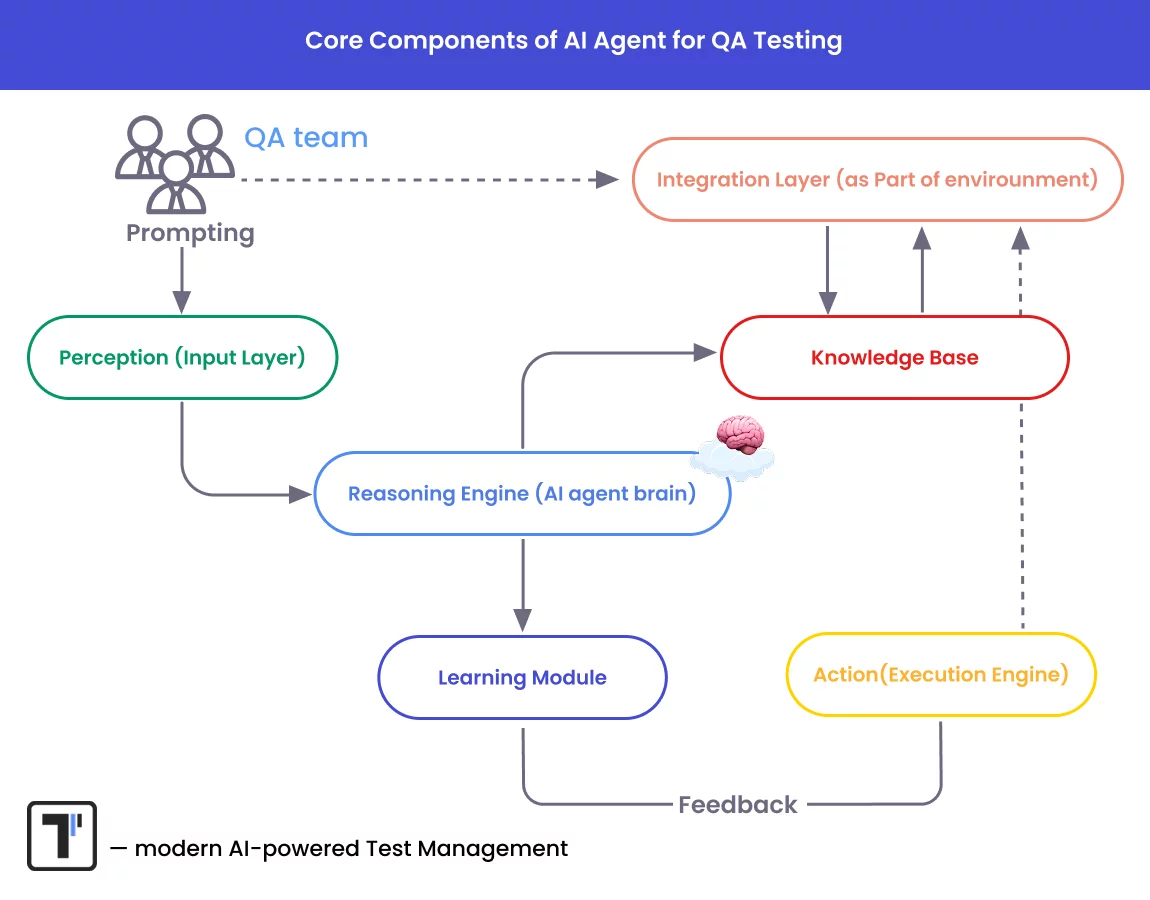

The Core Components of an AI Agent for QA Testing

The essential parts of an AI agent for QA testing allow it to intelligently analyse, learn, adapt, and act during the software testing process. Let’s explore them more:

- Perception (Input Layer). Collects data from the environment. It might be code changes, test results, test execution logs, test diff analysis, API responses or some patterns in the test project.

- Knowledge Base. Stores the historical information the agent learns from. Its contents typically include past bugs and their root causes, test coverage data, and frequently failing components. This helps the testing agent make informed decisions based on context and experience.

- Reasoning Engine (AI agent brain). Makes decisions based on current input and the knowledge scope. AI agents use techniques such as rule-based logic, impact analysis, risk-based prioritization, and dependency graph evaluation to process information. For example, the agent can decide which tests to execute based on recent code changes.

- Learning Module. AI agents learn from user stories and test cases by training, adapting to patterns in them over time. This continuous learning helps reduce false positives and minimize test noise, improving overall test reliability. By leveraging natural language processing (NLP), they can predict potential test failures, detect flakiness and intelligently optimize the sequence in which tests are executed.

- Action (Execution Engine). Performs tasks based on decisions. For example: generating new test cases, selecting and proposing the execution of the most relevant tests, reporting defects or opening issues.

- Feedback Loop. Analyzes test engineers’ feedback on false positives to improve future answers. It enables continuous learning and self-improvement of the AI agent.

- Integration Layer. Integrates the agent with test frameworks, bug trackers, project management tools like Jira, documentation tools such as Confluence, and CI/CD pipelines. This layer enables seamless connection between the agent and external tools, allowing it to both send and receive data. The incoming information supports the agent’s perception and learning modules, enhancing its ability to respond in a reasoned and intelligent manner throughout the testing process.

So, these components work together to support automated, efficient, and smarter testing.
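As an illustration of the Reasoning Engine step, where the agent decides which tests to execute based on recent code changes, here is a minimal impact-analysis sketch. The mapping from tests to the source files they cover is a hypothetical input; in practice it could come from coverage data stored in the Knowledge Base.

```python
def select_impacted_tests(changed_files: list[str],
                          test_dependencies: dict[str, list[str]]) -> list[str]:
    """Return tests that depend on at least one changed file."""
    changed = set(changed_files)
    return sorted(
        test for test, deps in test_dependencies.items()
        if changed.intersection(deps)
    )


# Hypothetical dependency map: test name -> source files it exercises.
dependencies = {
    "test_login": ["auth/session.py", "auth/forms.py"],
    "test_checkout": ["cart/models.py", "payments/api.py"],
    "test_profile": ["users/profile.py"],
}
print(select_impacted_tests(["payments/api.py", "auth/forms.py"], dependencies))
```

Only the tests touching the changed files are proposed for execution; the rest can wait for the full regression run.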

Considering this flow… 🤔 What do you think: where should test engineers focus the most? Right! On their prompting and on filling in artifacts and documentation. Let’s move on to the next section:

What inputs does an AI agent depend on?

First, focus on the quality of your inputs, specifically the prompts, since the accuracy and usefulness of the results depend directly on how your instructions are formulated. Today, prompting has become a key skill for modern QA engineers working alongside AI-driven tools.

What is prompt engineering in software testing?

Prompt engineering is the practice of crafting the specific questions, instructions, and inputs that you give to MCP AI agents to guide their responses, so that they can generate and improve tests, highlight potential faults, and assist with analysis and decision-making.

5 basic rules for prompting in software testing

1. Be clear, avoid vague instructions. Define exactly what you want: test type, tool, scenario, outcome.

Example of a good prompt for generating test cases:

✅ Generate test cases for the login form with email and password fields and a Sign In button to verify that they work correctly.

❌ Instead of just: Write a test for the form.

2. Provide Context. The more context, the better the result. Include the user story, functionality, code snippet, or bug description.

Example of a prompt based on a user story:

✅ Based on this user story <User Story RM-1523>: As a user, I want to reset my password via email, suggest edge cases for testing.

❌ Generate tests for password.

3. Include expected behavior or test goals. This helps the AI understand the validation points or test criteria. Also, use terms like edge cases, negative testing, or happy path to guide the logic.

Example:

✅ Write a test case that ensures users are redirected to the dashboard after logging in.

4. Avoid overloading the prompt. Do not cram too many instructions into one sentence. It may confuse the MCP Agent’s model and reduce output quality. It is better to break large tasks into smaller steps, using step-by-step or follow-up prompts.

Example of a step-by-step prompt:

✅ Start with: “What should I test in…” then ask for detailed test cases or code.

5. Mention the tool, framework, and format. If you are using a specific stack (like Playwright, Cypress, Selenium) or need the output in a specific form (a checklist, code, a test case described in Gherkin, etc.), just say so in the prompt.

Examples:

✅ Write Gherkin-style scenarios for login functionality in Playwright with invalid credentials.

✅ Return the test cases in a markdown table format.
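If your team writes prompts often, the five rules above can be turned into a small reusable template. The helper below is a hypothetical sketch, not part of any tool: it simply refuses to build a prompt when one of the required pieces (task, context, expected behavior, stack, output format) is empty.

```python
def build_test_prompt(task: str, context: str, expected: str,
                      stack: str, output_format: str) -> str:
    """Assemble a structured prompt; every field is required."""
    fields = {
        "Task": task,
        "Context": context,
        "Expected behavior": expected,
        "Tool/framework": stack,
        "Output format": output_format,
    }
    missing = [name for name, value in fields.items() if not value.strip()]
    if missing:
        raise ValueError(f"Missing prompt fields: {', '.join(missing)}")
    return "\n".join(f"{name}: {value.strip()}" for name, value in fields.items())


print(build_test_prompt(
    task="Suggest edge cases for the password reset flow",
    context="User story RM-1523: As a user, I want to reset my password via email",
    expected="The user receives a reset email and can set a new password",
    stack="Playwright",
    output_format="Markdown table",
))
```

Keeping one field per rule also makes it easy to split a large request into follow-up prompts: reuse the same context and change only the task.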

Reasonable artifacts in input data equal efficient AI prompting

Secondly, always remember to keep your artifacts well-structured. Avoid inflating your test project with excessive, unused test cases, which is a red flag. Prioritize only what’s necessary. This discipline is especially important for AI agents, as they rely on clear, relevant artifacts and work with the following:

- Requirements & Specifications. AI agents should have access to a detailed description of the intended purpose and environment of the system under test. Knowing the functional and non-functional requirements allows assistants to better understand what the system should do and how well it should perform in terms of speed, usability, security, etc.

- Existing user stories. AI agents need access to user stories to understand the desired features from a user’s perspective, which allows them to simulate realistic user journeys and test the end-to-end experience.

- Test Cases. AI-based agents need access to test cases because these tell them what steps to take and what situations to test when checking software. With this information, assistants can make sure that the software works correctly and can find bugs.

- Bug Reports. You can find them in Jira, Bugzilla, Linear, or internal analytics dashboards, as well as in external tools like testomat.io Defect Tracking Metrics. AI agents link to these reports to reproduce bugs and identify the cause of failures in test runs. AI can also summarize bug trends to inform QA decisions on bug prevention.

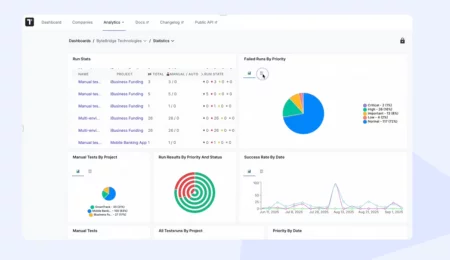

- Reporting and Analytics metrics. AI agents collect data from tools like Allure reports, CI/CD pipelines, and test dashboards. The AI evaluates test duration, failure trends, and pass/fail consistency. Frequent or critical test failures are flagged for priority investigation. The AI agent suggests fixes for unstable tests and learns which tests are most valuable for regression based on their history. Based on these insights, it recommends test automation optimizations.

- Documentation. With access to the testing documents, AI agents know what the software should do and what its goals are. These documents also tell them exactly what to test and give clear rules and expected results, so a test can be judged as passed or failed. Also, AI-based agents can run existing tests and learn from past results in reports to carry out smarter and more effective testing.
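To show how pass/fail consistency from reports can be turned into a signal, here is a minimal flakiness sketch. It counts how often a test flips between passing and failing across consecutive runs; the 0.3 threshold and the function names are assumptions for illustration only.

```python
def flip_rate(history: list[bool]) -> float:
    """Share of consecutive runs where the outcome changed (0 = stable, 1 = alternating)."""
    if len(history) < 2:
        return 0.0
    flips = sum(1 for prev, cur in zip(history, history[1:]) if prev != cur)
    return flips / (len(history) - 1)


def flaky_tests(histories: dict[str, list[bool]], threshold: float = 0.3) -> list[str]:
    """Names of tests whose outcomes change more often than the threshold allows."""
    return sorted(name for name, runs in histories.items()
                  if flip_rate(runs) >= threshold)


runs = {
    "test_login": [True, False, True, False, True],      # alternates: likely flaky
    "test_cart": [True, True, True, True, True],         # stable pass
    "test_export": [False, False, False, False, False],  # stable fail: a real bug, not flaky
}
print(flaky_tests(runs))
```

A consistently failing test is deliberately not reported as flaky, since it points to a reproducible defect rather than an unstable test.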

Choose the best Artificial Intelligence testing agent

The test management system testomat.io is a modern AI-powered test management tool that helps you easily develop test artifacts and organize test projects with maximum clarity.

It is more than just a data repository and a tool for storing your test artifacts; it offers powerful, AI-driven functionality to accelerate your QA process. The AI Orchestration is integrated across your entire test lifecycle — from requirements to execution and defect tracking — while synchronising automation and manual testing efforts, supported by numerous integrations and AI enhancements.

Other strengths are Collaboration and Scalability. QAs in the team can easily share their test result reviews, flexibly select tests for test plans and runs, adapting AI suggestions to fit their specific needs.

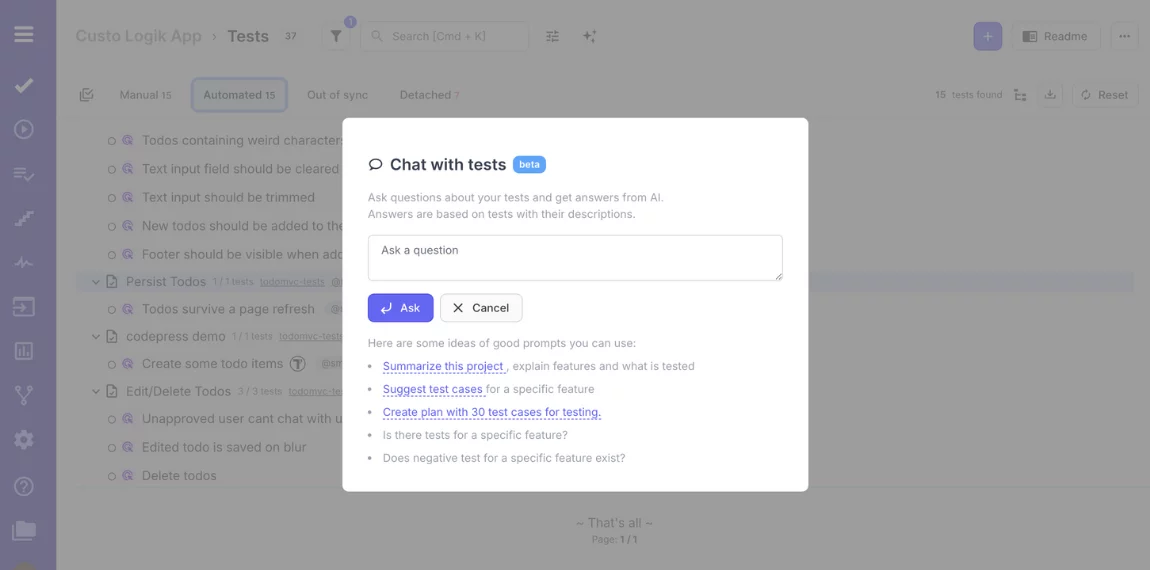

The AI Assistant works at the following levels:

Generative AI and Chat with test modes provide direct interaction with the test case suites using natural language — it looks like chatting with a QA teammate. You can generate new test cases or refactor existing ones by automating these repetitive test tasks; manage suggestions, map tests to requirements, identify test gaps and flaky scenarios, and gain a clearer understanding of your test coverage to improve it continuously.

For instance, here are some sample prompts:

→ What does this test case do?

→ Write edge tests for password reset

→ Rewrite this test to be more readable

→ Which parts of the app are under-tested?

→ Find duplicates

→ Map these test cases to requirements

To improve the feedback you get, teach the MCP AI model gradually with clarifying follow-up prompts.

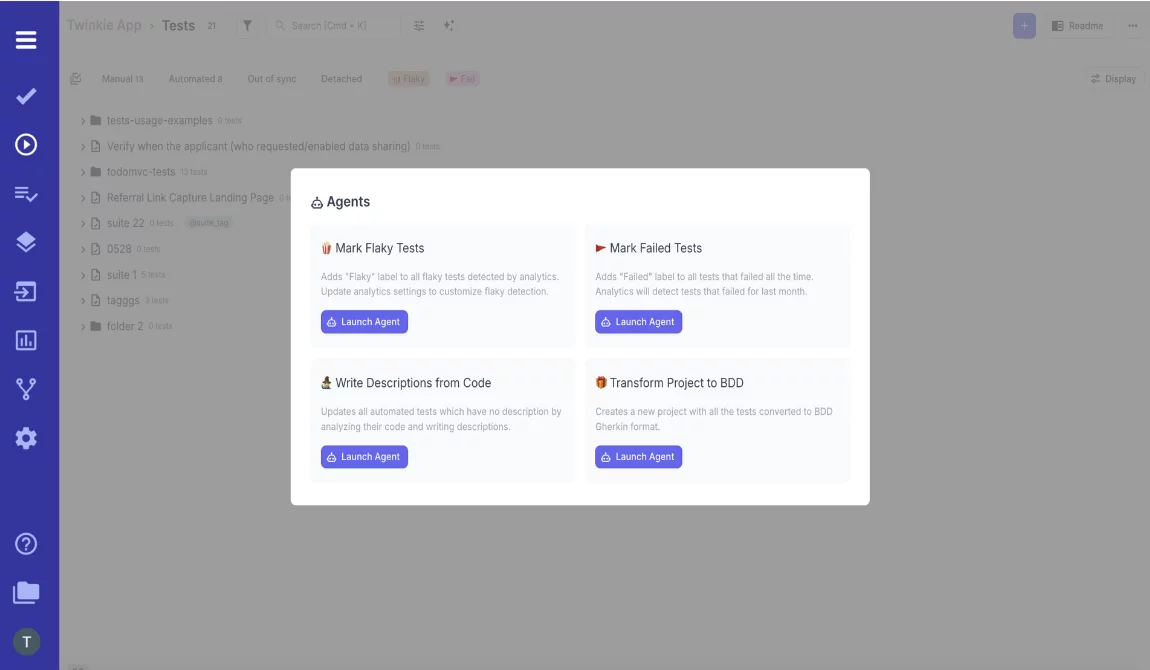

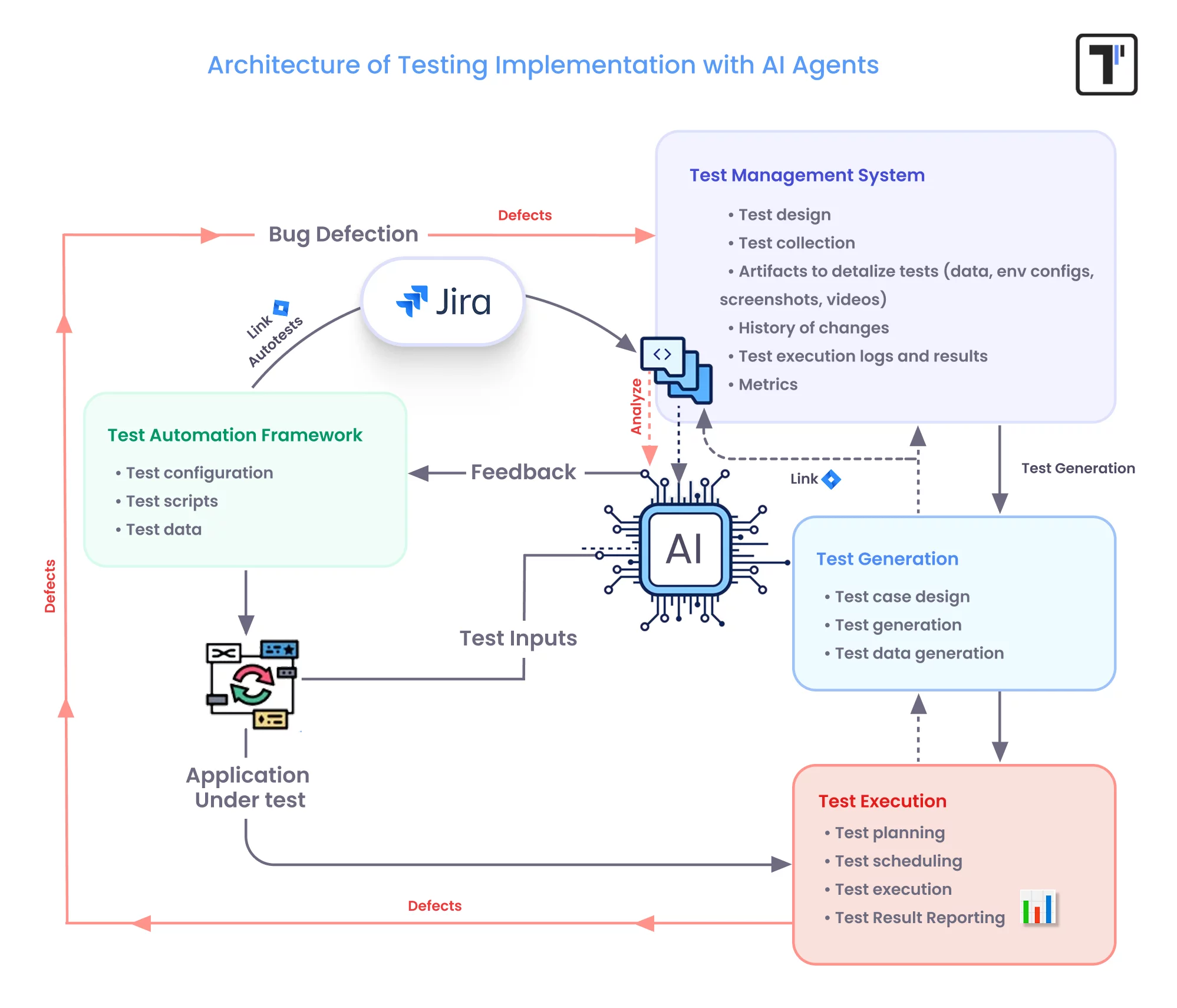

The AI agent is an intelligent automation component that serves as a bridge between the test management system and the test automation framework ecosystem. This AI agent actively learns, analyzes, and optimizes the testing process. Namely, it can suggest clear, easy-to-understand test descriptions for automated tests, making them accessible even to non-technical stakeholders (Manual QAs, Business Analysts), and can automatically transform your project into Behavior-Driven Development (BDD) format. Additionally, it detects flaky or failing tests based on execution history.

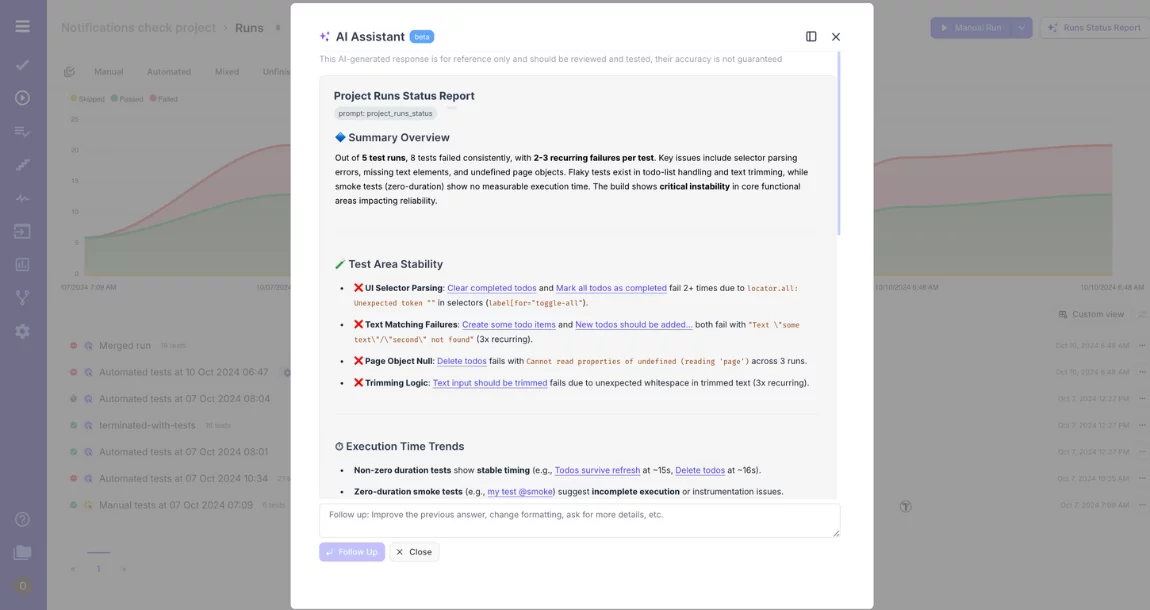

AI Reporting and Analytics is also our strength. Insights from the AI Assistant are not hidden; they are delivered as suggestions in the UI of the test Report. The development team has currently implemented two kinds of AI extensions inside the Report: Project Status Report and Project Run Status Report. These reports are generated automatically based on recent test run history. They deliver instant visibility into the health of the test project without delving into individual Test Archive logs.

The AI testing agent provided by testomat.io is an intelligent testing co-pilot. It empowers testers to move faster, test smarter, and reduce risk, all with less manual effort. Below, we break down its capabilities in action and show how its workflow operates.

The Use of AI Agents for Software Testing

AI-driven agents are changing the way software QA engineering teams do their work, enabling them to make the testing process faster, more reliable, and more efficient. Here are the key areas where AI assistants are considered the most reliable helping hand:

✨ Test Case Generation

To speed up the creation of different test suites, QA and testing teams can use artificial intelligence assistants, which take the software requirements into account and can turn simple instructions into test scripts on the fly. Based on Natural Language Processing (NLP) and Generative AI, this process is much faster and covers a wide range of situations that would take human QAs and testers much longer to write.

✨ Test Case Prioritization

AI-powered assistants analyze previous test results, code changes, and defect patterns, which help them decide the most effective sequence of test runs. Instead of relying on fixed or random order, these models use data from prior test executions to prioritize tests and optimize the selection of test cases.

✨ Automated Test Execution

AI-based assistants/agents are capable of running tests around the clock without QA specialists’ involvement. When the source code is changed, test suites are automatically triggered to execute continuous testing and provide fast feedback. In addition, integrations with test case management systems allow bugs to be reported and all updates to be automatically shared with relevant teams and stakeholders.

✨ Shift-Left Testing

In shift-left testing, AI-based agents deliver faster execution and identify bugs quickly, which enables developers to resolve issues earlier. AI-powered agents can also adapt to evolving project requirements to suggest relevant tests to run based on code changes.

✨ Test Adaptation

Thanks to self-healing capabilities, AI agents can respond to changes in the application’s interface and adjust their actions based on what has changed. They can handle UI changes, API modifications, or backend changes, keeping automated tests up to date whenever the codebase changes.
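A very simplified picture of self-healing is a locator with fallbacks: when the primary selector disappears after a UI change, the agent tries alternatives and reports that the test needs an update. In the sketch below the page is modeled as a plain dictionary, which is a toy stand-in for a real browser; the selectors and function name are hypothetical.

```python
def find_element(dom: dict[str, str], locators: list[str]) -> tuple[str, str, bool]:
    """Return (element, locator_used, healed) for the first locator found in the DOM."""
    for position, locator in enumerate(locators):
        if locator in dom:
            # healed is True when the primary locator failed and a fallback matched.
            return dom[locator], locator, position > 0
    raise LookupError(f"No locator matched: {locators}")


# Toy DOM after a release in which the old "#login-btn" id was removed.
page = {"[data-testid=sign-in]": "<button>", "text=Sign In": "<button>"}

element, used, healed = find_element(
    page, ["#login-btn", "[data-testid=sign-in]", "text=Sign In"]
)
print(used, healed)
```

When `healed` is true, the agent can continue the run and also propose replacing the outdated locator in the test script.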

✨ Self-Learning

Thanks to their ability to learn from previous test findings, AI agents can analyze trends and patterns from past testing cycles, which helps them predict future test results. As they learn and adapt, assistants get better at identifying potential bugs and at quickly making decisions to address them proactively.

✨ Visual Testing

Backed by computer vision, agents can detect UI mismatches across various devices and screen sizes. They verify the visual accuracy of the parts that users see and interact with. AI-based agents aim to find visual ‘bugs’ such as misaligned buttons, overlapping visuals (images, texts), and partially visible elements, which might be missed during traditional functional testing.

✨ Test Result Analysis

AI agents can review test results on their own in order to find failures and group similar defects. They also point out patterns in the data, which help them detect the root cause faster and focus on what matters most – identifying patterns that may lead to vulnerabilities in the system.
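Grouping similar defects can be illustrated with a simple normalization trick: strip the variable parts of error messages (numbers, hex addresses) and bucket failures by what remains. This is only a sketch with hypothetical test names and messages; real agents may use text embeddings or clustering instead of regular expressions.

```python
import re
from collections import defaultdict


def normalize(message: str) -> str:
    """Replace variable parts of an error message with placeholders."""
    message = re.sub(r"0x[0-9a-fA-F]+", "<hex>", message)
    message = re.sub(r"\d+", "<n>", message)
    return message.strip().lower()


def group_failures(failures: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Group failed test names by their normalized error message."""
    groups = defaultdict(list)
    for test_name, message in failures:
        groups[normalize(message)].append(test_name)
    return dict(groups)


failed = [
    ("test_add_item", "Timeout after 30 s on /cart/15"),
    ("test_remove_item", "Timeout after 45 s on /cart/2"),
    ("test_submit_order", "Element #submit not found"),
]
for signature, tests in group_failures(failed).items():
    print(signature, tests)
```

Two of the three failures collapse into one timeout group, which hints at a single root cause worth investigating first.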

Overview: Pros and Cons of AI agent for software testing

This table compares the advantages and disadvantages of AI agent testing (agentic testing), reminds us of common AI hallucination problems, and provides a balanced view of AI’s role in testing processes.

| ✅ Pros of agentic testing | ❌ Cons of AI agent testing |
| --- | --- |
| Generating test cases that humans might miss and improving test coverage. | Inability to understand the wider context: user intent, business logic, and non-functional requirements that a human tester would comprehend. |
| Updating test cases automatically in response to code changes. | Generating test cases that trigger false positives or false negatives and require careful review before implementation. |
| Running test suites faster, accelerating the release cycle, and reducing manual effort. | Requiring ongoing maintenance and updates to adapt to evolving testing needs. |
| Predicting potential bugs based on previous test data. | Having blind spots or making inaccurate predictions when training data is poor and does not cover a broad range of test scenarios and edge cases. |
| Identifying and fixing broken tests. | Lack of human intuition in complex scenarios. |
| Self-learning capabilities and adapting testing strategies or techniques based on feedback. | Over-reliance on AI could reduce critical human oversight among test engineers, especially in senior and QA manager roles, where risks would otherwise be noticed. |

How to Test with AI Agents: Basic AI Workflow

When it comes to the entire testing process of software products, it is essential to mention that AI agentic workflows are an Agile process and go beyond simple handling of repetitive tasks. QA teams should define roles and decide what to test and which AI agent tool to use.

*This is a simple AI agent testing workflow example; a more sophisticated one was published in a LinkedIn post. Follow the link to check this AI agent testing workflow within the testomat.io test management software.

- Data-Gathering. To get started, AI test agents gather data from many different sources, such as APIs, user commands, requirements, past bugs, usage logs, external tools, and environmental feedback, to be trained on if needed. Our test management solution supports native integration with many of them.

- Collection & Coordination. This is the role of the test management system. Once all the relevant datasets have been collected, the AI-based agents can create relevant test cases to achieve good test coverage, including edge cases, while human testers should approve whether the generated test cases are relevant. Also, AI-powered assistants generate large volumes of unique test data and user data (email, name, contact number, address, etc.) that mirror real-world data. And when you integrate large language models (LLMs) and generative AI (GenAI), QA agents can rapidly simulate diverse real-world conditions and evaluate applications with greater intelligence and adaptability.

- Test Execution. AI-powered agents are deployed to autonomously run tests and simulate user interactions to test UI components, assessing functionality, usability, and application performance.

- Real-time Bug Detection & Reporting. AI-based assistants detect anomalies and frequent error points within the system, and they can predict bugs and automatically report defects to stakeholders. In addition, they can recognize repetitive flows and high-priority areas for testing.

- Test Analysis & Continuous Learning. As the software scales, the AI-powered assistants analyse data from user interactions and system updates to keep tests aligned with the application’s current state.

- Feedback and Improvement. QA team members need to regularly review AI-generated results to maintain software quality. Despite the power of artificial intelligence, only continuous monitoring and periodic checks of the agents’ work keep testing results accurate and reliable.
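The test data generation mentioned in the Collection & Coordination step can be pictured with a small seeded generator. This sketch uses only the Python standard library, and the name lists, phone format, and `example.com` addresses are made-up placeholders; an AI agent would produce far more varied and realistic records.

```python
import random


def generate_users(count: int, seed: int = 0) -> list[dict[str, str]]:
    """Create reproducible synthetic user records for test runs."""
    rng = random.Random(seed)  # seeded so that a failing run can be replayed
    first_names = ["Ana", "Ben", "Chloe", "Dmitri"]
    last_names = ["Ivanova", "Kim", "Lopez", "Novak"]
    users = []
    for index in range(count):
        name = f"{rng.choice(first_names)} {rng.choice(last_names)}"
        phone = "+1555" + "".join(str(rng.randint(0, 9)) for _ in range(7))
        users.append({
            "name": name,
            "email": f"user{index}@example.com",  # index keeps emails unique
            "phone": phone,
        })
    return users


for user in generate_users(3, seed=42):
    print(user)
```

Seeding the generator is a deliberate choice: when a generated record exposes a bug, the same data can be reproduced for the fix verification.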

Challenges of AI Agents in Testing

- As the software product becomes more complex, the computing resources needed for AI testing grow sharply.

- The absence of representative data makes testing ineffective: AI-based assistants may develop biases and fail to meet ethical standards.

- Using outdated APIs and poor documentation presents a huge challenge for the adoption of AI testing.

- AI-generated test cases and results require careful review before implementation.

- Because of the AI black box problem, it is difficult for QA teams to understand the logic behind test case failures.

Best Practices for AI Agent Testing Implementation

When choosing a test assistant, you need to find the tool that best fits your testing needs and your software development lifecycle. Take into account customization, integration, and user-friendliness. However, let us remind you:

Do not forget to combine AI and human efforts to balance efficiency and creativity!

Here are some other tips to help you find the right AI agent testing tool:

- You need to investigate how your organization is structured, what systems and tools you already use, and what testing tasks you have.

- You need to define what areas the AI agent testing framework will help you automate before scaling.

- You need to make sure that your team understands why they need a QA agent in test automation and knows how to use it effectively.

- You need to discover if an AI bot can be integrated with the platforms you already use.

- When planning your tool budget, you should consider free, subscription, or enterprise pricing.

- You need to check whether its customisation capabilities allow it to be tailored to your unique testing requirements.

Boost your capabilities with AI Agent Testing right now

Whether you’re a QA lead or a startup founder, applying AI Testing Agents will change the way you carry out testing. They are becoming essential tools for modern QA teams, which can learn from past data and predict failure points. They can also generate different tests, self-heal, and adapt to changes to achieve a higher level of excellence for software delivery in record time.

Are you ready? 👉 Contact us to learn more about how to use the power of Artificial Intelligence agents to create precise test cases and improve the quality and coverage of your software testing efforts.

Frequently asked questions

What is AI agent testing, and how is it different from traditional QA?

AI agent testing uses autonomous, intelligent agents to simulate real user behavior, explore edge cases, and validate system responses without predefined scripts. Unlike traditional QA, it adapts in real time, discovering bugs and usability issues that human testers or static automation might miss.

Can AI agents replace human testers entirely?

Not yet, and in many cases they shouldn’t. AI agents excel at repetitive, exploratory, and high-volume scenarios, freeing human testers to focus on critical thinking, creative problem-solving, and domain-specific insights. The best results come from combining AI agents with skilled QA professionals.

What types of tests can AI agents perform?

AI agents can handle functional, regression, performance, and even exploratory tests. They can navigate complex workflows, interact with APIs, validate UI changes, and detect anomalies in application behavior — all while learning from each run to improve future test coverage.