Traditionally, software testing was a manual, complex process that demanded a lot of time from teams. However, the advent of artificial intelligence has changed the way it is carried out.

AI-model-based systems now automate a variety of tasks, including test case generation, execution, and analysis, at high speed and scale.

To adopt AI-model testing, you need to effectively manage the massive amounts of data generated during the testing process. Furthermore, you need to train AI models using these vast datasets and enable the models to make accurate predictions and informed decisions throughout the testing lifecycle.

In practice, introducing AI models into a real business is not limited to data preparation, development, and training. Model quality also depends on the verification of datasets, testing, and deployment in a production environment. By adopting MLOps, QA teams can increase automation, improve AI model quality, and speed up model testing and deployment with the help of monitoring, validation, versioning, and retraining.

In this article, we cover the essentials of AI model testing and its life cycle, review popular tools and frameworks, and explore key strategies and testing methods.

What Is an AI Model?

When we talk about AI models, or artificial intelligence models, we mean mathematical and computational programs that are trained on datasets to detect specific patterns.

🔍 In Simple Terms:

An AI model is like a trained brain that learns from data and then uses that knowledge to solve real-world problems.

AI models follow the rules defined in their algorithms, which help them perform tasks ranging from producing simple automated responses to solving complex problems. AI models are best at:

✅ Analyzing datasets

✅ Finding patterns

✅ Making predictions

✅ Generating content

What is AI Model Testing?

AI Model Testing is the process of carefully examining an AI model to make sure it functions in accordance with design specifications and requirements. The model's actual performance, accuracy, and fairness are also evaluated during testing, along with the following questions:

- Are the predictions of the AI model accurate?

- Is the AI model reliable in practical circumstances?

- Does the AI model make decisions without bias and with strong security?

Google’s Gemini, OpenAI’s ChatGPT, Amazon’s Alexa, and Google Maps are among the most popular examples of ML applications that use AI-powered models.

Why Do We Need to Test AI Models?

Below are some important reasons why testing an AI-based model is essential:

- To make sure AI-models deliver unbiased results after changes or updates.

- To increase confidence in the model’s performance and avoid data misinterpretation and wrong recommendations.

- To reveal “why” the AI-based models make a particular decision and mitigate the potential negative results of wrong decisions.

- To confirm that the model continues to perform well in real-world conditions despite biases or inconsistencies within the training data.

- To deal with scenarios in which models have misaligned objectives.

AI, as well as APIs, is at the heart of many modern apps today.

AI Model Testing Methods

Applying a range of testing methods allows teams to make sure the model is accurate, reliable, fair, and ready for real-world use. Below, you can find more information about the different testing techniques:

- During dataset validation, teams check whether the data used for training and testing the AI-based model is correct and reliable to prevent learning the wrong things.

- In functional testing, teams verify if the artificial intelligence model performs the tasks correctly and delivers expected results.

- With integration testing, teams check how well the different components of the ML system work together, which matters most when several AI-based models with opposing goals are deployed at the same time.

- Thanks to explainability testing, teams can understand why the model is making specific predictions to make sure it isn’t relying on wrong or irrelevant patterns.

- During performance testing, teams measure how well the model performs on large, unseen datasets and how it behaves in various circumstances.

- With bias and fairness testing, teams examine bias in the machine learning models to prevent discriminatory behavior in sensitive applications.

- In security testing, teams detect gaps and vulnerabilities in their AI-models to make sure they are secure against malicious data manipulation.

- With regression testing, teams check that the model’s performance does not degrade after updates.

- When carrying out end-to-end testing, teams ensure the AI-based system works as expected once deployed.
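To make one of the methods above more concrete, here is a minimal sketch of a bias and fairness check. It assumes binary predictions (1 means a positive decision) and a single sensitive attribute, and it measures only the gap in positive-prediction rates between groups, which is one of several possible fairness measures. The function names and the sample data are hypothetical.

```python
from collections import defaultdict


def positive_rate_by_group(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = positive_rate_by_group(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model outputs (1 = approved) and a sensitive attribute per record.
preds = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = positive_rate_by_group(preds, groups)
gap = demographic_parity_gap(preds, groups)
print(rates, gap)
```

A large gap does not prove discrimination on its own, but it is a useful signal that the model deserves a closer look before release.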

AI Model Testing Life Cycle

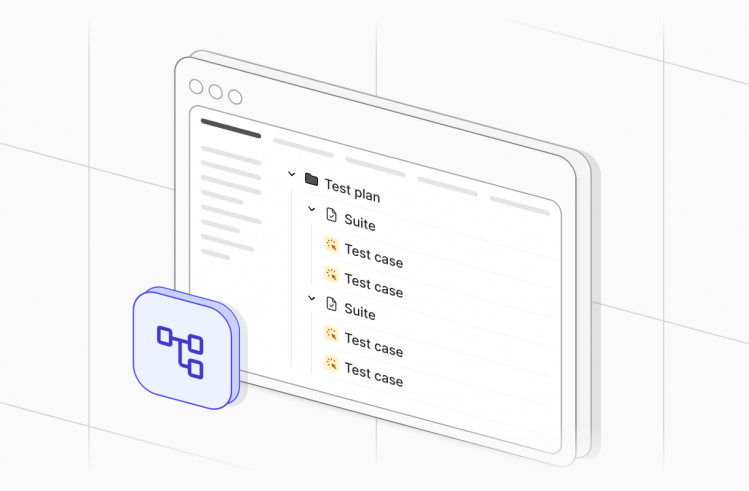

To get started, you need to identify the problem the AI-model solution will solve. Once the problem is clear, it is essential to gather detailed requirements and define specific goals for the project.

#1: Data Collection and Preparation

At this step, it is important to collect the datasets needed to train the AI-powered models. You need to make sure that they are clean, representative, and unbiased. Also, adhere to the applicable data protection laws so that data is collected with privacy and consent in mind. When collecting and preparing data, consider the following key components:

- Data governance policies, which promote standardized data collection, guarantee data quality, and maintain compliance with regulatory requirements.

- Data integration, which provides AI models with unified access to data.

- Data quality assurance, which treats data quality as a continuous process and involves data cleaning, deduplication, and validation.
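As an illustration of the cleaning, deduplication, and validation mentioned above, here is a minimal sketch. It assumes records are plain dictionaries and treats two records as duplicates when their required fields match. The field names and sample records are hypothetical.

```python
def clean_records(records, required_fields):
    """Drop records with missing required fields, then drop duplicates.

    Two records count as duplicates when all required fields are equal.
    Returns the cleaned list and the number of rejected (invalid) records.
    """
    seen = set()
    cleaned = []
    rejected = 0
    for record in records:
        # Validation: every required field must be present and non-empty.
        if any(record.get(field) in (None, "") for field in required_fields):
            rejected += 1
            continue
        # Deduplication: keep only the first record with a given key.
        key = tuple(record[field] for field in required_fields)
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(record)
    return cleaned, rejected


# Hypothetical labeled samples for a test-result classifier.
records = [
    {"text": "login works", "label": "pass"},
    {"text": "login works", "label": "pass"},      # duplicate
    {"text": "", "label": "fail"},                 # missing text
    {"text": "checkout fails", "label": None},     # missing label
    {"text": "checkout fails", "label": "fail"},
]

cleaned, rejected = clean_records(records, ["text", "label"])
print(len(cleaned), rejected)
```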

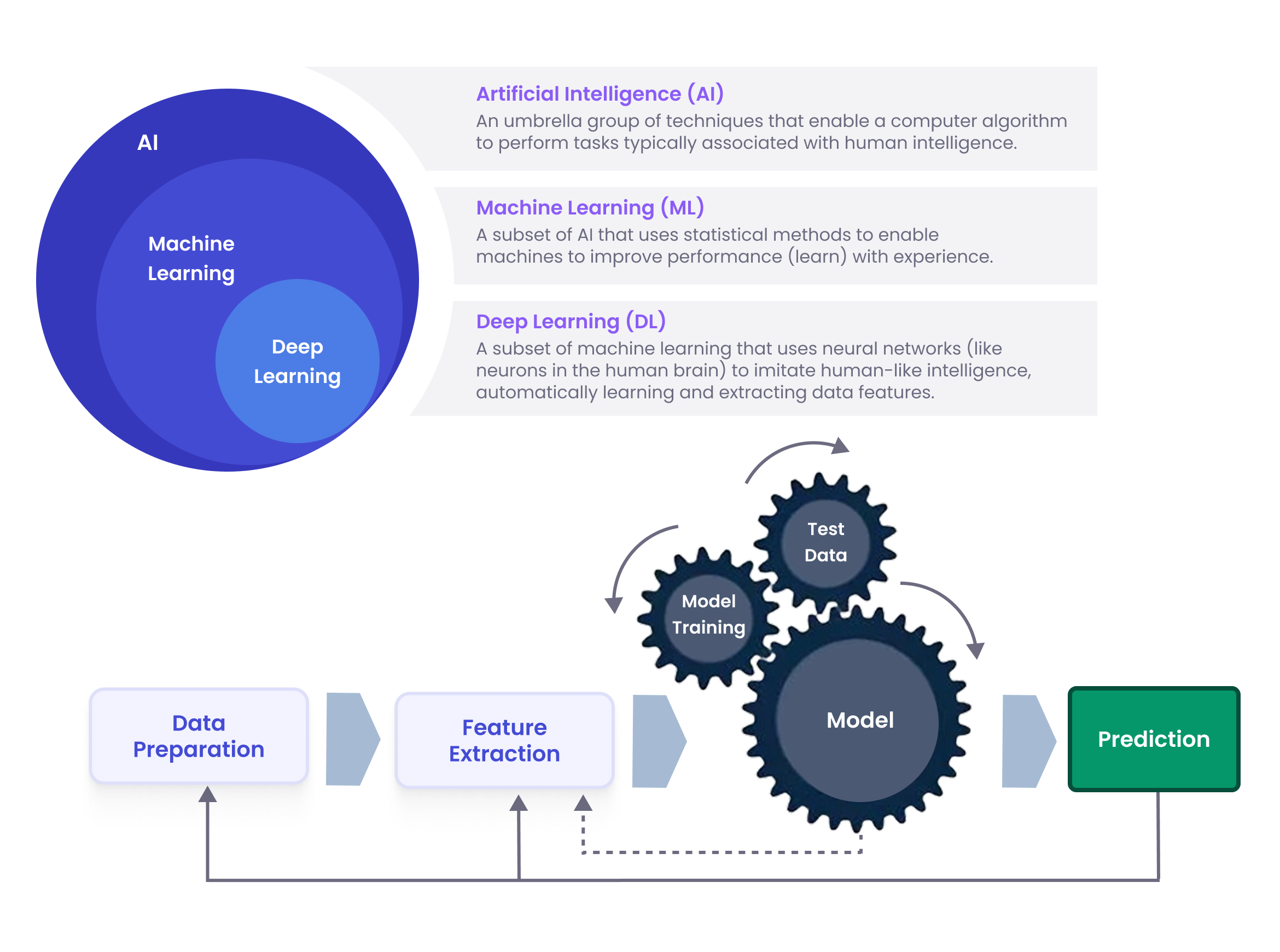

#2: Feature Engineering

At this step, you need to transform raw data into features: measurable data elements that are used for analysis and that accurately represent the underlying problem for the AI model. By choosing the most relevant pieces of data, you can create an effective feature set for model training and achieve more accurate predictions.
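Here is a small sketch of what turning raw data into features can look like. It assumes the raw data are test-run records with a duration, a status, and a list of changed files. These field names, and the choice of min-max scaling, are illustrative assumptions rather than a prescribed approach.

```python
def extract_features(run):
    """Turn one raw test-run record into numeric features."""
    return {
        "duration_s": run["duration_ms"] / 1000,
        "failed": 1 if run["status"] == "failed" else 0,
        "files_changed": len(run["changed_files"]),
    }


def min_max_scale(values):
    """Rescale values to the 0..1 range; constant inputs map to 0.0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical raw records from a CI system.
raw_runs = [
    {"duration_ms": 1200, "status": "failed", "changed_files": ["a.py", "b.py"]},
    {"duration_ms": 300, "status": "passed", "changed_files": ["c.py"]},
    {"duration_ms": 2100, "status": "passed", "changed_files": []},
]

features = [extract_features(run) for run in raw_runs]
scaled_durations = min_max_scale([f["duration_s"] for f in features])
print(features[0], scaled_durations)
```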

#3: Model Training

At this step, you need to train AI-powered models to perform the defined tasks and provide the most precise predictions. By choosing an appropriate algorithm and setting parameters, you can iteratively train the model with the processed data until it can correctly forecast outcomes using fresh data that it has never seen before. The choice of model and approach is critical and depends on the problem statement, data characteristics, and desired outcomes.
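The idea of training on one part of the data and checking the model on data it has never seen can be sketched with a deliberately tiny "model": a single threshold on one feature. This is a toy stand-in for a real algorithm, and the synthetic dataset, the split ratio, and the seed are all assumptions made for illustration.

```python
import random


def split(data, test_ratio=0.25, seed=42):
    """Shuffle reproducibly and split into training and hold-out sets."""
    rng = random.Random(seed)
    shuffled = data[:]
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_ratio))
    return shuffled[:cut], shuffled[cut:]


def accuracy(threshold, data):
    """Share of samples where 'x >= threshold' matches the label."""
    correct = sum(1 for x, y in data if (x >= threshold) == bool(y))
    return correct / len(data)


def train_threshold(train):
    """Pick the candidate threshold with the best training accuracy."""
    candidates = sorted({x for x, _ in train})
    return max(candidates, key=lambda t: accuracy(t, train))


# Synthetic data: the label is 1 when the feature value is at least 50.
data = [(x, 1 if x >= 50 else 0) for x in range(0, 100, 5)]

train, test = split(data)
threshold = train_threshold(train)
train_accuracy = accuracy(threshold, train)
test_accuracy = accuracy(threshold, test)   # measured on unseen data only
print(threshold, train_accuracy, test_accuracy)
```

The gap between training accuracy and hold-out accuracy is the number to watch: a model that looks perfect on training data can still miss cases that only appear in the hold-out set.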

#4: Model Testing

Before the testing step, it is highly recommended to invest in setting up pipelines that allow you to continuously evaluate the chosen model and determine the AI model’s capabilities against predefined performance metrics and real-world expectations. You need to not only examine accuracy but also understand the model’s implications – potential biases, ethical considerations, etc.
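One simple building block for such a pipeline is a quality gate that compares evaluation results with predefined minimum values. The sketch below assumes the metrics arrive as a dictionary. The metric names and threshold values are hypothetical and should come from your own requirements.

```python
def evaluate_gate(metrics, thresholds):
    """Return (name, value, minimum) for every metric below its minimum.

    A metric that is missing from the results also counts as a failure.
    """
    failures = []
    for name, minimum in thresholds.items():
        value = metrics.get(name)
        if value is None or value < minimum:
            failures.append((name, value, minimum))
    return failures


# Hypothetical targets and a hypothetical evaluation result.
thresholds = {"accuracy": 0.90, "recall": 0.85}
metrics = {"accuracy": 0.93, "recall": 0.81}

failures = evaluate_gate(metrics, thresholds)
gate_passed = not failures
print(gate_passed, failures)
```

In a CI pipeline, a failed gate would typically stop the model from being promoted to the next stage.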

#5: Deployment

After the AI model testing step, you can start the deployment of the model by transitioning from a controlled development environment to one that can provide valuable insights, predictions, or automation in practical scenarios. This step involves tasks like:

- Establishing methods for real-time data extraction and processing.

- Determining the storage needs for the data and the model’s results.

- Configuring APIs, testing tools, and environments to support model operations.

- Setting up cloud or on-premises hardware to facilitate the model’s performance.

- Creating pipelines for ongoing training, continuous deployment, and MLOps to scale the model for more use cases.

#6: Monitoring & Retraining

At the monitoring step, you need to provide ongoing performance evaluation, regular updates, and adaptations to meet evolving requirements and challenges. Done consistently, this helps make sure that the AI model functions effectively, reliably, and ethically in real-world conditions.
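One way to support this kind of ongoing evaluation is to compare the distribution of a feature in production with its distribution at training time. The sketch below uses the Population Stability Index (PSI) for that comparison. The bin edges, the sample values, and the 0.2 alert level (a commonly quoted rule of thumb) are assumptions to adjust for your own data.

```python
import math


def bin_shares(values, edges):
    """Share of values in each bin; the last bin includes its upper edge.

    Values outside the edges are not counted in any bin. A small floor
    keeps empty bins from causing log(0).
    """
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(counts)):
            is_last = i == len(counts) - 1
            upper_ok = v <= edges[i + 1] if is_last else v < edges[i + 1]
            if edges[i] <= v and upper_ok:
                counts[i] += 1
                break
    total = len(values)
    return [max(c / total, 1e-6) for c in counts]


def psi(expected, actual, edges):
    """Population Stability Index between two samples of one feature."""
    e = bin_shares(expected, edges)
    a = bin_shares(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values at training time and in production.
edges = [0.0, 0.25, 0.5, 0.75, 1.0]
training = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
production = [0.7, 0.8, 0.8, 0.9, 0.9, 0.9, 0.95, 1.0]

drift = psi(training, production, edges)
needs_review = drift > 0.2   # assumed alert level
print(round(drift, 3), needs_review)
```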

A Retrieval-Augmented Generation (RAG) approach combines your project data with generic industry knowledge. Keep in mind that data quality in model training and testing is crucial to avoid the pesticide paradox, where tests that are repeated unchanged stop revealing new defects.

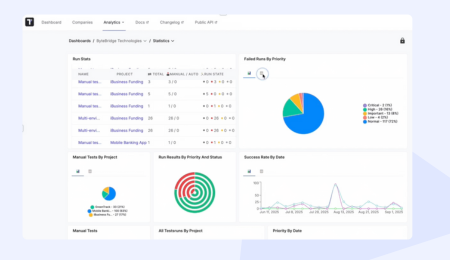

See how the AI Model Testing Life Cycle flows 👀

As we can see in the illustration below, the testing process involving AI is both sequential and cyclical. Developing and implementing the AI strategy is its major stage.

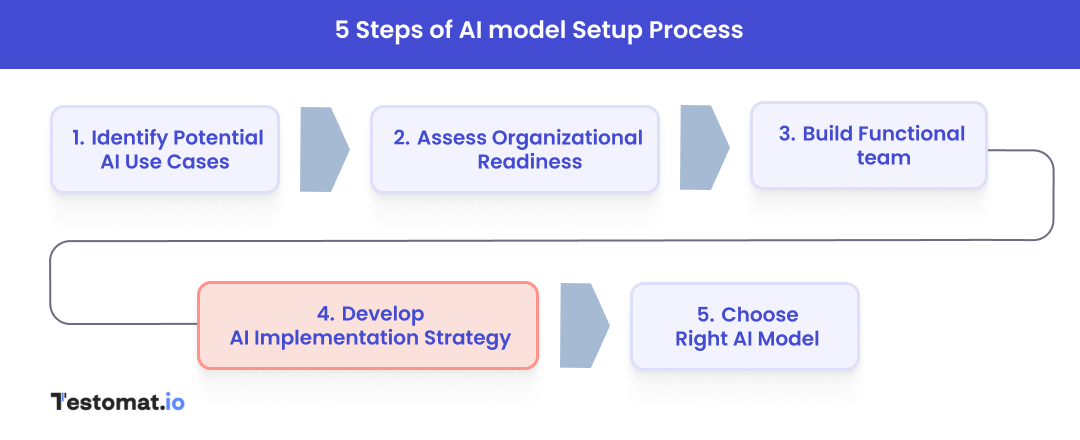

AI Testing Strategy: How to Use AI Models for Application Testing

AI is not a magic bullet, but a powerful co-pilot. By integrating AI models into your testing strategy, you can streamline test creation, enhance coverage, predict defects, and even reduce flaky results. These capabilities turn your test strategy into a smarter, faster, and more adaptive system and automate complex testing tasks.

#1: Identify Test Scope

At the very start, it is essential to define the goals you want to achieve with AI model testing. For example, you may need to automatically create new test scenarios, detect UI changes, or repair flaky test scripts.

#2: Select and Train AI Model

Based on your goals, you need to choose an appropriate artificial intelligence model that best meets your software project requirements.

- Generative artificial intelligence models and Large Language Models (LLMs) are used for creating diverse and intelligent test cases.

- Computer Vision Models are a good fit for visual regression testing and UI element recognition.

- Reinforcement Learning Agents are well suited to simulating complex user journeys.

- Anomaly Detection Algorithms are a good option for identifying deviations in system behavior (for instance, in logs or performance data).

- Predictive Models can be applied if there is a need to prioritize tests or predict defect likelihood.

Once the AI model has been selected, you need to make sure you have all the necessary data for training: past test cases, test coverage results, UI snapshots/screenshots, software requirements, design documents, and user behavior data. It is also important to verify that the trained model performs well.
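To show how past test results can feed test prioritization, here is a minimal sketch. It does not train a real predictive model. Instead, it assumes a simple frequency heuristic: a smoothed historical failure rate per test, used as a stand-in for predicted defect likelihood. The test names and history are hypothetical.

```python
def failure_scores(history):
    """Smoothed failure rate per test: (failures + 1) / (runs + 2).

    The smoothing keeps tests with very little history from getting
    an extreme score of exactly 0 or 1.
    """
    scores = {}
    for test_name, outcomes in history.items():
        failures = sum(1 for outcome in outcomes if outcome == "fail")
        scores[test_name] = (failures + 1) / (len(outcomes) + 2)
    return scores


def prioritize(history):
    """Order tests from most to least likely to fail."""
    scores = failure_scores(history)
    return sorted(scores, key=lambda name: scores[name], reverse=True)


# Hypothetical outcomes of the last four runs of each test.
history = {
    "test_login": ["pass", "pass", "pass", "pass"],
    "test_checkout": ["fail", "pass", "fail", "fail"],
    "test_search": ["pass", "fail", "pass", "pass"],
}

order = prioritize(history)
print(order)
```

A trained model would replace `failure_scores` with predictions based on richer signals, such as code changes or coverage data, while the ordering step stays the same.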

#3: Integrate AI into the Existing AI Model Testing Framework

Once the AI model is trained and validated, you should connect it with your current test automation tools and CI/CD pipelines. You can use custom testing platforms that offer pre-built integrations or automate the data flow between your application, test infrastructure, and the AI model. At this step, you can automate test case generation, test result analysis, and the detection of UI changes for visual regressions.

#4: Analyze and Refine the AI Model

At this step, it is essential to review and validate the AI-driven testing results. You need to review the test cases suggested by AI and investigate flagged anomalies, because human expertise remains crucial for decision-making and context. Based on human feedback, you can retrain and improve the artificial intelligence model and adjust its specific goals if the testing needs of your AI application change.

#5: Employ MLOps for Retraining and Versioning

If you run several models simultaneously, need a scalable infrastructure, or require frequent AI-model retraining, you can automate deployment and maintenance with MLOps. Without MLOps, even advanced models can lose their value over time due to data drift. By implementing MLOps, or DevOps for machine learning, you can:

- Automate model retraining, deployment, and monitoring processes.

- Streamline collaboration between data scientists, ML engineers, QA engineers, and IT teams.

- Guarantee version control for models, data, and experiments, and provide monitoring and retraining of the models.

- Support scalability and manage multiple models and datasets across environments, even as data and complexity grow.
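The version control and retraining points above can be sketched with a tiny in-memory registry. It assumes that parameters and datasets are JSON-serializable and identifies them by a content hash. The `ModelRegistry` class and its methods are hypothetical and do not represent the API of any particular MLOps tool.

```python
import hashlib
import json


def fingerprint(obj):
    """Short, stable SHA-256 hash of a JSON-serializable object."""
    payload = json.dumps(obj, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]


class ModelRegistry:
    """Keeps one entry per model version, linked to its data and parameters."""

    def __init__(self):
        self.versions = []

    def register(self, params, dataset, metrics):
        entry = {
            "version": len(self.versions) + 1,
            "params_hash": fingerprint(params),
            "data_hash": fingerprint(dataset),
            "metrics": metrics,
        }
        self.versions.append(entry)
        return entry

    def needs_retraining(self, dataset):
        """True when the data differs from what the latest version used."""
        if not self.versions:
            return True
        return self.versions[-1]["data_hash"] != fingerprint(dataset)


# Hypothetical training run followed by the arrival of new data.
registry = ModelRegistry()
dataset_v1 = [[0.1, 1], [0.4, 0]]
entry = registry.register({"learning_rate": 0.01}, dataset_v1, {"accuracy": 0.91})
dataset_v2 = dataset_v1 + [[0.9, 1]]

print(entry["version"], registry.needs_retraining(dataset_v2))
```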

From data processing and analysis to scalability, tracking, and auditing, MLOps, when done correctly, is a highly valuable approach that helps releases have a more significant impact on users and improves product quality.

Advantages of AI-based Model Testing

Here are the most important reasons why you should embrace AI model testing:

| Advantages of AI Model Testing | Business Opportunities |
| --- | --- |
| Informed decision making | |
| Improved operational efficiency | |
| Better customer experience | |
| Risk mitigation and compliance | |

Challenges to Testing AI-based Models

In testing, QA teams usually face the following challenges:

- Because they depend on data, AI models in testing are only as good as the data they are trained on and learn from. If the data is noisy, incomplete, or biased, the model will produce incorrect results and give wrong recommendations.

- Unlike traditional software, AI-based models do not always deliver identical outcomes for the same inputs and parameters, especially when training involves randomness, which makes results harder to predict or replicate.

- When facing edge cases, AI models can fail unexpectedly on unusual input data that they have not seen before.

- Complex AI-based models can behave like black boxes, which makes it hard to interpret how they make decisions or why they make a certain prediction.

- Testing for bias and fixing it can be difficult, because bias can be present in the training data or introduced through the algorithm’s design.

- Training complex models often requires specialized hardware and significant infrastructure investment.

- It can be difficult to set up clear and precise criteria for evaluating the correctness of AI models because of the complexity and nuance of their outputs.

- When testing AI models, you need to make sure they adhere strictly to legal and ethical considerations to avoid trouble after deployment.
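For the reproducibility challenge above, two common tactics are fixing random seeds and asserting on a tolerance band instead of an exact value. The sketch below illustrates both. The `train_and_score` function is a hypothetical stand-in for a real stochastic training run, and the target and tolerance values are assumptions.

```python
import random


def train_and_score(seed):
    """Stand-in for a stochastic training run that returns a validation score."""
    rng = random.Random(seed)
    return 0.90 + rng.uniform(-0.02, 0.02)


def within_tolerance(scores, target, tolerance):
    """True when every score is within the tolerance of the target."""
    return all(abs(score - target) <= tolerance for score in scores)


# Tactic 1: the same seed reproduces the same result exactly.
same_seed = train_and_score(7) == train_and_score(7)

# Tactic 2: across different seeds, check a tolerance band, not equality.
scores = [train_and_score(seed) for seed in range(5)]
stable = within_tolerance(scores, target=0.90, tolerance=0.03)

print(same_seed, stable)
```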

Software Testing Tools and AI Model Testing Frameworks

To conduct effective and efficient testing, you need to choose the appropriate tools and adhere to best practices. The testing process can be greatly improved with the appropriate AI testing tools, including the following:

| AI Model Testing Tool | What It Does |
| --- | --- |
| TensorFlow Data Validation (TFDV) | Helps teams identify anomalies in training and serving data and validate data in an ML pipeline. |
| DeepChecks | An open-source Python package designed to facilitate comprehensive testing and validation of machine learning models and data. It provides a wide array of built-in checks to identify potential issues related to model performance, data distribution, and data integrity. |
| LIME | A method for explaining the predictions of machine learning models. |
| CleverHans | A Python library that helps teams build more resilient ML models, with a focus on security against adversarial attacks. |
| Apache JMeter | A Java-based open-source load and performance testing tool that can be applied to the endpoints that serve AI models to detect performance anomalies. |
| Seldon Core | Gives you control over ML workflows, from deploying to maintaining AI models in production. |
| Keras | A high-level deep learning API that simplifies the process of building and training deep learning models. |

Best Practices for Testing AI Models

Here are some best practices to follow to conduct effective AI Model Testing in your organization:

- You need to prepare clean and unbiased data for testing and training AI models.

- You need to automate repeated test scenarios to accelerate the testing process.

- You need to track model performance and conduct fairness and bias tests to maintain its accuracy in real-world applications.

- You need to update models frequently with fresh data and make sure AI model actions can be traced back.

- You need to implement MLOps to automate data preprocessing, model training, deployment, and to keep models updated.

Bottom Line: Struggling with AI Model Testing?

Navigating AI model testing is a complex but rewarding journey. It requires defined goals, data quality, a well-thought-out MLOps approach, solid technical expertise, attention to ethical considerations from the start, and a strategic vision to shorten release cycles and iteratively improve AI products.

Whether you test one model or more, you should focus on automation, collaboration, and continuous monitoring to make sure your models remain accurate and safe. Contact testomat.io if you have any questions, and we can guide you through the AI model testing process to help you address your unique challenges.

Frequently asked questions

What is AI model testing and why is it important?

AI model testing is the process of evaluating an artificial intelligence model to ensure it performs accurately, fairly, and reliably. It’s crucial for identifying biases, preventing errors in real-world applications, and maintaining trust in AI systems.

How do you test the accuracy of an AI model?

First, check whether the model’s responses make sense. Second, measure the AI model’s accuracy using metrics like precision, recall, F1-score, and confusion matrices. Cross-validation and testing on unseen datasets help ensure the model generalizes well beyond its training data.
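As a quick illustration of the metrics named in this answer, here is a minimal sketch that derives precision, recall, and F1-score from a binary confusion matrix. It assumes labels are encoded as 0 and 1. The sample labels are hypothetical.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fp, fn, tn) for binary labels encoded as 0 and 1."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn


def precision_recall_f1(y_true, y_pred):
    """Compute precision, recall, and F1; undefined ratios fall back to 0.0."""
    tp, fp, fn, _ = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


# Hypothetical ground truth and model predictions.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]

counts = confusion_counts(y_true, y_pred)
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(counts, precision, recall, f1)
```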

What are the biggest challenges in AI model testing?

Common challenges include data bias, lack of explainability, overfitting, and changing real-world conditions (data drift). Ensuring fairness and interpretability in complex models like deep neural networks is also a major hurdle.