Modern software quality assurance (QA) processes demand speed, accuracy, and consistency. With the introduction of generative AI technologies such as ChatGPT, the potential for automating and enhancing QA tasks has grown exponentially. However, while ChatGPT and similar AI assistants offer general-purpose intelligence, specialized test management systems provide domain-specific solutions with deeply integrated AI workflows.

In this article, our seasoned Automation QA Engineer & AI Specialist, Vitalii Mykhailiuk, compares the two approaches. Let’s break down the differences between ChatGPT, the general-purpose AI, and Testomat.io, the test management powerhouse built for QA pros like you.

General-Purpose AI: ChatGPT Workflow

ChatGPT, as a conversational AI, excels in free-form reasoning, document analysis, and ideation. Here is how a QA engineer might use ChatGPT in a testing workflow.

Step 1: Requirement Analysis via Prompting

The typical workflow starts by copying raw requirements (PRDs, Jira tickets, or Confluence documentation) and pasting them into ChatGPT. A structured prompt might look like this:

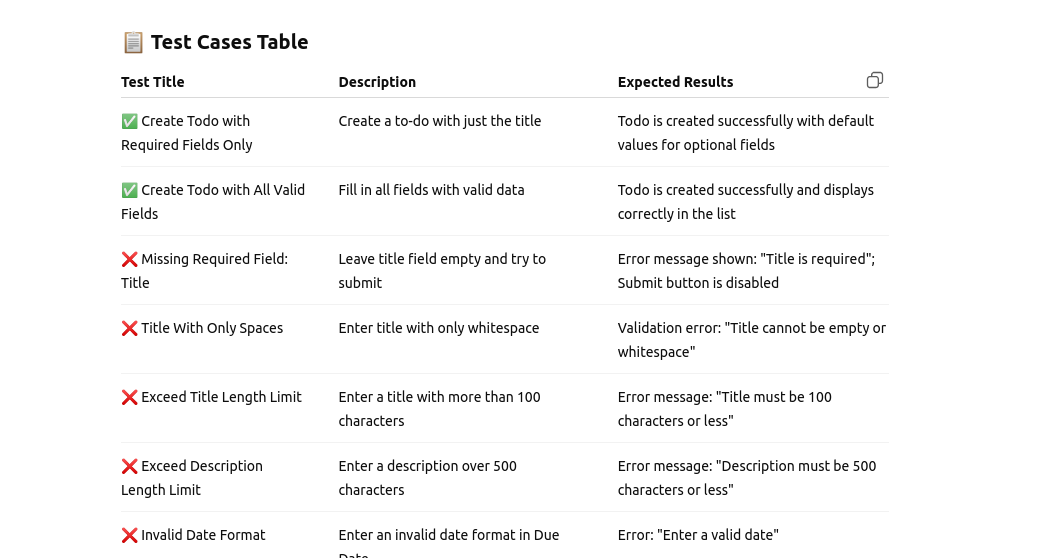

“Generate test cases for [“Todo list app – create todo feature”] based on the available Jira requirements. Include positive scenarios, negative scenarios, boundary conditions, and edge cases. Results should be in the table format with “Test Title”, “Description”, “Expected Results”.”

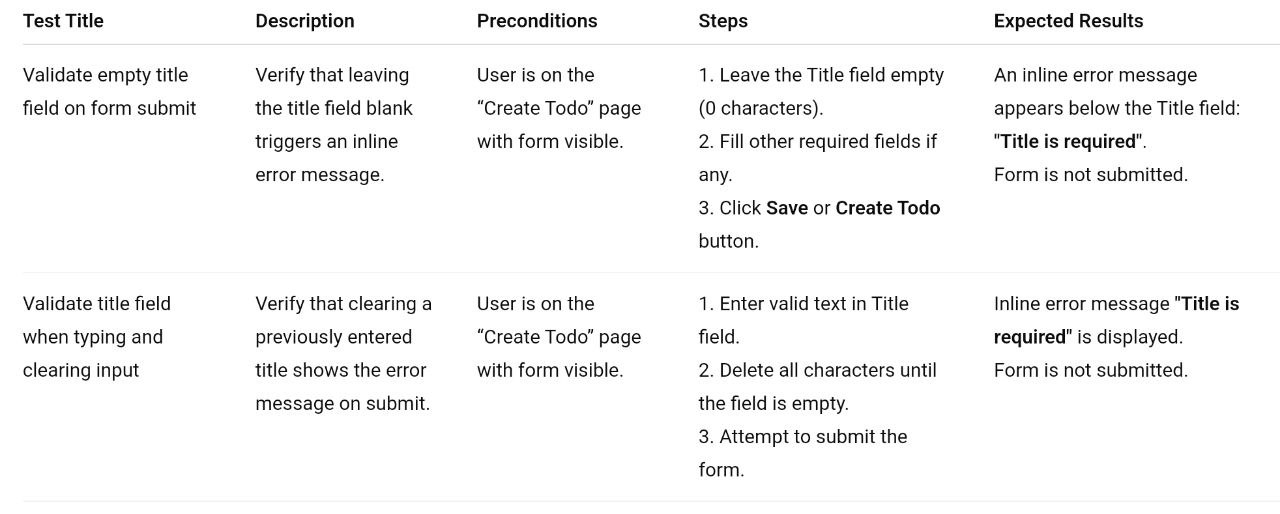

The answer we have received:

While effective, this method has technical limitations:

- Prompt engineering overhead: Writing effective prompts is a non-trivial task requiring prompt templating, prompt chaining, and result validation.

- Manual process: Copying and pasting requirements and project data into a well-structured prompt can take a lot of time.

- Data entry risk: Copy-pasting requirements may omit metadata, links, or cross-references.
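One way to cut the prompt engineering overhead is to keep the prompt as a reusable template instead of retyping it for each feature. The snippet below is a minimal sketch of that idea, not a recommended implementation: the template wording mirrors the example prompt above, and the names `PROMPT_TEMPLATE`, `DEFAULT_COLUMNS`, and `build_prompt` are hypothetical.

```python
from string import Template

# Hypothetical template; the wording mirrors the example prompt above.
PROMPT_TEMPLATE = Template(
    'Generate test cases for "$feature" based on the requirements below. '
    "Include positive scenarios, negative scenarios, boundary conditions, "
    "and edge cases. Results should be in table format with the columns: "
    "$columns.\n\nRequirements:\n$requirements"
)

# Column names taken from the example prompt.
DEFAULT_COLUMNS = ("Test Title", "Description", "Expected Results")


def build_prompt(feature: str, requirements: str, columns=DEFAULT_COLUMNS) -> str:
    """Fill the template, refusing empty input so gaps are caught early."""
    if not feature.strip() or not requirements.strip():
        raise ValueError("feature and requirements must be non-empty")
    column_list = ", ".join(f'"{c}"' for c in columns)
    return PROMPT_TEMPLATE.substitute(
        feature=feature.strip(),
        requirements=requirements.strip(),
        columns=column_list,
    )


if __name__ == "__main__":
    print(build_prompt("Todo list app - create todo feature", "Title is required."))
```

A template like this removes the retyping, but the requirements text still has to be pasted in by hand, so the data entry risk described above remains.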

Step 2: Test Case Generation

ChatGPT can generate well-structured test cases, but aligning them to internal templates (e.g., title, preconditions, steps, expected results, tags) requires additional prompting:

“Generate “TODO App – create todo feature” well-structured test case text for the title field validation, which has the following logic: if the field is empty (0 characters), an inline error message ‘Title is required’ should appear. Please produce test cases similar to existing ones, considering the validation rules and user interactions. # Steps Identify Valid Conditions: Ensure there are test cases where the title field is voluntarily left blank to trigger the ‘Title is required’ message. – Verify the appearance of the error message when the field is submitted empty. # Output Format Provide test cases in a structured table format with columns “Test Title”, “Description”, “Preconditions”, “Steps”, “Expected results”, “Test notes””

Challenges here include:

- Manual data injection: Test data variables must be manually defined and scoped.

- Template adherence: Slight variations in phrasing may break downstream parsing pipelines.
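To illustrate the template adherence problem, here is a rough sketch of a strict parser for the table that ChatGPT returns. It assumes the answer is a Markdown table with the six columns requested in the prompt above; the function names are hypothetical, and cells that contain escaped pipe characters are not handled.

```python
# Columns requested in the example prompt above.
REQUIRED_COLUMNS = [
    "Test Title", "Description", "Preconditions",
    "Steps", "Expected results", "Test notes",
]


def split_row(line: str) -> list[str]:
    """Split one Markdown table row into trimmed cell values."""
    return [cell.strip() for cell in line.strip().strip("|").split("|")]


def parse_table(markdown: str) -> list[dict[str, str]]:
    """Parse a Markdown table into row dicts, failing on template drift."""
    lines = [ln for ln in markdown.splitlines() if ln.strip().startswith("|")]
    if len(lines) < 3:
        raise ValueError("expected a header, a separator, and at least one row")
    header = split_row(lines[0])
    # Compare case-insensitively so "Expected Results" still matches.
    if [h.lower() for h in header] != [c.lower() for c in REQUIRED_COLUMNS]:
        raise ValueError(f"unexpected columns: {header}")
    rows = []
    for line in lines[2:]:  # lines[1] is the |---|---| separator row
        cells = split_row(line)
        if len(cells) != len(REQUIRED_COLUMNS):
            raise ValueError(f"row has {len(cells)} cells: {line!r}")
        rows.append(dict(zip(REQUIRED_COLUMNS, cells)))
    return rows
```

Even this small parser has to make decisions about casing and column order, which shows how easily a renamed or reordered column can break an import step.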

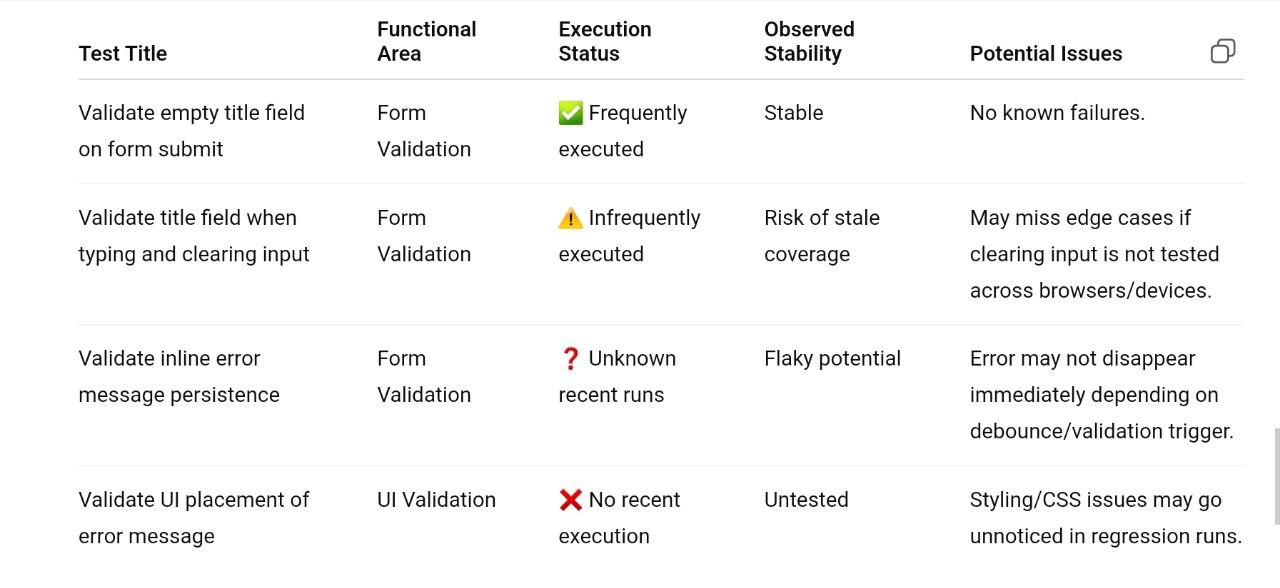

Step 3: Execution Metrics and Test Data Analysis

Analyzing past execution data via ChatGPT requires exporting results (CSV, JSON, or XML) from your test management system and then prompting ChatGPT to generate a stability report:

“Analyze “TODO App – create todo feature” feature Test Case data and generate a stability feature report:

1. Use available test labels to group tests by functional area or component.

2. Identify tests with possible consistent failures, flaky behavior, or no recent executions….”

Limitations:

- No direct integration: Requires manual data export/import.

- Trend history blind spot: Without access to past executions or historical baseline data, ChatGPT’s insights are limited to the immediate snapshot.

- No test entity awareness: It cannot infer relationships between test suites, execution runs, or code changes unless explicitly encoded.

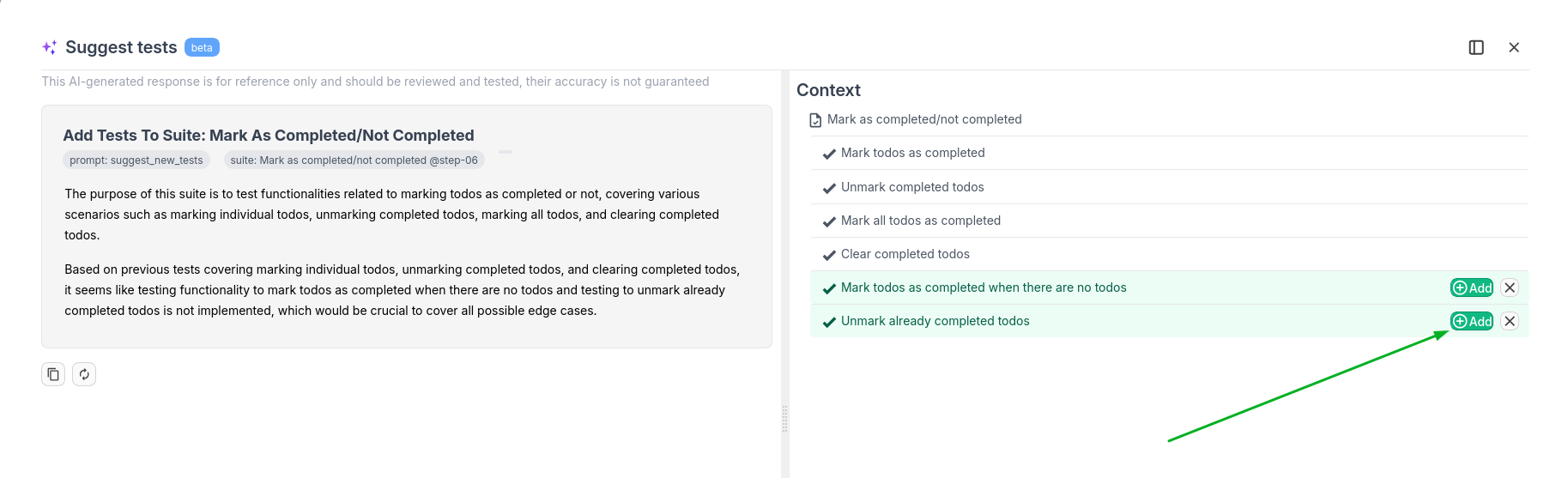

Built-in AI in Test Management Tools: Testomat.io Approach

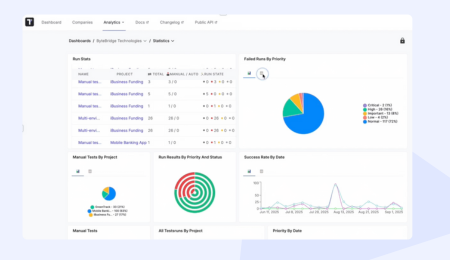

The best AI platforms for QA, like Testomat.io, integrate AI directly into the QA lifecycle. Unlike ChatGPT, they operate with full access to internal test data models, suite hierarchies, project metrics, and version history, which enables context-aware and sequence-aware automation.

Inner AI Integration – How Testomat.io Solves Existing QA Problems Technically

Instead of relying on prompt-based instructions, Testomat.io’s AI:

- Parses linked Jira stories or requirements from integrations.

- Automatically maps them to existing test cases or flags gaps.

- Suggests test suites based on requirement diffing using semantic embeddings.

- Respects user-defined rules and templates that serve as project-wide conventions.

The system does all of this under the hood, using project information to build detailed prompts that return the most accurate results possible.
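To give a feel for how requirements can be mapped to existing tests and how gaps can be flagged, here is a toy sketch. It is not Testomat.io's implementation: word counts stand in for real semantic embeddings, and the function names and the `0.3` threshold are assumptions chosen for illustration.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def map_requirements(requirements: list[str], test_titles: list[str], threshold: float = 0.3) -> dict:
    """Return {requirement: best matching test title, or None for a coverage gap}."""
    test_vectors = [(title, embed(title)) for title in test_titles]
    mapping = {}
    for req in requirements:
        req_vec = embed(req)
        best_title, best_score = None, 0.0
        for title, vec in test_vectors:
            score = cosine(req_vec, vec)
            if score > best_score:
                best_title, best_score = title, score
        # Below the (assumed) threshold, treat the requirement as uncovered.
        mapping[req] = best_title if best_score >= threshold else None
    return mapping
```

A real system would replace `embed` with vectors from an embedding model, so that a requirement and a test can match even when they share no words.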

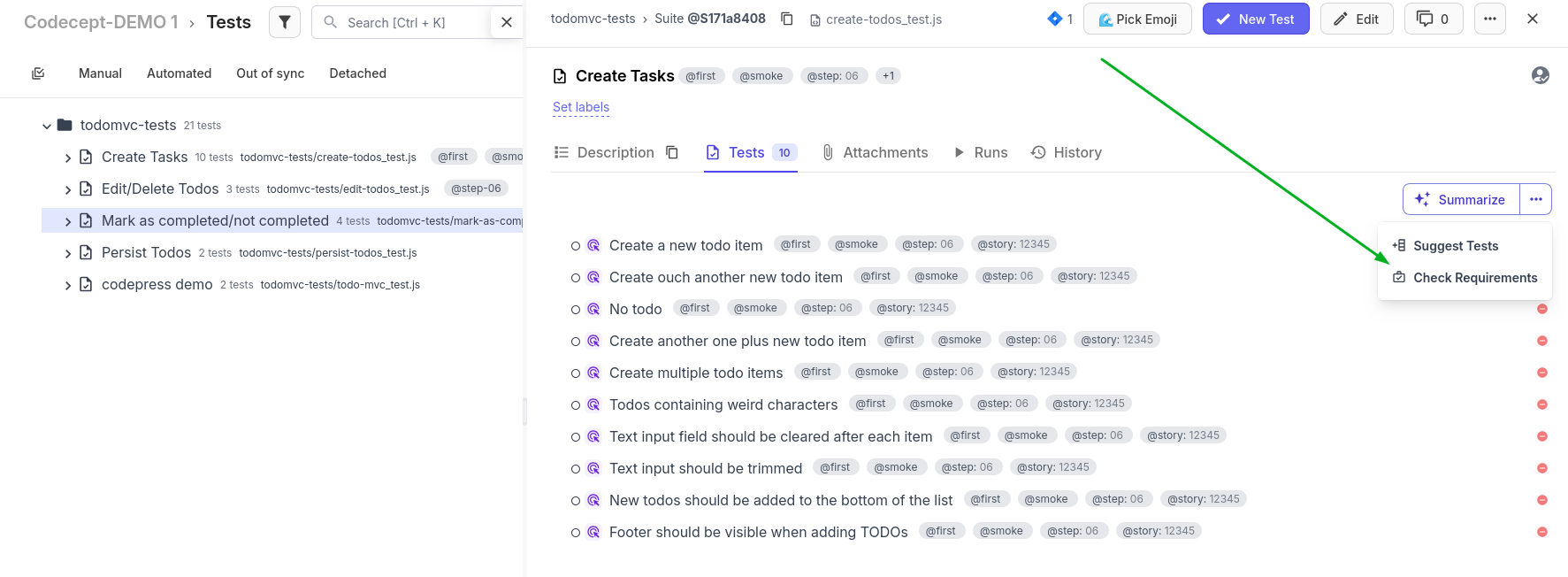

Auto-generation of Test Suites & Test Cases

With domain-aware generation:

- Testomat.io generates tests directly from requirement objects.

- The AI understands project templates, reusable steps, variables, and even tags.

- It ensures conformity to predefined schema and applies internal templates or rules.

Prompt Engineering & Data Preprocessing in Action

At Testomat.io, we believe true AI integration is about understanding your data. Our platform uses advanced prompt engineering grounded in your real test management data, including test templates, reusable components, and historical test coverage, to auto-generate test suites, test cases, and even test plan suggestions. This ensures accurate, schema-conforming test generation.

How does it work?

Thanks to our access to comprehensive test artifacts, project settings, and example cases, the system constructs structured prompt templates enriched with real data, functional expectations, and even team-specific conventions. These templates include rules, formatting expectations, and embedded examples, effectively guiding the AI to produce output that is production-ready.

If the response deviates from expected structure, a validation layer flags inconsistencies and requests regeneration or manual refinement to meet the required format, ensuring every generated test is useful and compliant by design.
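The validate-and-regenerate idea can be sketched in a few lines. This is an illustration under stated assumptions, not the actual Testomat.io validation layer: it assumes the model is asked to return one test case as JSON, `REQUIRED_FIELDS` is an invented schema, and `generate` is any callable that takes a prompt and returns the raw model output.

```python
import json
from typing import Callable

REQUIRED_FIELDS = ("title", "steps", "expected_result")  # assumed schema


def find_problems(raw: str) -> list[str]:
    """Return a list of schema problems; an empty list means the output is valid."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value must be an object"]
    return [f"missing or empty field: {f}" for f in REQUIRED_FIELDS if not data.get(f)]


def generate_with_validation(
    generate: Callable[[str], str], prompt: str, max_attempts: int = 3
) -> dict:
    """Call the generator until its output validates or attempts run out."""
    problems: list[str] = []
    for attempt in range(1, max_attempts + 1):
        # Feed the previous problems back so the next attempt can correct them.
        feedback = "\n\nFix these problems:\n" + "\n".join(problems) if problems else ""
        raw = generate(prompt + feedback)
        problems = find_problems(raw)
        if not problems:
            return {"status": "ok", "attempts": attempt, "test": json.loads(raw)}
    # Out of attempts: hand the case over for manual refinement.
    return {"status": "needs_manual_review", "attempts": max_attempts, "problems": problems}
```

Capping the number of attempts keeps a stubborn response from looping forever; anything that still fails is routed to a person, matching the "regeneration or manual refinement" path described above.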

ChatGPT prompt example

<task>Improve the current **test title** for clarity and technical tone.</task>

<context>

Test Title: `#{system.test.title}`

Test Suite:

"""

<%= system.suite.text %> (as an XML-based content section)

"""

…

</context>

<rules>

* Focus only on the **test title**, ignore implementation steps.

* Avoid phrases like "make it better".

…

</rules>

Conclusion

While ChatGPT provides a powerful, flexible assistant for ad-hoc QA tasks, it lacks deep integration with test management artifacts and historical context. In contrast, AI-powered platforms like Testomat.io embed intelligence into the workflow, enabling seamless automation, traceability, and data consistency across the QA lifecycle.

If your goal is full-lifecycle automation and continuous test optimization, an AI-native test management system offers a more scalable and technically robust solution than standalone AI chatbots.

Stay tuned for our next technical article on how Testomat.io’s internal AI pipeline is architected from data ingestion, through LLM integration, to real-time feedback loops.