Modern software testing is a complex and multifaceted stage in software development, aimed at ensuring the solution meets all functional and non-functional requirements. However, the real-world data sets leveraged in the process often fall short of the QA teams’ expectations. Such data is hard to acquire, should be curated to fit each use case to be then delivered to the test environment on demand, and needs anonymization to safeguard sensitive information. That is why testing personnel often resort to utilizing synthetic data produced by a data generator.

What is a Test Data Generator?

Initially, they are collected in a specialized document from which experts can retrieve them and utilize them during the testing process. The most common testing purposes where such data is leveraged are performance testing, acceptance testing, system testing, unit testing, and integration testing.

When leveraged by vetted professionals in the field during test execution, high-quality test data enables early bug detection and elimination. In addition, realistic and consistent data provides comprehensive traceability and transparency of test cases, allows for their prioritization and optimization, streamlines and accelerates software testing thanks to its reusability and test automation, and ultimately leads to increased ROI.

However, real-world data has grave limitations. Its volume and variety are often insufficient for covering all testing needs, its availability and provisioning are often inadequate, and efficient test data management (especially in flexible testing and development environments) can become a real headache for stakeholders.

The test data generation process can be dramatically boosted by leveraging test data generator tools. These solutions produce, store, and manage vast datasets known as synthetic data. Depending on the type of tool (random, goal-oriented, pathwise, or intelligent), generating test data can be either specific or random, while the data itself can be structured or unstructured.

Test data creation speed and quality can be dramatically boosted if the generator you utilize leverages automation and artificial intelligence. Machine learning and predictive analytics potential of this disruptive combination of technologies paves the way for automatic test data generation, allowing testing teams to minimize manual effort, increase test coverage, and ultimately enhance software quality.

How do test data-generating tools improve test results?

Zooming in on the Advantages of Test Data Generation

The most significant benefits of test data creation are:

- Comprehensive testing. Versatile and consistent data produced by generators enhances test coverage, furnishing a broader scope of conditions and edge cases that real data fails to cover.

- Accuracy and relevance. Thanks to the systematic nature of algorithms that generators rely on, the data they create is accurate and specific, tailored to fit the test case to a tee.

- Efficiency in testing. Fast automated data generation, high scalability potential, and adaptability to shifting application requirements become enormous efficiency boosters, allowing testing teams to save lots of time and effort during the process.

- Data privacy compliance. Although generated data possesses all the characteristics of real-world information, it remains synthetic. Thus, you can conduct realistic tests, avoiding the usage of sensitive data and staying compliant with data integrity and security standards.

What kind of data can you obtain by employing test data generators?

Data Types Produced by Generators

The four basic kinds of data you can receive from a test data generator include:

1. Valid Test Data

It consists of acceptable and correct inputs and conditions necessary for the proper functioning of a software piece. Examples of such data are valid email addresses that should be entered into a registration form of a login page to verify whether it accepts the appropriate formats.

2. Invalid Test Data

By contrast, this includes negative data containing incorrect or unexpected values that serve to expose weaknesses in error handling or data validation, often indicating security vulnerabilities. Here belong special characters that aren’t acceptable, out-of-range values, letters entered into a numeric-only field (like the one meant for a phone number), etc.

3. Boundary Test Data

Such values are used to check boundary conditions, that is, to determine whether the tested parameter is within or outside the expected range. For instance, you should test a form field by entering the maximum or minimum value length to confirm correct boundary handling.

4. Blank Test Data

In fact, this is the absence of data that helps reveal the system’s responses to missing inputs. For example, leaving a certain form field empty should trigger a user-friendly message prompting to fill it out.

Any data type can be entered into the system using different methods.

Test Data Preparation Techniques

When preparing test data sets to be fed into the system, you can follow the procedures outlined below.

- Manual data entry. Testers do it personally to ensure the accuracy of test data for specific scenarios.

- New data insertion. It happens when fresh data is added to the newly created database in line with the stipulated requirements. This method is usually utilized to compare the actual results of the executed test cases with the expected ones.

- Synthetic data generation. Here, test data generators step in. They are vital when you need large data sets containing multiple values.

- Data conversion. The data you possess is transformed into various structures and formats to check how well the tested solution handles different data inputs.

- Production data subsetting. You divide production data into subsets, each of which is employed for a specific test scenario. In this way, you maintain data relevance and save resources.

Since we speak here of synthetic data generation, let’s focus on this technique and find out how to select a test data generator that will bring you the most value.

Tips on Choosing the Right Test Data Generator

As a company well-versed in software testing, we can offer recommendations on selecting a suitable test data generation tool.

- Steer by the testing type. Each testing type (functional, performance, API, network, etc.) requires certain data sets to be leveraged. Moreover, you can resort to different approaches (like white box testing or black box testing) to validate the same functionality. Make sure the tool you consider enables the generation of data that suits your particular use cases.

- Mind data sensitivity and compliance. Normally, data generators create entirely new values, so data security and privacy concerns that might raise compliance issues are generally irrelevant here. However, if any real-world data is involved, you should employ data masking tools to anonymize sensitive information.

- Look for integrations with automation tools. If you opt for a generator that plays well with mainstream test automation platforms (Playwright, Cypress, and the like), you will ensure seamless test data provisioning, enhance testing speed, quality, and coverage, guarantee scalability and adaptability, and minimize maintenance burden.

What are the test generating tools that have won universal acclaim?

Top Test Data Generator Tools in 2025

1. Testomat.io

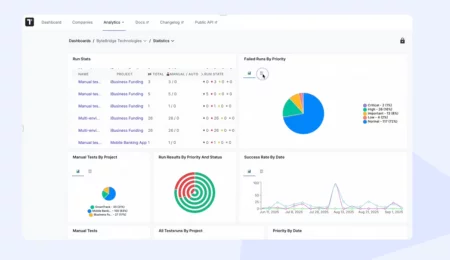

The platform’s greatest strength is its comprehensive nature. When you choose it, you receive a complete package of integrations that support end-to-end testing of software solutions of all sizes and complexity levels. The test data generation features are activated by configuring your framework, allowing you to define the parameters for the test data you wish to generate. These data are downloaded alongside your test into the test management system, where they enable you to validate the tests; you can do this within the tool. When working with complex and multi-layered data, you can utilise the test parameterisation feature and variables to optimise their processing. Naturally, a powerful AI agent also recommends generating some data to assist QA testers in their tasks.

Testomat.io’s pricing policy is quite affordable. The basic tier is free, whereas two upper tiers (Professional and Enterprise) are offered on a commercial basis. They provide access to high-end features and advanced options, such as AI-driven functionalities.

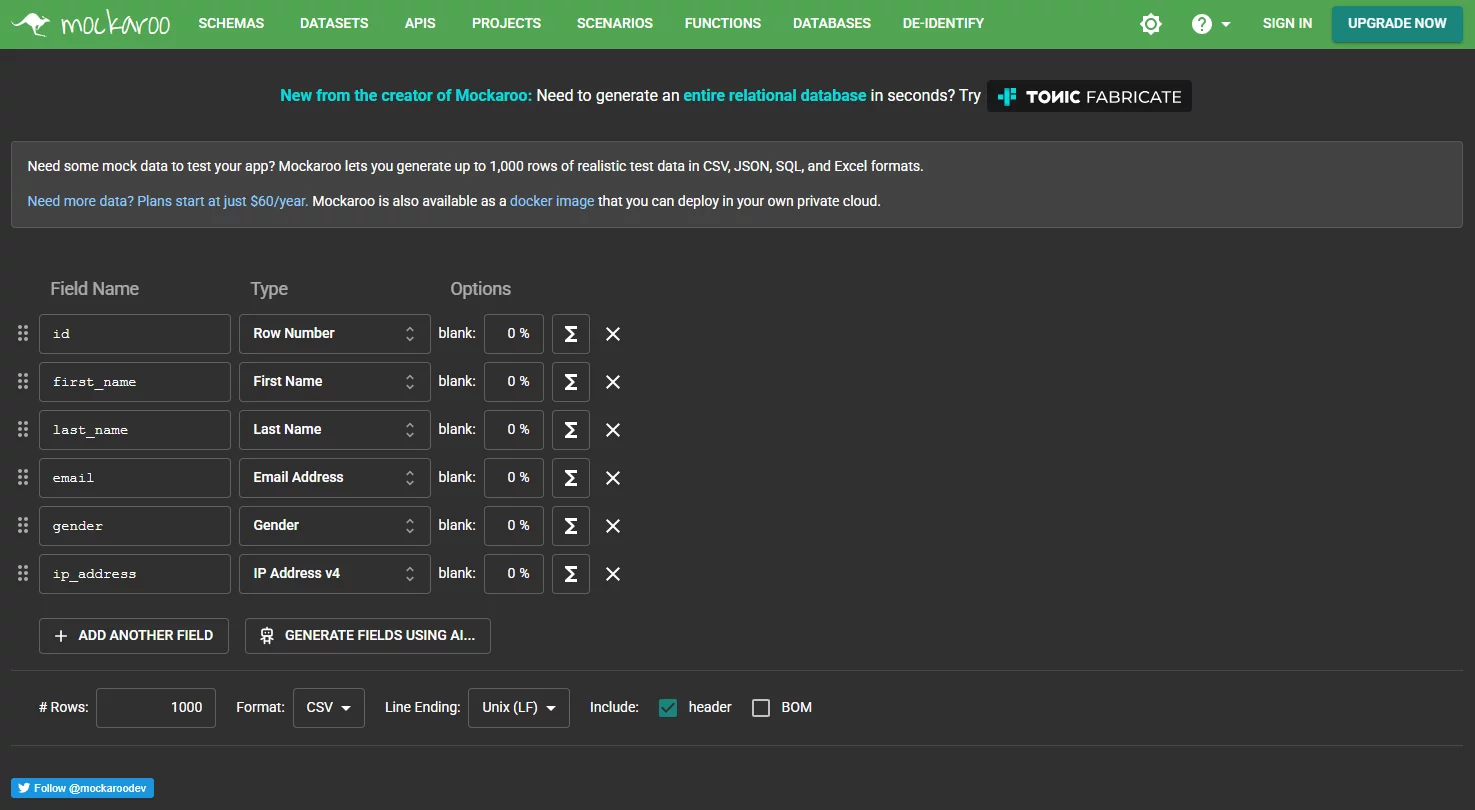

2. Mockaroo

In its core, this is an online Python test data generator but the formats of the test data it produces are much more versatile (JSON, SQL, XML, Excel, and others). It is appreciated for its ease of use, a broad gamut of realistic test data types (such as names, emails, addresses, credit card numbers, etc.), API mocking functionality, and automation potential.

However, its free tier is limited to generating under 1,000 rows of data, while the platform itself has no inbuilt data masking capabilities and displays subpar dynamic response efficiency, making it hardly suitable for testing enterprise-level systems.

3. DATPROF

In comparison with Mockaroo, DATPROF relies on design, which allows it to handle large data sets, mask all sensitive information (except for XML values), perform data subsetting, and enable DevOps integration.

As for drawbacks, the major of them is its high cost which may become forbidding for cash-strapped companies in case they leverage LLM-driven features. Plus, its support and documentation are rather substandard. On balance, DATPROF is way too complex for small teams and simple projects on lean budgets.

4. Datafaker

Living up to its name, this library operates as a Java test data generator of large sets of fake data. Such data closely resembles real-world names, addresses, and phone numbers and is tailored to suit the regions and countries where the tested software solution will be used. Since it is synthetic, you don’t need to anonymize values or worry about data security.

The cons of the resource encompass biases and anomalies that may creep into generated data, a steep learning curve to work with advanced features, and an inadequacy for employment in highly specific testing scenarios with complex data interdependencies.

5. IBM InfoSphere Optim

This platform is honed for full-cycle test data management. Additionally, it provides database test data generation capabilities, allowing for the creation of referentially intact test databases that play well with multiple servers, including DB2, Oracle, and Microsoft SQL Server, where sensitive content is thoroughly masked. Its major fortes are b customer support, plentiful documentation, and data provisioning automation capabilities..

The three main drawbacks of the tool are its steep learning curve, where users should master the platform’s setup and configuration, sophisticated integration with other tools, and a large price tag intended for enterprise-sized customers.

6. GenRocket

This is another big-ticket resource that takes some time to learn. Besides, its community size is rather limited, so you can hardly hope to get prompt help from them. Yet, all these minuses are offset by the tool’s extensive range of enterprise-level features (including generation of versatile data sets, data masking, subsetting, and test reusability), multiple database support, and API integration.

To maximize the efficiency of these tools, follow best practices for leveraging them.

Best Practices for Using Test Data Generator

When utilizing a test data generation tool, you should:

- Clearly define requirements before generation starts. You can’t hope to obtain relevant test data of high quality unless you meticulously specify the types, formats, and volumes of test data you need.

- Use realistic but anonymized data. The more generated data resembles distributions and characteristics of real-world data, the better the testing outcomes will be. When you use real production data in non-production environments, the employment of masking techniques is non-negotiable.

- Validate the generated data before utilizing it. You should make sure the data sets accurately represent real-world data and identify discrepancies, if any.

- Automate generation as part of CI/CD. Data generation should become an integral element of your CI/CD pipelines. In this way, you will ensure the novelty, consistency, and availability of test data for every testing cycle.

- Keep test data versioned and reusable. To do it, you should treat test data as code. To put t simply, you should establish version control, load it dynamically with an eye to the environment, and provide reliable data backup to guarantee consistency and rollback opportunities.ties.

- Document your data models. You should have all data generation rules, constraints, and dependencies properly documented. And don’t forget to provide a proper storage place for the records you have created.

That said, no matter how hard you try, you will still face some roadblocks when working with test data.

Addressing Common Challenges in Test Data Utilization

What are the typical test data generation bottlenecks you have to deal with?

- Handling large and complex data sets. When the amount of test data grows exponentially, the testing process is likely to slow down. The best recipe for mitigating such an effect is subsetting. You should extract only the data that is needed to execute this specific test.

- Guaranteeing data privacy and security. Synthetic data doesn’t require any protection measures. But if you leverage sensitive real-world information, it should be masked and anonymized.

- Maintaining high data quality. If the data is inaccurate, duplicate, or incomplete, testing will fall short of its goal, and the solution you are validating may not be completely functional or bug-free.

- Ensuring data consistency across multiple environments. The test data you utilize is juggled between development, staging, and production environments. You should go all lengths to keep it in sync.

- Managing data dependencies. Very often, data sets you leverage have intricate and even confusing relationships. To keep them transparent and ensure correct test runs, you should create a dependency map, continuously monitor and update all dependencies, and mitigate potential risks associated with them.

- Achieving scalability and performance. As the solution’s scope expands, you will require larger volumes of test data. The data generation process can be enhanced by parallelization of data creation, introducing incremental data generation, and implementing iterative refinement.

- Refreshing test data regularly. Given the fast-paced dynamics of the modern IT realm, you can’t hope to successfully reuse test data you generated a couple of months ago without any changes. You should keep it up-to-date with the shifting application logic and schema modifications.

- Maintaining test data over time. To be able to execute tests again and again, you should not only regularly update test data but also implement versioning and provide safe storage for it until it is retrieved.

- Managing complex data structures. Traditional test data may prove useless when validating solutions based on intricate schemas. Nested or relational data work better in such cases.

- Dealing with the pesticide paradox. Even the best tests, which utilize the most accurate and fresh data, tend to lose their effectiveness down the line and fail to pinpoint defects in new software. To combat this, you should update test cases regularly by rotating data within them, resorting to diverse testing techniques, and incorporating AI into your efforts.

Conclusion

Using real-world data for conducting software tests incurs numerous problems. You can eliminate them by leveraging synthetic test data created by test data generators. Such data is realistic, accurate, consistent, and can be obtained in huge volumes within a very short time. If you opt for a high-end test data generation tool, your testing pipeline will get a powerful impetus while your regulatory compliance remains rock-solid.