As the demand for digital solutions in today's IT-driven world grows rapidly, development teams find it increasingly hard to deliver websites, apps, e-commerce platforms, ERP systems, and other products in sufficient numbers. Yet every one of these products must meet a non-negotiable requirement: high software quality, validated through thorough testing. Given the sheer number of solutions to check and test cases to execute, traditional manual testing by human testers falls short of its ultimate purpose, which is to ensure high-quality software as a major deliverable. This is where AI tools come in handy. Their efficiency, agility, and accuracy make them vital in reshaping software development in general and test design, the pivotal element of the QA pipeline, in particular.

This article explains what AI-driven test design is, exposes the shortcomings of classical test design approaches, and shows how Testomat.io's AI testing tool turns the theoretical benefits of applying artificial intelligence to software testing into practical assets.

What is AI-Driven Test Design?

Quite naturally, AI-powered test design is an integral part of modern AI-driven software testing, a quality assurance approach that uses artificial intelligence and related technologies (machine learning, natural language processing, generative AI, and more) to streamline and facilitate the entire process.

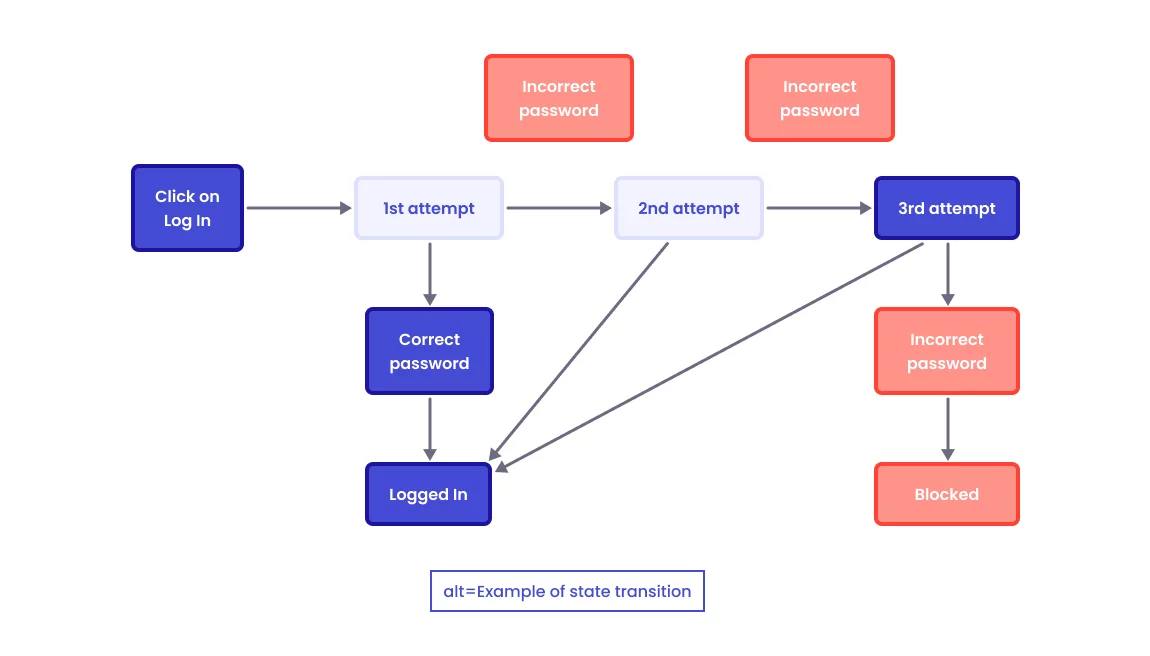

While designing test scenarios, AI testing tools analyze the solution's behavior and its historical defect data to pinpoint high-risk features, modules, and areas where testing should be especially thorough and rigorous. This lets the tool optimize test coverage by including edge cases that QA team members might overlook. Moreover, the NLP capabilities of AI testing solutions enable them to interpret requirements written in plain English and transform them into executable test cases.
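To make the idea of risk-based prioritization more concrete, here is a minimal Python sketch. It is not Testomat.io's algorithm: real AI tools learn from much richer signals, whereas this uses a hand-written linear heuristic. The `Module` type, the weights, and the sample data are all hypothetical assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Module:
    """Hypothetical per-module history; names and fields are illustrative only."""
    name: str
    past_defects: int    # defects recorded against this module historically
    recent_changes: int  # commits touching the module in the current cycle


def risk_score(m: Module, defect_weight: float = 2.0, change_weight: float = 1.0) -> float:
    # Assumed linear heuristic, not a trained model: modules that broke often
    # in the past and are changing now rank highest.
    return defect_weight * m.past_defects + change_weight * m.recent_changes


def prioritize(modules: list[Module]) -> list[str]:
    # Sort module names by descending risk so testing effort goes there first.
    return [m.name for m in sorted(modules, key=risk_score, reverse=True)]


if __name__ == "__main__":
    history = [
        Module("checkout", past_defects=12, recent_changes=5),
        Module("search", past_defects=3, recent_changes=9),
        Module("profile", past_defects=1, recent_changes=1),
    ]
    print(prioritize(history))  # ['checkout', 'search', 'profile']
```

A weighted sum keeps the ranking explainable to testers; an ML-based tool would replace `risk_score` with a model trained on defect history.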

AI-driven test design is closely interconnected with test generation and test management. Because the AI can interpret requirements and assess risk using predictive analytics, the tool can craft smarter and more relevant test cases. Once test scripts are generated, the solution handles their continuous maintenance and management: it updates their test data, prioritizes tests by risk, and analyzes test results to expose anomalies and identify potential issues. Plus, test management paves the way for continuous testing when AI-assisted QA is integrated into CI/CD pipelines.

However, when you adopt AI-driven test design software, remember that its primary role is to suggest. It can't replace testers or work without human oversight. AI-powered mechanisms and natural language processing tools provide data-driven insights and handle repetitive tasks as part of a test automation strategy, freeing people for exploratory testing, user interface and user experience evaluation, visual testing, and other areas where manual effort and human judgment are mission-critical. Humans also remain irreplaceable in such stages as strategy development, test planning, and result validation.

If manual testers are so crucial, why can’t they handle test design themselves?

Why Traditional Test Design Fails to Deliver

Conventional test design practices (even when reinforced with test automation tools) are rife with multiple bottlenecks.

- Sluggishness. Traditional test design relies on manual updates, which are slow to implement, don’t scale well, and create delayed feedback loops, turning the entire routine into an extremely time-consuming endeavor.

- Prone to human error. People make mistakes, which, in test design, lead to duplicate coverage, overlapping tests, excessive setup, missed defects, and other shortcomings that negatively impact test execution and the overall quality of QA results.

- Cumbersome test maintenance. Test scripts are tightly coupled to the tested solution's structure and interface. As a result, every UI or code change, however small, requires a corresponding script update, an activity that can account for up to 60% of the QA team's effort.

- Test data issues. Quite often, manual tests operate on stale, inaccurate, unrealistic, or incomplete data, or use unmasked production data. All of this hurts test results and escalates security and compliance risks.

- Lack of labor force. With many projects to complete, software companies struggle to assign enough testers to each, which significantly delays delivery.

- Development cycle silos. Reliance on old-school methods causes poor communication and collaboration between development, testing, and operations teams, which hurts the final outcome.

- Documentation fatigue. Excessive, time-consuming paperwork, symptomatic of the traditional test design routine, results in declining test script quality and more frequent test execution failures.

Clearly, traditional test design methods can't address these challenges. AI-powered test design tools are built to cope with them more efficiently.

How Testomat.io Implements AI-Driven Test Design

Let’s see how the AI-based testing tool by Testomat.io handles test design.

AI-assisted test creation

Test cases can be generated from user stories in Jira tickets. BDD feature file specifications are another source for test creation: AI mechanisms scan the BDD steps across the project and follow their patterns to craft new ones.
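The "scan BDD steps across the project" part can be illustrated without any AI. The Python sketch below only catalogs existing Gherkin steps so that frequently used ones can be reused in new scenarios; the generation itself is what Testomat.io's AI adds on top. The normalization rule (quoted strings and integers become `<param>`) and the sample feature files are assumptions for illustration.

```python
import re
from collections import Counter

# Gherkin step keywords; "And"/"But" continue the previous step type.
STEP_RE = re.compile(r"^\s*(Given|When|Then|And|But)\s+(.+?)\s*$")
# Assumed normalization: quoted literals and integers become placeholders, so
# 'I add "shoes" to the cart' and 'I add "hat" to the cart' count as one step.
PARAM_RE = re.compile(r'"[^"]*"|\b\d+\b')


def extract_steps(feature_text: str) -> list[str]:
    """Return the normalized step texts (without keywords) of one feature file."""
    steps = []
    for line in feature_text.splitlines():
        match = STEP_RE.match(line)
        if match:
            steps.append(PARAM_RE.sub("<param>", match.group(2)))
    return steps


def step_catalog(feature_files: dict[str, str]) -> Counter:
    # Count how often each normalized step occurs across all feature files;
    # the most common steps are the best candidates for reuse in new scenarios.
    catalog = Counter()
    for text in feature_files.values():
        catalog.update(extract_steps(text))
    return catalog


if __name__ == "__main__":
    files = {
        "cart.feature": 'Feature: Cart\n  Scenario: Add item\n'
                        '    Given I am logged in\n'
                        '    When I add "shoes" to the cart\n'
                        '    Then the cart shows 1 item\n',
        "wishlist.feature": 'Feature: Wishlist\n  Scenario: Move item\n'
                            '    Given I am logged in\n'
                            '    When I add "hat" to the cart\n',
    }
    for step, count in step_catalog(files).most_common():
        print(count, step)
```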

Pattern recognition

By detecting regularities in test cases, AI helps QA teams identify unlinked or unused tests, remove unnecessary or duplicate sets, simplify complex ones, and pinpoint untested logic or missing edge cases.
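As a rough illustration of how near-duplicate tests can be flagged, the following Python sketch compares normalized test steps with the standard library's `difflib.SequenceMatcher`. This plain string-similarity heuristic is a stand-in for the platform's AI, not a description of it; the 0.9 threshold and the sample tests are arbitrary assumptions.

```python
from difflib import SequenceMatcher
from itertools import combinations


def normalize(steps: list[str]) -> str:
    # Lowercase and collapse whitespace so formatting differences don't matter.
    return " ".join(" ".join(steps).lower().split())


def find_near_duplicates(
    tests: dict[str, list[str]], threshold: float = 0.9
) -> list[tuple[str, str, float]]:
    """Return (title_a, title_b, similarity) for every pair at or above `threshold`."""
    normalized = {title: normalize(steps) for title, steps in tests.items()}
    pairs = []
    for (a, text_a), (b, text_b) in combinations(normalized.items(), 2):
        ratio = SequenceMatcher(None, text_a, text_b).ratio()
        if ratio >= threshold:
            pairs.append((a, b, round(ratio, 2)))
    return pairs


if __name__ == "__main__":
    suite = {
        "Login with valid credentials": ["Open login page", "Enter valid email and password", "Click Sign in"],
        "Valid user can log in": ["open login page", "enter valid email and  password", "click sign in"],
        "Search by keyword": ["Open home page", "Type 'shoes' in search", "Press Enter"],
    }
    print(find_near_duplicates(suite))
```

Pairwise comparison is quadratic in the number of tests, which is fine for a sketch; large suites would need indexing or embeddings instead.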

Maintenance automation

After code is committed, AI suggests corresponding changes, and its integration with automation tools enables testers to update the affected tests accordingly.

Moreover, Testomat.io can be seamlessly integrated into CI/CD pipelines set up for Agile software development and plays well with GitHub and GitLab, as well as various automation frameworks.

Naturally, such an approach to test design brings substantial benefits.

Benefits of Using Testomat.io for QA Teams and Developers

No matter whether you use artificial intelligence to design tests for regression testing, performance testing, or any other type of QA, Testomat.io's AI test orchestration delivers:

- Faster test design cycles. Automation of repetitive jobs, accelerated regression cycles, and test data generation enable testers to quickly pinpoint issues, swiftly perform root-cause analysis, and complete other QA steps with high productivity.

- Improved coverage and fewer redundant cases. By analyzing vast amounts of data, AI mechanisms can detect both duplicate tests and coverage gaps. They then generate more diverse and complete test scripts that go beyond the most obvious cases and substantially expand test coverage.

- Context-rich reporting for debugging. The AI-fueled test reporting feature integrates multiple data sources and pools diverse background information, helping teams understand the underlying processes and making debugging faster and more accurate.

- Time savings for both testers and developers. AI-powered automation speeds up QA workflows and lets all project team members fulfill their responsibilities more quickly, significantly reducing the solution's time-to-market.

All this is theory and assumptions, you might say. But how does it work in real life?

Smarter Test Creation in Action: A Real-World Example

A medium-size e-commerce organization tasked its QA team, consisting of six engineers, with optimizing the test design routine. What was wrong with it?

By this time, the software product had become quite mature, and manual testing yielded poor outcomes: test coverage was only 46%. Under such conditions, the only way to make test design more efficient was to leverage automation tools, aiming to raise coverage to 95% or higher. However, ditching manual testing entirely wasn't an option, as critical user paths involving accessibility, visual UX flows, or exceptional edge cases still required some human oversight and validation.

Yet once automated testing was rolled out, another problem became apparent: flaky tests, that is, scripts that failed intermittently without any code change, came in droves, a problem the manually executed test cases had never shown. The team reacted promptly by pinpointing root causes, introducing retry mechanisms, and stabilizing the automation pipeline. These measures cut the share of flaky tests 3.6-fold, from 18% to 5%.
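The case study does not say how flakiness was measured. One common definition treats a test as flaky if it both passed and failed on the same code revision. The Python sketch below computes a flaky-test rate on that assumption and adds a simple retry wrapper like the ones the team introduced; the function names and the run-record format are hypothetical.

```python
from collections import defaultdict
from collections.abc import Callable


def flaky_rate(runs: list[tuple[str, str, bool]]) -> float:
    """runs: (test_id, commit_sha, passed) records.

    Assumed definition: a test is flaky if it both passed and failed on the
    same commit, i.e. its outcome changed without a code change.
    """
    outcomes: dict[tuple[str, str], set[bool]] = defaultdict(set)
    for test_id, commit, passed in runs:
        outcomes[(test_id, commit)].add(passed)
    all_tests = {test_id for test_id, _, _ in runs}
    flaky = {test_id for (test_id, _), seen in outcomes.items() if len(seen) == 2}
    return len(flaky) / len(all_tests) if all_tests else 0.0


def run_with_retries(test: Callable[[], bool], max_attempts: int = 3) -> bool:
    # Re-run a failing test up to max_attempts times. Retries mask flakiness
    # rather than fix it, so they are a stopgap while root causes are investigated.
    for _ in range(max_attempts):
        if test():
            return True
    return False


if __name__ == "__main__":
    history = [
        ("t1", "abc", True), ("t1", "abc", False),  # same commit, both outcomes: flaky
        ("t2", "abc", True), ("t2", "abc", True),   # stable
        ("t3", "abc", False), ("t3", "def", True),  # outcome changed with the code: not flaky
    ]
    print(f"flaky rate: {flaky_rate(history):.0%}")
```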

As automation progressed sprint by sprint, test coverage climbed to 82%, thanks to sound design, neat organization, and clear descriptions of test cases. Manual tests were kept for vital regression areas and made up 16% of the total, which brought the automated-to-manual ratio to a healthy 5:1 (roughly 84% to 16%). They were organized into feature folders and meticulously documented, yielding straightforward, transparent, and traceable test cases.

What does Testomat.io have to do with it? The answer is simple: all automated tests were generated according to the Page Object Model (POM) pattern and linked directly to the platform's manual test cases and Jira requirements. Once Testomat.io's AI agents suggested a piece of code, testers inserted it into the testing framework and refined it. The completed autotests were then uploaded to the system, where they ran and their results could be assessed through dedicated metrics.
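For readers unfamiliar with the Page Object Model, the Python sketch below shows the core idea: selectors and page actions live in one class, and tests call page-level methods instead of raw selectors. It is not code generated by Testomat.io. The `Driver` interface, the selectors, and the `FakeDriver` stand-in (used so the example runs without a browser) are hypothetical; a real suite would wrap Selenium, Playwright, or a similar tool.

```python
from typing import Protocol


class Driver(Protocol):
    """Hypothetical minimal browser-driver interface."""
    def fill(self, selector: str, value: str) -> None: ...
    def click(self, selector: str) -> None: ...
    def text(self, selector: str) -> str: ...


class LoginPage:
    # Selectors live in one place: when the UI changes, only this class
    # needs updating, not every test that logs in.
    EMAIL = "#email"
    PASSWORD = "#password"
    SUBMIT = "button[type=submit]"
    GREETING = ".greeting"

    def __init__(self, driver: Driver) -> None:
        self.driver = driver

    def log_in(self, email: str, password: str) -> "LoginPage":
        self.driver.fill(self.EMAIL, email)
        self.driver.fill(self.PASSWORD, password)
        self.driver.click(self.SUBMIT)
        return self

    def greeting(self) -> str:
        return self.driver.text(self.GREETING)


class FakeDriver:
    """In-memory stand-in so the example runs without a browser."""
    def __init__(self) -> None:
        self.fields: dict[str, str] = {}
        self.clicked: list[str] = []

    def fill(self, selector: str, value: str) -> None:
        self.fields[selector] = value

    def click(self, selector: str) -> None:
        self.clicked.append(selector)

    def text(self, selector: str) -> str:
        # Pretend the app greets the user once the login form is submitted.
        if LoginPage.SUBMIT in self.clicked:
            return f"Hello, {self.fields.get(LoginPage.EMAIL, '')}"
        return ""


def test_login_shows_greeting() -> None:
    # The test speaks in page-level actions, never in raw selectors.
    page = LoginPage(FakeDriver()).log_in("ann@example.com", "secret")
    assert page.greeting() == "Hello, ann@example.com"


if __name__ == "__main__":
    test_login_shows_greeting()
    print("ok")
```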

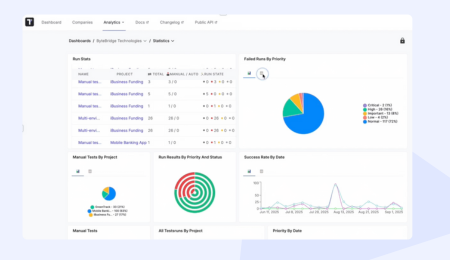

Finally, the QA experts applied the AI-driven analytics built into our platform, which allowed them to:

- Detect missing or duplicate scenarios;

- Examine unstable test executions and come up with improvements;

- Prioritize regression tests by risk;

- Pinpoint potential areas to which automation could be extended.

How were all these improvements reflected in basic test quality and efficiency metrics? After four weeks of using Testomat.io, the company reported that the average time to write a test decreased by 76%, from 25 to 6 minutes, while the number of new test cases created weekly increased by 183% (from 30 to 85). Test coverage rose by 26 percentage points (from 56% to 82%). The share of flaky tests fell from 18% to 5%, a 72% reduction, and regression suite maintenance dropped from 10 to 3 hours per sprint, a 70% time saving for QA engineers.
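The reported percentages follow directly from the before/after figures in the case study. This short Python sketch recomputes them; note that coverage is itself a percentage, so its change is best expressed in percentage points rather than as a relative change.

```python
def pct_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100


# (before, after) pairs as reported in the case study.
metrics = {
    "minutes to write a test": (25, 6),
    "new test cases per week": (30, 85),
    "flaky tests, %": (18, 5),
    "regression maintenance, hours per sprint": (10, 3),
}

for name, (before, after) in metrics.items():
    print(f"{name}: {pct_change(before, after):+.1f}%")
# minutes to write a test: -76.0%
# new test cases per week: +183.3%
# flaky tests, %: -72.2%
# regression maintenance, hours per sprint: -70.0%

# Coverage is already a percentage, so report the absolute difference.
coverage_before, coverage_after = 56, 82
print(f"coverage: {coverage_after - coverage_before:+d} percentage points")  # +26
```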

AI-fueled test automation excelled at making test case design consistent, efficient, and scalable, while manual testing still safeguarded the team's work by focusing on high-risk features and validating real user behavior.

Testomat.io’s AI-fueled platform proved highly efficient at handling major test design challenges. But today, the market is full of various AI-powered testing solutions. What gives Testomat.io the edge over its competitors?

Why Testomat.io Is the Right Platform for AI-Driven Test Design

There are several characteristics of the AI-based test design suite by Testomat.io that can be tipping factors in your vendor and tool selection process.

- The platform can be used for any tests. You can design test cases for all types of tests (functional and non-functional) in both manual and automated modes.

- Human control remains central. AI serves as a highly competent assistant while the decision-making, planning, implementation, and management responsibilities still rest with human personnel, which lets QA engineers remain in the driver’s seat.

- Absence of vendor lock-in. The platform is framework-agnostic and plays well with multiple third-party tools, making it very flexible and widely applicable.

- Built for CI/CD environments. Testomat.io is well suited to QA teams that rely on Agile practices. The platform integrates with most mainstream CI/CD tools (GitHub, GitLab, Azure DevOps, Jenkins, CircleCI, and Bamboo), supporting continuous test execution, monitoring, and management.

Next Steps

As the volume of QA procedures to be performed skyrockets, manual test case creation and execution increasingly fail to meet experts' requirements. The need for comprehensive and fast software testing brings AI-powered tools to the forefront. The AI-driven test design platform by Testomat.io can radically accelerate and simplify the testing pipeline, freeing time and effort for more meaningful and creative tasks that require human oversight. Start your free trial and experience intelligent test management today!