If someone asked me to name the three whales holding up the world of software testing, one of them would definitely be the test case, the foundation stone of all testing documentation 🐳

Now, I can already hear two camps forming:

- “We’ve never had test cases on our project, and everything’s just fine!”

- “The more test cases we have, the better our testing!”

And honestly — both sides have a point. But I’m not here to start a holy war in the QA world. Instead, let’s calmly figure out what makes a test case good, clear, and ready for real use.

What Is a Test Case?

Before diving deep, let’s remind ourselves what a test case actually is.

“Test cases help testers verify a software product’s functionality systematically. Each test case describes a specific scenario, the steps to execute it, and the expected results.”

Sounds simple, right? But writing a good test case – one your whole QA team can actually understand – that’s an art.

The challenge is writing them in a way that survives team changes, onboarding cycles, and the test of time. A test case written six months ago should still make perfect sense today, even to someone who wasn’t on the project back then.

What to Focus on When Reviewing Test Case Quality

When someone asks me to review their test cases, these are the key “Quality Dimensions” I always look at first.

1. Title Clarity

Is the title understandable even to someone who doesn’t know the project context? A good title should tell you what’s being tested at a glance, no decoder ring required.

Bad: “Test #47”

Good: “Verify user can complete checkout with valid payment card”

2. Preconditions

Are they listed clearly, especially for complex or multi-step tests? Missing preconditions are one of the biggest reasons test cases fail in the hands of new team members.

3. Steps Defined

Are the steps structured and described well enough for another tester to repeat them easily? Think of it like a recipe: if someone can’t follow it without calling you, it needs improvement.

4. Expected Result

Do we clearly know what success looks like at the end? Vague expected results like “system works correctly” won’t cut it. Be specific.

5. Reusability

Could a new team member pick up this test and understand it without extra help? This is the ultimate litmus test for quality.

When these aspects are well covered, you can confidently call your test case high quality: written in an accessible way, reusable across contexts, and easy to maintain in the future.
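To make the five dimensions more concrete, here is a minimal Python sketch of a rule-based checklist. The `ManualTestCase` fields, the `review` function, the vague-phrase list, and the four-word title threshold are all hypothetical choices for illustration, not part of any tool. String heuristics like these catch obvious problems such as “Test #47”, but not subtle ambiguity.

```python
from dataclasses import dataclass, field

# Hypothetical phrases treated as too vague to count as an expected result.
VAGUE_RESULTS = {"system works correctly", "works as expected", "no errors", "ok"}
# Hypothetical phrases that point at context living outside the test case.
CONTEXT_REFS = ("same as above", "as discussed", "see previous")


@dataclass
class ManualTestCase:
    title: str
    preconditions: list[str] = field(default_factory=list)
    steps: list[str] = field(default_factory=list)
    expected_result: str = ""


def review(tc: ManualTestCase) -> dict[str, bool]:
    """Return a pass/fail flag per quality dimension using simple heuristics."""
    # Title Clarity: a few descriptive words rather than a bare ID like "Test #47".
    title_ok = len(tc.title.split()) >= 4
    preconditions_ok = bool(tc.preconditions)
    # Steps Defined: at least one step and no blank steps.
    steps_ok = bool(tc.steps) and all(step.strip() for step in tc.steps)
    # Expected Result: present and not a known vague phrase.
    expected = tc.expected_result.strip().lower().rstrip(".")
    expected_ok = bool(expected) and expected not in VAGUE_RESULTS
    # Reusability (crude proxy): no step leans on context the reader doesn't have.
    reusable_ok = steps_ok and not any(
        ref in step.lower() for step in tc.steps for ref in CONTEXT_REFS
    )
    return {
        "title_clarity": title_ok,
        "preconditions": preconditions_ok,
        "steps_defined": steps_ok,
        "expected_result": expected_ok,
        "reusability": reusable_ok,
    }
```

A check like this is only a first filter; deciding whether a step is genuinely unambiguous still takes a human reviewer or an AI assistant.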

This kind of review becomes especially valuable when working on large projects with multiple testers. It also helps QA Leads and Managers monitor consistency and control the quality of testing documentation across the team.

The Hidden Cost of Poor Test Cases

Every time a tester has to stop and ask “what does this step mean?” or “what environment was this written for?”, that’s lost productivity. Multiply that by dozens of team members over months, and you’re looking at significant hidden costs in:

- Onboarding time: new team members struggle to understand existing tests

- Execution delays: unclear steps lead to blocked tests and waiting time

- Maintenance burden: ambiguous cases require constant clarification and rewrites

- False failures: poorly written tests produce unreliable results

Quality test cases aren’t just nice to have; they’re a direct investment in team efficiency.

The End of Manual Reviews? Say Hello to AI!

Okay, confession time: the era of mindless manual reviews is over.

With the rise of AI and all the specialized tools around, even test case review – once a purely human domain – has become simpler and more efficient.

Now, instead of manually combing through hundreds of cases, you can write a solid prompt, feed it to a system with an AI assistant, and get instant feedback.

What used to be a time-consuming task is now just a part of your daily workflow – analyze, review, improve, save. Simple.
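“Write a solid prompt” carries a lot of weight in that workflow. Below is a minimal sketch of what such a prompt might look like, assuming you want a 1 to 5 rating per quality dimension and JSON output you can parse. The wording, the `build_review_prompt` helper, and the JSON shape are hypothetical. The call to the AI assistant itself is left out because it depends on which model or tool you use.

```python
# The five quality dimensions from the review checklist above.
DIMENSIONS = ["Title Clarity", "Preconditions", "Steps Defined", "Expected Result", "Reusability"]


def build_review_prompt(test_case: str) -> str:
    """Assemble a review prompt asking for per-dimension scores and concrete fixes."""
    criteria = "\n".join(f"{i}. {name}" for i, name in enumerate(DIMENSIONS, start=1))
    return (
        "You are a senior QA engineer reviewing a manual test case.\n"
        "Rate each dimension from 1 (poor) to 5 (excellent):\n"
        f"{criteria}\n"
        "For every score below 5, give one concrete improvement.\n"
        'Reply as JSON: {"scores": {<dimension>: <1-5>}, "recommendations": [<string>]}\n\n'
        "Test case:\n"
        f"{test_case}"
    )
```

Asking for structured output makes the feedback easy to store, compare across reviews, and act on, instead of re-reading free-form text each time.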

Why AI-Powered Review Makes Sense

Manual review has its limits:

- Consistency — different reviewers may focus on different aspects

- Scalability — reviewing 500 test cases manually is exhausting

- Speed — human review is slow, especially under tight deadlines

- Availability — senior reviewers aren’t always available when you need them

AI doesn’t replace human judgment, but it provides a consistent baseline that every test case should meet before human eyes even see it.

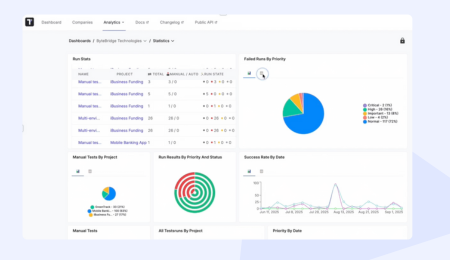

Your Pocket Test Case Reviewer, Now in Testomat.io

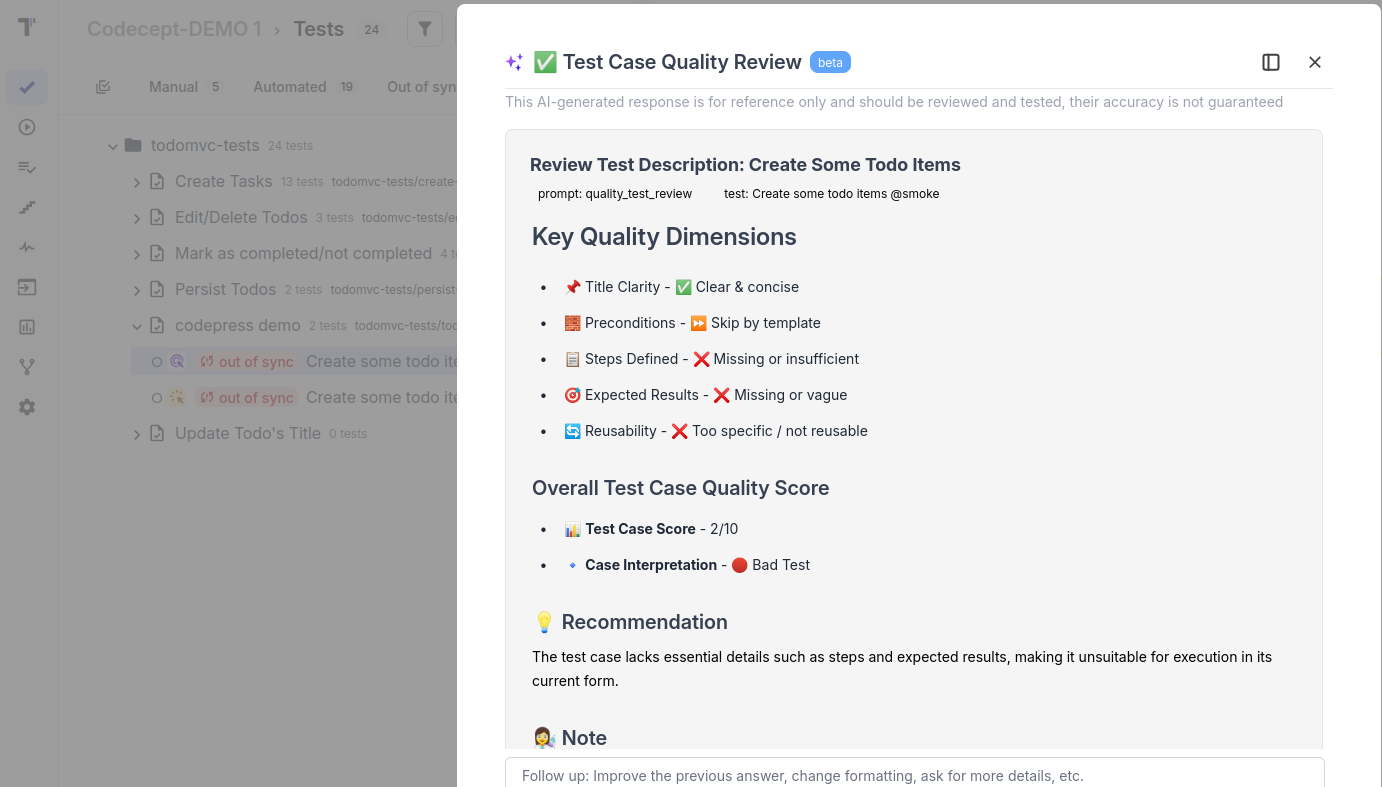

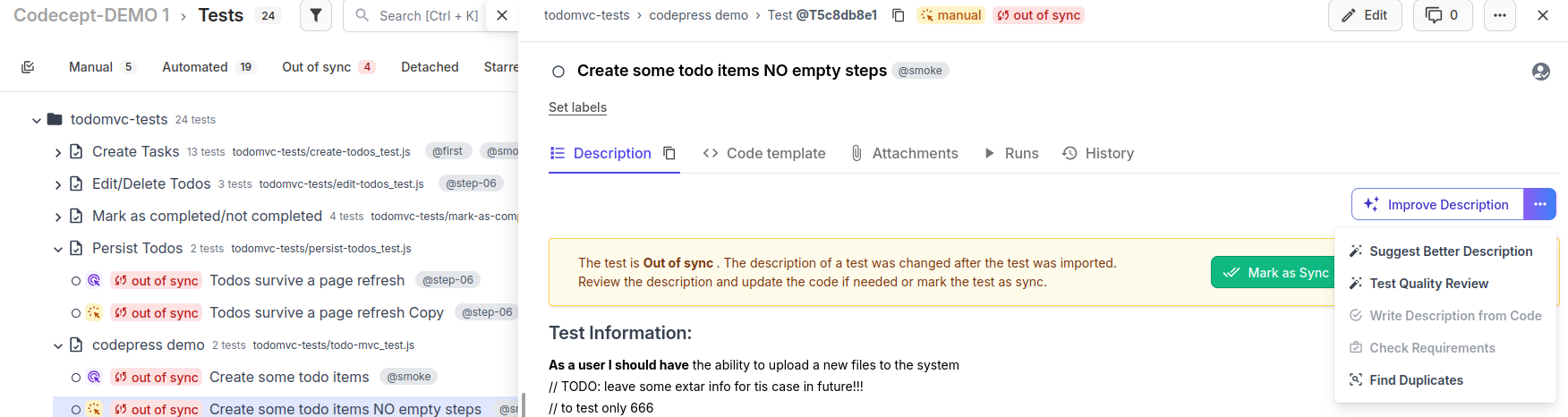

After seeing how tedious manual reviews can be, I decided to do something about it and added a “Test Case Quality Review” feature in Testomat.io. Here’s what it does (and how it works under the hood):

- You or your teammate write a test case.

- The AI reviewer analyzes it for “adequacy” and clarity.

- It checks the same key quality dimensions described above: Title Clarity, Preconditions, Steps Defined, Expected Result, and Reusability.

- Based on this analysis, it returns a Test Case Score and a list of improvement recommendations.
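How the analysis becomes a single score isn’t spelled out here, so the following is only a hypothetical illustration of one simple approach, not Testomat.io’s actual formula. Each dimension gets a 1 to 5 rating, the ratings are mapped linearly onto 0 to 100 and averaged with equal weights, and every dimension below a threshold becomes a recommendation. `score_test_case` and its `threshold` parameter are invented for this example.

```python
def score_test_case(scores: dict[str, int], threshold: int = 4) -> tuple[int, list[str]]:
    """Turn 1-5 ratings per dimension into a 0-100 score plus a to-do list."""
    if not scores:
        raise ValueError("need at least one dimension score")
    if any(not 1 <= s <= 5 for s in scores.values()):
        raise ValueError("scores must be between 1 and 5")
    # Map 1..5 linearly onto 0..100, then average with equal weights.
    overall = round(sum((s - 1) / 4 * 100 for s in scores.values()) / len(scores))
    # Any dimension rated below the threshold becomes a recommendation.
    recommendations = [
        f"Improve {name} (rated {s}/5)" for name, s in scores.items() if s < threshold
    ]
    return overall, recommendations
```

With ratings of 5, 2, 4, 3, and 5 for Title Clarity, Preconditions, Steps Defined, Expected Result, and Reusability, this returns a score of 70 and flags Preconditions and Expected Result. Equal weights are the simplest choice; a team could reasonably weight some dimensions more heavily.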

In other words, your own AI-powered reviewer inside your favorite test management tool.

Real-World Impact

The difference is immediate:

- Before: A test case with vague steps sits in your suite for months, causing confusion and failed executions

- After: You get instant feedback highlighting unclear language, missing preconditions, or ambiguous expected results

It’s like having a senior QA mentor available 24/7, giving you actionable feedback on every single test case you write.

When Is This Feature Especially Useful?

For Solo QAs and Small Teams

If you’re the only QA on a project or part of a small team without established templates, this feature is a game-changer. It helps uncover weak spots and guides you toward better test documentation practices, even without a mentor or senior reviewer around.

For Growing Teams

As your team scales, maintaining consistency becomes harder. The AI reviewer holds every team member’s test cases to the same quality standards, regardless of their experience level.

For Distributed Teams

When your QA team spans multiple time zones, asynchronous review becomes critical. AI provides immediate feedback without waiting for the right person to come online.

For Legacy Test Suites

Got hundreds of old test cases that nobody wants to touch? Run them through the quality review to identify which ones need urgent attention and which are still solid.

And the best part? Everything is already built into Testomat.io. No extra fuss. Just:

Analyze → Improve → Get a High-Quality Test Case.

And if needed, don’t forget to attach those screenshots, logs, or docs — because good attachments can save lives. Or at least a few hours of debugging.

What’s Next? The Future of Smart QA

For me, this new “Test Case Quality Review” is just the first step toward smarter, more automated QA workflows. Next, I’m planning to expand with features like:

“Improve Test Case”

Not just identifying problems, but auto-refining cases based on review feedback. Imagine clicking a button and having your test case rewritten with clearer steps and better structure.

“Suggest Tests by Design Practices”

Not just context-based suggestions, but cases that improve coverage using proven test design techniques such as:

- Boundary value analysis

- Equivalence partitioning

- Decision table testing

- State transition testing
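To show what the first two techniques produce, here is a small sketch for a hypothetical input field that accepts whole numbers from 1 to 100 (say, an order quantity). Boundary value analysis tests each edge of the valid range plus its immediate neighbours; equivalence partitioning picks one representative from each class of inputs the system should treat the same way. The function names and the example field are illustrative assumptions.

```python
def boundary_values(low: int, high: int) -> list[int]:
    """Boundary value analysis for an inclusive integer range [low, high]:
    each edge plus its immediate neighbours (a set removes duplicates for tiny ranges)."""
    return sorted({low - 1, low, low + 1, high - 1, high, high + 1})


def equivalence_representatives(low: int, high: int) -> dict[str, int]:
    """Equivalence partitioning: one representative value per input class."""
    return {
        "below_range": low - 1,         # invalid: too small
        "in_range": (low + high) // 2,  # valid
        "above_range": high + 1,        # invalid: too large
    }


def with_expected_validity(values: list[int], low: int, high: int) -> list[tuple[int, bool]]:
    """Pair each test input with whether the field should accept it."""
    return [(v, low <= v <= high) for v in values]


if __name__ == "__main__":
    # Hypothetical field: order quantity must be a whole number from 1 to 100.
    print(with_expected_validity(boundary_values(1, 100), 1, 100))
    print(equivalence_representatives(1, 100))
```

For the range 1 to 100 this yields the boundary inputs 0, 1, 2, 99, 100, and 101 and the class representatives 0, 50, and 101, each of which maps directly onto a test case with a specific expected result.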

The AI won’t just tell you what’s wrong — it’ll help you design better test coverage from the start.

“Test Code Quality Review”

Because test code deserves love too! Automated test scripts should be reviewed for:

- Code clarity and maintainability

- Proper use of waits and assertions

- DRY principles and reusability

- Error handling and robustness
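The “proper use of waits” point usually means replacing fixed sleeps such as `time.sleep(3)` with explicit waits that poll for a condition and fail with a clear error after a timeout. UI frameworks ship their own explicit-wait mechanisms; the framework-agnostic `wait_until` helper below is a hypothetical sketch of the idea in plain Python, and its default timeout and polling interval are arbitrary.

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool], timeout: float = 5.0, interval: float = 0.1) -> None:
    """Poll `condition` until it returns True; raise TimeoutError after `timeout` seconds."""
    deadline = time.monotonic() + timeout  # monotonic clock is immune to wall-clock changes
    while True:
        if condition():
            return
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout}s")
        time.sleep(interval)
```

A fixed sleep either wastes time when the app is fast or produces a flaky failure when it is slow. Polling returns as soon as the condition holds, and the timeout error states clearly what went wrong.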

Measuring Success: The Metrics That Matter

How do you know if improving test case quality is actually working? Track these metrics:

- Onboarding time — how long it takes new QAs to become productive

- Test execution clarity rate — percentage of tests completed without questions

- Test maintenance time — hours spent updating and fixing unclear tests

- False positive rate — tests that fail due to poor documentation rather than actual bugs
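Two of these metrics are simple ratios once you record a little data per test run. Here is a minimal sketch, assuming a hypothetical `ExecutionRecord` that notes whether the tester needed clarification and, for failures, whether the root cause was a real bug or the documentation. The field names and the `"documentation"` label are invented for this example. The second function treats the false positive rate as a share of failures, which is one reasonable reading of the definition above.

```python
from dataclasses import dataclass


@dataclass
class ExecutionRecord:
    test_id: str
    asked_questions: bool    # did the tester need clarification to run it?
    failed: bool
    failure_cause: str = ""  # hypothetical labels, e.g. "bug" or "documentation"


def clarity_rate(runs: list[ExecutionRecord]) -> float:
    """Share of executions completed without clarification questions."""
    return sum(not r.asked_questions for r in runs) / len(runs) if runs else 0.0


def documentation_false_positive_rate(runs: list[ExecutionRecord]) -> float:
    """Share of failures traced to poor documentation rather than a real bug."""
    failures = [r for r in runs if r.failed]
    if not failures:
        return 0.0
    return sum(r.failure_cause == "documentation" for r in failures) / len(failures)
```

Tracked release over release, falling values for the second ratio and rising values for the first are a concrete sign that documentation quality is improving.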

With Testomat.io’s quality review feature, you can track improvements in these areas over time and demonstrate the ROI of better documentation practices.

Conclusion

The goal is simple: to make QA work smarter, easier, and a little more fun.

Test cases are the backbone of systematic testing. When they’re clear, consistent, and well-maintained, your entire QA process runs smoother. When they’re messy and ambiguous, everything slows down.

By combining human expertise with AI-powered review, we can create test documentation that actually serves its purpose, helping teams test better, faster, and more reliably.

After all, a world where test cases are both useful and readable doesn’t sound bad at all, right? 😉

Ready to level up your test case quality? Try the AI-powered Test Case Quality Review in Testomat.io today and see the difference it makes for your team. Stay tuned: we’re just getting started, and the future of QA is looking brighter every day.