According to different estimates, the global number of software testers doesn’t exceed 9 million. Given the meteoric spike in the production of all kinds of IT products and the respective quantity of software tests to be conducted, QA teams can’t get them covered, no matter how hard they try. The only way to ensure a fast and efficient software validation procedure is to involve AI-powered mechanisms in test design, generation, and execution. Large language models (LLMs) are among the technologies that can radically streamline and facilitate the software testing routine, especially during the test generation phase.

This article describes the inadequacies of traditional test generation procedures, clarifies what an LLM is and how it works, showcases the types of tests that can be created using large language models, and highlights the benefits, limitations, and best practices of LLMs for effective test generation.

Traditional Test Case Generation: Limitations and Pain Points

When performed in line with classical approaches, test case generation is fraught with numerous setbacks and inefficiencies.

- Manual writing from requirements. When QA engineers manually create tests, they are subject to fatigue, bias, and conflicting approaches, leading to errors and non-standardized test cases.

- Copy-paste duplication. It not only reduces the understandability of test cases, but also allows bugs to spread dramatically.

- Inconsistent quality and coverage. Very often, rare edge cases and their complex interactions fall outside the scope of human testers’ attention, creating test coverage gaps.

- Knowledge silos in QA teams. Test cases generated by one team remain its own asset, while their colleagues across the organization have to do all the work from scratch.

- Poor scalability in Agile and CI/CD environments. As the product’s complexity grows during the dynamic Agile and CI/CD development process, test suites become increasingly unmanageable.

- Difficulty in maintaining test cases when requirements change. Evolving specifications and demands necessitate corresponding test case updating, which may sometimes require more time and effort than test case creation.

Using LLMs for test case generation minimizes those problems.

What Are LLMs and Why They Matter for QA

Large Language Models (LLMs) are AI-driven systems that leverage natural language processing (NLP) and deep learning to understand, process, and recreate human language texts by anticipating the next word in a sentence. They can not only write new texts but also summarize the existing ones, translate them from one language to another, and answer related questions.

To understand message context and intent, LLMs apply self-attention mechanisms and specialized external knowledge retrieval techniques (such as attention layers and tokenization) that identify statistical patterns in vast datasets. In this way, they recognize the meaning and predict likely sequences.

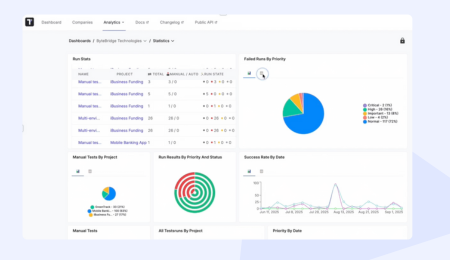

QA specialists found LLMs extremely useful in their testing workflows, leveraging general-purpose commercial tools like GPT-4 by OpenAI or Claude by Anthropic in their efforts. However, the capability of ChatGPT in test creation is limited, as the tool was designed for multiple use cases rather than software testing. To generate effective test cases, it is wise to employ dedicated systems, like the one provided by Testomat.io, which is specifically honed for this task.

What differentiates LLM-based test generators from the conventional rule-based ones?

Rule-driven test generation hinges on “if-then” conditions and pre-defined logic, making test cases based on it simpler to implement, highly transparent, and easily traceable. The most common use cases for such tests are specific, structured tasks related to compliance monitoring, workflow automation, and simple chatbots.

LLM-based test generation relies on extensive training data to learn natural language structures and statistical patterns, enabling models to create test cases that adapt to dynamic and novel situations and reproduce human-like interaction. As a result, LLM-generated test cases bring the most value for building virtual assistants, providing personalized customer support, creating content, implementing high-level knowledge-based automation, and performing other creative tasks. Thanks to their ability to understand requirements written in natural language, analyze source code, and create context-dependent test scenarios, the superb effectiveness of LLMs in test generation is beyond any doubt. Besides, it is an automated test generation by default, which allows QA engineers to receive tons of diverse tests in a short time.

Let’s explore the nitty-gritty of test case generation using LLMs.

How LLM-Based Test Case Creation Works

There are five basic stages in test case generation using large language models.

Input provision

Experts provide the model with relevant information derived from user stories, requirement documents, acceptance criteria, bug reports, past test data, and other available sources.

Prompt engineering basics for QA

Test inputs are refined and optimized to let the model understand the context and logic for the successful identification of potential scenarios, edge cases, and boundary conditions.

Context enrichment

The effectiveness of large language models is enhanced by using a Retrieval-Augmented Generation (RAG) framework to enrich context with API definitions, code snippets, prior code review comments, project metadata, file paths, user behavior metrics, runtime data, etc.

Test case generation

Now the automated test case generation begins. Test cases generated by LLMs come in different formats, such as manual types of tests, Gherkin scenarios (using natural language descriptions and commands), algorithms of steps to automate tests, and more.

Human-in-the-loop validation

The quality of generated test cases should be controlled by testers to balance the automation introduced by LLMs in test case generation with human expertise. QA specialists correct AI errors, check outputs, and add contextual understanding to the set of tests if necessary.

What types of testing with large language models are widespread today?

Types of Test Cases Generated Using LLMs

QA teams utilize LLMs to generate test cases of the following types.

Functional test cases

- Happy path test cases. They verify the intended user journey with valid inputs and expected actions (like successfully logging in). LLMs can help in defining clear objectives, crafting detailed prompts, specifying output formats, and generating synthetic test data.

- Negative scenarios test cases. These check how the solution reacts to invalid inputs and unexpected user actions. LLMs can create automatic test cases containing error-infested or incorrect inputs and pinpoint potential vulnerabilities by running “what-if” scenarios.

Edge case and boundary testing

- Testing data limits. LLMs can not only generate test data with mixed valid and invalid cases, real-world examples, and simulated edge cases, but also evaluate the effectiveness of test runs, acting as a judge for those nuances that can’t be detected through rule-based checks.

- Unexpected inputs. Testers utilize large language models to save effort while building a comprehensive test bank with unexpected inputs for adversarial testing and red teaming.

Regression test cases

- Reusable scenarios. LLMs are extremely helpful for creating a library of scenario templates that can be leveraged to generate multiple variations of the same test case by changing input parameters.

- Impact coverage change. After you have provided the model with ample information about code changes to certain functionalities or modules and their associated coverage gaps, the LLM is used to generate test cases that target these modifications specifically.

Exploratory test ideas

- Charters generated by LLMs. In charters, large language models can suggest test ideas, define testing mission and scope, produce realistic data, map out scenarios, and provide a starting point.

- Risk-based suggestions. LLMs are good at summarizing raw logs, documentation, and other data sources to highlight high-risk areas and offer new perspectives, enabling testers to think outside the box.

What perks do LLMs usher in the generation of test cases?

Benefits of using LLMs for Test Case Generation

When you employ LLMs for test case generation, you are entitled to:

- Faster test case creation. Automatic test case generation by LLMs is a premium time-saver, allowing testers to obtain correct test cases much more quickly than in manual mode.

- Improved coverage and consistency. LLMs excel at escaping coverage plateaus in test generation thanks to their in-depth understanding of code semantics and their ability to identify edge cases, which the manual approach fails to achieve.

- Reduced cognitive load for QA engineers. LLMs not only automate repetitive tasks but also streamline information retrieval, reporting, and documentation summarizing, thus freeing human personnel for more creative assignments.

- Quicker onboarding of new team members. LLMs can unite existing records and historical test cases into structured, searchable knowledge bases that testers who have just joined the team can refer to.

- Support for non-technical stakeholders. Employees with no technical background can express their requirements and desired outcomes in plain English, and LLMs translate them into test scripts and generate tests accordingly.

- Standardized test documentation. The documents LLMs create (test plans, reports, specifications, etc.) are construed according to pre-defined templates, ensuring their uniform structure, consistency, and clarity.

Alongside weighty fortes, the quality of the generated tests may be substandard if you ignore certain issues during automatic test generation by LLMs.

Risks and Limitations of LLM Test Generation

Despite the unquestionable efficiency of test case generation with LLMs, it is still an immature technology whose operating principles and functionality are modified and refined on the fly. That is why LLMs are prone to hallucination and creating syntactically correct but logically flawed output based on incorrect assumptions. Besides, a model’s capability to generate test cases is limited by context windows that may fail to include system-specific constraints or other vital parameters. On the flip side, LLMs may neglect context and exceptions, which triggers the appearance of overgeneralized scenarios and test cases using inaccurate premises and conclusions.

One more point of concern is the security and privacy of the data used by LLMs. If data anonymization and protection are subpar, the employment of the technology may result in training data leakage, unintentional information disclosure, and compliance violations.

Does this mean we should stop using LLMs for test generation? Of course not. But close human supervision over the process is vital. QA teams should exercise test case derivation from safety requirements and review test results critically, avoiding blind trust in AI outputs.

The effectiveness of test case generation using large language models can be significantly increased by adhering to best practices in the niche.

Best Practices for Effective LLM Test Case Generation

As a vetted vendor providing high-end AI test generation tools, we offer the following recommendations.

Ensure clear and structured input

As the universal test generation truth says, GIGO (Garbage In, Garbage Out). Make sure it is not about your effort for test case derivation. You can get high-quality, accurate tests only by providing LLMs with relevant, consistent, complete, understandable, and up-to-date training data in domain-specific language, transparent guidelines on using it, and clear context.

Use acceptance criteria and examples

These serve as unambiguous, foolproof instructions that minimize the risk of receiving incomplete or incorrect output.

Break down complex requirements

LLMs produce better results when they face less complex tasks and move from one simple step to another, rather than trying to make sense of elaborate, multistage assignments.

Combine LLMs with domain knowledge

It can be achieved by connecting models to both external and internal knowledge bases containing domain information. It is used both for model training and context provision.

Review and refine generated test cases

Regular evaluation of large language models‘ performance is mission-critical. Go through the output test cases and apply corrections if necessary.

Continuously improve prompts

Models are susceptible to prompt variations. As software requirements evolve, you should modify and fine-tune them, which will help you enhance test coverage, accuracy, diversity, and relevance.

Since most development teams today rely on Agile methodology and CI/CD practices, one of the most pivotal best practices for the automatic generation of test cases with LLMs is to integrate them.

Automatic Test Case Generation with LLMs in Agile and CI/CD

The key points of marrying LLM-driven test case generation with CI/CD pipelines and Agile approaches include:

- Generating test cases during backlog refinement. The introduction of LLMs during early Agile stages (aka left-shifting) improves requirements analysis, enhances risk assessment, and enables proactive bug detection.

- Supporting sprint planning. Use LLMs as tools for analyzing historical data to outline priorities, clarify goals, draft initial plans, and map out task breakdowns.

- Keeping tests aligned with user stories. By doing so, you achieve dramatic test improvement using large language models. They help testers analyze and manage connections between tests and user stories and suggest acceptance criteria in a structured format.

- Using LLMs for rapid regression coverage. Here, you go beyond using an LLM for test case generation only. Models are a good crutch at test prioritization, output comparison, prompt chaining and refinement, and more.

- Supporting test automation pipelines. LLMs do that by generating test scripts and data, performing self-healing and codeless automation, participating in API testing enhancement, creating test summaries and reports, evaluating outputs of other models, and more.

Test generation with pre-trained LLMs is fast and efficient, yet this technology can’t operate without human oversight and guidance.

Combining LLMs with Human Expertise: The Right Balance

LLMs have not only revolutionized software testing by automating test case generation and stepping up test execution. Their advent will undoubtedly have a long-term impact on QA roles, necessitating positions such as AI QA engineer, model validator, AI test automation manager, prompt specialist, and others.

However, today and most likely in the future, LLMs remain valuable assistants and facilitators, not replacements for human personnel. The responsibilities of QA engineers shift toward supervising and quality gatekeepers, while AI does the lion’s share of practical work. Besides, humans are sure to stay in charge of strategic decision-making, whereas machines will handle tactical test planning and execution.

Conclusion

Traditional test case generation can’t satisfy the ever-growing needs of modern QA teams. Large language models (LLMs), as AI-driven systems capable of understanding and creating human language text, can significantly bolster their efforts. LLMs can generate test cases of various types quickly and efficiently if you provide high-quality training and input data, give straightforward instructions, specify context, and provide access to domain-specific knowledge bases.

To maximize the value of LLMs for automatic generation of test cases, you should leverage top-notch AI-driven systems and rely on human personnel for supervision, quality control, and strategic decision-making.