The main goal of testing in any project is to obtain an objective assessment of the software product's quality. Ideally, the process works like this: the QA team reviews test results and decides whether the product needs further refinement or whether it and its features are ready for release. In practice, however, testing is not always a reliable source of truth.

Surprised? 😲 The reason is flaky tests!

This article explores how to identify, eliminate, and prevent dangerous flaky tests.

Unstable tests can become a serious obstacle that complicates the development process. They create uncertainty and require significant time and resources to resolve when they are detected.

→ What do flaky tests entail?

→ What triggers them?

→ How can they be fixed or prevented? This is the most important question for us.

You will find answers to all these questions below ⬇️

What Is a Flaky Test?

A flaky test is an automated test that produces inconsistent results. It may fail unexpectedly in one run and pass in the next, even though the code has not changed. Naturally, such tests do not contribute to overall Quality Assurance. Instead, they prevent teams from reaching their objectives effectively.

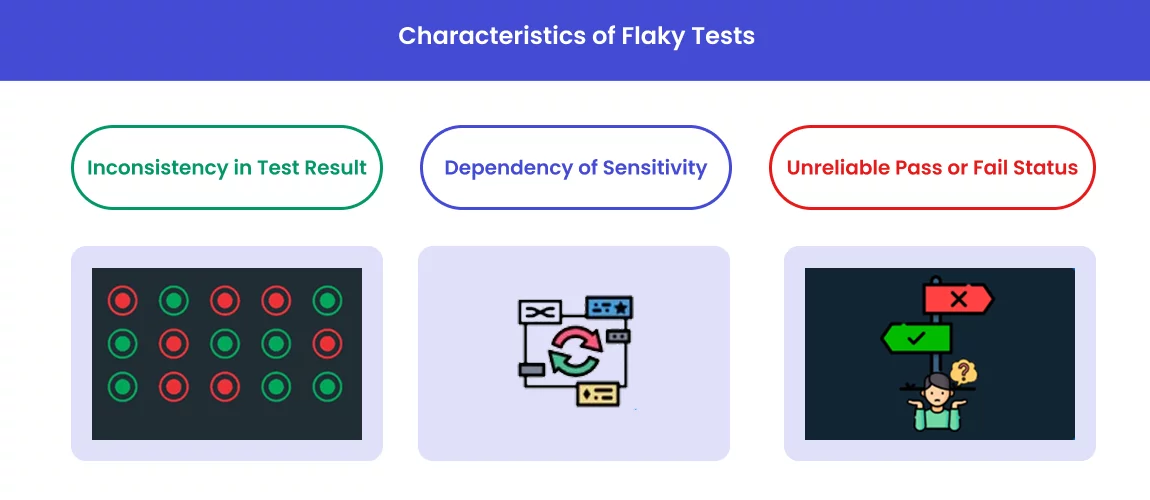

Key characteristics of flaky tests include:

- Inconsistency of results. Flaky tests produce unreliable outcomes, because their results fluctuate even when the code has not changed.

- Unreliable test status. Assessing a product with flaky tests is inherently unreliable, as the results cannot be trusted. The Pass and Fail statuses fluctuate randomly with each retry, making them unpredictable and difficult to interpret.

- Dependence on external factors. These tests are extremely vulnerable to external dependencies, including environment variables, system configurations, third-party libraries, databases, external APIs, and more.

We have now defined flaky tests and outlined their key characteristics. Next, let's look at what causes them 😃

What Causes Test Flakiness?

Understanding the root causes of flaky tests will allow you to develop an effective strategy for their prevention. It will also help you mitigate the consequences if they do occur.

What can serve as a precondition for future flaky tests?

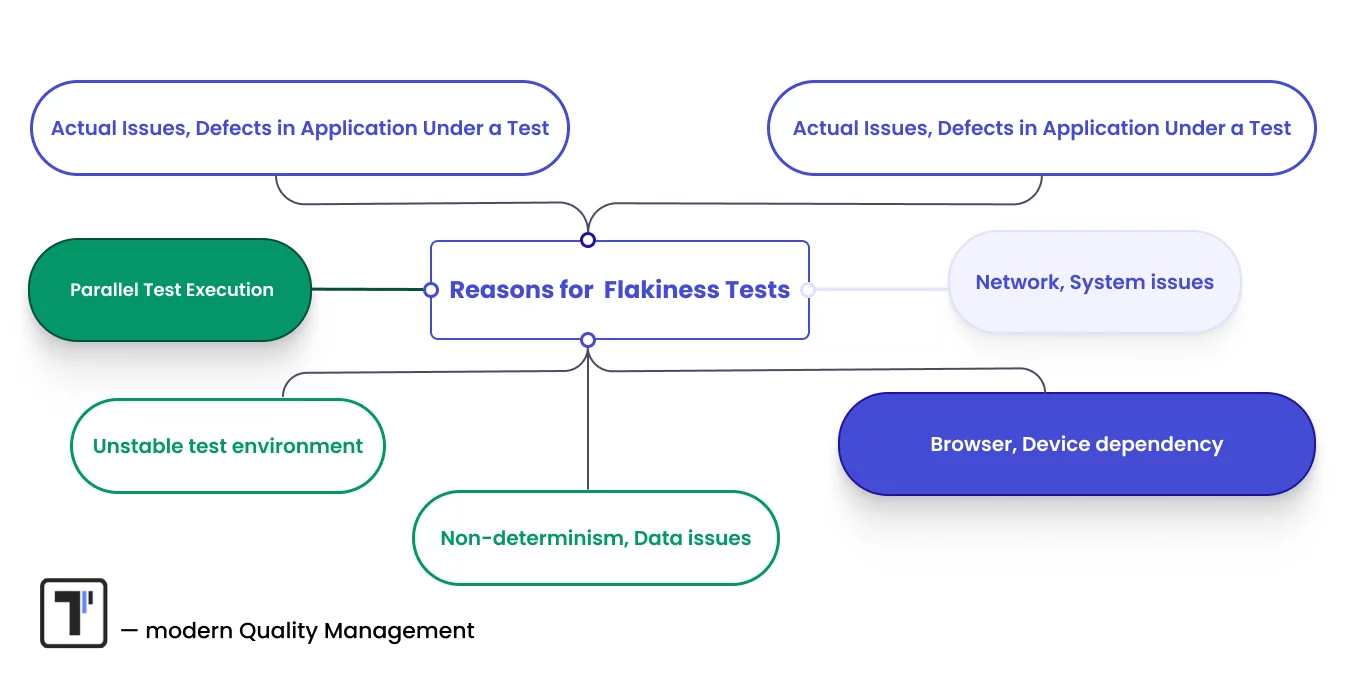

→ Parallel test execution. Running tests concurrently can make QA processes more efficient. In some cases, however, this approach backfires and makes tests unstable. This happens when multiple tests compete for the same resources, creating race conditions.

→ Unstable test environment. A project may face unreliable infrastructure or fluctuating system states. Insufficient control over the environment or lack of isolation can also contribute to instability in testing.

→ Non-determinism. This refers to generating different results from the same set of input data. In testing, this can occur when tests depend on uncontrollable variables, such as system time or random numbers.

→ Errors in test case writing. These can result from misunderstandings within the team, incorrect assumptions, or other factors. As a result, the test logic may be compromised, leading to unreliable results, such as false positives or false negatives.

→ Partial verification of function behavior. When creating test cases, write enough assertions to cover every aspect of the function's behavior, including edge cases and all potential side effects. If the assertions are insufficient, the tests risk becoming unstable.

→ Influence of external factors. Some dependencies can negatively impact test stability. To illustrate, here are a few examples:

- External services. Tests that rely on external services or APIs can become unstable.

- Databases and external storage. Problems with data consistency or synchronization can arise when tests interact with a database or external storage.

- System issues. High server load or memory overload can undermine stability.

- Device dependency. Instability may also arise from problems with hardware availability.
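To make the non-determinism cause more concrete, here is a minimal Python sketch. The functions `greeting` and `pick_winner` are hypothetical examples, not taken from any real project. The flaky variant reads the system clock inside the function. The deterministic variants instead receive the time and a seeded random generator from the caller, so a test controls every input.

```python
import random
from datetime import datetime


# Flaky: reads the system clock internally, so a test asserting
# "Good morning" passes before noon and fails after it.
def greeting_flaky() -> str:
    return "Good morning" if datetime.now().hour < 12 else "Good afternoon"


# Deterministic: the caller supplies the time.
def greeting(now: datetime) -> str:
    return "Good morning" if now.hour < 12 else "Good afternoon"


# Deterministic randomness: the caller supplies a (seeded) generator.
def pick_winner(entries: list[str], rng: random.Random) -> str:
    return rng.choice(entries)


def test_greeting() -> None:
    assert greeting(datetime(2024, 1, 1, 9, 0)) == "Good morning"
    assert greeting(datetime(2024, 1, 1, 15, 0)) == "Good afternoon"


def test_pick_winner_is_reproducible() -> None:
    entries = ["alice", "bob", "carol"]
    # Same seed -> same random sequence -> same result on every run.
    assert pick_winner(entries, random.Random(42)) == pick_winner(entries, random.Random(42))


if __name__ == "__main__":
    test_greeting()
    test_pick_winner_is_reproducible()
    print("ok")
```

Injecting the clock and the random generator is only one way to remove this kind of non-determinism. Freezing time with a dedicated library is a common alternative.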

Most of these preconditions can be eliminated before they cause problems. When that is not possible, it is important to recognize flaky tests early.

Learn more about the causes of flaky tests in this video: What Are Flaky Tests And Where Do They Come From? | LambdaTest

How to Identify Flaky Tests?

This section explains how to tell whether flaky tests are making your test suite unreliable. This matters, because ignoring the issue erodes trust in the CI/CD pipeline and slows down development.

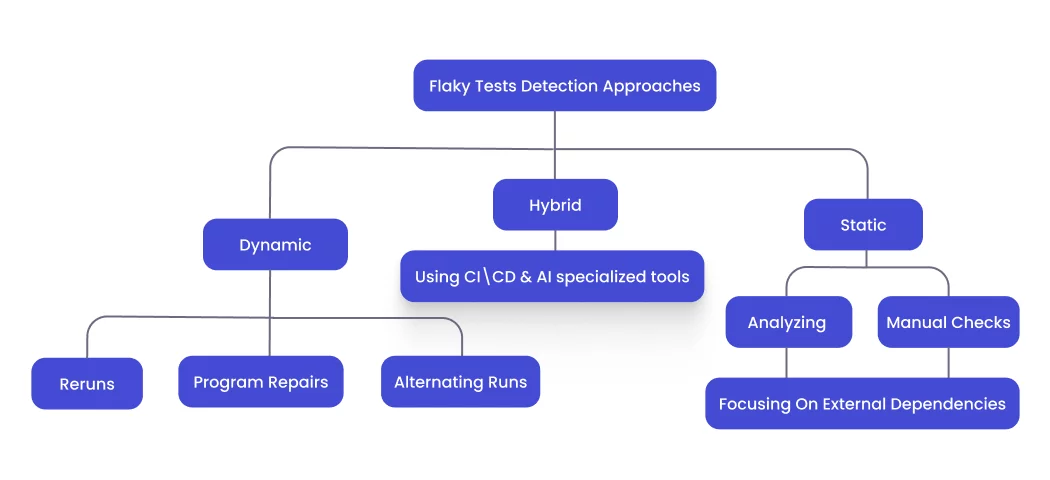

Here are the main detection methods in detail:

#1. Re-running Tests

Run the same test several times without changing the code and compare the results. If the results conflict, it is a clear sign of a flaky test.
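Re-running can be automated with a small harness. The Python sketch below is a hypothetical helper, not part of any particular test runner. It runs a test function repeatedly, counts passes and failures, and flags the test as flaky if both outcomes occur. A simulated flaky test built on `itertools.cycle` stands in for a real one.

```python
import itertools
from collections import Counter
from typing import Callable


def rerun(test_fn: Callable[[], None], runs: int = 20) -> Counter:
    """Run the same test repeatedly and count its outcomes.

    Any exception (including AssertionError) is counted as a failure.
    """
    outcomes: Counter = Counter()
    for _ in range(runs):
        try:
            test_fn()
            outcomes["pass"] += 1
        except Exception:
            outcomes["fail"] += 1
    return outcomes


def is_flaky(outcomes: Counter) -> bool:
    # Conflicting results on unchanged code are the signal.
    return outcomes["pass"] > 0 and outcomes["fail"] > 0


def test_stable() -> None:
    assert 1 + 1 == 2


# Simulated flaky test: passes twice, then fails once, repeating.
_simulated = itertools.cycle([True, True, False])


def test_simulated_flaky() -> None:
    assert next(_simulated)


if __name__ == "__main__":
    outcomes = rerun(test_simulated_flaky, runs=9)
    print(dict(outcomes), is_flaky(outcomes))  # {'pass': 6, 'fail': 3} True
```

In a real project, the test runner's own repeat or rerun options, or the CI system, would usually do this. The logic stays the same: one test, unchanged code, mixed results.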

#2. Alternating Between Sequential and Parallel Test Execution

Run the tests both sequentially and in parallel, then compare the outcomes. If a test fails only during parallel execution, this could point to race conditions or test order dependencies.
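The sketch below illustrates this comparison in Python with a thread pool. The file-writing functions are hypothetical. The shared variant writes every worker's data to the same file, so parallel runs can read back another worker's content. The isolated variant gives each call its own temporary directory. `compare_sequential_and_parallel` runs a function both ways and reports whether the results match.

```python
import tempfile
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path

# Hypothetical fixed path shared by every worker.
SHARED_PATH = Path(tempfile.gettempdir()) / "report.txt"


def write_and_read_shared(content: str) -> str:
    # Race-prone in parallel: another worker may overwrite the file
    # between this write and this read.
    SHARED_PATH.write_text(content)
    return SHARED_PATH.read_text()


def write_and_read_isolated(content: str) -> str:
    # Isolated: each call gets its own temporary directory, deleted afterwards.
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "report.txt"
        path.write_text(content)
        return path.read_text()


def run_in_parallel(fn, payloads):
    with ThreadPoolExecutor(max_workers=max(1, len(payloads))) as pool:
        return list(pool.map(fn, payloads))  # map() preserves input order


def compare_sequential_and_parallel(fn, payloads) -> bool:
    sequential = [fn(p) for p in payloads]
    parallel = run_in_parallel(fn, payloads)
    return sequential == parallel


if __name__ == "__main__":
    payloads = [f"worker-{i}" for i in range(8)]
    print(compare_sequential_and_parallel(write_and_read_isolated, payloads))
```

For the shared variant, the parallel result may or may not match the sequential one, depending on thread scheduling. That unpredictability is exactly the symptom this detection method looks for.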

#3. Analyzing Test Logs

Reviewing the test execution history and error messages can reveal patterns in failing tests. For example, tests that produce different errors across runs may signal non-determinism or insufficient assertions.

#4. Testing in Different Test Environments

Run tests in various environments with different configurations or resources. If the results vary, it’s a sign that the tests may not be stable.

#5. Focusing On External Dependencies

Pay special attention to tests that depend on external systems such as APIs, databases, and file systems. These tests are more prone to unstable behavior, because issues in the external system can make them fail.

#6. Using Specialized Tools

The CI/CD pipeline is an ideal place to spot flaky tests, because it records the pass and fail history of individual tests over time. Many CI/CD tools also offer plugins designed to monitor instability.

Modern test management systems like Testomat.io can also assist in detecting and diagnosing flaky tests. We’ll dive into the platform’s capabilities for this later.

#7. Manual Checks

If test flakiness is still not obvious, you can try to detect potential flaky test cases manually. To do this, review the test code, evaluate the likelihood of race conditions, and analyze the test logic. In other words, look for any of the causes of instability described earlier.

These strategies will help you identify flaky tests reliably. Why is this crucial for project success? Let's break it down.

The Importance of Flaky Test Detection

Test instability is an issue that many teams face. According to a recent study, 20% of developers detect flaky tests in their projects monthly, 24% weekly, and 15% daily. Interestingly, 23% of respondents consider flaky tests a very serious issue.

Here are several reasons behind this perspective, highlighting why it is crucial to identify and address instability promptly:

- Slowing down the development process and increasing project costs. Unreliable test results prevent teams from progressing to the next development stage. They require manual checks, repeated executions, or extra steps to pinpoint and fix errors, consuming valuable time and resources.

- Decreasing the effectiveness of test automation. Flaky test outcomes provide little useful information, leading to a loss of trust in the entire test suite. Over time, teams may begin to disregard test results, undermining the purpose of continuous integration systems.

- Inconsistent feedback. Instability in tests results in inconsistent feedback on the quality of the application code. Developers fail to get an accurate picture of the situation, which delays problem identification and resolution.

- Decreased team performance. Frequent failures can negatively impact team morale, leading to diminished productivity, communication, and motivation. This ultimately affects the quality of the final product.

- Challenges in identifying true errors. When a test suite contains flaky tests, developers may mistakenly attribute every failure to flakiness and overlook real issues in the codebase. As a result, these problems go undetected and accumulate, which makes them much harder to diagnose and resolve later.

Flaky tests disrupt the software development process in many ways, from prolonging project timelines to worsening the overall atmosphere within the team. This is why it is important to identify and eliminate them as soon as they appear.

How to Measure and Manage Flaky Tests?

The initial step in effectively managing flaky tests is to evaluate their frequency and the impact they have. This can be done through different methods:

- Analyzing test run history. Review the test execution history in your CI/CD pipeline or version control system. This will help you identify how many tests periodically change their pass/fail status without any code changes.

- Evaluating failure frequency. Track how often tests fail under varying conditions, such as in specific testing environments.

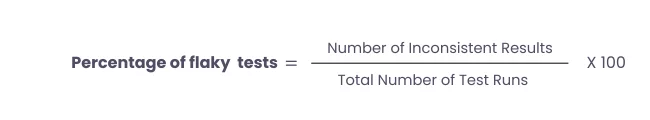

- Using test automation metrics. To gauge the extent of the issue, calculate the flakiness rate: the percentage of test runs that produce unstable results. It is calculated as follows: Flakiness rate = (number of test runs with unstable results ÷ total number of test runs) × 100%.

- Applying statistical methods. For example, you can measure the standard deviation (or variance) of a test's results across repeated runs on unchanged code. If the results never change, the standard deviation is zero. Any nonzero value signals instability.

- Using specialized tools. Some modern platforms enable teams to optimize their testing efforts by analyzing test result trends and helping to identify and manage flaky tests.
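Both metrics above can be sketched in Python as follows. The snippet assumes a hypothetical run history of `(test name, commit, passed)` records exported from CI. It treats a run as "unstable" when it is a failure of a test that also passed on the same commit. That definition is our assumption, since tools define flakiness in different ways. For the standard deviation, each run is encoded as 1 (pass) or 0 (fail).

```python
from collections import defaultdict
from statistics import pstdev

# Hypothetical run history: (test name, commit, passed?).
# Runs on the same commit exercise identical code.
history = [
    ("test_a", "c1", True), ("test_a", "c1", False),
    ("test_a", "c1", True), ("test_a", "c1", False),
    ("test_b", "c1", True), ("test_b", "c1", True), ("test_b", "c1", True),
    ("test_c", "c1", False), ("test_c", "c1", False), ("test_c", "c1", False),
]


def group_runs(records):
    """Group outcomes by (test, commit)."""
    runs = defaultdict(list)
    for test, commit, passed in records:
        runs[(test, commit)].append(passed)
    return runs


def flakiness_rate(records) -> float:
    """Unstable runs / total runs * 100, where an unstable run is a
    failure of a test that also passed on the same commit."""
    unstable = 0
    for outcomes in group_runs(records).values():
        if any(outcomes) and not all(outcomes):  # mixed results on unchanged code
            unstable += outcomes.count(False)
    return 100 * unstable / len(records)


def pass_fail_stdev(records) -> dict:
    """Population standard deviation of outcomes per (test, commit); pass = 1, fail = 0."""
    return {
        key: pstdev([1 if passed else 0 for passed in outcomes])
        for key, outcomes in group_runs(records).items()
    }


if __name__ == "__main__":
    print(flakiness_rate(history))   # 20.0
    print(pass_fail_stdev(history))  # test_a -> 0.5, test_b and test_c -> 0.0
```

Note that `test_c`, which fails every time, also has a standard deviation of zero. A consistent failure indicates a real defect rather than flakiness, so only nonzero values point to instability.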

AI-Powered Test Management Solution for Flaky Test Detection

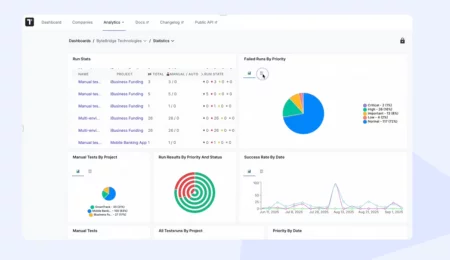

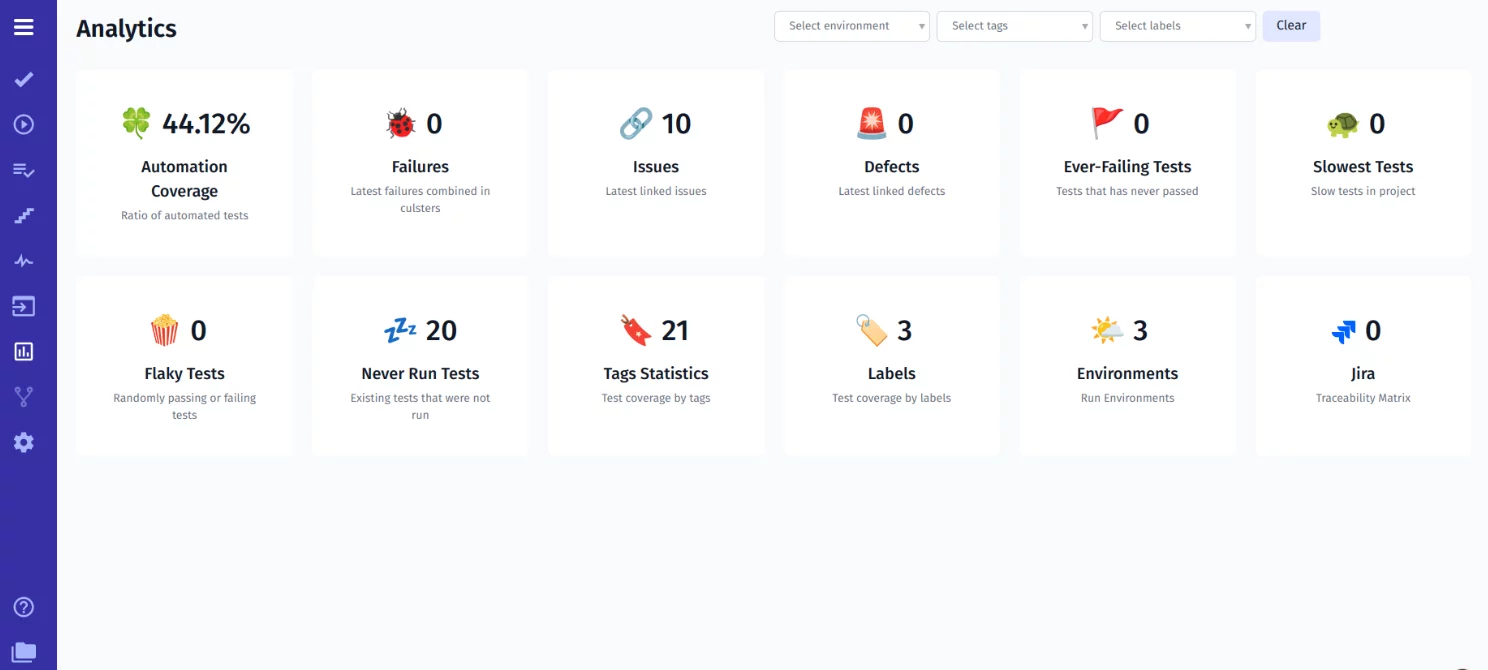

Testomat.io is a test management system (TMS) that offers advanced capabilities for working with automated tests. One of them is test analytics, available through a Test Analytics Dashboard with user-friendly widgets.

One of the key widgets in the system is Flaky Tests. It allows testers to easily track tests with inconsistent results and make decisions about fixing them.

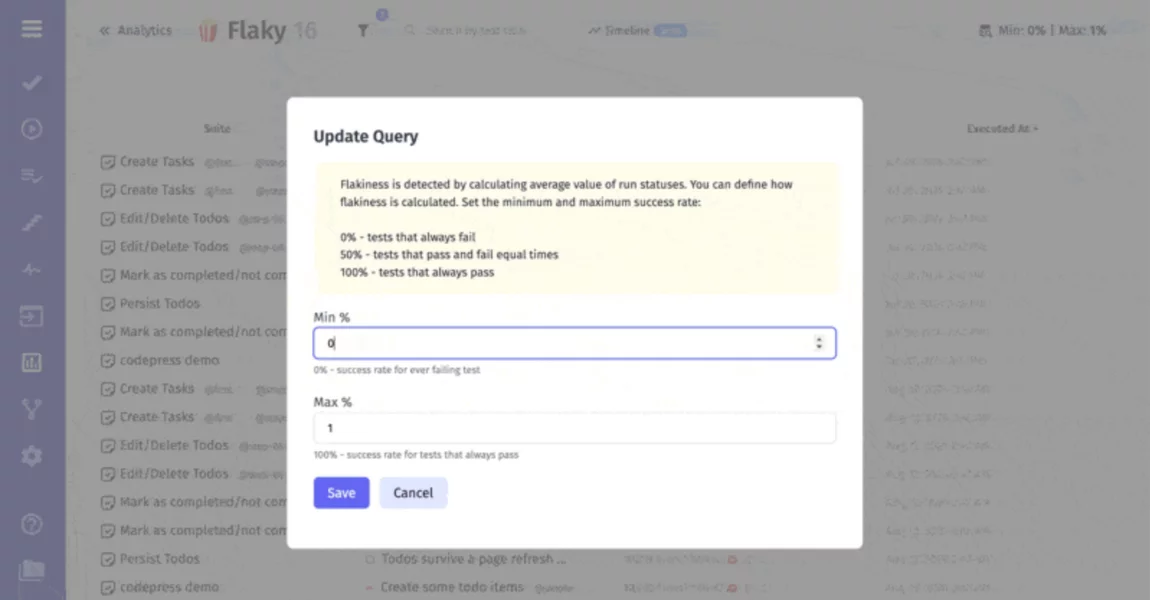

Let’s take a closer look at the algorithms used to detect flaky tests in Testomat.io. On what basis can a test be added to this list?

To identify instability, the system calculates the pass rate of a specific test, that is, the percentage of its runs that ended with a "pass" status. The following parameters are used for the calculation:

- Minimum success threshold. The lowest pass rate at which a test is still considered unstable.

- Maximum success threshold. The highest pass rate at which a test is still considered unstable.

Let’s consider how the system’s algorithms work with a practical example.

Suppose the success thresholds are set as follows:

- Minimum – 60%.

- Maximum – 80%.

Suppose a test was run 18 times, and 12 of these runs were successful. Its pass rate is therefore 12 / 18 ≈ 66.7%. This result falls within the specified range, so the test is considered unstable.

🔴 Note: The pass rate is calculated from the last 100 test runs.
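The threshold rule described above can be sketched in a few lines of Python. This is our own illustration, not Testomat.io's actual implementation. The status strings, the function names, and the choice of inclusive bounds are all assumptions. Statuses are listed oldest first, so the last 100 entries are the most recent runs.

```python
def pass_rate(statuses: list[str], window: int = 100) -> float:
    """Percentage of "passed" statuses among the most recent `window` runs."""
    recent = statuses[-window:]  # only the latest runs count
    if not recent:
        raise ValueError("no runs recorded")
    return 100 * recent.count("passed") / len(recent)


def flagged_as_flaky(statuses: list[str], min_threshold: float = 60.0,
                     max_threshold: float = 80.0, window: int = 100) -> bool:
    """Flag a test whose pass rate falls inside [min_threshold, max_threshold]."""
    rate = pass_rate(statuses, window)
    return min_threshold <= rate <= max_threshold  # inclusive bounds (assumption)


if __name__ == "__main__":
    # The worked example: 18 runs, 12 of them successful.
    runs = ["passed"] * 12 + ["failed"] * 6
    print(round(pass_rate(runs), 1), flagged_as_flaky(runs))  # 66.7 True
```

With these thresholds, a test that always passes (100%) or mostly fails (below 60%) is not flagged. The range targets tests that pass often, but not consistently.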

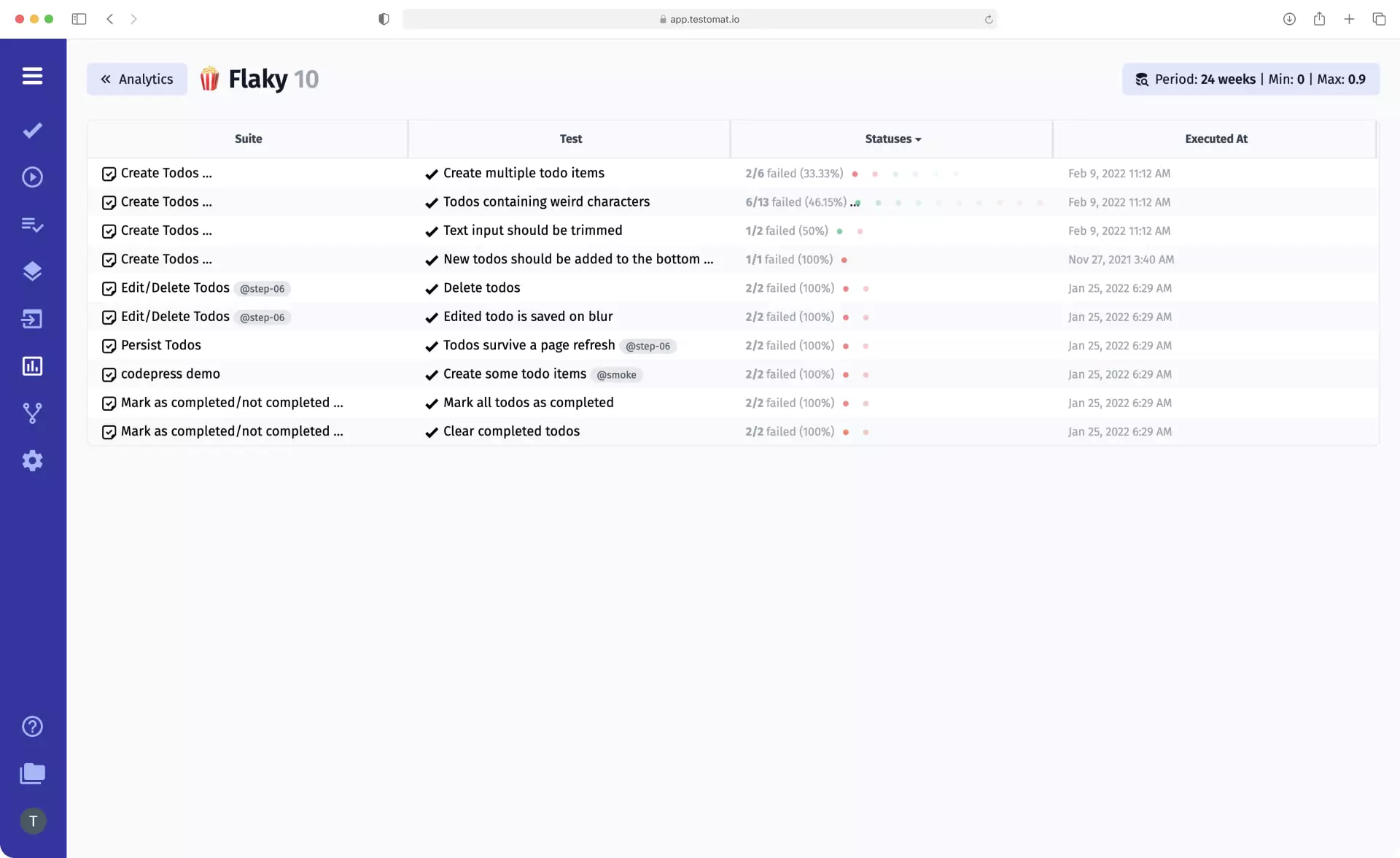

After displaying the table with flaky tests, users can perform the following actions:

- Sort with one click. To do this, click on one of the required columns – Suite, Test, Statuses, or Executed at.

- Filter by execution date, priority, tags, labels, and test environment.

- Change the order of columns for easier data analysis.

So, we have learned how to detect flaky tests, assess their impact on development quality, and manage them with specialized tools. Let’s move on to methods for addressing the problem.

How to Maintain Flaky Tests?

Effective maintenance of flaky tests involves several stages. Together, they allow you to fix existing instability, understand its cause, and prevent it in the future.

Root Cause Analysis

Identify the cause of instability. The most common causes include resource unavailability, external dependencies, errors in test code, or race conditions.

Fixing Flaky Tests

After pinpointing the cause of instability, take corrective measures to eliminate it. These may involve:

- Ensuring test idempotency. Tests should produce the same result no matter how many times they run, and should not rely on state left over from previous executions.

- Implementing synchronization mechanisms. These ensure that tests do not fail because of race conditions or system delays.

- Simulating external dependencies. If a test is unstable because it depends on external services, replace the dependency with a stub that simulates its behavior.

- Stabilizing the test environment. It is crucial to ensure maximum stability and predictability of the environment. One option is to use containerization.

- Improving the quality of flaky test cases. Review the test logic and cover as many scenarios of the system's or function's behavior as possible.
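Here is a minimal Python sketch of simulating an external dependency, using `Mock` from the standard `unittest.mock` module. The function `fetch_display_name` and the `/users/{id}` endpoint are hypothetical. The code under test receives its HTTP client as a parameter, so the test can pass in a stub with a canned response instead of calling a real service.

```python
from unittest.mock import Mock


# Hypothetical code under test: the HTTP client is injected,
# so a test can replace it with a stub.
def fetch_display_name(client, user_id: int) -> str:
    response = client.get(f"/users/{user_id}")
    return response["name"].title()


def test_fetch_display_name_with_stub() -> None:
    client = Mock()
    client.get.return_value = {"name": "ada lovelace"}  # canned response, no network
    assert fetch_display_name(client, 7) == "Ada Lovelace"
    client.get.assert_called_once_with("/users/7")


if __name__ == "__main__":
    test_fetch_display_name_with_stub()
    print("ok")
```

Because the stub always returns the same data, the test's outcome no longer depends on network latency or the availability of the real service.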

Isolation and Prioritization of Problematic Tests

This step involves categorizing flaky tests by severity. If a test frequently fails, the issue should be addressed as a priority.

The most unstable tests should be isolated or temporarily removed from the overall test suite. Alternatively, use a relevant tag to mark such test cases. This way, you can minimize the impact of flaky tests on overall test results.

Continuous Monitoring of Tests and Team Training

Even after eliminating instability, keep monitoring your test suites. This will allow you to:

- ensure the effectiveness of the fixes made;

- prevent flaky tests from reappearing;

- maintain a feedback loop.

It is also important to provide ongoing training for testers throughout the project. This will help them write reliable, stable tests, including:

- correctly handling external dependencies;

- considering all possible function behavior scenarios;

- following test isolation methods;

- avoiding race conditions.

Effective maintenance of flaky tests includes identifying their causes, working on their elimination, and subsequently monitoring the quality of test cases. Combined with continuous improvement of test automation skills, your team will achieve good results in solving this problem.

Summary: Best Practices for Minimizing Flaky Tests

Flaky tests are a serious problem that many development teams face regularly. To move closer to a high-quality release, focus on reducing their number. How can this be done?

- Ensure test isolation. Tests should not depend on the state of previous tests. It is also important to test in isolated environments. Containers or virtual environments are suitable.

- Avoid tests that rely on fixed delays. Do not wait for a fixed amount of time and assume the system is ready. Instead, use explicit waits that check for a condition, with a timeout.

- Simulate external dependencies. If a test depends on external services, use mocks and stubs. Mocking libraries let you replace databases and APIs with simulated versions.

- Use reliable test data. It should be predictable. Avoid depending on dynamic data, as any changes to it can cause instability.

- Ensure reliable synchronization. Parallel test execution should be carefully managed. Use synchronization mechanisms such as locks, semaphores, or queues to ensure tests run consistently and prevent race conditions.
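The "explicit waits instead of fixed delays" practice can be sketched as a small polling helper in Python. The helper `wait_until` is hypothetical and not part of any particular framework. UI testing tools usually ship their own equivalents. The helper returns as soon as the condition holds and raises `TimeoutError` if it never does, so the test waits only as long as it needs to.

```python
import threading
import time
from typing import Callable


def wait_until(condition: Callable[[], bool], timeout: float = 5.0,
               interval: float = 0.05) -> None:
    """Poll `condition` until it returns True or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} s")
        time.sleep(interval)


if __name__ == "__main__":
    # A background "service" becomes ready after about 0.2 s.
    ready = threading.Event()
    threading.Timer(0.2, ready.set).start()
    # Instead of time.sleep(1) and hoping, wait for the actual condition.
    wait_until(ready.is_set, timeout=2.0)
    print("ready")
```

A fixed `sleep` is either too short on a slow machine, which causes a flaky failure, or too long on a fast one, which wastes time. Polling with a timeout avoids both problems.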

Implementing these strategies will help minimize the chances of instability in software testing for your project. As a result, your team will save time and resources that would otherwise be spent on fixing it.