Every software development life cycle includes a QA procedure as a non-negotiable element that ensures the software meets all technical and business requirements. The QA routine comprises a range of testing methods (regression testing, security testing, acceptance testing, performance testing, stress testing, load testing, and more) that the testing team uses to check the quality of the final product as a whole and of each component within it. However, when applied as a collection of haphazard moves, even the most reliable testing techniques, performed with top-notch testing tools, can’t produce a high-quality software application. To maximize the QA outcome, testers should have a comprehensive test strategy in place.

This article explains what a test strategy in software testing is, lists the components of a software test strategy, outlines the main approaches to testing a software product, describes the types of testing strategies, offers advice on choosing the right one, and shares best practices for developing and implementing a software testing strategy.

Defining a Testing Strategy within the Software Development Process

A testing strategy is a high-level blueprint that serves as a baseline document for thorough testing of a software solution, determining what to test and how, as well as who will do it and when. Alongside the testing strategy, one more document features in the testing phase of a software project: the test plan. What’s the difference between a test strategy and a test plan?

In a nutshell, a test strategy lives up to its name: it defines the vendor’s strategic approach to testing software products in the form of a long-term, organization-wide guideline. A test plan takes a short-term, project-based perspective, outlining a detailed roadmap for testing a specific solution.

To support successful testing outcomes and reliable software products, a test strategy should include:

- Test objectives. These are the specific goals to achieve through the testing process (as a rule, ensuring premium software quality and catching defects before release).

- The scope of testing. It includes the list of modules and functionalities to validate.

- Test approach. This covers testing types (functional and non-functional testing), techniques (manual and automated testing), levels (unit, integration, and system testing), and other options.

- Schedule and resources. This element accounts for human and financial resources to be allocated and a timeline for executing testing activities.

- The tech stack. Test environments, tools, test data, and other technological details are mentioned here.

- Roles and responsibilities. The section enumerates all stakeholders involved in testing efforts and defines what each of them will do.

- Risk assessment. Testers should be aware of potential bottlenecks and have a clear-cut plan for avoiding or at least mitigating them.

- KPIs and exit criteria. The QA team should agree on a set of metrics (such as test coverage, defect density, defect severity index, and defect leakage) to measure how effective test execution was, as well as explicit exit criteria: conditions that, once met, signal that testing is complete.
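Most of the metrics listed above are simple ratios, so a short calculation makes them concrete. The Python sketch below is illustrative only: formulas for these KPIs vary between organizations (defect leakage, for example, is sometimes computed against testing-phase defects alone), and the `QaRunStats` fields, the severity weights, and the 95% coverage threshold in `exit_criteria_met` are hypothetical values chosen for the example. Coverage is measured here as the share of requirements exercised by tests, which is only one of several ways to define test coverage.

```python
from dataclasses import dataclass, field
from typing import Dict

# Hypothetical severity weights: the more severe the defect, the heavier the weight.
SEVERITY_WEIGHTS = {"critical": 4, "major": 3, "minor": 2, "trivial": 1}


@dataclass
class QaRunStats:
    requirements_total: int
    requirements_covered: int    # requirements exercised by at least one test
    defects_in_testing: int      # defects found before release
    defects_after_release: int   # defects that escaped to production
    size_kloc: float             # code size in thousands of lines
    open_critical_defects: int = 0
    severity_counts: Dict[str, int] = field(default_factory=dict)


def requirement_coverage(s: QaRunStats) -> float:
    """Percentage of requirements exercised by at least one test."""
    return 100.0 * s.requirements_covered / s.requirements_total


def defect_density(s: QaRunStats) -> float:
    """Defects found during testing per thousand lines of code."""
    return s.defects_in_testing / s.size_kloc


def defect_leakage(s: QaRunStats) -> float:
    """Percentage of all known defects that escaped testing."""
    total = s.defects_in_testing + s.defects_after_release
    return 100.0 * s.defects_after_release / total if total else 0.0


def defect_severity_index(s: QaRunStats) -> float:
    """Weighted average severity of the defects found during testing."""
    count = sum(s.severity_counts.values())
    weighted = sum(SEVERITY_WEIGHTS[sev] * n for sev, n in s.severity_counts.items())
    return weighted / count if count else 0.0


def exit_criteria_met(s: QaRunStats, min_coverage: float = 95.0) -> bool:
    """Hypothetical exit rule: enough coverage and no open critical defects."""
    return requirement_coverage(s) >= min_coverage and s.open_critical_defects == 0


stats = QaRunStats(
    requirements_total=40,
    requirements_covered=38,
    defects_in_testing=45,
    defects_after_release=5,
    size_kloc=30.0,
    severity_counts={"critical": 2, "major": 8, "minor": 20, "trivial": 15},
)
print(requirement_coverage(stats), defect_density(stats), defect_leakage(stats))  # 95.0 1.5 10.0
print(round(defect_severity_index(stats), 2), exit_criteria_met(stats))            # 1.93 True
```

In practice, the exit criteria would be agreed on in the strategy itself, and the thresholds would reflect the project's risk tolerance rather than the arbitrary numbers used here.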

The bedrock of all strategies in software testing is the approach testers rely on.

Software Testing Approaches Exposed

What choices do QA teams have for engineering and conducting software testing?

Static vs dynamic testing

When opting for static testing, QA engineers review code and documentation without running the product they validate. In this way, defects can be pinpointed early in the SDLC before they escalate. Dynamic testing involves executing test cases across various scenarios while the application is running. It gives experts insight into real-life use cases and the product’s actual problems.
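To illustrate the difference, the Python sketch below runs both kinds of checks against the same snippet; `average` and `run` are hypothetical functions invented for the example. The static check parses the source with the standard `ast` module and flags `eval()` calls without executing anything, while the dynamic check actually calls `average` with an empty list and observes the crash. Real static analysis relies on code reviews and linters that go far beyond this single rule.

```python
import ast
from typing import List

# Hypothetical code under test: average() crashes on empty input,
# run() relies on the risky eval() built-in.
SOURCE = '''
def average(values):
    return sum(values) / len(values)

def run(cmd):
    return eval(cmd)
'''


def static_check(source: str) -> List[str]:
    """Static testing: inspect the syntax tree without running the code."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if (isinstance(node, ast.Call)
                and isinstance(node.func, ast.Name)
                and node.func.id == "eval"):
            findings.append(f"line {node.lineno}: call to eval()")
    return findings


def dynamic_check(source: str) -> List[str]:
    """Dynamic testing: execute the code with concrete inputs and observe it."""
    namespace: dict = {}
    exec(source, namespace)  # acceptable only because SOURCE is trusted demo code
    findings = []
    try:
        namespace["average"]([])
    except ZeroDivisionError:
        findings.append("average([]) raises ZeroDivisionError")
    return findings


print(static_check(SOURCE))   # ['line 6: call to eval()']
print(dynamic_check(SOURCE))  # ['average([]) raises ZeroDivisionError']
```

Note that each check finds something the other misses: the static check never notices the empty-list crash, and the dynamic check never calls `run`, so it cannot see the `eval()` risk.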

Structural vs behavioral testing

Also known as white-box testing, structural testing digs into the system’s inner organization, examining its code and architecture through branch, statement, and path coverage. Since it requires programming skills, it is often entrusted to developers. Behavioral (or black-box) testing doesn’t require knowledge of the code, so QA teams can handle it perfectly well. It focuses on the system’s external behavior, checking whether its responses match the expected results.
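The contrast is easiest to see on a single small function. In the Python sketch below, `shipping_fee` is a hypothetical unit under test. The black-box cases are derived from its specification alone, while the white-box cases are chosen by reading the code so that every branch of both `if` statements executes at least once.

```python
from typing import List, Tuple


def shipping_fee(order_total: float, is_member: bool) -> float:
    """Hypothetical unit under test: members and orders of 50 or more ship free."""
    if is_member:
        return 0.0
    if order_total >= 50:
        return 0.0
    return 5.0


Case = Tuple[Tuple[float, bool], float]

# Black-box (behavioral): derived from the specification alone,
# without looking at how shipping_fee is written.
BLACK_BOX_CASES: List[Case] = [
    ((20.0, False), 5.0),   # regular customer, small order pays for shipping
    ((80.0, False), 0.0),   # large order ships free
    ((20.0, True), 0.0),    # member ships free
]

# White-box (structural): chosen by reading the code so that each
# branch of both if statements is executed at least once.
WHITE_BOX_CASES: List[Case] = [
    ((10.0, True), 0.0),    # is_member True -> first return
    ((50.0, False), 0.0),   # is_member False, order_total >= 50 True -> second return
    ((49.99, False), 5.0),  # both conditions False -> final return
]


def failing_cases(cases: List[Case]) -> List[Case]:
    """Return the cases whose actual result differs from the expected one."""
    return [(args, expected) for args, expected in cases
            if shipping_fee(*args) != expected]


print(failing_cases(BLACK_BOX_CASES), failing_cases(WHITE_BOX_CASES))  # [] []
```

Both sets may end up exercising similar paths; what differs is where the test cases come from. Coverage tools automate the white-box bookkeeping that is done by hand in the comments above.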

Preventive vs reactive testing

Preventive testing aims to forestall defects before they occur, helping build a robust software product. Reactive testing kicks in when the solution runs into problems during the integration stage or after it goes into real-world use.

In fact, you don’t have to choose only one approach. A balanced combination of different approaches can enhance the efficiency of the overall testing strategy.

Zooming in on the Types of Software Testing Strategies

All testing strategies can be broadly categorized into the following types.

Requirement-based testing

As the name suggests, this strategy starts from the system requirements. Test scripts are written to validate each functional and non-functional aspect of the product, which should operate according to specifications. In addition, this testing type helps verify that the requirements themselves are clear, consistent, and complete.
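A common way to put requirement-based testing into practice is a requirements traceability matrix that maps each requirement to the tests that validate it. The minimal Python sketch below uses hypothetical requirement IDs and test names; it builds the matrix, rejects tests that point at undefined requirements, and lists requirements that no test covers yet.

```python
from typing import Dict, List, Set

# Hypothetical requirements: two functional, one non-functional.
REQUIREMENTS: Dict[str, str] = {
    "REQ-1": "User can log in with valid credentials",
    "REQ-2": "Account locks after 5 failed login attempts",
    "REQ-3": "Login page loads within 2 seconds",
}

# Hypothetical test scripts and the requirements each one validates.
TESTS: Dict[str, Set[str]] = {
    "login_success": {"REQ-1"},
    "login_lockout": {"REQ-1", "REQ-2"},
}


def traceability_matrix(tests: Dict[str, Set[str]]) -> Dict[str, List[str]]:
    """Map every requirement to the (alphabetically sorted) tests that validate it."""
    matrix: Dict[str, List[str]] = {req: [] for req in REQUIREMENTS}
    for test, reqs in sorted(tests.items()):
        for req in reqs:
            if req not in matrix:
                # A reference to an undefined requirement signals inconsistent specs.
                raise ValueError(f"{test} references unknown requirement {req}")
            matrix[req].append(test)
    return matrix


def uncovered(matrix: Dict[str, List[str]]) -> List[str]:
    """Requirements that no test validates yet."""
    return [req for req, tests in matrix.items() if not tests]


matrix = traceability_matrix(TESTS)
print(matrix)
print(uncovered(matrix))  # ['REQ-3']
```

Here the gap is the non-functional performance requirement, which is exactly the kind of requirement that tends to slip through when test scripts are written from functional specs only.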

Risk-based testing

This strategy takes into account the likelihood and severity of potential issues. Testers rank possible failures by how critical the affected areas, functionalities, and modules are from a technical and business perspective, and use the resulting risk analysis to focus their main efforts on the highest-risk issues first.
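A widely used way to quantify this is a risk score equal to likelihood multiplied by impact. The Python sketch below assumes a 1 to 5 scale for both factors and a hypothetical cut-off of 12 for areas that must be tested first; the risk register itself is invented for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class RiskItem:
    area: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (cosmetic) to 5 (business-critical)

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def prioritize(items: List[RiskItem], threshold: int = 12) -> Tuple[List[RiskItem], List[str]]:
    """Rank areas by risk score; areas at or above the threshold are tested first."""
    ranked = sorted(items, key=lambda r: r.score, reverse=True)
    test_first = [r.area for r in ranked if r.score >= threshold]
    return ranked, test_first


# Hypothetical risk register for an e-commerce application.
register = [
    RiskItem("Search filters", likelihood=3, impact=2),
    RiskItem("Payment processing", likelihood=4, impact=5),
    RiskItem("Avatar upload", likelihood=2, impact=1),
    RiskItem("Login", likelihood=3, impact=5),
]

ranked, test_first = prioritize(register)
for item in ranked:
    print(f"{item.score:>2}  {item.area}")
print(test_first)  # ['Payment processing', 'Login']
```

Lower-scoring areas are not ignored; they simply receive lighter coverage or are scheduled later, which is the core trade-off of risk-based testing.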

Data-driven testing

Here, testers separate test logic from test data, storing the latter in external sources such as databases, spreadsheets, or CSV files. The advantage of this strategy is that QA engineers can reuse the same test script many times, feeding it different sets of input data and expected outputs to cover new scenarios.
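The Python sketch below shows the idea in miniature: the test logic in `run_data_driven` is written once, and every row of CSV data becomes a separate test case. The `apply_discount` function and the discount codes are hypothetical, and an in-memory string stands in for the external CSV file to keep the example self-contained.

```python
import csv
import io
import math
from typing import Dict, List

# In a real project this data would live in an external CSV file, spreadsheet,
# or database; an in-memory string keeps the sketch self-contained.
TEST_DATA_CSV = """price,code,expected
100.00,NONE,100.00
100.00,SAVE10,90.00
59.90,SAVE10,53.91
20.00,HALF,10.00
"""

# Hypothetical system under test.
DISCOUNTS = {"NONE": 0.0, "SAVE10": 0.10, "HALF": 0.50}


def apply_discount(price: float, code: str) -> float:
    return round(price * (1 - DISCOUNTS[code]), 2)


def run_data_driven(csv_text: str) -> List[Dict[str, str]]:
    """Test logic written once, executed for every data row; returns failing rows."""
    failures = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        actual = apply_discount(float(row["price"]), row["code"])
        if not math.isclose(actual, float(row["expected"]), abs_tol=0.005):
            failures.append(row)
    return failures


print(run_data_driven(TEST_DATA_CSV))  # []
```

Covering a new scenario means adding a row to the data file rather than writing new test code, which is what makes this strategy cheap to extend.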

Exploratory testing

During exploratory testing, experts design and execute tests simultaneously while learning about the product they validate. The process consists of time-boxed sessions, each with a specific goal (a test charter) focused on one feature. As testers interact with the solution, they learn its functionalities, identify potential trouble spots, and design tests on the fly to validate the area of concern.

Test automation

This strategy relies on automated testing at scale. It aims to optimize and accelerate the QA routine by automating repetitive tasks. However, test automation has certain limitations, since some types of checks (for instance, user acceptance testing) are more effective when performed manually by human experts.
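As a minimal sketch of automating a repetitive check, the Python example below packages a few regression tests for a hypothetical `slugify` function with the standard `unittest` module and runs them programmatically, so the same suite could be triggered on every build. The function and the test cases are invented for illustration.

```python
import unittest


def slugify(title: str) -> str:
    """Hypothetical function under test: turns a title into a URL slug."""
    return "-".join(title.lower().split())


class SlugifyRegressionTests(unittest.TestCase):
    """Repetitive checks that are cheap to rerun automatically on every build."""

    def test_basic_title(self):
        self.assertEqual(slugify("Hello World"), "hello-world")

    def test_collapses_extra_whitespace(self):
        self.assertEqual(slugify("  Many   spaces "), "many-spaces")

    def test_empty_title(self):
        self.assertEqual(slugify(""), "")


def run_regression_suite() -> unittest.TestResult:
    loader = unittest.TestLoader()
    suite = loader.loadTestsFromTestCase(SlugifyRegressionTests)
    return unittest.TextTestRunner(verbosity=1).run(suite)


if __name__ == "__main__":
    result = run_regression_suite()
    # A CI job would typically fail the build when this is False.
    print("suite passed:", result.wasSuccessful())
```

Checks like these are good automation candidates because their expected results are unambiguous; judging whether a flow feels intuitive to a real user is not.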

Usability and user experience-focused testing

This testing strategy relies primarily on manual testing. It evaluates how quickly and efficiently users complete certain tasks (usability testing) and examines a user’s interaction with the software application with a focus on their emotions (UX-focused testing). The collected metrics (such as time on task and success rate) and user feedback are then analyzed to detect pain points and improve the product.
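Success rate and time on task are straightforward to compute once session data is collected. The Python sketch below assumes hypothetical participant data for a single task and, as one common convention, measures time on task for successful attempts only.

```python
from dataclasses import dataclass
from statistics import mean, median
from typing import Dict, List, Optional


@dataclass
class TaskAttempt:
    participant: str
    completed: bool
    seconds: float  # time spent on the task


def summarize(attempts: List[TaskAttempt]) -> Dict[str, Optional[float]]:
    """Success rate (%) plus mean and median time on task for successful attempts."""
    successes = [a for a in attempts if a.completed]
    times = [a.seconds for a in successes]
    return {
        "success_rate": 100.0 * len(successes) / len(attempts),
        "mean_time_on_task": mean(times) if times else None,
        "median_time_on_task": median(times) if times else None,
    }


# Hypothetical session data for a "complete checkout" task.
attempts = [
    TaskAttempt("P1", True, 42.0),
    TaskAttempt("P2", True, 58.0),
    TaskAttempt("P3", False, 120.0),
    TaskAttempt("P4", True, 35.0),
    TaskAttempt("P5", True, 65.0),
]
print(summarize(attempts))
# {'success_rate': 80.0, 'mean_time_on_task': 50.0, 'median_time_on_task': 50.0}
```

The numbers only point to where users struggle; the qualitative feedback gathered in the same sessions usually explains why.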

Early and continuous testing

This strategy moves testing to the earliest stages of the SDLC and makes it an integral part of the CI/CD pipeline. In this way, it becomes the core of shift-left testing, in which code reviews, unit testing, and static analysis are applied from the very beginning of the development process to pinpoint and prevent defects without delay.

Since there is a great gamut of strategies to choose from, opting for the most effective one is often a tough row to hoe.

How to Choose the Right Software Testing Strategy

You should consider several key factors when you choose a testing strategy.

- Test scope and objectives. You should understand what software features and functionalities the testing should cover and what the ultimate goal of the procedure is.

- Project requirements and constraints. These include the type of product submitted for validation (mobile apps, e-commerce websites, CRM systems, you name it), the specific expectations it should meet (for instance, cross-browser or cross-device compatibility), project size (a simple project needs a minimal number of tests, while a complex one requires a combination of multiple checks), and industry context (solutions developed for different verticals must undergo different tests).

- Development methodology. Waterfall and Agile development methodologies call for different checks.

- Testing approach, levels, and types. Each strategy works best with a particular set of testing approaches, levels, and types.

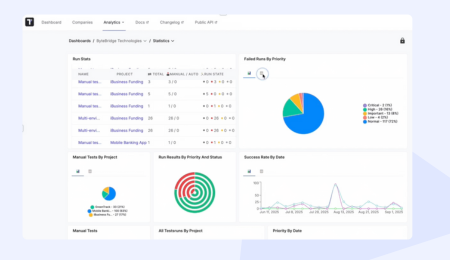

- Tools and environments. The same is true here: different strategies call for different tools and environments. However, a comprehensive testing platform built for a wide range of checks can become a key component of any testing strategy.

- Available budget. Some testing types (such as system testing, performance testing, user acceptance testing, and manual regression testing) are more expensive to conduct, so you should understand whether you can afford them.

- Testing team size and skillset. The human resources at your disposal and their competencies directly affect which strategy you can choose.

- Security and regulations. In some industries (like healthcare or banking), the data that software products handle is subject to stringent compliance rules, so your testing strategy should account for them.

- Flexibility. The testing strategy should be adaptable enough to be tailored as project requirements change or market conditions evolve.

Choosing a robust strategy is vital, but even the best strategy will do you no good if you can’t implement it properly.

The Best Practices for Developing and Implementing a Testing Strategy

Here are some tips on how to create an effective software testing strategy and then implement it correctly.

An early start is a must

QA activities should begin at the earliest possible stage. The sooner you start validating software components and functionalities, the fewer headaches you will have detecting issues and eliminating bugs.

Align with business outcomes

Remember that the perfect functioning of a software product is crucial, but it’s not an end in itself. Each check should aim to achieve some business goal and support user needs.

Automate wisely

All-encompassing automation is a coveted dream for most testers, but like many dreams, it is hardly attainable. You should automate the most critical and high-value areas first. Besides, some tests can’t be delegated to machines because they require human oversight and intuition.

Update documentation regularly

Approaches and technologies evolve constantly, and business priorities and customer preferences shift frequently. You should update your documentation every time a change occurs to keep the strategy in line with the latest technological trends and volatile market conditions.

Foster collaboration

Testing is never an effort for a single person. Moreover, it is not the province of the QA team only. Developers, project managers, business stakeholders, and users should be involved in validating a software application and kept abreast of the progress of testing and its outcomes. Collaboration and test management tools will help you avoid departmental silos and let everybody stay on the same page.

Gauge efficiency

Using KPIs to measure testing efficiency is a natural thing to do. Yet not all KPIs are equally important. You should prioritize those that reflect customer impact and business goal attainment. After all, you want the solution to satisfy users and bring income to its owners, not just display excellent metrics that have little practical value.

Drawing the Bottom Line

A software testing strategy is a comprehensive document containing high-level guidelines for conducting software checks. Typically, such a strategy includes test objectives, scope, and approach, the resources (human, financial, and technological) required for performing tests, the anticipated timeline, the QA team’s roles and responsibilities, risk mitigation measures, and KPIs and exit criteria to measure the outcomes.

Testing strategies are classified into requirement-based, risk-based, data-driven, exploratory, automated, UX-focused, and continuous testing types. To opt for the strategy that dovetails with your particular use case, you should pay attention to project specifications and constraints, the toolset and personnel skills it will require, the budget you can allocate, compliance rules, and flexibility, enabling you to attune it to ever-shifting project needs.

To maximize the efficiency of a testing strategy, you should introduce it early in the SDLC, align it with business goals, automate wisely, update it regularly, promote collaboration between stakeholders, track KPIs that reflect customer impact and business value, and leverage high-end software testing tools.