Generative AI is changing how QA teams perform quality assurance. Manual testing that once took weeks can now take hours, and test case generation that used to require human effort now runs largely on autopilot. Adding generative AI to testing is removing bottlenecks that have slowed development teams for decades.

The applications of generative AI in software testing go well beyond simple automation. These tools produce full test suites, generate realistic test data, predict potential issues before they surface, and update tests automatically as applications evolve. The result? Faster release cycles, better software quality, and QA teams focused on strategy rather than routine work.

The value proposition is clear: AI-based QA testing can reduce manual effort by up to 80%, improve test coverage across complex software systems, and keep quality standards in step with product growth. Teams using generative AI in test automation report faster time-to-market and fewer production bugs.

What Is Generative AI in Testing and How to Use It

Generative AI represents a paradigm shift in software testing. Traditional tools execute pre-written scripts, while generative AI creates new test cases, adapts to application changes, and learns from previous test results to maximize future coverage.

The fundamental difference is intelligence. Legacy automation follows rigid instructions. Generative AI understands context, reads user stories, and creates test cases that mimic real user behavior. This shifts testing from a reactive process to a proactive approach to quality.

Adapting Your QA Strategy and Workflow to Gen AI

Implementing generative AI in your testing strategy requires a systematic approach that transforms existing QA processes while maintaining quality standards.

1️⃣ Generate New Tests

Start with your highest-priority user flows. AI analyzes user stories, API documentation, and application usage to create end-to-end test cases. The process begins with natural language requirements and produces executable test cases in multiple frameworks and languages.

AI models trained on software testing processes understand common patterns, edge cases, and failure modes. They generate tests not just for happy paths but also for error cases, boundary values, and integration points that manual test design often overlooks.
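To make "boundary values" concrete, here is a minimal, non-AI sketch of classic boundary-value analysis: the set of cases a generator should produce for a numeric range. The requirement ("quantity must be between 1 and 100") and the names `BoundaryCase` and `boundary_cases` are hypothetical illustrations, not part of any tool's API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class BoundaryCase:
    value: int
    expected_valid: bool  # whether the system under test should accept the value


def boundary_cases(min_value: int, max_value: int) -> list[BoundaryCase]:
    """Boundary-value analysis for an inclusive integer range [min_value, max_value]."""
    if min_value > max_value:
        raise ValueError("min_value must not exceed max_value")
    # Just outside, on, and just inside each boundary.
    candidates = [min_value - 1, min_value, min_value + 1,
                  max_value - 1, max_value, max_value + 1]
    seen: set[int] = set()
    cases: list[BoundaryCase] = []
    for value in candidates:
        if value in seen:  # narrow ranges produce overlapping candidates
            continue
        seen.add(value)
        cases.append(BoundaryCase(value, min_value <= value <= max_value))
    return cases


# Hypothetical requirement: "quantity must be between 1 and 100".
for case in boundary_cases(1, 100):
    print(case.value, "valid" if case.expected_valid else "invalid")
```

Each case would become one test that submits the value and asserts acceptance or rejection; an AI generator adds value by inferring the ranges from requirements text rather than by changing this underlying technique.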

2️⃣ Improve Existing Tests

Legacy test suites contain valuable business logic but suffer from heavy maintenance and flaky runs. Generative AI inspects existing tests, identifies redundancy, and suggests optimizations that improve reliability and reduce execution time.
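One widely used heuristic for spotting flaky tests is that the same test both passed and failed against the same code revision. The sketch below applies that rule to run history; it is a simplified stand-in, not how any particular product detects flakiness, and the `RunRecord` shape is an assumption (real data would come from your CI history).

```python
from __future__ import annotations

from collections import defaultdict
from typing import Iterable, NamedTuple


class RunRecord(NamedTuple):
    test_name: str
    commit: str   # code revision the run executed against
    passed: bool


def find_flaky_tests(runs: Iterable[RunRecord]) -> set[str]:
    """Flag tests that both passed and failed on the same commit.

    Identical code producing different outcomes points to nondeterminism
    (timing, shared state, test ordering) rather than a real regression.
    """
    outcomes: dict[tuple[str, str], set[bool]] = defaultdict(set)
    for run in runs:
        outcomes[(run.test_name, run.commit)].add(run.passed)
    # A set of booleans has size 2 only when both True and False were seen.
    return {name for (name, _commit), results in outcomes.items() if len(results) == 2}
```

A test that fails on one commit and passes on a later one is not flagged, because that pattern is consistent with a genuine bug being fixed.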

AI inspects test results, identifies patterns of test failure, and suggests structural improvements. Tests that fail because of timing issues get dynamic waits. Tests with brittle selectors get more resilient element-location strategies.
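A "dynamic wait" replaces a fixed sleep with polling: the test continues as soon as a condition holds and fails only after a timeout. Frameworks ship their own versions (for example, Selenium's `WebDriverWait`, and Playwright waits automatically before actions); the framework-agnostic `wait_until` helper below is a hypothetical sketch of the same idea.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def wait_until(condition: Callable[[], T], timeout: float = 10.0, interval: float = 0.1) -> T:
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass.

    Unlike a fixed time.sleep(), this returns as soon as the condition holds,
    so fast runs stay fast and slow runs get extra time before failing.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(interval)
```

In a UI test, `condition` would typically be a lambda that checks whether an element is visible or a request has completed.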

3️⃣ Enable Self-Healing Tests

Self-healing tests represent the future of test automation. When application elements change (new IDs, new class names, restructured layouts), AI-based tests adapt automatically. The system maintains a model of the application's structure and updates test scripts when it detects interface changes.

This capability largely eliminates the maintenance burden that typically consumes 30–40% of QA team capacity. Tests keep running as applications evolve, providing continuous validation without constant human oversight.
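Production self-healing engines use richer application models (DOM similarity scoring, learned element signatures). As a deliberately simplified sketch, the hypothetical `HealingLocator` below keeps an ordered list of fallback locators, falls back when the preferred one stops matching, and promotes whichever locator worked. The page is modeled as a list of attribute dictionaries, which is an assumption standing in for a real DOM.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Element = Dict[str, str]   # simplified stand-in for a DOM node's attributes
Locator = Tuple[str, str]  # (attribute name, expected value)


@dataclass
class HealingLocator:
    name: str
    candidates: List[Locator]  # ordered: preferred locator first
    healed_log: List[str] = field(default_factory=list)

    def find(self, page: List[Element]) -> Element:
        for index, (attr, value) in enumerate(self.candidates):
            match = next((el for el in page if el.get(attr) == value), None)
            if match is None:
                continue
            if index > 0:
                # Promote the working locator so future runs try it first,
                # and record the heal so a human can review it later.
                self.candidates.insert(0, self.candidates.pop(index))
                self.healed_log.append(f"{self.name}: healed via {attr}={value!r}")
            return match
        raise LookupError(f"{self.name}: no candidate locator matched")
```

Logging every heal matters: a silent fallback could mask a real regression, so healed locators should feed into the human review step.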

4️⃣ Check Coverage

AI-powered coverage analysis goes beyond typical code coverage statistics. It tracks patterns in user activity, identifies untested workflows, and reports functional coverage gaps. The system recommends additional test cases based on real usage patterns and potential risk areas.
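The core of usage-based coverage analysis can be sketched as a comparison between flows users actually take and flows the test suite exercises, ranked by traffic. This assumes sessions are already reduced to ordered lists of screen or action names; exact-sequence matching is a simplification, since real tools may cluster similar paths. The function name is hypothetical.

```python
from collections import Counter
from typing import Iterable, List, Sequence, Tuple

Flow = Tuple[str, ...]  # ordered sequence of screens or actions


def untested_flows(sessions: Iterable[Sequence[str]],
                   tested: Iterable[Sequence[str]]) -> List[Tuple[Flow, int]]:
    """Return user flows seen in real usage but absent from the test suite,
    most frequent first."""
    usage = Counter(tuple(session) for session in sessions)
    covered = {tuple(flow) for flow in tested}
    gaps = [(flow, count) for flow, count in usage.items() if flow not in covered]
    # Highest-traffic gaps are the riskiest to leave untested.
    return sorted(gaps, key=lambda item: (-item[1], item[0]))
```

The top entries of the result are natural candidates for the next round of generated tests.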

Steps to Implement AI for QA Testing

- Identify Repetitive and High-Priority Tests: Start with test cases that are repetitive or carry high business value. These usually include login flows, payments, and core feature functionality.

- Train AI on Test Data and App Behaviors: Feed your AI engine historical test data, application logs, and user interactions. The more relevant data it receives, the better the AI-synthesized tests.

- Design Automated Test Cases: Use tools like Testomat.io or ACCELQ Autopilot to translate business requirements into testable scripts. The AI handles the technical work while your team stays focused on business logic.

- Execute Tests in CI/CD Pipelines: Integrate AI-designed tests into your existing deployment pipelines. The tests run automatically whenever code changes and provide real-time feedback on quality impact.

- Check AI-Generated Outcomes and Improve: Periodically review AI results, verify test validity, and provide feedback to improve future generation. This creates a feedback loop that makes the AI better over time.
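For the CI/CD step, the feedback usually takes the form of a quality gate: the pipeline reads the test report and fails the build if anything failed. Many runners can emit JUnit-style XML (for example, pytest with `--junitxml`), so the minimal sketch below assumes that format; `summarize_junit` and `gate` are hypothetical names.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET


def summarize_junit(xml_text: str) -> dict[str, list[str]]:
    """Group test cases in a JUnit-style XML report by outcome."""
    root = ET.fromstring(xml_text)
    summary: dict[str, list[str]] = {"passed": [], "failed": [], "skipped": []}
    # iter() walks the whole tree, so <testsuites> and <testsuite> roots both work.
    for case in root.iter("testcase"):
        name = f"{case.get('classname', '')}.{case.get('name', '')}".lstrip(".")
        if case.find("failure") is not None or case.find("error") is not None:
            summary["failed"].append(name)
        elif case.find("skipped") is not None:
            summary["skipped"].append(name)
        else:
            summary["passed"].append(name)
    return summary


def gate(xml_text: str) -> int:
    """Print a short report and return a process exit code (non-zero fails the build)."""
    summary = summarize_junit(xml_text)
    for name in summary["failed"]:
        print(f"FAILED: {name}")
    print(f"{len(summary['passed'])} passed, {len(summary['failed'])} failed, "
          f"{len(summary['skipped'])} skipped")
    return 1 if summary["failed"] else 0
```

A pipeline step would read the report file, call `gate()`, and pass the result to `sys.exit()` so the CI system marks the stage as failed.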

Why Use Generative AI in Software Testing?

The benefits of using generative AI in automated software testing extend across every aspect of the QA process.

| Benefit | Why It Matters |
| --- | --- |
| Efficiency | Tests that took days now take minutes. Teams see 70–80% faster cycles. |
| Accuracy | AI reduces human error, covers edge cases, and ensures consistent results. |
| Scalability | Runs thousands of scenarios across platforms without slowing down. |
| Integration | Fits into CI/CD, trackers, and workflows with no disruption. |
| Learning | Reveals app behavior, flags risks, and improves test strategies. |

Top 5 Generative AI Testing Tools

As artificial intelligence continues to evolve, generative AI has emerged as a groundbreaking technology that can create content, generate code, and simulate human-like interactions. Testing platforms now apply these capabilities to quality assurance, but the quality and reliability of what they produce vary, so choosing an effective tool is essential for developers and organizations.

Let’s explore the top five generative AI testing tools that are setting the standard for AI-powered quality assurance. These tools streamline the testing process and provide valuable insight into application behavior and test outcomes.

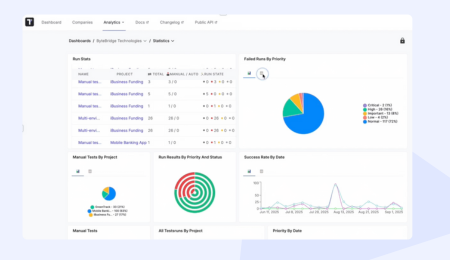

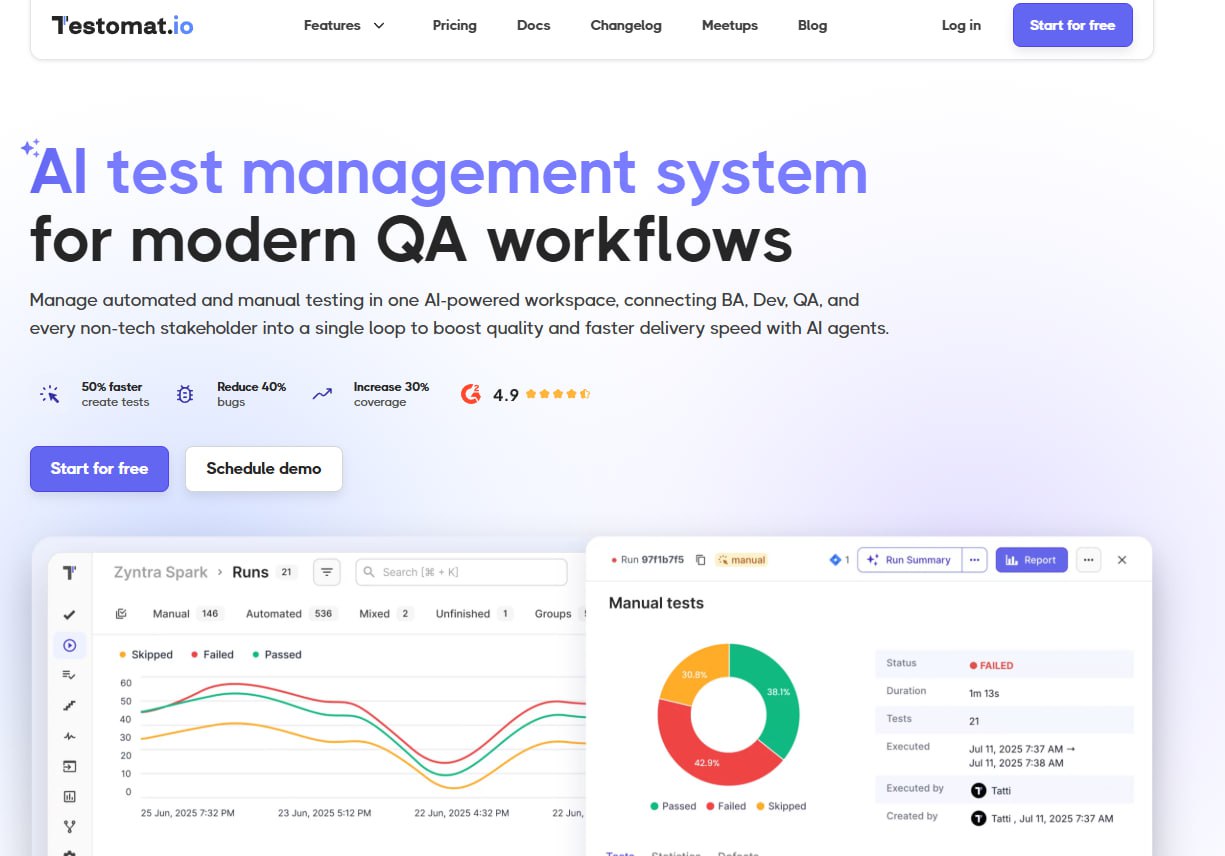

1. Testomat.io

Testomat.io is the market leader in AI-powered test orchestration, bringing test case generation, execution management, and analytics together in a single platform. The platform applies generative AI across the entire QA cycle. Key Features:

- AI-Powered Test Case Generation: Transform user stories, Jira issues, or plain text requirements into executable test cases. The AI is context-aware and generates realistic test scenarios.

- Flaky Test Detection and Auto-Suggestions: Machine learning algorithms identify unstable tests and even recommend fixes. The platform learns from run patterns to improve test stability.

- Smart Analytics and Reporting: Real-time dashboards provide insight into test coverage, run trends, and quality metrics. AI-powered analytics uncover areas requiring additional testing focus.

- Seamless CI/CD & Jira Integration: Out-of-the-box integrations with top development tools ensure AI-generated tests integrate easily into existing workflows.

- Multi-Framework and Language Support: Whether your team uses Selenium, Playwright, or API testing frameworks, Testomat.io provides corresponding test implementations.

The platform supports 100K+ tests per project with enterprise-grade stability, making it suitable for companies of any size. Teams see significant reductions in manual testing effort without sacrificing test coverage.

2. ACCELQ Autopilot

ACCELQ Autopilot is a GenAI-powered automation platform that discovers business processes and generates standalone tests without requiring in-depth technical expertise.

- Discover Scenarios: AI learns application behavior to auto-generate end-to-end test scenarios based on real user interactions.

- QGPT Logic Builder: Converts business rules written in natural language into automation logic, closing the gap between business requirements and technical implementation.

- AI Designer: Creates modular, reusable test components that can be combined into complex test workflows.

- Test Case Generator: Automatically generates both test cases and relevant test data, ensuring full validation coverage.

- Autonomous Healing: Tests evolve automatically as application interfaces change, preserving test integrity without manual maintenance.

- Logic Insights: Analyzes patterns in test execution and suggests optimizations for improved reliability and performance.

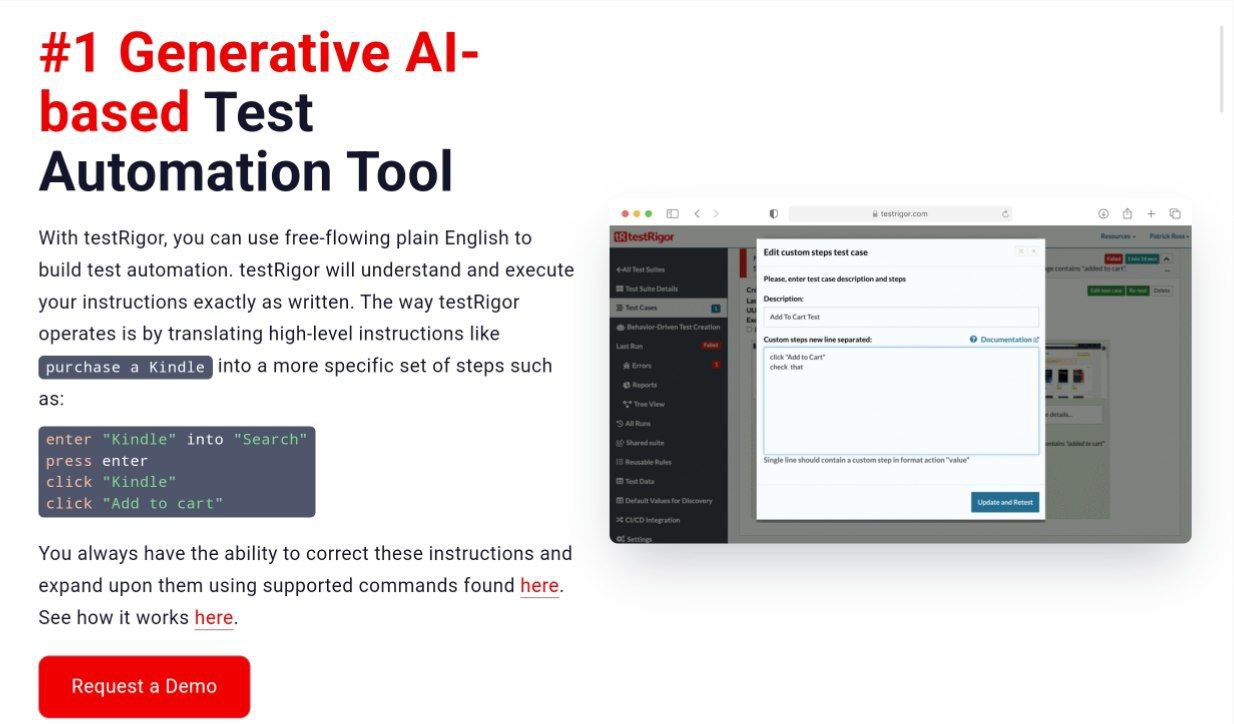

3. TestRigor

TestRigor is a generative AI test automation tool for web applications with a strong focus on form-based testing.

- Intent Understanding: The AI understands user intent without requiring strict syntax, so non-technical team members can define tests.

- Step-by-Step Editor: Provides visual workflow editing for automation, bridging the gap between manual test cases and automated execution.

- User Story Upload: Generates test cases directly from uploaded user stories, enabling traceability from requirements to verification.

- Chat Interface: Allows natural language interaction for test case refinement and updating.

4. TestCollab

TestCollab is an AI automation tool that bridges the gap between natural language requirements and executable test scripts.

- Plain English to Script Conversion: Translates business requirements in natural language into executable automation code.

- Auto-Healing Scripts: Automatically adjusts test scripts for minor application changes, reducing maintenance effort.

- Parallel Execution: Executes hundreds of tests simultaneously, reducing the total test execution time significantly.

- Hands-Off User Simulation: Creates realistic user simulations without requiring extensive technical specifications.

- Continuous Updates: Keeps tests current with automatic updates that prevent testing downtime.

5. LambdaTest KaneAI

LambdaTest KaneAI leverages large language models (LLMs) to provide end-to-end test generation, debugging, and evolution.

- Multi-Language Code Export: Exports test scripts in multiple programming languages and frameworks to cater to heterogeneous technical environments.

- Intelligent Test Planner: Creates high-level automation plans based on application analysis and business requirements.

- Comprehensive Integrations: Pre-built integrations with Jira, Slack, GitHub, and Google Sheets for seamless workflow integration.

- Smart “Show-Me” Mode: Facilitates natural language test development through conversational interfaces.

- Visual Test Reporting: Provides detailed analytics and project insights through visual dashboards and reporting features.

Best Practices for Generative AI in Test Automation

Successful AI deployment in software testing requires strategic planning and a phased rollout rather than wholesale replacement of existing processes.

✅ Begin Small with High-Impact Paths

Begin AI deployment with your most important user flows and business-critical features. This delivers value quickly while letting teams learn what the AI can do without jeopardizing overall test coverage.

✅ Leverage Human QA Knowledge + AI

The optimal approach combines AI generation with human validation. QA professionals provide context, business sense, and analytical judgment that AI cannot yet match. The hybrid approach gets the most out of both AI speed and human accuracy.

✅ Ensure Test Data Quality

AI systems require high-quality training data to generate reliable tests. Invest in well-designed, clean test data and follow data quality standards. Low-quality input data produces unreliable AI results and low confidence in automatically generated tests.
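Basic data quality checks can run automatically before test data is handed to an AI tool or a test run. The sketch below flags missing required fields, duplicate IDs, and malformed emails; the field names, the rules, and the deliberately loose email pattern are all illustrative assumptions, and `validate_records` is a hypothetical helper.

```python
from __future__ import annotations

import re

# Deliberately loose: something@something.tld, no whitespace.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate_records(records: list[dict], required: tuple[str, ...] = ("id", "email")) -> list[str]:
    """Return human-readable problems found in a list of test data records."""
    problems: list[str] = []
    seen_ids: set = set()
    for i, rec in enumerate(records):
        for fld in required:
            if rec.get(fld) in (None, ""):
                problems.append(f"record {i}: missing {fld}")
        rid = rec.get("id")
        if rid not in (None, ""):
            if rid in seen_ids:
                problems.append(f"record {i}: duplicate id {rid!r}")
            seen_ids.add(rid)
        email = rec.get("email")
        if email and not EMAIL_RE.match(email):
            problems.append(f"record {i}: malformed email {email!r}")
    return problems
```

Running a check like this as a pipeline step keeps bad fixtures from silently degrading generated tests.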

✅ Periodically Review AI-Generated Scripts

Establish procedures to review AI-generated tests. Scan for redundancy, verify business logic, and ensure tests comply with current requirements. AI systems learn from feedback, so human review is important for future success.
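Part of the redundancy scan can be automated. This minimal sketch, using a hypothetical `find_duplicate_tests` helper, groups tests whose steps are identical after normalizing case, whitespace, and trailing periods. It only catches near-verbatim duplicates; semantically equivalent tests phrased differently still need human review.

```python
from __future__ import annotations

from collections import defaultdict


def normalize(step: str) -> str:
    """Lowercase, collapse whitespace, and drop a trailing period."""
    return " ".join(step.lower().split()).rstrip(".")


def find_duplicate_tests(tests: dict[str, list[str]]) -> list[list[str]]:
    """Return groups of test names whose normalized steps are identical."""
    groups: dict[tuple[str, ...], list[str]] = defaultdict(list)
    for name, steps in tests.items():
        groups[tuple(normalize(s) for s in steps)].append(name)
    return [sorted(names) for names in groups.values() if len(names) > 1]
```

A typical hit is an AI-generated test that restates a manually written one, which is exactly what a reviewer would want to merge or delete.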

✅ Build Feedback Loops

Create mechanisms for QA teams to give feedback on AI performance. When AI-generated tests fail or miss important scenarios, feed that information back into the system to improve future generation quality.

✅ Monitor Test Coverage Gaps

Use AI analysis to identify areas where test coverage remains inadequate. AI systems excel at discovering gaps in traditional testing approaches and highlighting areas that require additional focus.

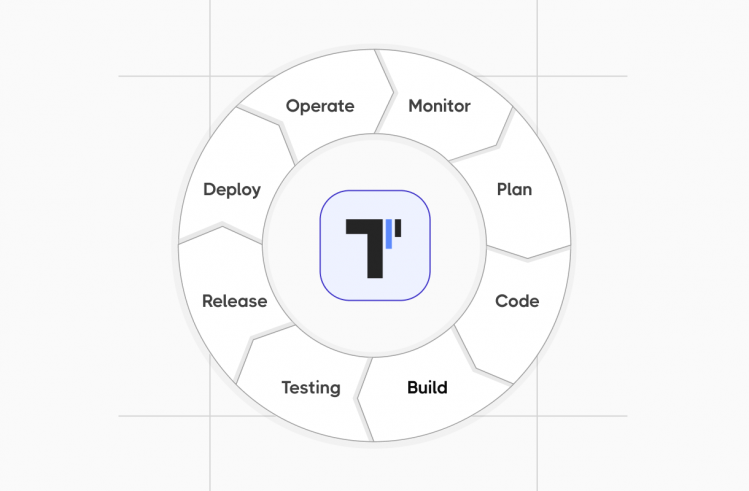

The Future of Generative AI in Quality Assurance

Software development is moving toward AI-native practices, where generative models handle routine tasks and people focus on strategy, innovation, and complex problem-solving. QA teams that become proficient in generative AI for software testing are at the forefront of this shift.

Machine learning and AI-based testing will keep advancing, with likely future developments including more sophisticated natural language processing, computer vision for visual testing, and generative adversarial networks for advanced test data generation.

Testing itself will become smarter, adapting to specific needs and providing broader coverage with minimal human intervention.

Conclusion

Generative AI transforms QA testing by reducing manual effort, improving accuracy, and shortening release cycles. The technology addresses essential challenges for organizations of all sizes, from startups struggling to organize their tests to large enterprises that need extreme scale and reliability.

The future belongs to companies that implement generative AI strategically. Start with key paths, combine AI efficiency with human judgment, and design feedback mechanisms that refine performance over time. The cost of learning and implementing generative AI for test automation pays off in reduced manual testing effort, improved software quality, and faster delivery of the features that matter to users.

Success in modern software development means using AI not as a replacement for human capability but as a multiplier of team capability. The question is not whether to adopt generative AI in software testing, but how quickly your team can adopt and apply these tools to gain a competitive advantage in increasingly AI-driven development workflows.

Frequently asked questions

How does generative AI improve regression testing?

Generative artificial intelligence enhances regression testing by automatically creating and updating test cases when code changes occur. Instead of relying only on traditional testing methods, AI analyzes historical data and adapts existing scripts. This ensures continuous coverage, reduces maintenance, and keeps tests aligned with evolving software.

Can generative AI handle exploratory testing?

Yes. While exploratory testing relies heavily on human insight, AI tools can suggest areas to explore by analyzing patterns in large amounts of data and user behavior. Manual testers remain critical, but generative AI acts as a guide, pointing QA teams toward risk-prone areas for deeper investigation.

How does AI work with vast amounts of data in testing?

AI models thrive on vast amounts of data. In QA, they process historical data from defect logs, test executions, and production issues. This data powers smarter test optimization, generates edge cases, and predicts failure points. The result is higher software reliability with less manual effort.
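As a deliberately simple statistical stand-in for the predictive models described above (not how any specific tool works), the sketch below ranks modules by historical failure rate using add-one (Laplace) smoothing, so a module with very few runs does not score exactly 0 or 1. The `(module, failed)` history format and the `rank_failure_risk` name are assumptions.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def rank_failure_risk(history: Iterable[Tuple[str, bool]]) -> List[Tuple[str, float]]:
    """Rank modules by smoothed failure rate, highest risk first.

    `history` holds one (module, failed) pair per test execution.
    """
    runs: Counter = Counter()
    fails: Counter = Counter()
    for module, failed in history:
        runs[module] += 1
        if failed:
            fails[module] += 1
    # Add-one smoothing: (failures + 1) / (runs + 2).
    scores = {module: (fails[module] + 1) / (runs[module] + 2) for module in runs}
    return sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
```

Real systems add more signals (recent code churn, defect severity, production incidents), but the output is used the same way: focus test generation and review on the top-ranked areas.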

What role does code completion play in generative AI testing?

Some AI tools extend beyond testing into code completion, suggesting fixes for test scripts or even production code. This speeds up test design, lowers the barrier for non-technical stakeholders, and allows QA teams to move faster without sacrificing software quality assurance.

What are the benefits of generative AI compared to traditional testing methods?

The benefits of generative AI include faster creation of test cases, automated test data management, proactive defect prediction, and seamless integration into continuous integration pipelines. Unlike traditional testing methods, generative AI scales easily, reduces dependency on manual testers, and keeps pace with modern release cycles.

What type of AI is used in software testing?

Most solutions use generative artificial intelligence powered by large language models and machine learning. This type of AI can understand natural language, process structured and unstructured data, and generate executable tests for a wide range of applications, from web to mobile applications.

How does generative AI support manual testers?

Instead of replacing manual testers, generative AI acts as a supportive AI tool. It handles repetitive test design and test data management so testers can focus on exploratory testing, critical thinking, and improving the overall user experience.

How does generative AI integrate into continuous integration pipelines?

Generative AI tools are designed for continuous integration workflows. They generate and execute tests automatically, update them as requirements change, and feed results back to CI dashboards. This enables QA teams to maintain speed without compromising software quality assurance.