Your integration tests are flaky. Again. The API call that worked yesterday now times out. The database connection that passed in staging fails in production. Your team scrambles to find which component broke, digging through logs across three different tools while your release deadline looms.

Integration testing sits at the intersection of chaos and clarity. When different components of your software system communicate, things break in unexpected ways. Data flows incorrectly. Services time out. Authentication fails.

Generative AI transformed how we approach software testing, moving from reactive debugging to proactive test generation. Tools that once required extensive manual scripting now suggest test cases automatically. Platforms that operated in isolation now connect your entire QA workflow into a unified workspace.

This guide breaks down the top integration testing tools that actually solve problems. No filler. No buzzwords. Just concrete insights on what works, what doesn’t, and how to choose the right approach for your team.

What is Integration Testing?

Integration testing verifies that different components of your software system work together correctly. While unit testing checks individual components in isolation, integration testing examines component interactions: the API calls, database queries, message queues, and service communications that make your application function.

The Role of Integration Testing in the Software Development Lifecycle

Integration testing catches the bugs that slip through unit tests. A function might work perfectly on its own but fail when it interacts with a database, external API, or another service. These failures manifest as data inconsistencies, timeout errors, authentication issues, or unexpected state changes.

Modern software development demands continuous testing. Integration tests run in your CI/CD pipeline, validating every code change before it reaches production. They verify that new features don’t break existing integrations and that microservices communicate as expected across different environments.

Challenges in Manual Integration Testing and Why Automation Tools Are Important

Manual integration testing doesn’t scale. Testing every API endpoint, database interaction, and service communication by hand takes hours. By the time you finish testing one build, three more are waiting in the queue.

Manual testing also lacks consistency. Different testers might check different integration points. Test data varies between runs. Results become unreliable, and critical integration paths slip through untested.

Automation solves these problems. Automated integration tests run consistently, quickly, and repeatedly. They execute the same test scenarios with the same test data every time, catching regressions immediately. More importantly, automation frees your QA teams to focus on exploratory testing and complex test scenarios that actually require human judgment.

Defining Integration Testing Tools

Integration testing tools facilitate testing between different modules or components of a software system. They’re not monolithic platforms; they’re specialized solutions that fit specific needs in your testing process.

Some tools focus purely on test execution. Others handle test management, reporting, or test data generation. The best integration testing tool for your team depends on your tech stack, testing scenarios, and how you structure your testing environment.

Distinction from Unit Testing Tools, End-to-End Testing Tools, and API Tools

The boundaries blur in practice. Many teams use API testing tools for integration tests. Others use end-to-end testing frameworks to validate integration points through the UI. The key is understanding what each tool excels at and choosing accordingly.

- Unit testing tools (like JUnit, PyTest, Jest) test individual functions or methods in isolation. They mock external dependencies and verify that a single unit of code behaves correctly.

- Integration testing tools verify that multiple units work together. They test real database connections, actual API responses, and genuine service interactions. Integration tests don’t mock everything; they test the integration points themselves.

- End-to-end testing tools (like Selenium, Playwright, Cypress) test complete user workflows through your application’s UI. They simulate real user interactions from start to finish.

- API testing tools (like Postman, Karate, SoapUI) specifically test web services and API endpoints. While they’re often used for integration testing, their scope is narrower, focused on API development and validation.
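To make the unit/integration distinction concrete, here is a minimal Python sketch built around a hypothetical `save_user` function. The first test mocks the database connection, as a unit test would; the second runs the same function against a real in-memory SQLite database, so a broken query or a schema mismatch would actually fail. Both are plain `test_*` functions, which PyTest collects without extra configuration.

```python
import sqlite3
from unittest.mock import MagicMock


def save_user(conn, name):
    """Hypothetical code under test: insert a user and return the new row id."""
    cur = conn.execute("INSERT INTO users (name) VALUES (?)", (name,))
    conn.commit()
    return cur.lastrowid


def test_save_user_unit():
    # Unit test: the connection is a mock, so no SQL ever runs.
    # It only proves save_user calls execute() and returns lastrowid.
    conn = MagicMock()
    conn.execute.return_value.lastrowid = 7
    assert save_user(conn, "ada") == 7
    conn.execute.assert_called_once()


def test_save_user_integration():
    # Integration test: a real (in-memory) SQLite database executes the SQL,
    # so a typo in the query or a mismatched schema fails here.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
    user_id = save_user(conn, "ada")
    row = conn.execute("SELECT name FROM users WHERE id = ?", (user_id,)).fetchone()
    assert row == ("ada",)
    conn.close()
```

If the `INSERT` statement referenced a column that doesn't exist, the unit test would still pass; only the integration test would catch it.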

When to Pick Which Approach

Most teams need all of these approaches. The testing pyramid suggests more unit tests at the base, fewer integration tests in the middle, and even fewer end-to-end tests at the top. Integration tests strike the balance between speed and realistic testing scenarios.

- Use unit testing when you need fast feedback on code changes. Unit tests run in milliseconds and catch logic errors immediately.

- Use integration testing when you need to verify component interactions. Integration tests validate that your services communicate correctly, your data flow works as expected, and your system handles real-world integration scenarios.

- Use end-to-end testing when you need to validate complete user journeys. End-to-end tests ensure that your entire system works together from the user’s perspective.

- Use API testing when you need detailed validation of web services, request/response formats, and API contracts.

Top Integration Testing Tools

1. Testomat.io

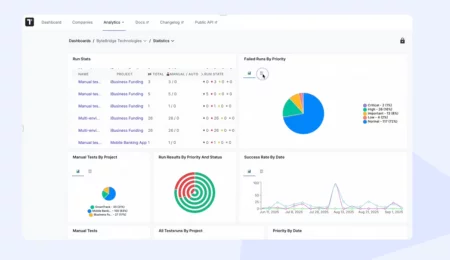

Testomat.io unifies automated and manual testing in one workspace, connecting BA, Dev, QA, and non-technical stakeholders into a single testing environment. It’s built for teams that need to manage thousands of test cases across multiple projects while maintaining visibility and control.

Strengths:

AI-powered test generation creates test cases directly from requirements, eliminating the manual work of translating user stories into test scenarios. The platform’s AI agents detect flaky tests automatically, suggest fixes, and provide predictive insights on test execution patterns.

The unified workspace breaks down silos. BAs write requirements, developers sync automated tests from GitHub or GitLab, QA teams execute manual tests, and PMs track progress, all in the same platform. No context switching. No scattered documentation.

Testomat.io handles scale. Projects with 100,000+ test cases remain performant. Instant syncing with CI/CD pipelines means test results flow directly into your deployment workflow. Native Jira integration keeps your issue tracking aligned with test execution.

The platform supports comprehensive integration testing through its test management capabilities. You can organize integration test cases by component interactions, track test data requirements, monitor test coverage across integration points, and analyze test results to identify problematic integrations.

Limitations:

Testomat.io is a test management platform, not a test execution framework. You’ll still need execution tools like JUnit, PyTest, or Selenium to run your tests. Testomat.io orchestrates these tools, aggregates results, and provides unified reporting, but it doesn’t replace your testing framework.

Ideal Use Cases:

- Startups drowning in scattered test documentation across Google Docs, Notion, and Slack

- Mid-sized teams struggling with too many disconnected testing tools and no shared source of truth

- Enterprises with execution bottlenecks, rigid workflows, and outdated QA environments

Language/Technology Support:

Framework-agnostic. Testomat.io integrates with any testing framework through its API and native integrations. Supports JavaScript, Python, Java, C#, PHP, Ruby, and more through direct framework connections.

2. JUnit / TestNG

JUnit and TestNG are testing frameworks for Java applications. While primarily known for unit testing, both frameworks excel at integration testing when combined with Spring Boot Test, Testcontainers, or embedded databases.

Strengths:

Deep Java ecosystem integration. Both frameworks work seamlessly with Maven, Gradle, and Java IDEs. Annotations like `@SpringBootTest` enable full application context loading for integration tests. Testcontainers support allows testing against real databases, message queues, and external services running in Docker containers.

TestNG offers more flexible test configuration than JUnit 4, including test grouping, parameterized tests, and parallel test execution out of the box. JUnit 5 closed many of these gaps with its modular architecture and extension model.

Limitations:

Configuration complexity increases with integration test scope. Testing multiple services requires careful setup of test contexts, test data, and environment dependencies. Slow test execution times become problematic without proper parallelization and resource management.

Ideal Use Cases:

Java applications, Spring Boot microservices, enterprise systems built on Java EE or Jakarta EE. Teams already invested in the Java ecosystem find these frameworks natural extensions of their existing development process.

3. PyTest

PyTest is Python’s most popular testing framework, offering a straightforward approach to both unit and integration testing with minimal boilerplate.

Strengths:

Simple, readable test syntax. Tests are plain Python functions with assert statements; no complex test class hierarchies required. Fixtures provide elegant dependency injection for test data, database connections, and external service mocks.

Extensive plugin ecosystem. pytest-django for Django applications, pytest-asyncio for asynchronous code, pytest-mock for mocking, and pytest-xdist for parallel test execution. These plugins transform PyTest from a simple framework into a comprehensive testing solution.

Excellent reporting capabilities. Detailed failure information, test duration tracking, and code coverage analysis with coverage.py (via the pytest-cov plugin). The pytest-html plugin generates readable HTML reports for stakeholders.

Limitations:

Python’s dynamic nature makes integration tests more prone to runtime errors compared to statically typed languages. Test discovery can become slow in large codebases without proper organization.

Ideal Use Cases:

Python applications, Django/Flask web services, data pipelines, machine learning systems, API backends. Teams building microservices in Python find PyTest ideal for testing service interactions and database integrations.

4. Mocha / Jest

Mocha and Jest are JavaScript testing frameworks for Node.js applications. Mocha offers flexibility and modularity, while Jest provides an all-in-one solution with built-in mocking and assertions.

Strengths:

Mocha excels at flexibility. Choose your assertion library (Chai, Should.js, expect), your mocking framework (Sinon.js), and your reporting format. This modularity allows precise control over your testing environment but requires more configuration.

Jest prioritizes developer experience. Zero configuration for most projects, built-in code coverage, snapshot testing, and parallel test execution by default. The test runner discovers tests automatically and ships with module mocking and reporting, so no additional setup is needed.

Both frameworks handle asynchronous testing well, which is critical for Node.js integration tests that interact with databases, external APIs, and message queues. Support for promises, async/await, and callback-based code makes testing asynchronous integration points straightforward.

Limitations:

Mocha’s flexibility becomes a burden in large teams without clear testing conventions. Jest’s all-in-one approach can feel restrictive for teams wanting specific testing tools.

Jest’s snapshot testing, while powerful, creates maintenance overhead. Snapshots break frequently with UI changes, leading to teams blindly updating snapshots without reviewing actual changes.

Ideal Use Cases:

Node.js backends, Express/Fastify APIs, serverless functions, React/Vue/Angular applications with backend integration tests. Teams building full-stack JavaScript applications benefit from consistent testing frameworks across frontend and backend.

5. Postman / Karate

Postman and Karate specialize in API testing. Postman offers a visual interface for API exploration and testing, while Karate provides a BDD-style framework for API test automation.

Strengths:

Postman democratizes API testing. Non-technical team members can create and execute API tests through its intuitive UI. Collections organize related API requests, environments manage test data across different deployment stages, and pre-request scripts enable dynamic test scenarios.

Newman, Postman’s open-source command-line collection runner, enables CI/CD integration by running collections as part of your automated testing process; the newer Postman CLI covers the same need. Collaboration features allow teams to share API collections, mock servers, and test results.

Karate combines API testing with BDD syntax and native JSON/XML support. Test scenarios read like plain English but execute as powerful integration tests. Built-in assertions for API responses, parallel execution, and comprehensive reporting make it a complete API testing solution.

Karate keeps external dependencies to a minimum. No need for separate assertion libraries or mocking frameworks: assertions, mock servers, and parallel execution for API integration testing come built in.

Limitations:

Postman’s visual approach doesn’t scale well for complex test logic or large test suites. Version control becomes awkward: diffs of exported collection JSON files in Git lack the clarity of code-based tests.

Karate’s custom syntax, while readable, creates a learning curve. Developers familiar with standard testing frameworks need time to adapt to Karate’s approach.

Ideal Use Cases:

REST API testing, GraphQL endpoint validation, microservice contract testing, API development workflows. Teams building API-first applications find these tools essential for verifying service interactions and API contracts.

6. SoapUI

SoapUI focuses on web services testing, supporting both SOAP and REST protocols. It’s the established choice for teams working with enterprise web services and complex service integrations.

Strengths:

Comprehensive SOAP support. Automatic test generation from WSDL files, complex XML message validation, and WS-Security testing for secure web services. Few tools match SoapUI’s depth in SOAP protocol support.

REST support includes request building, response validation, and authentication handling. Data-driven testing allows executing the same test with different test data sets, validating integration points across multiple scenarios.

SoapUI Pro (now part of SmartBear’s ReadyAPI) extends the open-source edition with more advanced load testing, security testing, and reporting features. The visual interface makes it accessible to testers without extensive programming experience.

Limitations:

The UI feels dated compared to modern testing tools. Performance issues arise with large test suites. The open-source version lacks many features available only in the commercial Pro version.

SOAP usage is declining in favor of REST and GraphQL. Teams building new services rarely choose SOAP, limiting SoapUI’s relevance for modern application development.

Ideal Use Cases:

Legacy enterprise systems, banking and financial services using SOAP, government systems with established web service contracts, integration with third-party SOAP APIs.

7. Apache JMeter

JMeter is primarily a load testing tool but excels at integration testing when performance characteristics matter. It validates not just whether integrations work, but how they perform under load.

Strengths:

Tests integration points under realistic load conditions. Verify that your API handles 1000 concurrent requests, your database connection pool doesn’t exhaust under load, and your message queue processes messages efficiently.
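JMeter is the right tool for real load tests, but the underlying idea, firing many simultaneous requests at an integration point and checking every response, can be sketched in a few lines of Python. This is a minimal sketch, not a JMeter replacement: the stub handler, the `/health` path, and the request counts are hypothetical, and a local stub server stands in for your actual service.

```python
import threading
from concurrent.futures import ThreadPoolExecutor
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from urllib.request import urlopen


class StubServiceHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for the service under test: every GET returns 200."""

    def do_GET(self):
        body = b"ok"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # silence per-request logging


class StubServer(ThreadingHTTPServer):
    request_queue_size = 64  # accept a burst of simultaneous connections


def run_concurrency_check(total=50, workers=10):
    """Send `total` GET requests through `workers` threads; return the status codes."""
    server = StubServer(("127.0.0.1", 0), StubServiceHandler)  # port 0 = any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    url = f"http://127.0.0.1:{server.server_port}/health"

    def one_request(_):
        with urlopen(url, timeout=5) as resp:
            return resp.status

    try:
        with ThreadPoolExecutor(max_workers=workers) as pool:
            return list(pool.map(one_request, range(total)))
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    statuses = run_concurrency_check()
    print(f"{statuses.count(200)}/{len(statuses)} requests succeeded")
```

Against a real service, you would point `url` at a test environment and also track latency; JMeter adds ramp-up control, distributed load generation, and reporting on top of this basic idea.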

Protocol support extends beyond HTTP: databases (JDBC), message queues (JMS), FTP, SMTP, and more. This versatility makes JMeter valuable for testing complex integration scenarios across different protocols.

Distributed testing across multiple machines enables massive load generation. Test how your entire system behaves when thousands of users trigger integration points simultaneously.

Limitations:

Steep learning curve. JMeter’s GUI is functional but not intuitive. Creating complex test scenarios requires understanding samplers, listeners, assertions, and thread groups: concepts unfamiliar to many testers.

Resource intensive. Running large load tests consumes significant CPU and memory. Distributed testing requires infrastructure setup and coordination.

Ideal Use Cases:

Performance validation of integration points, stress testing microservice communications, load testing API gateways, database integration performance testing.

8. Selenium / Playwright / Cypress

These tools automate browser interactions, primarily for end-to-end testing. They’re valuable for integration testing when you need to validate integrations through the user interface.

- Selenium offers the most mature ecosystem and broadest language support. WebDriver protocol enables testing across Chrome, Firefox, Safari, and Edge. Selenium Grid provides parallel test execution across multiple browsers and environments.

- Playwright brings modern capabilities: automatic waiting, network interception, multi-tab/multi-window support, and built-in test fixtures. Cross-browser testing includes Chromium, Firefox, and WebKit. Playwright’s architecture eliminates many flaky test issues that plague Selenium.

- Cypress prioritizes developer experience with automatic reloading, time-travel debugging, and real-time test execution visibility. Tests run directly in the browser, enabling native access to application objects and faster execution compared to Selenium’s remote protocol.

All three tools validate integration points through the UI: form submissions trigger API calls, page loads retrieve database data, user actions generate service communications.

Limitations:

UI tests are slower than API-level integration tests. They require browser initialization, page rendering, and UI interaction, adding seconds to each test execution.

Maintenance overhead increases with UI changes. Button selectors break, layout shifts affect element locations, and asynchronous loading creates timing issues. These tools are powerful but require careful test design to remain stable.

Ideal Use Cases:

Full-stack integration testing, validating frontend-backend integrations, testing complex user workflows that touch multiple services, visual regression testing alongside integration validation.

Key Features to Look for in Integration Testing Tools

Modern applications distribute functionality across microservices, external APIs, and multiple databases. An effective integration testing tool must support these technologies natively: RESTful APIs, GraphQL endpoints, gRPC services, message queues, and relational databases with proper test data management.

| Feature | Why It Matters | What to Look For |
| --- | --- | --- |
| Automation Capability | Manual testing doesn’t scale; automation ensures frequent, reliable execution. | CLI support, APIs for test triggering, scheduling, parallel execution. |
| CI/CD Pipeline Integration | Ensures integration tests run on every code change, blocking bad deployments. | Native integrations with Jenkins, GitHub Actions, GitLab CI, CircleCI, Azure DevOps; plugins and APIs. |
| Reporting & Analytics | Converts raw output into insights for developers and stakeholders. | Dashboards, failure trend tracking, flaky test detection, executive-friendly reports. |
| Ease of Scripting & Management | Determines test creation speed and long-term maintainability. | Readable syntax, low learning curve, good documentation, test tagging, grouping, search, version control support. |
| User Interface | Makes testing accessible beyond developers. | Visual test builders plus code editors, intuitive navigation for QA and business users. |
| Versioning & Maintenance | Keeps tests reliable as applications evolve. | Rollbacks, audit trails, refactoring support, deprecation warnings, automated test updates. |
| Scalability & Performance | Handles enterprise-scale test suites efficiently. | Parallel execution across machines, fast queries on historical data, stable performance at 10k+ tests. |
| Microservices & Containers | Modern apps run across distributed environments. | Service orchestration, dependency management, Docker/Kubernetes-native support. |
| Database & API Coverage | Core to most integration workflows. | REST, GraphQL, gRPC, and message queues, plus database connection pooling, transactions, and clean setup/teardown. |

Best Practices for Effective Integration Testing

✅Keep Each Test Focused on One Interaction

Each integration test should verify one specific interaction. Testing multiple integration points in a single test creates confusion when failures occur: which integration actually broke?

Atomic tests isolate failures to specific component interactions. When your payment service test fails, you know the payment integration broke, not something unrelated in authentication or inventory.

Clear test naming communicates intent: `test_payment_service_handles_declined_cards` explains exactly what the test validates. Descriptive names serve as documentation and make test results understandable to non-developers.
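Here is a minimal Python sketch of such an atomic, clearly named test. It assumes a hypothetical payment client, `charge`, that talks to its gateway over HTTP; a local stub server (also hypothetical) stands in for the real gateway and declines every charge with HTTP 402. The test therefore exercises a real HTTP round trip while verifying exactly one interaction.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.error import HTTPError
from urllib.request import Request, urlopen


class DecliningGatewayHandler(BaseHTTPRequestHandler):
    """Hypothetical gateway stub: declines every charge with HTTP 402."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        self.rfile.read(length)  # consume the request body
        body = json.dumps({"status": "declined", "reason": "insufficient_funds"}).encode()
        self.send_response(402)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep test output quiet


def charge(base_url, amount_cents):
    """Hypothetical payment client: POST a charge and return the gateway's verdict."""
    req = Request(
        f"{base_url}/charges",
        data=json.dumps({"amount_cents": amount_cents}).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    try:
        with urlopen(req, timeout=5) as resp:
            return json.loads(resp.read())
    except HTTPError as err:  # urllib raises HTTPError for 4xx/5xx responses
        if err.code == 402:  # a decline is an expected business outcome
            return json.loads(err.read())
        raise


def test_payment_service_handles_declined_cards():
    server = HTTPServer(("127.0.0.1", 0), DecliningGatewayHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        result = charge(f"http://127.0.0.1:{server.server_port}", 1999)
        assert result["status"] == "declined"
    finally:
        server.shutdown()
        server.server_close()
```

If this test fails, the name alone tells you which integration path regressed: the handling of declined cards, not authentication or inventory.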

✅Automate Judiciously (Combining Manual and Automated Tests)

Automation isn’t always the answer. Some integration scenarios change frequently, making automated test maintenance costly. Others involve complex user judgment that automation can’t replicate.

Automate stable, repetitive integration tests: API contract validation, database transaction handling, service error responses. Keep manual testing for exploratory integration scenarios, usability validation, and edge cases discovered in production.

The goal is maximum coverage with minimum maintenance effort. Automate what provides reliable feedback with low upkeep costs. Test manually where human insight adds value.

✅Start Integration Testing Early and Run Frequently

Integration testing shouldn’t wait until all components are complete. Test integration points as soon as multiple components exist. Early testing catches integration issues when they’re cheap to fix.

Frequent test execution provides rapid feedback. Integration tests running on every pull request catch breaking changes before they merge. Developers receive immediate feedback on whether their code changes broke existing integrations.

Nightly test runs catch issues that emerge from accumulated changes. Weekly comprehensive test runs validate the entire integration testing suite against production-like environments.

✅Prioritize Critical Integration Paths

Not all integrations carry equal risk. A failing payment integration costs money immediately. A failing analytics logger creates data gaps but doesn’t stop user workflows.

Identify critical integration paths: the integrations that, if broken, halt business operations or create security vulnerabilities. Test these paths thoroughly with multiple test scenarios covering success cases, failure cases, and edge cases.

Lower-priority integrations still need testing, but can tolerate lighter coverage. Focus your testing effort where failures hurt most.

✅Maintain Robust Regression Suites

Integration points that work today can break tomorrow when dependent services change. Regression testing validates that existing integrations continue functioning as the system evolves.

Build regression suites incrementally. Every bug discovered in production should generate a regression test. Every significant integration change should add tests validating the new behavior.

Run regression suites regularly: at minimum before every major release, ideally on every code commit. Regression failures signal breaking changes that need addressing before deployment.

✅Good Documentation & Communication Between Teams

Integration testing requires coordination across teams. Frontend teams need to know when backend APIs change. Backend teams need visibility into how frontends consume their services.

Document integration contracts: expected request formats, response structures, error codes, authentication requirements. Maintain this documentation alongside code, keeping it current as integrations evolve.
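A contract can be as simple as a machine-checkable description of the response shape that lives in the same repository as the code. The sketch below assumes a hypothetical order response with three fields and checks only field presence and JSON types; dedicated schema validators and contract-testing tools go further, covering error codes and authentication requirements as well.

```python
import json

# Hypothetical contract for an order response: field name -> expected JSON type.
ORDER_CONTRACT = {"id": int, "status": str, "total_cents": int}


def contract_violations(payload, contract):
    """Return a list of problems; an empty list means the payload honors the contract.

    Fields not named in the contract are ignored, so additive changes pass.
    Types are matched exactly, so True is not accepted where an int is expected.
    """
    problems = []
    for field, expected in contract.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        elif type(payload[field]) is not expected:
            problems.append(
                f"{field}: expected {expected.__name__}, got {type(payload[field]).__name__}"
            )
    return problems


def test_order_response_honors_contract():
    # In a real suite this body would come from the provider's API.
    body = '{"id": 42, "status": "paid", "total_cents": 1999, "currency": "EUR"}'
    assert contract_violations(json.loads(body), ORDER_CONTRACT) == []
```

Because the check ignores unknown fields, a provider can add data without breaking consumers; a renamed, removed, or retyped field fails the test with a readable message.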

Share test results across teams. When integration tests fail, relevant teams need immediate notification. Cross-team communication channels (Slack integrations, email notifications, dashboard visibility) ensure failures don’t go unnoticed.

✅Monitor and Refine Test Coverage Over Time

Test coverage provides insight into which integration points lack adequate testing. Track coverage metrics to identify gaps and prioritize additional test creation.

Coverage alone doesn’t guarantee quality. High coverage of trivial integrations means little if critical integration paths remain untested. Focus on meaningful coverage: tests that would catch real integration failures.

Refine test suites continuously. Remove redundant tests that verify the same integration points. Update outdated tests that no longer reflect current system behavior. Add tests for newly discovered integration issues.

The Future of Integration Testing

AI Agents Draft Integration Test Cases

Manual test case writing is giving way to AI assistance. AI agents analyze requirements, examine existing code, and generate integration test cases automatically. The better tools go beyond simplistic tests: they recognize integration patterns, generate realistic test data, and cover edge cases human testers might miss.

The QA role evolves from test case writer to test case refiner. AI generates the first draft. Humans review, adjust, and approve. Test creation speeds up considerably without sacrificing quality, because a human still signs off on every test.

Self-Healing Test Scripts Eliminate Maintenance Burden

Integration tests break when services change. API endpoints get renamed. Database schemas evolve. Authentication mechanisms change. Traditionally, developers spend hours updating test scripts to match these changes.

Self-healing tests adapt automatically. When an API endpoint changes, tests detect the change, update their assertions, and continue executing. When database schema changes occur, tests adjust queries accordingly. Maintenance burden drops dramatically.

Cloud-Based Integration Testing Eliminates Infrastructure Complexity

Managing test environments is painful. Setting up databases, message queues, external services, and test data takes hours. Environment drift between testing and production causes bugs.

Cloud-based integration testing provides on-demand test environments that closely mirror production. Tests run against containerized services with production-like data. No manual setup. Far less environment drift. Tests validate integrations against realistic environments every time.

Microservices and Distributed Systems Drive Testing Evolution

Monolithic applications have clear boundaries. Integration testing verifies database connections, external API calls, and maybe a message queue.

Microservice architectures explode integration complexity. Dozens of services communicate through APIs, events, and shared data stores. Integration testing must validate not just individual integrations but the entire service mesh.

Testing tools evolve to handle distributed system complexity: service mesh testing, chaos engineering integration, distributed tracing correlation. Integration testing expands from validating individual connections to validating system-wide behavior.

Conclusion: Choosing Your Integration Testing Tools

Integration testing isn’t a one-tool problem. You need execution frameworks (JUnit, PyTest, Mocha), specialized tools (Postman, SoapUI, JMeter), and orchestration platforms (Testomat.io) working together.

The right toolset depends on your technology stack, team size, and testing maturity. Startups need simple, fast solutions that grow with them. Mid-sized teams need unified visibility across multiple testing tools. Enterprises need scale, coordination, and sophisticated analytics.

Don’t chase “one size fits all” solutions. Evaluate tools based on your specific needs:

- What integration points do you test most frequently?

- Which programming languages does your team use?

- How do you execute tests today: manually, in CI/CD pipelines, or in scheduled runs?

- What’s your team size and technical skill distribution?

- Where are your current integration testing pain points?

Start with pilots. Run tools against a subset of your integration tests before committing fully. Measure impact: Do tests execute faster? Are failures easier to diagnose? Does visibility improve?

The integration testing landscape transformed in 2025. AI-powered tools generate tests automatically. Unified platforms connect scattered testing activities. Self-healing tests reduce maintenance overhead. Cloud-based testing simplifies environment management.

The teams winning in this landscape invest in modern testing infrastructure. They adopt AI-assisted test generation. They unify testing workflows. They make integration testing a competitive advantage, not a bottleneck.

Ready to Transform Your Integration Testing?

Testomat.io offers a 14-day free trial with full platform access. Import your existing tests, generate new test cases from requirements, and experience AI-powered test management firsthand.

See how teams like yours manage 100K+ test cases across automated and manual testing workflows. Schedule a 30-minute demo.

Integration testing doesn’t have to be chaotic. The right tools, combined with the right practices, transform testing from a bottleneck into a velocity driver. Stop drowning in scattered tests, flaky results, and invisible coverage gaps. Start building better integrations today.