The process of creating and delivering software is speeding up, and automation testing has become one of the critical aspects of continuous integration and deployment (CI/CD). Automated tests are able to process thousands of cases in minutes and generate lots of valuable data.

AI-powered monitoring steps in to change the way test automation is done. In the process, AI and machine learning are applied to automatically process, interpret, and learn from the data available after test execution. AI systems allow for better automation as they continuously monitor test results and identify anomalies more quickly than conventional, human-run testing systems.

Every automated test run produces a large volume of data: logs, screenshots, metrics, and performance results. This is true even for mid-sized teams, so reviewing all of this data manually is no longer efficient or even feasible.

The goal of AI monitoring is therefore to filter the information in test results and turn it into actionable insights that can be used right away to improve the product. In simple terms, AI can process this data far faster than a human team reviewing every batch by hand.

Why Traditional Test Monitoring Falls Short

There are several reasons why more and more teams now prefer AI-powered monitoring to traditional tools.

Manual review slows everything down

QA engineers often spend hours digging through logs and reports just to find the cause of failures. In big automation suites, that review can take longer than the tests themselves, wasting time and letting small defects slip by unnoticed.

Hard to see patterns at scale

With thousands of tests running across different builds, recurring issues are easy to miss. Without AI, teams might fix the same root cause over and over, not realizing it’s part of a bigger problem.

Dashboards don’t keep up

Most dashboards only show basic stats like pass/fail counts or execution times. As projects evolve, these static views stop reflecting what really matters and fail to adapt to new priorities or frameworks.

Hidden risks stay hidden

Flaky tests, slow performance drops, and quiet memory leaks rarely trigger clear failures. They pile up slowly, hurting reliability while traditional tools overlook them.

QA stuck reacting instead of preventing

Manual monitoring keeps teams chasing problems after they appear, often too late in the release cycle. AI-powered monitoring changes that, helping QA predict issues early and focus on prevention instead of damage control.

What Is AI-Powered Monitoring in Test Automation

AI-powered test monitoring addresses each of these issues. Instead of processing test data one batch at a time with pauses between analyses, AI models continuously analyze test results, logs, and performance metrics. With such a model in place, even small anomalies are much harder to miss. In addition, AI can:

- Identify recurring failures and highlight trends so it’s easier for you to fix them in the development process;

- Provide predictive alerts by spotting potential problem areas before they can impact product release;

- Offer deeper insights than manual review, along with contextual recommendations.

All of this comes together in a single, easy-to-use system. The idea is to help you notice and deal with minor errors before they turn into major problems.

Key Capabilities of AI-Powered Monitoring Tools

AI-powered monitoring fulfills multiple functions and works as an all-in-one tool for problem-solving in automated testing. Having one AI-based tool analyze test data reduces the need to juggle many separate tools.

Clustering failures

With AI tools for monitoring, you can forget about hundreds of separate error logs. Instead, the model groups all of the similar failures together for simpler recognition. It finds shared patterns in stack traces, messages, and logs. This saves hours of manual work for test teams.
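As a rough illustration of the grouping idea, here is a minimal sketch in Python. It does not use machine learning at all: it simply masks volatile details (numbers, quoted values, addresses) in error messages so that similar failures share one signature. The function names and sample data are hypothetical, and real tools likely use more sophisticated similarity measures.

```python
import re
from collections import defaultdict

def failure_signature(message: str) -> str:
    """Reduce an error message to a signature by masking volatile details."""
    sig = re.sub(r"0x[0-9a-fA-F]+", "<HEX>", message)   # memory addresses
    sig = re.sub(r"\d+", "<N>", sig)                     # ids, ports, timings
    sig = re.sub(r"'[^']*'|\"[^\"]*\"", "<STR>", sig)    # quoted values
    return sig.strip().lower()

def cluster_failures(failures):
    """Group (test_name, message) pairs by their normalized signature."""
    clusters = defaultdict(list)
    for test_name, message in failures:
        clusters[failure_signature(message)].append(test_name)
    return dict(clusters)

# Hypothetical failures from one test run.
failures = [
    ("test_login", "TimeoutError: waited 3000 ms for element '#submit'"),
    ("test_checkout", "TimeoutError: waited 5000 ms for element '#pay'"),
    ("test_profile", "AssertionError: expected 200 but got 500"),
]
clusters = cluster_failures(failures)
for signature, tests in clusters.items():
    print(len(tests), signature, tests)
```

In this sample, the two timeout failures end up in one group, so a reviewer looks at one problem instead of two separate log entries.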

Trend analysis

Instead of concentrating on a single test run, AI tracks and analyzes data over long periods of time. It calculates pass/fail ratios, average execution times, and system stability to show whether the product’s quality is trending up or down. This is ideal for spotting regressions in time.
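To make the idea of a quality trend concrete, the sketch below computes a pass rate per build and smooths it with a rolling mean. The build data, the window size of 3, and the helper names are assumptions chosen for illustration, not values taken from any particular tool.

```python
from statistics import mean

def pass_rate(results):
    """Share of results in one build that passed."""
    return sum(1 for r in results if r == "passed") / len(results)

def rolling_mean(values, window):
    """Mean of each consecutive window of `window` values."""
    return [
        mean(values[i - window + 1 : i + 1])
        for i in range(window - 1, len(values))
    ]

# Hypothetical per-build outcomes, oldest build first.
builds = [
    ["passed"] * 10,
    ["passed"] * 9 + ["failed"],
    ["passed"] * 9 + ["failed"],
    ["passed"] * 8 + ["failed"] * 2,
    ["passed"] * 7 + ["failed"] * 3,
    ["passed"] * 6 + ["failed"] * 4,
]
rates = [pass_rate(b) for b in builds]
smoothed = rolling_mean(rates, window=3)

# The trend is declining if every smoothed value is lower than the previous one.
declining = all(later < earlier for earlier, later in zip(smoothed, smoothed[1:]))
print(smoothed, "declining" if declining else "stable or improving")
```

Smoothing over several builds keeps a single bad run from looking like a regression, while a steady decline still shows up clearly.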

Anomaly detection

With AI, it takes less time to spot anomalies and unusual behavior. Even small inconsistencies can have serious consequences. AI catches such issues early and triggers a warning so teams can respond more quickly.
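One simple statistical stand-in for this kind of detection is a z-score check: compare the latest measurement with the mean and standard deviation of past runs. The sketch below assumes a threshold of 3 standard deviations and a hypothetical list of test durations in seconds. Production tools may use very different models.

```python
from statistics import mean, stdev

def is_anomalous(history, latest, threshold=3.0):
    """Flag `latest` if it lies more than `threshold` standard deviations
    from the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > threshold

# Hypothetical durations (seconds) of one test across past builds.
durations = [1.9, 2.1, 2.0, 2.2, 1.8, 2.0, 2.1, 1.9]

slightly_slow = is_anomalous(durations, 2.2)   # within normal variation
much_slower = is_anomalous(durations, 4.5)     # far outside the usual range
print(slightly_slow, much_slower)
```

The same check can be applied to memory usage or response times, which is how slow drifts can be flagged even when no test actually fails.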

Root-cause suggestions

AI models analyze code commits, CI logs, and test results to suggest the most likely source of a problem. They can link a failed test to a specific pull request or configuration change faster than a human team could, which significantly decreases debugging time.
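A very simplified version of this linking can be sketched as a heuristic: rank recent commits by how many of the files they changed also appear in the failing test's stack trace. The commit hashes, file paths, and function names below are made up for illustration, and the trace format is assumed to be a Python-style traceback.

```python
import re

def files_in_trace(stack_trace: str) -> set:
    """Extract file paths from Python-style traceback lines."""
    return set(re.findall(r'File "([^"]+)"', stack_trace))

def rank_commits(stack_trace, commits):
    """Order commits by how many of their changed files appear in the trace.
    Commits with no overlap are dropped."""
    trace_files = files_in_trace(stack_trace)
    scored = [(len(trace_files & set(files)), sha) for sha, files in commits.items()]
    ranked = sorted(scored, key=lambda item: -item[0])
    return [sha for score, sha in ranked if score > 0]

# Hypothetical failing trace and recent commits (sha -> changed files).
trace = '''Traceback (most recent call last):
  File "app/checkout.py", line 42, in pay
  File "app/payment/gateway.py", line 17, in charge
ValueError: invalid currency'''

commits = {
    "a1b2c3": ["app/payment/gateway.py", "app/checkout.py"],
    "d4e5f6": ["docs/README.md"],
    "0a9b8c": ["app/checkout.py"],
}
suspects = rank_commits(trace, commits)
print(suspects)
```

The output is only a list of suspects to check first, not a verdict. A human still confirms which change actually caused the failure.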

Adaptive reporting

AI-driven reports adapt to the project and its needs. Depending on the current stage of development, they focus on coverage, stability, or post-release performance. This makes it easier to track project goals at every stage.

Benefits for QA Teams

AI-powered monitoring is increasingly used in Quality Assurance (QA) testing because of its many benefits. QA testers can use AI models to identify issues and single out the failures most likely to affect the product’s quality. With these insights, teams can find areas to prioritize and improve more quickly and easily.

Faster triage and debugging

AI quickly identifies which failures actually matter by grouping redundant or flaky results together. Testers spend less time sorting through noise and more time fixing real issues. This faster triage shortens feedback cycles and helps teams move from problem to solution without delay.
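One common way to separate flaky results from real failures is to look for tests that both passed and failed on the same commit, since the code did not change between those runs. The sketch below assumes run records with hypothetical `test`, `commit`, and `status` fields.

```python
from collections import defaultdict

def find_flaky(runs):
    """Return tests that both passed and failed on the same commit."""
    outcomes = defaultdict(set)
    for run in runs:
        outcomes[(run["test"], run["commit"])].add(run["status"])
    return sorted(
        {test for (test, _), statuses in outcomes.items()
         if {"passed", "failed"} <= statuses}
    )

# Hypothetical run history.
runs = [
    {"test": "test_search", "commit": "abc123", "status": "passed"},
    {"test": "test_search", "commit": "abc123", "status": "failed"},
    {"test": "test_login", "commit": "abc123", "status": "failed"},
    {"test": "test_login", "commit": "def456", "status": "passed"},
]
flaky = find_flaky(runs)
print(flaky)
```

Here `test_login` is not reported: it failed on one commit and passed on a later one, which looks like a genuine fix rather than flakiness.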

Smarter decision-making

AI models contribute a lot to regression testing by providing clear, data-driven insights. Teams can use this information to focus on high-risk areas and allocate their resources more wisely. This way, raw data becomes practical guidance.

Stronger release confidence

By spotting issues and subtle risks early, AI helps prevent performance drops. This protects the team from last-minute surprises and makes releases smoother and less stressful. As a result, teams move forward knowing that the most likely issues have been addressed early on.

Higher productivity

The productivity of the test team improves as it becomes free of all the repetitive and time-consuming parts of data analysis. While AI handles the analysis of large data volumes, testers can focus on test design, exploratory testing, and improving user experience. As a result, there is a more engaged and efficient QA process.

Lasting knowledge retention

AI models learn more from every test cycle. Consequently, they build up a long-term memory of your product’s behavior patterns and performance quality. Even if team members come and go, the system preserves insights about recurring issues and testing history. This helps keep and transfer valuable knowledge over to new teams.

Real-World Tools & Examples

AI-powered monitoring is already transforming how QA teams work, and several tools on the market demonstrate what this technology can achieve in practice.

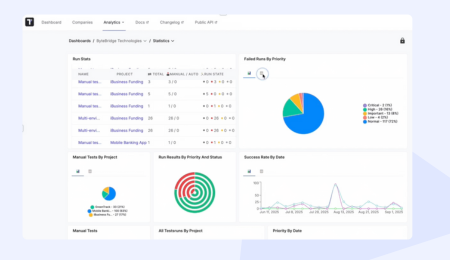

Testomat.io Deep Analyze Agent

This tool applies AI to analyze test runs in depth. It clusters similar failures, detects anomalies, and generates intelligent reports that show patterns across builds. You can also review failure clusters and check flakiness within one comprehensive dashboard. Instead of simply listing pass or fail results, it explains why failures happen and how they relate to past issues.

ReportPortal.io

ReportPortal uses machine learning to automatically classify test outcomes. It learns from past runs to make each future analysis smarter. This saves QA teams hours of manual triage and improves accuracy in detecting problem areas.

Mabl

Mabl combines AI and continuous testing. It monitors applications for UI changes and automatically adapts tests when layouts or elements change. This reduces the need for constant script maintenance and helps teams keep up with frequent product updates.

Testim

Testim uses AI for self-healing tests and analytics. When minor UI changes occur, it automatically adjusts the test steps to prevent false failures. It also tracks long-term quality trends and informs the team of the recurring issues.

Dynatrace Davis AI

Davis AI is part of the Dynatrace platform. It monitors application behavior not only during testing but also in production. It brings performance metrics, logs, and user sessions together to find anomalies and pinpoint root causes before they can affect end users.

How to Implement AI-Powered Monitoring in Your Automation Setup

With Testomat.io Deep Analyze Agent, it becomes easier to bring AI-powered monitoring into your testing systems. A few simple steps are enough to get started.

Start with your CI/CD pipeline

The best way to introduce AI is to connect it directly to your continuous integration or delivery process. This allows the system to automatically collect test results, logs, and metrics from every build. Once it starts seeing enough data, it can begin recognizing patterns and flagging potential issues without manual effort.
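Many CI systems and test frameworks can export results as JUnit-style XML, so a first integration step is often just reading those reports into plain records. The sketch below uses Python's standard `xml.etree.ElementTree` module. The sample report and the output field names (`test`, `status`, `duration_s`, `message`) are assumptions for illustration, and real reports may contain additional elements.

```python
import xml.etree.ElementTree as ET

def parse_junit(xml_text):
    """Turn a JUnit-style XML report into a list of result records."""
    root = ET.fromstring(xml_text)
    records = []
    for case in root.iter("testcase"):
        failure = case.find("failure")
        if failure is None:
            failure = case.find("error")
        if failure is not None:
            status = "failed"
        elif case.find("skipped") is not None:
            status = "skipped"
        else:
            status = "passed"
        records.append({
            "test": f'{case.get("classname", "")}.{case.get("name", "")}',
            "status": status,
            "duration_s": float(case.get("time", 0)),
            "message": failure.get("message", "") if failure is not None else "",
        })
    return records

# Hypothetical report produced by a CI job.
SAMPLE = """<testsuite name="checkout" tests="2">
  <testcase classname="tests.test_cart" name="test_add_item" time="0.12"/>
  <testcase classname="tests.test_cart" name="test_pay" time="1.50">
    <failure message="expected 200 but got 500">trace...</failure>
  </testcase>
</testsuite>"""

records = parse_junit(SAMPLE)
for record in records:
    print(record)
```

Records like these can then be stored per build, which is the raw material for any later pattern recognition.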

Feed it your history

AI performs best when it has context. By adding historical test data, such as past runs, reports, and known bugs, you give the system a strong foundation to understand what is normal for your project. Over time, it becomes better at spotting what is out of place.

Use visual dashboards

Instead of drowning in spreadsheets and static reports, switch to dashboards that show failure trends, performance changes, and release readiness in real time. These visual summaries help both technical and non-technical team members see the big picture at a glance.

Create a feedback loop

AI does not replace people; it learns from them. Encourage QA engineers, developers, and product teams to review the AI’s insights, confirm its findings, and correct any wrong assumptions. This continuous feedback helps the system grow more accurate and relevant to your specific project.

Start small, then grow

There is no need to roll out AI monitoring for your entire test suite on day one. Begin with a smaller set of tests, measure how well the AI identifies issues, and expand gradually as confidence builds. This phased approach keeps adoption smooth and low-risk.

Keep collaboration at the center

AI-powered monitoring works best when it supports real teamwork. Share the insights across QA, DevOps, and development teams so everyone stays aligned on what is working, what is breaking, and what needs attention next.

Challenges & Best Practices

Introducing AI-powered monitoring brings huge benefits, but there are some challenges to keep in mind. Recognizing them early helps you get the most out of your investment.

✅Consistency with different tools

For most test teams, using many different frameworks and tools at once is the norm. For accurate AI analysis, data coming from these different sources needs to be consistent. One of the main challenges is therefore to standardize log and result formats so that the analysis is reliable.
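Standardizing usually means mapping each tool's output onto one shared schema before analysis. The sketch below assumes two hypothetical sources, `tool_a` (durations in seconds) and `tool_b` (durations in milliseconds), with made-up field names. A real setup would define one mapping per framework in use.

```python
# Map the status words used by different tools onto one vocabulary.
STATUS_MAP = {
    "passed": "passed", "pass": "passed", "ok": "passed",
    "failed": "failed", "fail": "failed", "error": "failed",
}

def normalize(record, source):
    """Convert a tool-specific result record into a common schema."""
    if source == "tool_a":      # hypothetical: seconds, 'nodeid'/'outcome'
        name, status, seconds = record["nodeid"], record["outcome"], record["duration"]
    elif source == "tool_b":    # hypothetical: milliseconds, 'fullName'/'status'
        name, status, seconds = record["fullName"], record["status"], record["duration"] / 1000
    else:
        raise ValueError(f"unknown source: {source}")
    return {
        "test": name,
        "status": STATUS_MAP.get(status.lower(), "unknown"),
        "duration_s": seconds,
        "source": source,
    }

a = normalize({"nodeid": "tests/test_cart.py::test_pay", "outcome": "passed", "duration": 0.5}, "tool_a")
b = normalize({"fullName": "cart pays", "status": "FAIL", "duration": 1500}, "tool_b")
print(a)
print(b)
```

Once every record has the same fields and units, results from different frameworks can be compared and analyzed together.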

✅Balancing human insight and AI suggestions

Even though AI suggests probable causes and highlights risks, its conclusions still need human judgment. Encourage your team to review the output produced by the AI model, validate it, and give feedback. This keeps AI insight and human judgment in balance.

✅Growing gradually

The best way to implement AI monitoring is to start with small steps. For instance, apply it to a subset of tests or to specific modules first. Once you see the first results and confirm that they are reliable, you can expand AI monitoring to cover the whole system.

✅Continuous improvement

AI models improve with feedback. Regularly update and retrain them with new data, and refine your monitoring process as your project evolves. The system becomes smarter over time and better suited to your team’s specific needs.

✅Promote collaboration

Sharing AI insights across QA, development, and operations helps everyone understand the health of the system. Make it a team effort so that the information is acted on quickly and effectively.

The Future of AI in Automation Test Monitoring

AI is changing the way QA teams work today and will continue to shape the future of software testing.

Self-healing tests

The next generation of AI-driven test suites will not only identify issues but also fix minor problems automatically. If a UI element changes or a dependency breaks, the system can adapt tests without human intervention.

Full visibility across development and production

AI will increasingly integrate with operational monitoring tools, giving teams insight from testing all the way to live production. This means issues can be detected and resolved before they reach end users.

Predictive quality insights

Future dashboards will not only show what has happened but also predict what might go wrong in upcoming builds. Teams will be able to focus testing efforts where risk is highest, saving time and reducing the chance of failure.

Holistic approach to quality

AI will unify insights from functional, performance, and security testing, giving a complete picture of application health. QA will shift from just running tests to actively guiding the delivery of reliable, high-quality software.

Final Word: The Place of AI in Test Monitoring

AI-powered monitoring is making the process of testing more intelligent than ever before. It analyzes logs, test results, and performance metrics automatically. This way, teams can benefit from AI tools to spot patterns, detect risks, and get rid of problems in the development process early on.

AI in test monitoring brings teams various benefits. Testers and analysts spend less time on repetitive tasks and allocate their resources more wisely instead. QA engineers, in turn, can focus on designing better tests. All this happens while AI handles the difficult and monotonous part of data analysis. There are also multiple tools available, designed for various software, products, and purposes.

For example, with Testomat.io Deep Analyze Agent, you can save time on routine manual checks and deliver your software in its best possible version. Try it today to see for yourself.

Frequently asked questions

In what ways does cloud-based AI monitoring enhance shift-left testing strategies?

Cloud-based AI monitoring enhances shift-left testing by providing real-time insights early in the development lifecycle, identifying potential defects, performance bottlenecks, and security vulnerabilities before code reaches later stages. It automates anomaly detection, accelerates feedback loops, and enables predictive analysis, allowing teams to prevent issues, optimize quality, and reduce costly downstream fixes.

How does AI-powered regulatory change management and compliance automation ensure ongoing regulatory compliance and data privacy?

AI-driven regulatory change management helps organizations adapt to constant regulatory changes by analyzing new laws, rules, and regulatory standards in real time. Through compliance automation, compliance teams can quickly align internal policies and business processes with updated compliance requirements, reducing operational delays and compliance risks. The system securely processes sensitive data within strict data privacy frameworks while maintaining transparent risk assessment and consistent regulatory compliance. By leveraging AI’s analytical power on large datasets, organizations can detect anomalies, prevent compliance issues, and maintain resilient, adaptive compliance programs.

What is AI-powered monitoring in automation testing?

AI-powered monitoring uses artificial intelligence and machine learning to analyze logs, metrics, and other test data. It helps QA teams make sense of large volumes of data faster and more accurately than they ever could manually. This intelligent approach minimizes human error, improves visibility into test results, and ensures that potential issues are caught before they affect product quality.

Why is traditional test monitoring no longer enough?

Manual monitoring is slow, repetitive, and prone to mistakes. Teams often spend too much time sorting through raw data and miss subtle problems. Traditional dashboards don’t adapt to new frameworks or shifts in project priorities. In contrast, AI-powered tools provide deeper insights, revealing hidden risks and performance trends that manual methods often overlook.

How can a team start implementing AI-powered monitoring?

Begin by connecting the AI tool directly to your CI/CD pipeline and upload historical data to help it understand past trends. From there, use visual dashboards to track progress, involve human oversight to validate AI findings, and scale the system gradually. This thoughtful rollout helps balance automation with expert judgment while ensuring accurate and reliable monitoring results.