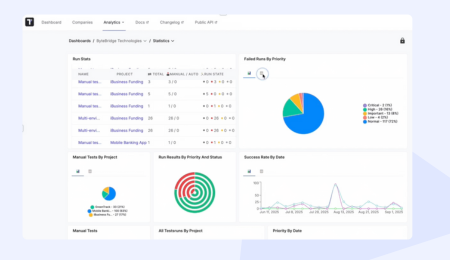

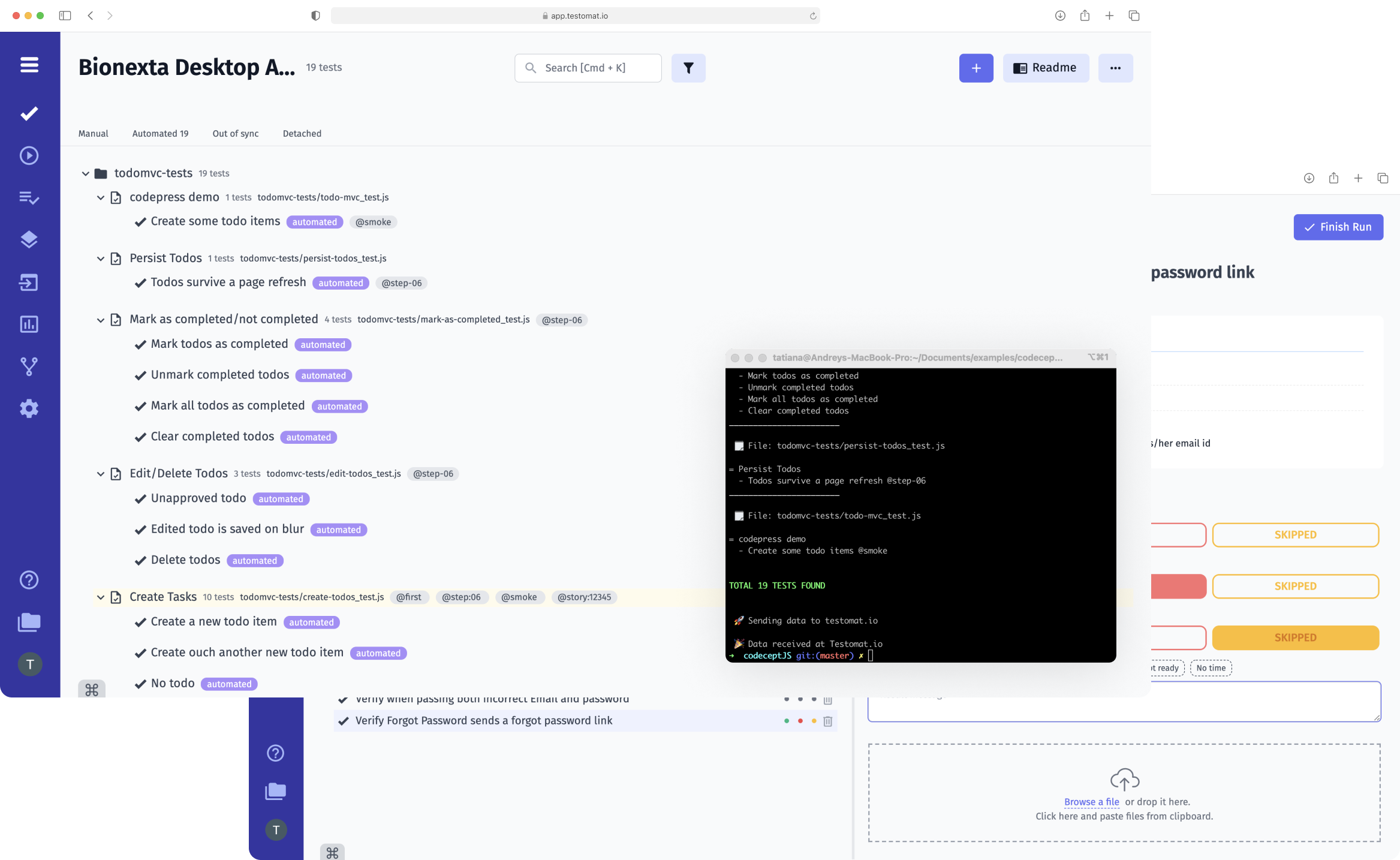

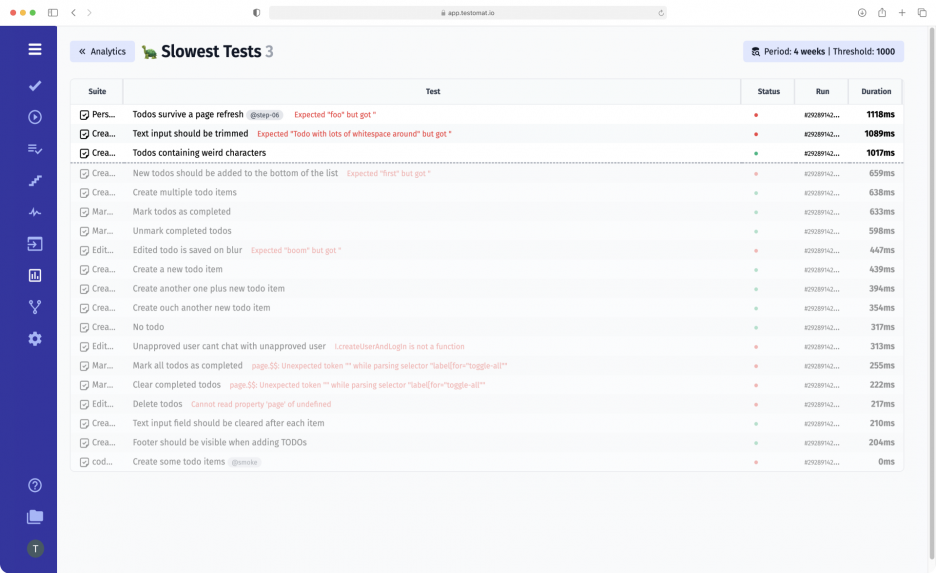

The testomat.io test management app supports slowest test detection. You can track slow tests on the Analytics Dashboard through the Slowest Tests widget, which lists slow automated tests in a table. The report does not work out of the box: you first need to set your own testing time threshold in the settings.

What does the slow tests metric mean in quality assurance?

There is no clear definition or strict measure for slow tests in quality assurance. Which tests count as slow depends first of all on the context. You should also compare fast and slow tests within a single testing category, such as unit, integration, or end-to-end (e2e) tests.

The main factor that influences test execution duration is the complexity of the flow, which is determined by the requirements. Automated tests that are too abstract or go through many steps can’t be fast.

For instance, consider a long flow such as:

- Open application (0.8 seconds)

- Create a user (1.5 seconds)

- Login (0.5 seconds)

- Create order (1.2 seconds)

- Receive confirmation pop-up (1 second)

In comparison, a short test looks like this:

- Click a username field (0.00001 seconds)

- Type username

- Type password

- Click log in
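Adding up the step timings listed for the first flow shows how quickly a multi-step test accumulates run time:

$$
t_{\text{flow}} = 0.8 + 1.5 + 0.5 + 1.2 + 1.0 = 5.0\ \text{s}
$$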

Slow tests are frequently overloaded, trying to accomplish too many tasks at once. Reducing the number of steps in each test reduces its run time, and the benefits usually compound: running many single-purpose tests can be faster overall than running a single overloaded one. As the example shows, durations range from fractions of a millisecond to several seconds.

Another option is to push tests down a layer. In a web application, for example, you can test at the API level instead of through a browser. You can still write BDD-style tests this way, describing interactions with data through the API rather than interactions with the UI.
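A minimal sketch of such a test, assuming a hypothetical `POST /orders` endpoint. To keep it self-contained, a tiny fake API runs in-process with Python's `http.server`, and the test talks to it over HTTP with `urllib`, using Given/When/Then comments in place of a BDD framework. In a real suite, the server would be your application, and login would be handled with an API token rather than a UI form.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class OrderApiHandler(BaseHTTPRequestHandler):
    """Hypothetical in-process stand-in for a web app's order API."""

    def do_POST(self):
        if self.path != "/orders":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        order = json.loads(self.rfile.read(length))
        body = json.dumps({"status": "confirmed", "item": order["item"]}).encode()
        self.send_response(201)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging


def create_order(base_url, item):
    """Client helper: POST an order as JSON and return (status, parsed body)."""
    request = urllib.request.Request(
        f"{base_url}/orders",
        data=json.dumps({"item": item}).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # An empty ProxyHandler makes sure local calls never go through a proxy.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(request, timeout=5) as response:
        return response.status, json.loads(response.read())


def test_user_gets_order_confirmation():
    # Start the fake API on a free local port for this test only.
    server = HTTPServer(("127.0.0.1", 0), OrderApiHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        base_url = f"http://127.0.0.1:{server.server_port}"
        # Given a user who wants to buy a book (auth assumed to be handled by a token)
        # When they create an order through the API
        status, body = create_order(base_url, "book")
        # Then the order is confirmed, with no browser involved
        assert status == 201
        assert body == {"status": "confirmed", "item": "book"}
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    test_user_gets_order_confirmation()
    print("API-level order test passed")
```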

How to decide which tests count as slow

Ultimately, your team has to agree collectively on what slow and fast mean for you, e.g. a test is slow if it takes 3 seconds or more. Measure test execution time explicitly, in a single time unit. At the same time, define what share of slow tests is acceptable in your testing portfolio.
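Once the team has agreed on a threshold, classifying tests and checking the slow-test share is simple arithmetic. A minimal sketch, assuming test durations are already collected in seconds (for example, exported from your test reports); the 3-second threshold comes from the example above, while the 10% budget and the test names are hypothetical.

```python
SLOW_THRESHOLD_S = 3.0   # the team's example definition: slow starts at 3 seconds
MAX_SLOW_SHARE = 0.10    # hypothetical budget: at most 10% of tests may be slow


def find_slow_tests(durations, threshold=SLOW_THRESHOLD_S):
    """Return names of tests at or above the threshold, slowest first."""
    slow = [name for name, secs in durations.items() if secs >= threshold]
    return sorted(slow, key=lambda name: durations[name], reverse=True)


def slow_share(durations, threshold=SLOW_THRESHOLD_S):
    """Fraction of tests in the portfolio that count as slow."""
    if not durations:
        return 0.0
    return len(find_slow_tests(durations, threshold)) / len(durations)


if __name__ == "__main__":
    # Hypothetical durations, all measured in the same unit (seconds).
    durations = {"checkout_e2e": 5.0, "login_ui": 3.2, "cart_api": 0.4, "price_unit": 0.01}
    print(find_slow_tests(durations))  # ['checkout_e2e', 'login_ui']
    share = slow_share(durations)
    print(f"slow share {share:.0%}, within budget: {share <= MAX_SLOW_SHARE}")
```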

Why measuring slow tests is important

Once you’ve discovered slow tests, go over each one and find out where the time is spent. With that information, you can pinpoint hot spots and work on resolving them.
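A minimal way to see where the time goes inside one test is to time each step and rank the results. The sketch below uses only the standard library; `timed_step` and `hot_spots` are hypothetical helper names, and the step names mirror the example flow above.

```python
import time
from contextlib import contextmanager


@contextmanager
def timed_step(name, timings):
    """Add the wall-clock time spent inside the block to timings[name]."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[name] = timings.get(name, 0.0) + (time.perf_counter() - start)


def hot_spots(timings, top=3):
    """Return the `top` slowest steps as (name, seconds, share of total)."""
    total = sum(timings.values()) or 1.0  # avoid dividing by zero
    ranked = sorted(timings.items(), key=lambda item: item[1], reverse=True)
    return [(name, secs, secs / total) for name, secs in ranked[:top]]


if __name__ == "__main__":
    timings = {}
    # time.sleep only stands in for the real work of each hypothetical step.
    with timed_step("open application", timings):
        time.sleep(0.08)
    with timed_step("create user", timings):
        time.sleep(0.15)
    with timed_step("login", timings):
        time.sleep(0.05)
    for name, secs, share in hot_spots(timings):
        print(f"{name}: {secs:.2f}s ({share:.0%} of measured time)")
```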

Information about test execution time helps a lot with maintaining and refactoring automated tests, because it lets you track test performance. A widespread problem is tests slowing down week after week, which eventually slows down delivery.
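To catch that week-over-week slowdown, you can compare each run's durations with a baseline. This is a minimal sketch with hypothetical default thresholds: a test is flagged only when it is both relatively slower (`ratio`) and absolutely slower (`min_delta_s`), so tiny tests with noisy timings and long tests with small drifts are not reported.

```python
def find_regressions(baseline, current, ratio=1.2, min_delta_s=0.5):
    """Flag tests that got slower by at least `ratio` AND `min_delta_s` seconds.

    Both thresholds are hypothetical defaults; tune them to your suite's noise.
    Tests missing from the baseline (new tests) are skipped.
    """
    flagged = {}
    for name, now in current.items():
        before = baseline.get(name)
        if before is None:
            continue
        if now - before >= min_delta_s and now >= before * ratio:
            flagged[name] = (before, now)
    # Largest absolute slowdown first.
    return dict(sorted(flagged.items(), key=lambda item: item[1][1] - item[1][0], reverse=True))


if __name__ == "__main__":
    # Hypothetical durations in seconds from two weekly runs.
    last_week = {"checkout_e2e": 4.0, "login_ui": 2.0, "cart_api": 0.4}
    this_week = {"checkout_e2e": 6.5, "login_ui": 2.1, "cart_api": 0.45, "search_ui": 1.0}
    print(find_regressions(last_week, this_week))  # {'checkout_e2e': (4.0, 6.5)}
```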

Common mistakes that slow down your tests, and what to do about them:

- Frequent use of “sleeps”, fixed delays, and timeouts

- Not testing at the appropriate level

- Tests that launch a whole application with all of its accompanying services, which means dealing with lengthy startup and shutdown times. If at all possible, avoid creating a full environment, or create it only once for the entire test run rather than for each test case

- Not using distributed testing and parallel execution

- Not using mocking

- See if there are any hard-coded constants that need to be set per environment

- Disable build triggers for non-production code changes, such as modifications to “helper” scripts or automated checks

- Run the slowest test buckets last, as long as earlier tests don’t depend on them.
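Regarding sleeps: a fixed sleep always costs its full duration, while polling for the condition the test actually needs returns as soon as that condition holds. Below is a minimal sketch of such a helper; `wait_until` and its parameters are hypothetical names, not a testomat.io API. Selenium's `WebDriverWait` and Playwright's auto-waiting follow the same idea, so prefer them when you use those tools.

```python
import time


def wait_until(condition, timeout=5.0, interval=0.05):
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass.

    Unlike a fixed sleep, this returns as soon as the condition holds,
    so a fast environment is not penalized by a worst-case delay.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} s")
        time.sleep(interval)


if __name__ == "__main__":
    # Hypothetical: the confirmation pop-up becomes visible after 0.2 s.
    ready_at = time.monotonic() + 0.2
    start = time.monotonic()
    wait_until(lambda: time.monotonic() >= ready_at, timeout=3.0)  # instead of time.sleep(3)
    print(f"waited {time.monotonic() - start:.2f}s instead of 3s")
```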
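For the mocking item, here is a minimal sketch: a slow dependency (a hypothetical `fetch_exchange_rate` that simulates a 2-second network call) is passed in as a parameter, so a test can swap in an instant `unittest.mock.Mock`. Dependency injection is only one way to do this; `unittest.mock.patch` is the usual alternative when you cannot change the function's signature.

```python
import time
from unittest import mock


def fetch_exchange_rate(currency):
    """Hypothetical slow dependency, e.g. a call to a third-party rate service."""
    time.sleep(2)  # simulates network latency
    return 1.1


def price_in(currency, amount, get_rate=fetch_exchange_rate):
    """Convert `amount` using a rate provider that tests can replace."""
    return round(amount * get_rate(currency), 2)


def test_price_in_with_mocked_rate():
    fake_rate = mock.Mock(return_value=2.0)  # instant, deterministic stand-in
    start = time.perf_counter()
    assert price_in("EUR", 10, get_rate=fake_rate) == 20.0
    fake_rate.assert_called_once_with("EUR")
    assert time.perf_counter() - start < 0.5  # the 2 s "network" call never ran


if __name__ == "__main__":
    test_price_in_with_mocked_rate()
    print("mocked test passed")
```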

The faster your tests run, the more often they will be run, which makes test speed an important performance parameter. The Slowest Tests widget and its metrics help you determine whether slow tests point to application performance dips that can be improved or to automation framework issues that need to be resolved.