The Model Context Protocol (MCP) represents a fundamental shift in how AI agents interact with external tools and data sources. Developed by Anthropic and quickly becoming an industry standard, MCP creates a universal way for large language models to securely access information and execute actions across different systems.

Technology has moved beyond simple API validation into an era of context-aware AI-driven interactions. When Claude Desktop connects to your local machine through an MCP server, it’s establishing a sophisticated dialogue where context flows bidirectionally. The AI understands what tools are available, what parameters they need, and how responses should be interpreted.

Testing MCP servers is essential for reliable AI integration with the tools we rely on every day. A misconfigured MCP server doesn’t just return an error message: it can disrupt an entire workflow. Performance can slow to a crawl, sensitive data can be exposed through improper authentication, and AI agents can produce confusing results when they misinterpret responses.

This article reviews the landscape of MCP server testing tools, from traditional approaches still useful today to the all-in-one modern platform that’s redefining how teams test in the age of AI. We’ll explore five categories of tools, understand their strengths and limitations, and discover why comprehensive platforms like Testomat.io are becoming essential for teams building the future of AI applications.

MCP Testing Fundamentals

What makes MCP different from typical API testing? The answer lies in the protocol’s purpose and complexity. While traditional HTTP servers simply respond to requests, MCP servers provide ongoing conversations with AI agents. They share context about available capabilities, negotiate which tools to invoke, and must gracefully handle edge cases that emerge from natural language interactions.

- The Model Context Protocol operates across various transport methods. Your MCP server might use stdio for local communication, server-sent events (SSE) for browser-based interactions, or HTTP for remote MCP server scenarios. Each transport type introduces different testing challenges.

- Context sharing between LLMs and external tools creates unique validation requirements. Core MCP testing objectives span multiple dimensions. Protocol compliance ensures your server speaks MCP correctly, following the official specification for tool registration, invocation, and response formatting.

- Performance under load matters more than many developers initially realize. AI agents might make rapid-fire tool calls when reasoning through complex problems. Your MCP server needs to handle concurrent requests without degradation.
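As a rough illustration of the protocol-compliance objective above, the sketch below checks a `tools/list` response for the JSON-RPC envelope and for the `name` and `inputSchema` fields on each tool. The function name `check_tools_list_response` and the sample payload (including the `search_tickets` tool) are hypothetical, and these few checks are an assumption about what a first pass might cover; a real suite would validate against the full official schema.

```python
import json


def check_tools_list_response(raw: str) -> list[str]:
    """Return a list of problems found; an empty list means these checks passed."""
    try:
        msg = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc}"]
    if not isinstance(msg, dict):
        return ["top-level message is not a JSON object"]

    problems = []
    if msg.get("jsonrpc") != "2.0":
        problems.append('"jsonrpc" must be "2.0"')
    if "id" not in msg:
        problems.append('response is missing "id"')

    result = msg.get("result")
    tools = result.get("tools") if isinstance(result, dict) else None
    if not isinstance(tools, list):
        problems.append('"result.tools" must be a list')
        return problems

    for i, tool in enumerate(tools):
        if not isinstance(tool, dict):
            problems.append(f"tool #{i} is not an object")
            continue
        if not isinstance(tool.get("name"), str) or not tool["name"]:
            problems.append(f"tool #{i} has no name")
        schema = tool.get("inputSchema")
        if not isinstance(schema, dict) or schema.get("type") != "object":
            problems.append(f'tool #{i} needs an "inputSchema" of type "object"')
    return problems


# Hypothetical sample response, for illustration only.
SAMPLE = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "result": {"tools": [
        {"name": "search_tickets", "inputSchema": {"type": "object"}},
        {"name": "broken_tool"},  # missing inputSchema on purpose
    ]},
})

for problem in check_tools_list_response(SAMPLE):
    print(problem)
```

Returning a list of problems instead of raising on the first one makes it easier to report every schema issue in a single test run.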

Security and authorization checks protect sensitive data from unauthorized access. MCP servers often gate access to internal systems, databases, or APIs. You must verify that authentication mechanisms work correctly, permissions are properly enforced, and error messages don’t leak information that could help attackers.
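One way to turn the "error messages don’t leak information" requirement into an automated check is to scan error text for patterns that should never reach a client. The sketch below is only an illustration: `LEAK_PATTERNS` and `find_leaks` are hypothetical names, and the regular expressions are assumptions that you would tune to what counts as sensitive in your own system.

```python
import re

# Hypothetical patterns; adjust them to your own definition of "sensitive".
LEAK_PATTERNS = {
    "stack trace": re.compile(r"Traceback \(most recent call last\)"),
    "file path": re.compile(r"(?:/[\w.-]+){2,}|[A-Za-z]:\\"),
    "credential": re.compile(r"(?i)(password|secret|api[_-]?key|token)\s*[=:]"),
}


def find_leaks(error_message: str) -> list[str]:
    """Return the labels of all patterns that match the given error text."""
    return [label for label, pattern in LEAK_PATTERNS.items()
            if pattern.search(error_message)]


# A generic message should produce no findings.
print(find_leaks("Invalid arguments for tool"))

# A message that echoes internals should be flagged.
print(find_leaks('Traceback (most recent call last):\n  File "/srv/app/db.py", line 10'))
```

A check like this can run against every error response your functional tests already collect, so it adds coverage without new test scenarios.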

Classical Tools Still Useful for MCP Testing

Traditional API testing tools remain valuable at the transport layer, even if they weren’t designed specifically for the Model Context Protocol. Teams already familiar with these tools can adapt their existing workflows to cover basic MCP validation.

Postman

Postman excels at sending structured JSON requests to MCP endpoints and visualizing the responses. When your MCP server uses HTTP as its transport type, Postman becomes a great way to verify basic functionality. You can craft tool invocation requests, check response formatting, and validate that authentication headers work as expected.

The visual interface helps during development. Instead of reading raw JSON in a terminal, you see formatted responses with syntax highlighting. Collections let you organize different test scenarios: one for tool listing, another for the various data sources you’re exposing, and another for endpoint error handling.
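MCP messages are JSON-RPC 2.0 objects, so the request bodies you paste into an HTTP client follow a predictable shape. The sketch below builds a `tools/list` and a `tools/call` body; the helper names, the `search_tickets` tool, and its arguments are hypothetical, and depending on the transport your server may expect an `initialize` exchange before it accepts these requests.

```python
import json
from itertools import count

_request_ids = count(1)  # simple incrementing JSON-RPC request ids


def tools_list_request() -> str:
    """Build the body of a request that asks the server which tools it offers."""
    return json.dumps(
        {"jsonrpc": "2.0", "id": next(_request_ids), "method": "tools/list"},
        indent=2,
    )


def tools_call_request(tool_name: str, arguments: dict) -> str:
    """Build the body of a request that invokes one tool with named arguments."""
    return json.dumps(
        {
            "jsonrpc": "2.0",
            "id": next(_request_ids),
            "method": "tools/call",
            "params": {"name": tool_name, "arguments": arguments},
        },
        indent=2,
    )


# Hypothetical tool name and arguments, for illustration only.
print(tools_call_request("search_tickets", {"query": "login error", "limit": 10}))
```

Saving one generated body per scenario in a collection gives you a repeatable set of requests for tool listing, valid invocations, and deliberate error cases.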

SoapUI

SoapUI offers more sophisticated testing capabilities including chained requests and validation scripts. Teams adapting old workflows to MCP find SoapUI’s scripting features useful for automating complex test sequences. You can write Groovy scripts that invoke multiple tools in sequence, validate intermediate results, and verify that context properly flows between calls.

If your MCP server acts as a bridge to legacy enterprise systems, SoapUI’s ability to handle both modern JSON and older XML formats proves valuable. You can test the entire chain from MCP request through to the underlying system and back.

SoapUI lacks native MCP awareness. You’re essentially testing HTTP behavior rather than the higher-level protocol semantics that matter most for AI agent integration.

JMeter

When you need to benchmark performance under concurrent tool calls, JMeter simulates multiple user interactions for stress testing. You can configure it to fire hundreds of simultaneous requests at your MCP server, measuring response times and identifying bottlenecks.

For teams already using JMeter in their QA process, extending test plans to cover MCP endpoints requires minimal new learning. The statistical reporting helps you understand how performance degrades under load, which is crucial information when deploying MCP servers that will serve multiple AI agents simultaneously.

The limitation is that JMeter focuses purely on performance. It measures speed and throughput but doesn’t validate protocol compliance or functional correctness.
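JMeter is configured through its own test plans, so the following is not a JMeter example. Purely to illustrate the idea of concurrent tool calls and latency measurement, here is a minimal Python sketch. `fake_tool_call` is a hypothetical stand-in that only sleeps; you would replace it with a real request to your server.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def fake_tool_call(i: int) -> dict:
    """Hypothetical stand-in for a real tool invocation against your server."""
    time.sleep(0.01)
    return {"ok": True, "request": i}


def run_load(call, total: int, concurrency: int) -> dict:
    """Run `total` calls with up to `concurrency` in flight and summarize latency."""

    def timed(i: int):
        start = time.perf_counter()
        try:
            call(i)
            ok = True
        except Exception:
            ok = False  # count the failure but keep the run going
        return time.perf_counter() - start, ok

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(timed, range(total)))

    latencies = sorted(duration for duration, _ in results)
    return {
        "requests": total,
        "errors": sum(1 for _, ok in results if not ok),
        "p50_ms": statistics.median(latencies) * 1000,
        # Approximate 95th percentile using a simple rank lookup.
        "p95_ms": latencies[int(0.95 * (len(latencies) - 1))] * 1000,
        "max_ms": latencies[-1] * 1000,
    }


print(run_load(fake_tool_call, total=50, concurrency=10))
```

Comparing the summary at several concurrency levels shows where latency starts to climb, which is the same question a JMeter test plan answers at larger scale.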

Modern Tools Designed for MCP-Specific Validation

The next generation of testing tools understands the Model Context Protocol natively. These tools were built specifically to validate MCP server development best practices and catch protocol-level issues that generic API testers miss. The best known of them, MCP Inspector, is an interactive UI and proxy that lets developers connect to and debug MCP servers (that is, applications built on the MCP standard).

It makes debugging MCP servers much easier: you can see which tools your server offers, what their inputs and outputs look like, and where things break. That lets you check what your server receives, sends, and calls before plugging it into an AI client.

MCP Inspector

The MCP Inspector represents the official and most popular tool for inspecting and debugging MCP servers. Developed by Anthropic alongside the protocol itself, it provides real-time communication logs, protocol validation, and support for different transport protocols including stdio, SSE, and remote connections.

The Inspector UI shows you exactly what’s happening during MCP interactions. When an AI agent connects to your server, you see the tool discovery process, watch as tools get invoked with specific parameters, and observe the responses flowing back. Color-coded logs help distinguish between different message types: tool listings appear differently than tool invocations, which look different from error messages.

Protocol validation catches common mistakes automatically. If your server returns a tool schema with missing required fields, MCP Inspector flags it immediately. If response formatting doesn’t match the specification, you see clear error messages explaining what’s wrong. This immediate feedback dramatically accelerates MCP server development compared to debugging through opaque AI agent failures.

The main limitation is collaboration. MCP Inspector works beautifully for individual developers debugging their servers, but it lacks features for team-based testing, historical tracking, or integration with CI/CD automation. It’s a development tool, not a complete testing platform.

Tester MCP Client

The Tester MCP Client from Apify provides a lightweight open-source option for issuing test commands to your MCP server. This MCP client focuses on functional smoke testing and quick sanity checks during development. You write simple test scripts that connect to your server, invoke specific tools, and verify that responses meet basic expectations.

The tool excels at verifying tool response quality and latency without requiring full system setup. Instead of launching Claude Desktop or another AI agent, you run the test client directly against your server. This speeds up the development feedback loop: make a change, run the test client, see results in seconds.

Configuration stays minimal by default. A simple JSON or YAML config file specifies your server’s location and authentication details. The client handles the protocol handshake, tool discovery, and invocation mechanics. You focus on defining what to test rather than how to communicate with your server.

FastMCP Client

The FastMCP Client targets a specific but important use case: unit-level testing of MCP server logic during builds. By eliminating network delays and running tests in-memory on your local machine, it focuses purely on validating business logic without transport-layer complications.

This approach proves ideal for CI/CD automation testing. Your build pipeline can spin up tests that verify core functionality without needing to manage separate server processes, open network ports, or deal with authentication complexity. Tests run fast, fail fast, and provide immediate feedback to developers.

The in-memory architecture also makes tests more reliable. Network issues, port conflicts, and timing problems that plague traditional integration tests disappear. Your tests become deterministic — they pass or fail based on your code’s correctness, not environmental factors.
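To make the in-memory idea concrete, the sketch below calls a request handler directly as a Python function, with no process, port, or network in between. It is deliberately not the FastMCP API: the `TOOLS` registry, the `tool` decorator, the `add` tool, and the `handle` dispatcher are all hypothetical stand-ins for your own server logic.

```python
# Hypothetical in-process tool registry; this is NOT the FastMCP API.
TOOLS = {}


def tool(fn):
    """Register a plain function as a callable tool under its own name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def add(a: int, b: int) -> int:
    return a + b


def _error(request_id, code: int, message: str) -> dict:
    return {"jsonrpc": "2.0", "id": request_id,
            "error": {"code": code, "message": message}}


def handle(request: dict) -> dict:
    """Dispatch a JSON-RPC style request directly, with no transport involved."""
    request_id = request.get("id")
    if request.get("method") != "tools/call":
        return _error(request_id, -32601, "Method not found")
    params = request.get("params", {})
    fn = TOOLS.get(params.get("name"))
    if fn is None:
        return _error(request_id, -32602, f"Unknown tool: {params.get('name')}")
    try:
        value = fn(**params.get("arguments", {}))
    except TypeError as exc:
        # Raised for missing or unexpected arguments (and for TypeErrors in the tool).
        return _error(request_id, -32602, f"Invalid params: {exc}")
    return {"jsonrpc": "2.0", "id": request_id,
            "result": {"content": [{"type": "text", "text": str(value)}],
                       "isError": False}}


def call(name: str, arguments: dict) -> dict:
    """Small test helper that wraps a tool call in a request envelope."""
    return handle({"jsonrpc": "2.0", "id": 1, "method": "tools/call",
                   "params": {"name": name, "arguments": arguments}})


# Unit-style checks that run entirely in memory.
assert call("add", {"a": 2, "b": 3})["result"]["content"][0]["text"] == "5"
assert call("missing_tool", {})["error"]["code"] == -32602
```

Because nothing here depends on timing or sockets, the same assertions give the same result on a laptop and in a build pipeline.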

The All-in-One Solution: Testomat.io

While specialized tools handle specific aspects of MCP testing, comprehensive platforms take a different approach. Testomat.io represents the evolution toward unified, AI-aware test management that treats MCP as one component in a broader quality assurance strategy.

Unified MCP Test Management

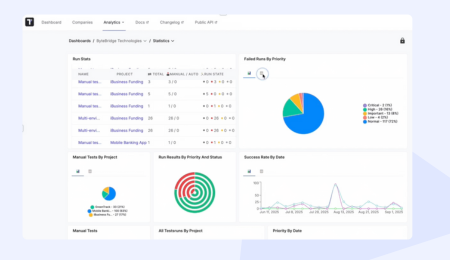

Testomat.io centralizes test case design, execution, and analytics alongside your other testing needs. Teams can manage functional tests, security checks, and performance benchmarks under one interface rather than juggling multiple tools with separate workflows and data silos.

This unification matters more as systems grow complex. Modern applications might include traditional HTTP APIs, GraphQL endpoints, MCP servers for AI integration, and browser-based UI components. Managing tests for all these layers in separate tools creates coordination overhead and gaps in coverage. A unified platform ensures nothing falls through the cracks.

Organization features help large teams maintain order. You can group tests, including your MCP tests, by transport type, by the data sources they access, or by the AI agents they’re designed to work with. Tags let you mark tests as regression checks, smoke tests, or security validations. This structure makes it easy to run specific test subsets based on what changed in your latest deployment.

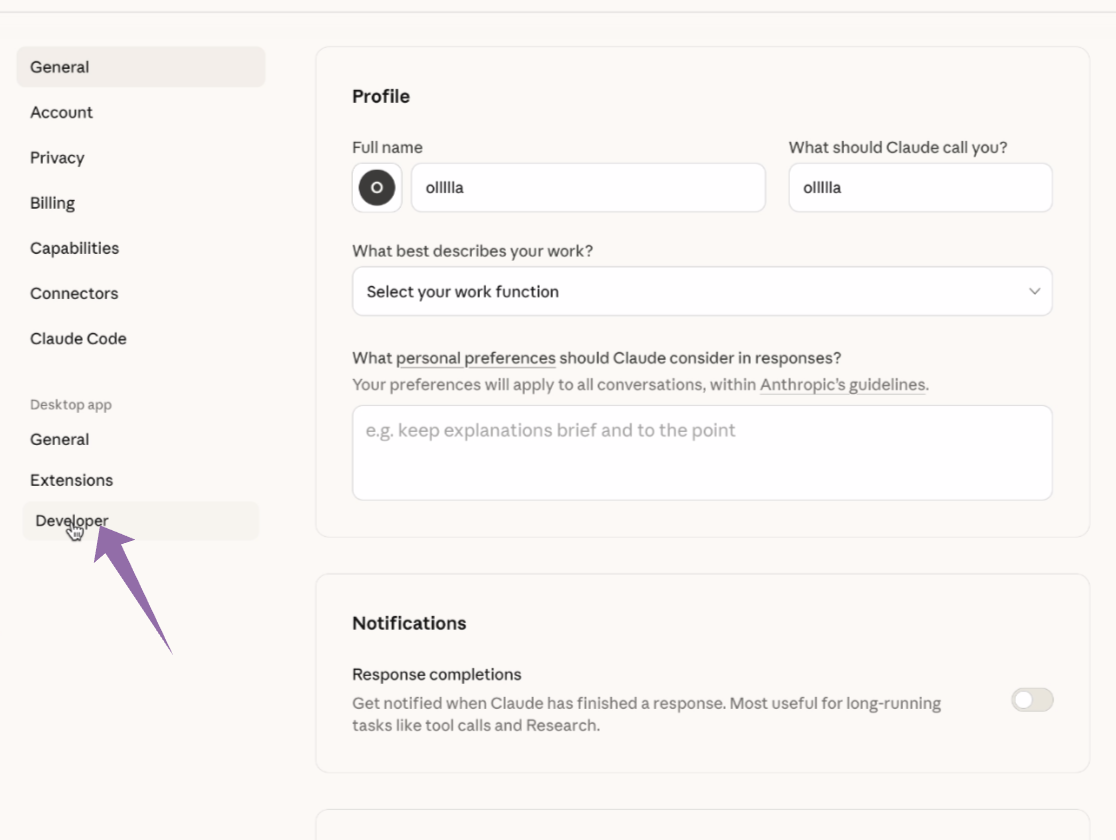

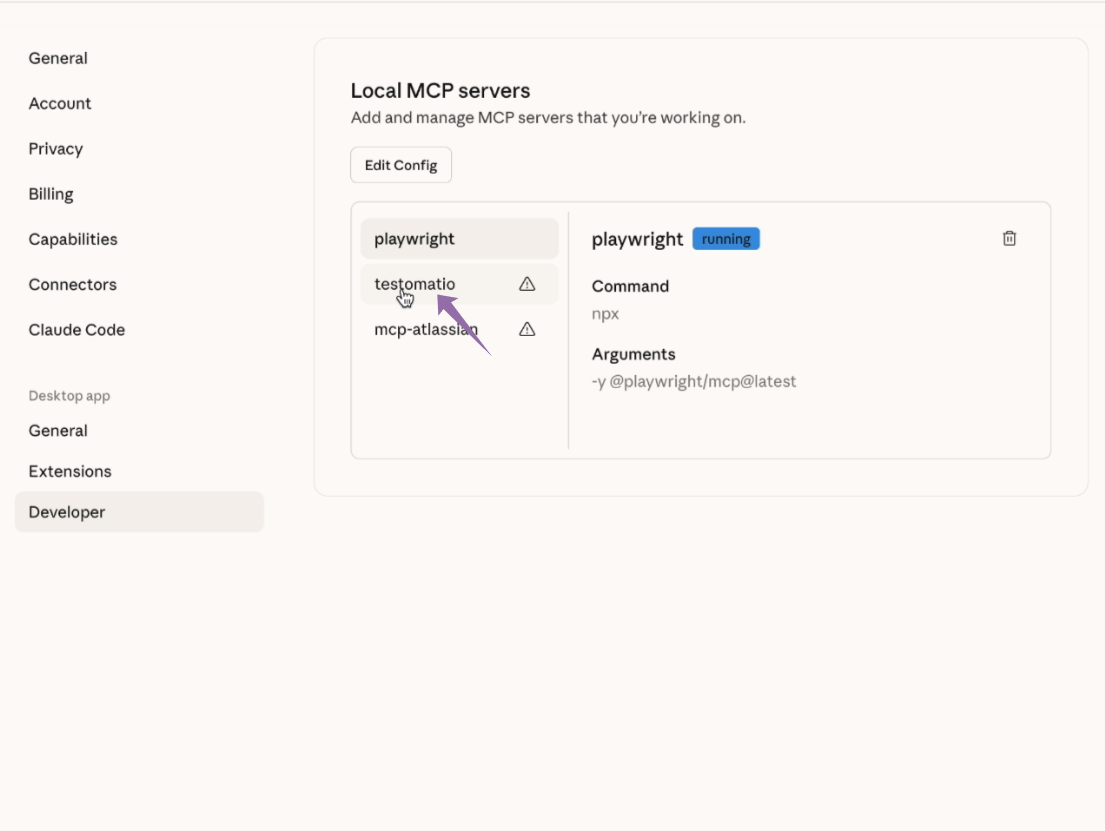

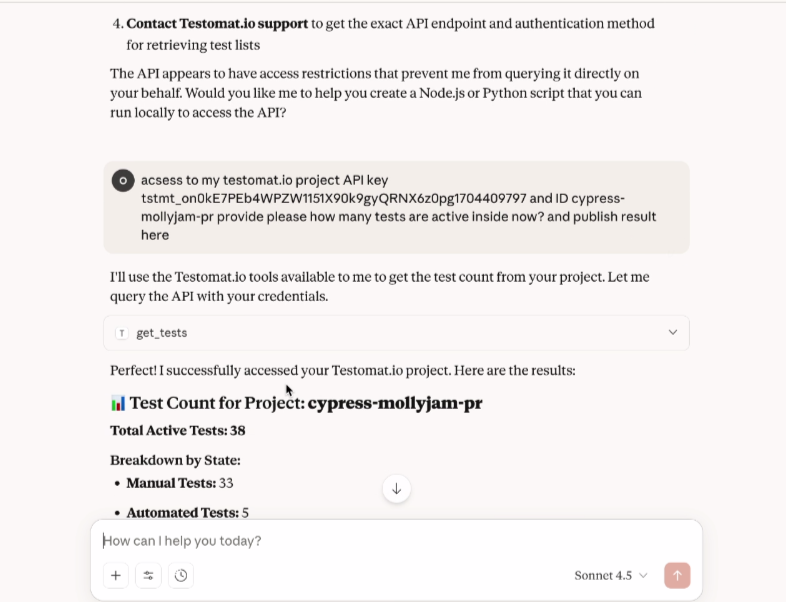

How the MCP server works in Testomat.io (example with Claude)

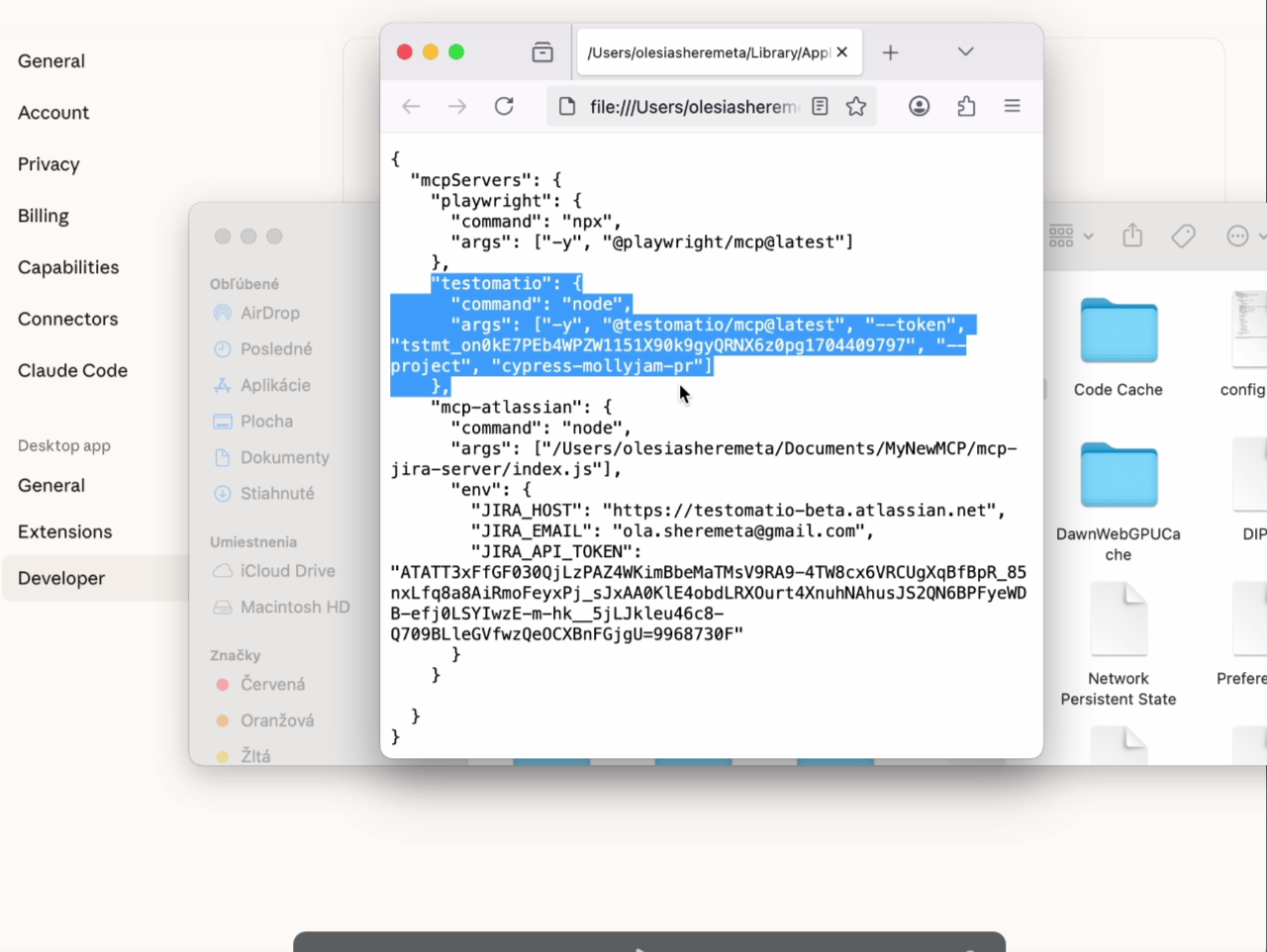

To connect Claude with Testomat.io using MCP, open your Claude settings and navigate to “Developer”.

Add the Testomat.io JSON configuration into the MCP section, then save your changes.

Once added, you can test the connection to confirm everything works correctly. If the test passes, you’re all set.

AI-Driven Test Creation

The AI agent built into Testomat.io analyzes your test cases and generates missing test cases automatically. It examines your server’s tool definitions, understands the parameters each tool accepts, and suggests validation logic for both valid scenarios and edge cases you might not have considered.

This generative capability accelerates the testing process dramatically. Instead of manually writing dozens of test cases to cover parameter variations, the AI generates them based on the schemas and tests implemented in your MCP server. If a tool accepts a “limit” parameter that should be between 1 and 100, the AI creates tests for valid values, boundary cases at 1 and 100, and invalid cases at 0, 101, and non-numeric inputs.
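The "limit" example can be sketched in a few lines. This is not how Testomat.io generates its tests; it is only an illustration of boundary-value selection, and the names `boundary_cases` and `is_valid_limit` are hypothetical.

```python
def boundary_cases(minimum: int, maximum: int) -> dict:
    """Pick valid and invalid sample values around an inclusive integer range."""
    midpoint = (minimum + maximum) // 2
    return {
        "valid": [minimum, midpoint, maximum],
        "invalid": [minimum - 1, maximum + 1, "abc"],
    }


def is_valid_limit(value, minimum: int = 1, maximum: int = 100) -> bool:
    """Hypothetical validator for a 'limit' parameter with an inclusive range."""
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(value, bool) or not isinstance(value, int):
        return False
    return minimum <= value <= maximum


cases = boundary_cases(1, 100)
print(cases)

# Every generated valid value should pass, every invalid value should fail.
assert all(is_valid_limit(v) for v in cases["valid"])
assert not any(is_valid_limit(v) for v in cases["invalid"])
```

In a real suite each generated value would become one tool invocation, with the expectation that invalid values produce a clear error rather than a crash or a silently clamped result.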

Natural language understanding means you can describe what you want to test conversationally. Tell the system “create tests that verify error handling when data sources are unavailable,” and it generates appropriate test cases. This lowers the barrier for team members who understand the requirements but aren’t testing experts.

End-to-End Reporting & Traceability

Testomat.io correlates MCP test results, execution logs, and CI/CD data in a single comprehensive report. When a deployment includes both code changes and configuration updates, you see exactly which tests validate which aspects of the change. This traceability proves essential when debugging complex issues or demonstrating compliance.

The platform supports two-way Jira synchronization for issue tracking. When a test fails, you can create a Jira ticket directly from the test result. The ticket automatically includes relevant context: the test case details, failure logs, and links back to the test execution. When developers fix the issue and mark the ticket resolved, that status flows back to Testomat.io so QA teams know to verify the fix.

Security and Compliance

Testomat.io integrates with enterprise policies to ensure data privacy and AI usage transparency. When the Testomat.io MCP server accesses sensitive data, you can be confident that test execution doesn’t leak information or violate regulations. The platform provides visibility showing exactly what tests ran, who initiated them, and what data they accessed.

Role-based access control ensures that team members only see and execute tests appropriate to their responsibilities. Junior developers might run functional tests while senior security staff control and review secure test suites. This separation of concerns prevents accidental or malicious misuse of testing capabilities.

Encryption protects test data both in transit and at rest. When your tests include sample data that mirrors production information, that data receives the same protection as production systems. This makes it safe to create realistic test scenarios without compromising security.

Compliance reporting helps organizations meet regulatory requirements. Whether you’re subject to SOC 2, ISO 27001, GDPR, or industry-specific regulations, Testomat.io can generate reports demonstrating that you’re testing security controls, validating data handling procedures, and maintaining appropriate documentation.

With Testomat.io, teams orchestrate, optimize, and scale the entire AI testing lifecycle in one unified workspace.

Comparing Tools: Finding the Right Fit

Each testing approach has its place in the MCP ecosystem. Understanding where tools excel and where they fall short helps you build an effective testing strategy.

| Tool | Primary Focus | Best For | Strengths | Limitations |
| --- | --- | --- | --- | --- |
| MCP Inspector | Protocol validation | Manual developer testing during MCP server development | Real-time feedback on protocol compliance; immediate visibility during development | No collaboration or history tracking; lacks automation for long-term QA |
| Tester MCP Client | Command testing and local validation | Quick smoke tests during development | Simple, fast validation workflow; minimal setup for verifying recent changes | No advanced analytics or trend insights; limited context for QA decisions |
| Postman / similar HTTP tools | API-level testing | Teams already using Postman for APIs | Cross-technology API validation; easy to extend to HTTP-based MCP servers | Tests at a lower level, with no semantic understanding of tool discovery or invocation |
| JMeter / load testing tools | Load, stress, and performance testing | Measuring concurrency and response times under load | Detailed performance metrics; excellent for scalability assessment | No functional validation; doesn’t confirm correctness of responses |
| Testomat.io | Comprehensive MCP testing (functional + performance + AI diagnostics) | Enterprise-grade QA and AI-driven teams | Combines protocol, functional, and performance testing; collaboration, reporting, and automation built in; AI diagnostics and deep analytics; integrates with CI/CD and dev workflows | Broader scope may be more than small solo projects require |

Best Practices for MCP Server Testing

Effective MCP testing combines multiple approaches into a cohesive strategy. Protocol validation ensures your server speaks MCP correctly, but functional automation verifies it does useful work. Neither alone is sufficient; you need both.

- Start with the official MCP Inspector during development. Before writing formal tests, use the Inspector UI to manually verify that your server behaves correctly. See the tool discovery process work, watch tool invocations succeed with various parameters, and understand what normal looks like. This hands-on exploration informs better test case design.

- Regularly review logs for model drift and inconsistent responses. As your MCP server evolves and the data sources behind it change, response patterns might shift. Automated monitoring catches gradual degradation before it impacts users. Look for increasing error rates, changing response times, or subtle shifts in response formatting that might confuse AI agents.

- Integrate test reporting directly with project management tools like Jira or Azure DevOps. This connection ensures that testing insights drive development priorities rather than languishing in separate systems. When tests reveal issues, those issues become trackable work items that move through your standard workflow.

- Test across different transport protocols even if you only plan to use one initially. Your architecture might evolve — a server that starts as stdio-based for local testing might need HTTP support for cloud deployment. Testing multiple transports early prevents surprises during migration.

- Include security testing from the start. MCP servers often access sensitive data or perform privileged operations. Verify that authentication works correctly, authorization prevents unauthorized tool access, and error messages don’t leak information. Regular security assessments catch vulnerabilities before they become breaches.
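The advice above about watching for subtle shifts in response formatting can be approximated with a structural comparison. The sketch below reduces a JSON-like response to a set of path and type entries and diffs it against a saved baseline. The `shape` and `drift` helpers and both sample responses are hypothetical, and this is only one possible approach to drift detection.

```python
def shape(value, path: str = "$") -> set[str]:
    """Collect 'path:type' entries that describe the structure of a JSON-like value."""
    if isinstance(value, dict):
        entries = {f"{path}:object"}
        for key, child in value.items():
            entries |= shape(child, f"{path}.{key}")
        return entries
    if isinstance(value, list):
        entries = {f"{path}:array"}
        for child in value:
            entries |= shape(child, f"{path}[]")
        return entries
    return {f"{path}:{type(value).__name__}"}


def drift(baseline, current) -> dict:
    """Report structural entries that disappeared from or were added to a response."""
    before, after = shape(baseline), shape(current)
    return {"missing": sorted(before - after), "added": sorted(after - before)}


# Hypothetical responses recorded at two points in time.
baseline = {"content": [{"type": "text", "text": "3 results"}], "isError": False}
current = {"content": [{"type": "text", "text": "3 results", "extra": 1}]}

print(drift(baseline, current))
```

Because only structure is compared, ordinary changes in the returned text do not trigger a report, while a dropped field or a changed type does.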

The Future of MCP Testing

As AI integrations expand across industries, the Model Context Protocol will become a universal testing layer connecting AI agents to enterprise systems. We’re witnessing the early days of this transformation, and testing practices will evolve rapidly alongside the technology.

AI agents executing and self-healing tests represent one clear direction. Imagine AI agents that analyze test failures, hypothesize root causes, automatically generate additional tests to confirm their theories, and even suggest fixes. The boundary between testing and development blurs as intelligent systems take on more of the routine work.

Deeper MCP-native analytics dashboards will emerge as tools mature. Current dashboards show test pass/fail rates and performance metrics. Future versions will understand MCP-specific patterns like which tools get invoked most frequently, how context flows between chained tool calls, and where AI agents struggle to use your server effectively. These insights will drive both server improvements and better AI agent training.

AI-guided compliance and security frameworks will help organizations navigate the complex regulatory landscape around AI systems. As governments implement AI regulations, testing platforms will incorporate checks that verify compliance automatically. You’ll prove that your MCP server meets requirements not through manual audits but through continuous automated validation.

The Testomat.io roadmap includes full MCP protocol support across its AI Agent ecosystem. The platform is evolving alongside the Model Context Protocol, ensuring users stay current with best practices.

Getting Started with MCP Testing

Ready to begin testing your MCP servers effectively? Start by installing the MCP Inspector from npm and exploring your server’s behavior manually. Understand the protocol handshake, observe tool discovery, and watch how invocations work. This hands-on experience provides essential context for everything that follows.

Next, write basic functional tests using a lightweight client or your existing API testing tools. Cover the happy path first — successful tool invocations with valid parameters. Then expand to edge cases, error conditions, and boundary values. Build confidence that core functionality works before investing in sophisticated testing infrastructure.

As your MCP server matures and your team grows, transition to a comprehensive testing platform. Evaluate solutions based on how well they integrate with your existing workflows, support collaboration across your team, and provide the analytics you need for data-driven quality decisions.

The investment in proper MCP testing pays dividends in reliability, security, and team velocity. Start small, build incrementally, and evolve your approach as you learn what works for your specific context. The tools exist; now it’s time to use them effectively.

Future-proof your QA with Testomat.io — the AI-ready, MCP-native test management platform built for the next generation of intelligent testing.