The Testomatio team is expanding its AI capabilities, consistently implementing a variety of AI-driven reports. One of them is the Run Summary Report.

The Run Summary Report automatically generates an intelligent, actionable overview from test execution data.

🔴 Note: it works at the level of a particular Run.

As you can see on the screen, the AI Run Summary Report highlights what truly matters and might otherwise stay hidden: it surfaces failures, detects patterns, and instantly proposes suggestions. These concise insights enable QA, developers, and stakeholders to make faster and more confident release decisions. In addition, QA teams save the time they would otherwise spend writing reports manually.

How to generate the Run Summary Report?

The Run Summary Report is an intelligent feature built into the Run Report, and it is presented as an AI-powered Agent Assistant. To generate an AI-driven Run Summary Report, press the Run Status Report button in the upper right-hand corner. After a few seconds, the result should appear with an AI overview. Because it is an agent, you can then ask follow-up questions in the chat window to receive more detailed feedback, or deepen and customize the report to the level you need by supplying additional context.

Example prompt use cases:

- Generate sprint summary reports: “Show test coverage and pass/fail rates for this release”.

- Create defect-focused reports: “Highlight critical bugs from Gauge test runs”.

In the first case, you get the pass rate, a coverage estimate with an assessment of whether it is acceptable, and the gaps that still remain uncovered.

In the second case, you can get a list of critical bugs, including links to cross-suite risk areas, along with suggestions on the best actions to take.

If you are not satisfied with the results, you can easily regenerate them using the Regenerate button. The results are saved as an insight, which you can review later in the insights section on the Tests page.

Instead of reviewing every failed test case individually, use the extra Clusterize Run Errors option, which clusters failures that share the same root cause.
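To illustrate the general idea of grouping failures by root cause, here is a minimal sketch. It is not Testomatio's implementation: the `normalize_error` and `cluster_failures` helpers and the sample failures are hypothetical, and the grouping relies on a simple assumption that errors with the same text, once volatile details are masked, share a cause.

```python
import re
from collections import defaultdict


def normalize_error(message: str) -> str:
    """Reduce an error message to a signature by masking volatile details."""
    signature = re.sub(r"0x[0-9a-fA-F]+", "<hex>", message)  # memory addresses
    signature = re.sub(r"\d+", "<n>", signature)  # ids, timeouts, status codes
    signature = re.sub(r"'[^']*'|\"[^\"]*\"", "<str>", signature)  # quoted values
    return signature.strip().lower()


def cluster_failures(failures):
    """Group (test_name, error_message) pairs by normalized error signature."""
    clusters = defaultdict(list)
    for test_name, message in failures:
        clusters[normalize_error(message)].append(test_name)
    return dict(clusters)


# Hypothetical failed tests from a single run.
failures = [
    ("login works", "TimeoutError: waited 5000ms for '#submit'"),
    ("checkout works", "TimeoutError: waited 3000ms for '#pay'"),
    ("profile loads", "AssertionError: expected 200 but got 500"),
]

for signature, tests in cluster_failures(failures).items():
    print(f"{len(tests)} test(s): {signature} -> {tests}")
```

In this sample, the two timeout failures fall into one cluster and the assertion failure into another, so a reviewer looks at two root causes instead of three separate tests.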

Key Run Summary Report Structure

- Run quick summary — identifies gaps or areas where tests may be missing, incomplete, or redundant.

- Risk areas — AI reviews failure logs and error patterns, flags flaky or unstable tests and pinpoints risky areas, helping teams debug faster.

- Stability summary – rates the current test stability proportionally. For example, if 8/21 tests failed in the test suite, the report indicates that script or application logic flaws persist. A typical verdict reads: no flaky tests detected in this run, but failures in the suite suggest script-level instability.

- Run trends – show stability across runs in comparison to prior stable reports and suggest the likely reason for any change.

- Actionable recommendations – provide insights such as which tests should be stabilized, removed, or expanded.
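The proportional stability rating and the run-to-run comparison described in the list above can be sketched with simple arithmetic. This is an illustration only: the `stability` and `trend` functions, the 2% tolerance, and the previous run's numbers are assumptions, not the formula Testomatio uses.

```python
def stability(passed: int, total: int) -> float:
    """Share of tests that passed in a run, between 0.0 and 1.0."""
    if total <= 0:
        raise ValueError("total must be positive")
    return passed / total


def trend(current: float, previous: float, tolerance: float = 0.02) -> str:
    """Compare two stability values; small differences count as stable."""
    delta = current - previous
    if delta > tolerance:
        return "improving"
    if delta < -tolerance:
        return "degrading"
    return "stable"


# The 8/21 failures example: 13 of 21 tests passed.
current = stability(passed=21 - 8, total=21)
# Hypothetical previous run in which 19 of 21 tests passed.
previous = stability(passed=19, total=21)

print(f"Current stability: {current:.1%}")  # 61.9%
print(f"Trend vs previous run: {trend(current, previous)}")  # degrading
```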

What's next?

→ Share concise executive summaries with stakeholders during release planning or retrospectives.

→ Archive AI-generated reports for compliance, audits, or historical tracking of project quality.

Also, you are free to customize reports for different audiences, such as detailed technical reports for developers or high-level summaries for management.

Benefits of AI-generated Reports

- Effortless reporting. Teams can create complex reports with simple prompts, saving time on manual data compilation and formatting.

- Stakeholder clarity. Clear, professional reports with AI-curated insights make it easy for non-technical stakeholders to understand test outcomes.

- Scalability. AI reports process large volumes of test data and highlight only the most relevant information.

- Decision-making support. AI-driven insights highlight critical metrics and trends, enabling teams to focus on high-impact issues during sprint reviews or release planning.

- Shareability. Export reports as PDFs for easy distribution via email, presentations, or audits, ensuring universal compatibility.

Associated Test Management AI Report functionality

- Reporters by Testing Frameworks – the test management app downloads all the test artifacts from S3 storage and places them in test reports. The user only has to go to the desired report and click on the test of interest. The data in the reports is presented in a user-friendly format, making it understandable to the entire development team. View the results of end-to-end, integration, unit, and API tests and compare them with the expected results. Artifacts attached to the test result reports and documents provide a clear view of the errors that occurred.

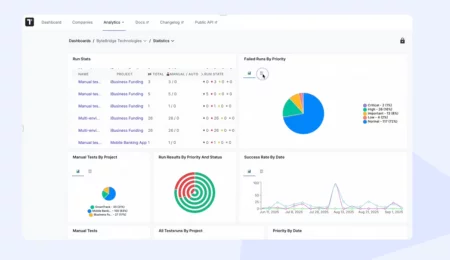

- Automated Tests Analytics – we offer detailed test analytics. QAs can analyze test results by status, check the percentage of coverage by automated tests, and intelligently prioritize their work. You can track flaky tests, never-run tests, ever-failing tests, environment statistics, and more.
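As a rough illustration of how analytics categories such as flaky, never run, and always failing can be derived from run history, consider the sketch below. The `classify` function, the status strings, and the sample data are hypothetical, and the definitions are common conventions rather than Testomatio's exact rules.

```python
def classify(history):
    """Classify a test by its statuses across runs (oldest first)."""
    if not history:
        return "never run"
    outcomes = set(history)
    if outcomes == {"failed"}:
        return "ever-failing"  # failed in every recorded run
    if {"passed", "failed"} <= outcomes:
        return "flaky"  # both passed and failed across runs
    if outcomes == {"passed"}:
        return "stable"
    return "other"  # e.g. only skipped


# Hypothetical per-test run history.
histories = {
    "login works": ["passed", "failed", "passed"],
    "checkout works": ["failed", "failed"],
    "export report": [],
    "profile loads": ["passed", "passed"],
}

for name, history in histories.items():
    print(f"{name}: {classify(history)}")
```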