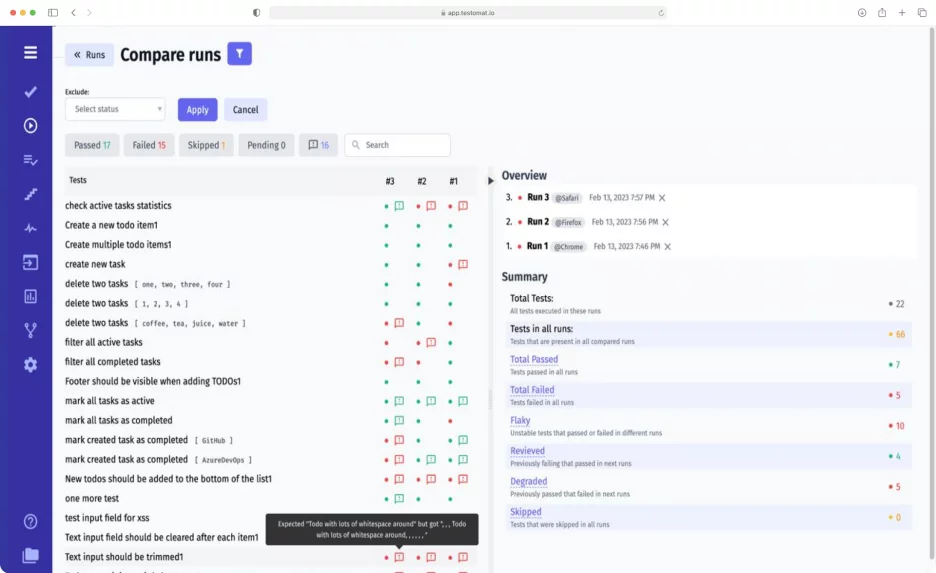

Since tests run at different times and in different environments, progress needs to be monitored constantly. With the Compare Test Runs feature, the team can view the results of several test runs side by side. This data helps the team draw conclusions about the project’s stage of development and make appropriate decisions.

How do I compare test runs?

Go to the Runs page and click Multiselection (the icon next to the search bar). Then select the test runs you want to compare. You can select two or more runs, up to all the runs on the page.

Now click Compare in the bottom menu. The Compare runs window opens and displays the test results for the selected runs, one column per run. You can compare any number of runs; expand the view to see all the columns.

On the Compare runs page you can:

- View all tests. On the left is the complete list of tests included in the selected runs. The runs are numbered; hover over a number to see the date and time of that run.

- Open any test. Clicking a test opens a window with all its details: Description, Code Template, Attachment, Runs, History, and Forks, giving you a complete picture of the test.

- Analyze test statuses across runs. Each test has its own status in each run. For convenience, statuses are color-coded: green for Passed, red for Failed, yellow for Skipped, and gray for Pending.

- Filter tests by status. To see only tests with a certain status, click that status. Alternatively, use the Filter option to exclude a specific status.

- Find tests with comments. Use the With message option to see tests that have comments. This works for tests of any type (automated or manual) and with any status. For example, a comment might describe the reason for a failure. Hover over a comment to read its text.

- Search for tests by title. Search is another way to narrow the list: enter a test name, and the system lists the matching tests.

- Study the Overview. On the right is the complete list of compared test runs with their names, dates, and times. To view the details of a run, click it.

- Study the Summary. Below the Overview is the Summary, which shows the total number of tests and how many fall into each category.

Note that the Summary is interactive. Click any metric (except Total Tests and Tests in all runs) to show the matching tests in the list on the left.
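The filters on the Compare runs page can be pictured as simple queries over a table of tests by runs. The Python sketch below is a minimal illustration under stated assumptions: the `Result` class, the `with_status`, `excluding_status`, `with_message`, and `search_title` helpers, and the rule that a status filter matches a test if any compared run has that status are all hypothetical, not the product's actual API or filtering logic.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Result:
    """Hypothetical cell of the Compare runs table: one test in one run."""
    status: str                    # "passed", "failed", "skipped" or "pending"
    message: Optional[str] = None  # optional comment, e.g. why a test failed


# Hypothetical comparison matrix: test title -> {run number -> Result}.
Matrix = dict[str, dict[int, Result]]


def with_status(matrix: Matrix, status: str) -> list[str]:
    """Tests that have the given status in at least one compared run."""
    return [t for t, runs in matrix.items()
            if any(r.status == status for r in runs.values())]


def excluding_status(matrix: Matrix, status: str) -> list[str]:
    """Tests that have the given status in none of the compared runs."""
    return [t for t, runs in matrix.items()
            if all(r.status != status for r in runs.values())]


def with_message(matrix: Matrix) -> list[str]:
    """Tests with a comment in at least one run, whatever their status."""
    return [t for t, runs in matrix.items()
            if any(r.message for r in runs.values())]


def search_title(matrix: Matrix, query: str) -> list[str]:
    """Case-insensitive substring search on test titles."""
    q = query.lower()
    return [t for t in matrix if q in t.lower()]


# Hypothetical data for two compared runs.
matrix: Matrix = {
    "Login with valid password": {1: Result("passed"),
                                  2: Result("failed", "Timeout on submit")},
    "Logout": {1: Result("passed"), 2: Result("passed")},
    "Reset password": {1: Result("skipped"), 2: Result("pending")},
}
print(with_status(matrix, "failed"))
print(search_title(matrix, "password"))
```

Whether a status filter should match "any run" or "every run" is a design choice; the sketch uses "any run" only as an illustrative assumption.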

Metrics for detailed analysis of test runs

The following metrics are available for you to examine in Summary:

- Total Tests. The total number of tests executed in these runs.

- Tests in all runs. Tests that are present in every compared run.

- Total Passed. Tests that passed in all runs.

- Total Failed. Tests that failed in all runs.

- Flaky. Tests with unstable results, passing in some runs and failing in others.

- Revived. Tests that failed earlier but passed in later runs.

- Degraded. Tests that passed earlier but failed in later runs.

- Skipped. Tests that were skipped in all runs.
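The Summary metrics can be illustrated by classifying each test's sequence of statuses, ordered from the oldest run to the newest. This Python sketch rests on assumptions the page does not spell out: the `classify` and `summarize` functions are hypothetical, a test absent from a run is recorded as `None`, only tests present in all runs are classified, and Flaky, Revived, and Degraded are told apart by counting pass/fail switches (one switch to passed is Revived, one switch to failed is Degraded, more than one is Flaky). The product may compute these metrics differently.

```python
from collections import Counter
from typing import Optional


def classify(statuses: list[str]) -> str:
    """Classify one test's statuses, ordered from the oldest run to the newest.

    Hypothetical rules, not the product's documented algorithm.
    """
    if not statuses:
        return "other"
    # Same status in every run: passed, failed, or skipped throughout.
    for label in ("passed", "failed", "skipped"):
        if all(s == label for s in statuses):
            return label
    # Only passed/failed results say anything about stability.
    outcomes = [s for s in statuses if s in ("passed", "failed")]
    # Count how often the outcome switches between consecutive results.
    switches = sum(1 for a, b in zip(outcomes, outcomes[1:]) if a != b)
    if switches == 0:
        return "other"  # e.g. passed once, skipped or pending elsewhere
    if switches == 1:
        return "revived" if outcomes[-1] == "passed" else "degraded"
    return "flaky"


def summarize(results: dict[str, list[Optional[str]]]) -> dict[str, int]:
    """Build Summary-style counts; None marks a test missing from a run."""
    in_all_runs = {t: s for t, s in results.items() if None not in s}
    counts = Counter(classify(s) for s in in_all_runs.values())
    return {
        "Total Tests": len(results),
        "Tests in all runs": len(in_all_runs),
        "Total Passed": counts["passed"],
        "Total Failed": counts["failed"],
        "Flaky": counts["flaky"],
        "Revived": counts["revived"],
        "Degraded": counts["degraded"],
        "Skipped": counts["skipped"],
    }


# Hypothetical results for three compared runs (oldest first).
results = {
    "Login": ["passed", "passed", "passed"],
    "Checkout": ["failed", "failed", "passed"],       # revived
    "Search": ["passed", "failed", "failed"],         # degraded
    "Upload avatar": ["passed", "failed", "passed"],  # flaky
    "Export PDF": ["skipped", "skipped", "skipped"],
    "Dark mode": ["passed", None, "passed"],          # missing from run 2
}
print(summarize(results))
```

Counting switches keeps the three stability categories mutually exclusive, so each classified test lands in exactly one bucket.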

Thus, the Compare Test Runs feature lets you build a summary report from the runs you select. These runs can contain manual tests, automated tests, or a combination of both (mixed runs). You can run a retrospective over a period of time and compare results across environments.

To analyze results even more efficiently, combine it with other features.

Functions that are useful in combination with Compare Test Runs

- Real-time reporting – results are added to reports as tests execute, so you can view them at any time without waiting for all tests to finish.

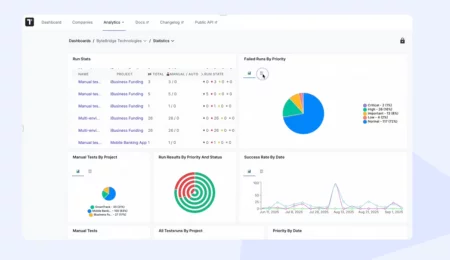

- Analytics – the system displays analytical metrics for each project. You can evaluate the results of your work in a particular area and get a complete picture of the project.

- Flaky tests – an analytics widget that displays the number of unstable tests. Such tests fail intermittently, slowing down progress on the project. By tracking them, you can fix problems in your product promptly.

- Test case execution – the test management system lets you run tests in different ways: manual, automated, and mixed runs, runs from Jira, and reruns. After running them, you can compare the resulting runs.