Every pull request, every line of code, every sprint, they all demand speed and scrutiny. When quality slips, users feel it. When review slows, releases back up.

Automated code review sits at the intersection of those two pressures. Testers now aren’t just validating features; they’re writing automation, reviewing code, and maintaining test suites under constant pressure to move fast. Whether you’re an SDET, AQA, or QA engineer juggling reviews, flaky tests, and legacy cleanups, the challenge is the same: how do you scale quality without burning out?

That’s where automated code review steps in. It doesn’t replace your judgment, it enhances it. By catching repetitive issues, enforcing standards, and removing review noise, it frees you to focus on what matters: writing resilient code and improving test strategy.

What Automated Code Review Really Does

An automated code review tool scans your source code using static code analysis. It checks for potential issues like:

- Security flaws

- Logic bugs

- Duplicate logic

- Poor naming conventions

- Noncompliance with best practices

- Violations of code style guides

- Excessive complexity

- Inefficient patterns

The tool then delivers immediate feedback inside your IDE, on the pull request, or in your CI pipeline, depending on how you’ve integrated it.
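To make “static code analysis” concrete, here is a minimal Python sketch of the kind of check these tools run. It uses the standard-library ast module to parse source without executing it, then flags function names that aren’t snake_case and functions with too many branches. The rule set, the MAX_BRANCHES threshold, and the branch count (a rough stand-in for cyclomatic complexity) are illustrative assumptions, not how any particular tool works.

```python
import ast
import re

# Illustrative rules; real linters ship hundreds of configurable checks.
SNAKE_CASE = re.compile(r"^_*[a-z][a-z0-9_]*$")
MAX_BRANCHES = 5  # hypothetical threshold
BRANCH_NODES = (ast.If, ast.For, ast.While, ast.Try, ast.BoolOp, ast.IfExp)


def review(source: str) -> list[str]:
    """Statically analyze Python source (never executing it) and return findings."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            continue
        # Naming convention check.
        if not SNAKE_CASE.match(node.name):
            findings.append(f"line {node.lineno}: function '{node.name}' is not snake_case")
        # Rough complexity check: count branching constructs inside the function.
        branches = sum(isinstance(child, BRANCH_NODES) for child in ast.walk(node))
        if branches > MAX_BRANCHES:
            findings.append(
                f"line {node.lineno}: '{node.name}' has {branches} branches (max {MAX_BRANCHES})"
            )
    return findings


print(review("def GetUser(id):\n    return id\n"))
# ["line 1: function 'GetUser' is not snake_case"]
```

Production tools apply the same principle (parse, walk, match patterns) with far richer rules and type information.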

Automated code review should run early and often — ideally on every commit or pull request. It’s especially useful in fast-paced teams, large codebases, or when enforcing consistent standards. The tools vary: formatters (like Prettier, Black), linters (ESLint, Pylint), AI-powered review bots (like CodeGuru or DeepCode), and analytics dashboards (like SonarQube, CodeClimate). These tools don’t get tired, forget checks, or skip reviews. That consistency compounds over time — leading to cleaner code, faster onboarding, and better collaboration.

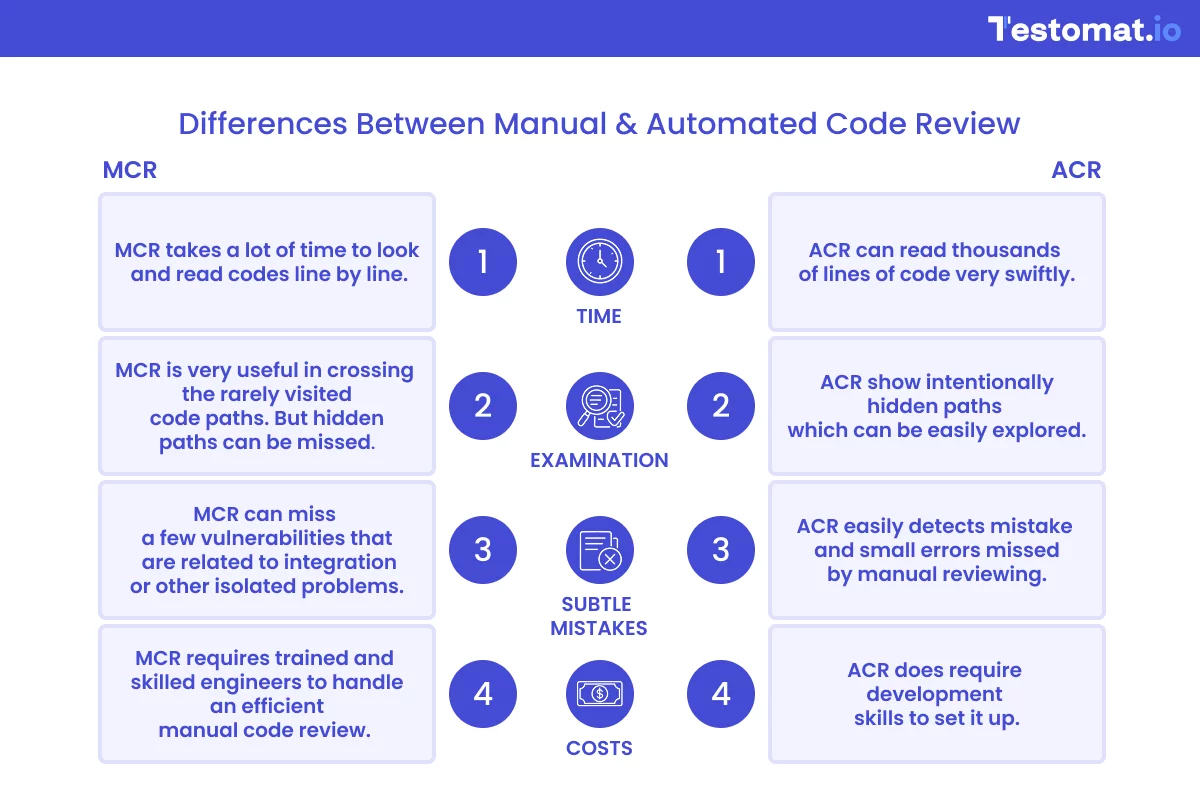

Manual vs. Automated Code Review

Code review is essential for maintaining high code quality, but manual and automated approaches differ significantly.

Manual code review has limits. It’s subjective, time-consuming, and highly variable across reviewers. What one engineer flags, another misses. Some focus on code style, others on logic. Many ignore security vulnerabilities entirely, simply due to lack of time or expertise.

This leads to inconsistent code, missed defects, and bloated review processes. It also creates fatigue for both developers and reviewers, especially when every pull request involves sifting through boilerplate and formatting issues instead of focusing on actual functionality. The reality: without support, manual reviews break down at scale.

Where Automated Code Review Fits in the Development Process

Automated code review works best when embedded throughout your software development process, not bolted on at the end.

- Coding Stage (IDE). Catch issues as you write code. Tools surface mistakes in real time, while context is fresh and changes are easy.

- Pull Request Stage (GitHub, GitLab, Bitbucket). Trigger scans automatically when a review request is opened. Flag violations before merging into main, reducing cycle time and improving team trust.

- Build and Deployment Stage (Jenkins, Azure Pipelines, CircleCI). Use quality gates to block builds that don’t meet defined thresholds, such as code coverage, complexity, or security risk.

- Monitoring Stage (Dashboards). Track trends, monitor repositories, and highlight vulnerabilities. Dashboards give engineering leads visibility into team-wide habits and technical debt.

This end-to-end presence ensures new code meets expectations before it becomes tech debt.
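A quality gate is ultimately a pass/fail decision on a handful of metrics. The sketch below assumes your coverage and analysis tools have already produced the numbers; the Metrics fields, the threshold values, and the function names are hypothetical. In CI, a step fails when its script exits with a non-zero status, which is what actually blocks the build.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    coverage: float      # percent of lines covered by tests
    max_complexity: int  # highest complexity of any changed function
    critical_vulns: int  # critical security findings in the change


# Hypothetical thresholds; tune them to your team's standards.
GATE = {"min_coverage": 80.0, "max_complexity": 10, "max_critical_vulns": 0}


def evaluate_gate(m: Metrics, gate: dict = GATE) -> list[str]:
    """Return every failed condition; an empty list means the gate passes."""
    failures = []
    if m.coverage < gate["min_coverage"]:
        failures.append(f"coverage {m.coverage:.1f}% < {gate['min_coverage']}%")
    if m.max_complexity > gate["max_complexity"]:
        failures.append(f"complexity {m.max_complexity} > {gate['max_complexity']}")
    if m.critical_vulns > gate["max_critical_vulns"]:
        failures.append(f"{m.critical_vulns} critical vulnerabilities")
    return failures


def exit_code(failures: list[str]) -> int:
    """CI treats any non-zero exit status as a failed step."""
    for reason in failures:
        print(f"Quality gate failed: {reason}")
    return 1 if failures else 0
```

A CI script would finish with sys.exit(exit_code(evaluate_gate(metrics))), so a failed gate fails the job and blocks the merge.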

Benefits of Automated Code Review

The value of automated code review is measurable, not theoretical. It shows up in your delivery metrics, onboarding speed, security posture, and team morale.

✅ 1. Cleaner Code, Faster

By offloading repetitive tasks like checking indentation, naming, or unused variables, reviewers can focus on logic, design, and architectural decisions. The result? Fewer comments per PR, faster turnaround, and better conversations.

✅ 2. Fewer Production Defects

Catch problems while they’re still cheap to fix, before they make it into production. Static code analysis surfaces potential issues that manual reviews may overlook, especially in large or unfamiliar codebases. Automated code reviews can use static analysis tools or custom rules to:

- Detect use of Thread.sleep() or timing-based waits.

- Flag tests that rely on non-deterministic behavior (e.g., random input, current system time).

- Catch poor synchronization or race conditions in test code.

- Warn against shared state between tests (e.g., using static variables improperly).

✅ 3. Consistent Standards

With automation, every line of new code gets the same scrutiny, regardless of who writes it. No more “it depends on who reviewed it.” You enforce coding standards and best practices as part of the pipeline.

✅ 4. Stronger Security

The best tools scan for vulnerabilities like SQL injection, cross-site scripting, and insecure API use. They also catch dangerous patterns like hardcoded credentials or risky file access. This shifts security left, where it belongs.
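Hardcoded-credential detection is the easiest of these checks to picture. The sketch below is deliberately simplistic: two illustrative regular expressions, one for the AKIA prefix that AWS uses for access key IDs and one for string assignments to names like password or api_key. Dedicated secret scanners use far larger rule sets plus entropy analysis, and detecting SQL injection or cross-site scripting requires data-flow analysis that a regex cannot do.

```python
import re

# Illustrative patterns only; real scanners maintain hundreds of rules.
SECRET_PATTERNS = [
    (re.compile(r"AKIA[0-9A-Z]{16}"), "possible AWS access key ID"),
    (
        re.compile(r"(?i)\b(password|passwd|secret|api_key|token)\s*[:=]\s*['\"][^'\"]{4,}['\"]"),
        "hardcoded credential",
    ),
]


def scan_for_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line number, description) for every line that looks like it holds a secret."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for pattern, description in SECRET_PATTERNS:
            if pattern.search(line):
                hits.append((lineno, description))
    return hits
```

Running a check like this on every pull request is what “shifting left” means in practice: the credential is flagged before it ever reaches the main branch.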

✅ 5. Better Onboarding

New team members don’t have to learn your standards by trial and error. The code review tool enforces them automatically, speeding up onboarding and reducing friction between juniors and seniors.

✅ 6. Developer Confidence

Clear, consistent feedback builds confidence. Programmers know what’s expected. They spend less time guessing and more time solving real problems.

Automated Code Review in the CI/CD Pipeline

Automated code review integrates directly into your CI/CD pipeline — typically right after a commit is pushed or a pull request is opened. It acts as an early filter before human review, catching common issues, enforcing style, and flagging risks.

Key touchpoints:

- Pre-commit: Formatters & linters clean up code instantly

- Pre-push / CI: AI review bots and coverage checks kick in

- PR stage: Dashboards summarize issues, risks, and quality trends

- Post-merge: Analytics track long-term code health across the repo

It works quietly in the background, guiding developers and testers without slowing them down. By the time code reaches human review, the basics are already covered — so people can focus on logic, architecture, and value.

The Trade-Offs of Automated Code Review You Need to Know

Automated review isn’t perfect. But its flaws are solvable and far outweighed by its advantages.

| Problem | Why It’s a Problem | How to Fix It |
|---|---|---|
| False Positives | Poorly configured rules overwhelm developers with irrelevant alerts. | Customize rule sets to your needs. Tune thresholds. Suppress noisy checks. Focus reviews on new code. |
| Overdependence | Automation catches syntax errors and known bug patterns, not intent or business logic. | Keep human reviewers in the loop. Automation assists, but judgment still requires a person. |
| Adoption | Tools that slow pull requests or create noise get ignored. | Prioritize ease of use. Integrate tightly into workflows. Dev teams adopt what helps them. |

Best Practices for Automated Code Review

Automated code review, when done right, reinforces engineering values: clarity, safety, consistency, and speed. When done wrong, it breeds friction, false confidence, and disengagement in development teams.

These best practices are here to help build an automated review process that earns trust, scales with your team, and quietly enforces quality without disrupting momentum.

✅ 1. Start with Precision, Not Coverage

The biggest mistake teams make is turning on too much too fast. Every alert costs attention. A single false positive can train developers to ignore all feedback, even the valid kind. So before you aim for 100% rule coverage, aim for signal over noise. Start with a focused rule set:

- Common style or lint violations your team already agrees on

- Fatal or undefined code behavior that must be controlled first

- Security vulnerabilities

Then layer in more checks gradually, based on real-world feedback. Start with the guardrails teams want, not the ones you think they need. Also assign clear ownership of the review process. The owner might be a recognized expert, such as the product architect who defined how the product should be implemented, or a group of experienced developers. Finally, agree on when reviews happen: during a dedicated code review meeting or in pair programming.

✅ 2. Customize Everything You Can

No off-the-shelf configuration fits your team perfectly. Automated review tools come with rules designed for everyone, which means they work best for no one in particular. Customize:

- Rulesets to match your coding standards, risk tolerance, and language use

- Severity levels (e.g. error vs. warning)

- Ignored paths or files (e.g. auto-generated code, legacy blobs)

- Thresholds (e.g. cyclomatic complexity, line length, duplication ratio)

The more the tooling reflects your codebase and your values, the more it will be trusted. If developers feel like they’re arguing with a machine, you’ve already lost.
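Most tools express these customizations in a configuration file. The Python sketch below shows the underlying idea as a post-processing step over raw findings: disabled rules are dropped, findings in ignored paths are skipped, and per-rule severity overrides are applied. The Finding and ReviewConfig types, the rule names, and the default values are hypothetical.

```python
from dataclasses import dataclass, field
from fnmatch import fnmatch


@dataclass
class Finding:
    rule: str
    path: str
    line: int
    severity: str = "warning"


@dataclass
class ReviewConfig:
    # Hypothetical team settings; real tools read these from a config file.
    disabled_rules: set[str] = field(default_factory=set)
    ignored_paths: list[str] = field(default_factory=lambda: ["generated/*", "legacy/*"])
    severity_overrides: dict[str, str] = field(default_factory=lambda: {"line-too-long": "info"})


def apply_config(findings: list[Finding], cfg: ReviewConfig) -> list[Finding]:
    """Drop disabled rules and ignored paths, then re-grade severities."""
    kept = []
    for f in findings:
        if f.rule in cfg.disabled_rules:
            continue
        # fnmatch's "*" also matches "/", so "generated/*" covers nested folders too.
        if any(fnmatch(f.path, pattern) for pattern in cfg.ignored_paths):
            continue
        kept.append(Finding(f.rule, f.path, f.line, cfg.severity_overrides.get(f.rule, f.severity)))
    return kept
```

Keeping this configuration in the repository means changes to the rules go through code review like everything else.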

✅ 3. Don’t Review the Past, Focus on What’s Changing

Flagging issues in legacy code is often pointless. You’ll either:

- Force devs to “fix” old code just to pass CI

- Or encourage them to ignore the tool entirely

Instead, narrow automated review to new and modified code only. This keeps feedback contextual and encourages continuous improvement without opening the door to massive refactoring or alert fatigue.
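Restricting review to changed code usually means intersecting findings with the lines a diff touches. This sketch assumes the diff comes from git diff -U0 (zero context lines), so each hunk header’s +start,count range lists exactly the added or modified lines in the new file; the function names and the (path, line, message) finding shape are hypothetical.

```python
import re

# Unified-diff hunk header, e.g. "@@ -10,0 +11,2 @@"; group 1 = new start, group 2 = new count.
HUNK = re.compile(r"^@@ -\d+(?:,\d+)? \+(\d+)(?:,(\d+))? @@")


def changed_lines(diff_text: str) -> dict[str, set[int]]:
    """Map each file in a zero-context diff to the line numbers added or modified in its new version."""
    changes: dict[str, set[int]] = {}
    current = None
    prev = ""
    for line in diff_text.splitlines():
        if line.startswith("+++ ") and prev.startswith("--- "):
            path = line[4:]
            current = path[2:] if path.startswith("b/") else path
            changes.setdefault(current, set())
        elif current is not None:
            match = HUNK.match(line)
            if match:
                start = int(match.group(1))
                # An omitted count means a one-line hunk; a count of 0 means a pure deletion.
                count = int(match.group(2)) if match.group(2) is not None else 1
                changes[current].update(range(start, start + count))
        prev = line
    return changes


def only_new_findings(findings: list[tuple[str, int, str]], diff_text: str) -> list[tuple[str, int, str]]:
    """Keep (path, line, message) findings that fall on changed lines."""
    changed = changed_lines(diff_text)
    return [f for f in findings if f[1] in changed.get(f[0], set())]
```

Legacy issues stay in the backlog instead of failing every pull request that happens to touch the same file.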

✅ 4. Integrate Feedback Where Development Lives

Automated review should meet developers in their flow, not pull them out of it. That means:

- Running in pull requests (e.g. GitHub/GitLab/Bitbucket comments)

- Surfacing feedback in CI pipelines, not a separate dashboard

- Avoiding annoying email reports or obscure web UIs
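On GitHub, one way to put findings directly in the pull request is GitHub Actions workflow commands: a job step that prints a line such as ::warning file=app.py,line=12::message produces an annotation on that line. The helper below formats findings this way and mirrors the percent-encoding that GitHub’s @actions/core toolkit applies to special characters; the function names are hypothetical, and GitLab or Bitbucket use different mechanisms.

```python
def escape_data(text: str) -> str:
    """Escape a message body: %, carriage return, and newline."""
    return text.replace("%", "%25").replace("\r", "%0D").replace("\n", "%0A")


def escape_property(text: str) -> str:
    """Property values (file, title) additionally escape ':' and ','."""
    return escape_data(text).replace(":", "%3A").replace(",", "%2C")


def to_annotation(level: str, path: str, line: int, message: str, title: str = "") -> str:
    """Format one finding as a workflow command; level is 'error', 'warning', or 'notice'."""
    props = f"file={escape_property(path)},line={line}"
    if title:
        props += f",title={escape_property(title)}"
    return f"::{level} {props}::{escape_data(message)}"


# Printing the command to stdout inside a GitHub Actions job creates the annotation.
print(to_annotation("warning", "src/app.py", 12, "Unused variable 'tmp'", title="no-unused-vars"))
# ::warning file=src/app.py,line=12,title=no-unused-vars::Unused variable 'tmp'
```

Developers then see the finding next to the code they changed, with no separate report to open.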

✅ 5. Be Deliberate About What Blocks Merges

Not all issues are created equal. If your automated system fails builds for minor style inconsistencies or low-risk warnings, developers will start gaming the system or switching it off. Use blocking only for:

- Critical security issues

- Build-breaking bugs

- License violations or known malicious dependencies

Everything else should be advisory: surfaced, but non-blocking. Let humans decide when it’s safe to proceed.
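The blocking/advisory split can be a simple lookup. In this sketch, only findings in a short list of categories fail the job; everything else is printed but never changes the exit status. The category names are hypothetical placeholders for whatever your scanners actually report.

```python
from dataclasses import dataclass

# Hypothetical category names; map them to what your tools actually emit.
BLOCKING_CATEGORIES = {"critical-security", "build-breaking", "license-violation", "malicious-dependency"}


@dataclass(frozen=True)
class Finding:
    category: str
    message: str


def triage(findings: list[Finding]) -> tuple[list[Finding], list[Finding]]:
    """Split findings into (blocking, advisory) lists."""
    blocking = [f for f in findings if f.category in BLOCKING_CATEGORIES]
    advisory = [f for f in findings if f.category not in BLOCKING_CATEGORIES]
    return blocking, advisory


def exit_status(findings: list[Finding]) -> int:
    """Non-zero only when something blocking was found; advisory items never fail CI."""
    blocking, advisory = triage(findings)
    for f in advisory:
        print(f"advisory: {f.message}")
    for f in blocking:
        print(f"BLOCKING: {f.message}")
    return 1 if blocking else 0
```

Because the blocking list is short and explicit, changing what stops a merge becomes a deliberate, reviewable decision.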

✅ 6. Treat Automation as an Assistant, Not an Authority

Automated tools are fast, consistent, and tireless, but they lack context. They can’t understand your product, your priorities, or your reasoning. That’s why code review still needs humans:

- To assess trade-offs

- To weigh design decisions

- To ask questions tools never will

✅ 7. Explain the Why Behind Every Rule

Tools often tell you what’s wrong, but not why it matters. When developers don’t understand the reasoning behind a check, they’ll treat it like red tape. That’s where documentation and context come in. Connect every rule to:

- A real-world risk (e.g. “This style prevents accidental type coercion”)

- A team standard

- A known bug pattern from your history

Better yet, invite feedback. Developers and QA engineers are more likely to respect rules they’ve had a say in shaping.
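One way to make the “why” impossible to miss is to store the rationale alongside each rule and print it with every finding. The Python sketch below uses two well-known Python pitfalls as example rules; the Rule structure and the rule IDs are hypothetical, and in practice the why field could just as well point to a team standard or a past incident.

```python
import ast
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    rule_id: str
    message: str
    why: str  # the risk, team standard, or past bug that justifies the rule
    matches: Callable[[ast.AST], bool]


def has_mutable_default(node: ast.AST) -> bool:
    """True for a function whose default argument is a list, dict, or set literal."""
    if not isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
        return False
    defaults = node.args.defaults + node.args.kw_defaults  # kw_defaults may hold None
    return any(isinstance(d, (ast.List, ast.Dict, ast.Set)) for d in defaults)


def is_bare_except(node: ast.AST) -> bool:
    return isinstance(node, ast.ExceptHandler) and node.type is None


RULES = [
    Rule("mutable-default", "Avoid mutable default arguments",
         "Defaults are evaluated once, so a list or dict default is shared between calls.",
         has_mutable_default),
    Rule("bare-except", "Catch specific exceptions",
         "A bare except also catches KeyboardInterrupt and SystemExit and can hide real failures.",
         is_bare_except),
]


def explain(source: str) -> list[str]:
    """Run every rule and attach its rationale to each finding."""
    report = []
    for node in ast.walk(ast.parse(source)):
        for rule in RULES:
            if rule.matches(node):
                report.append(f"line {node.lineno} [{rule.rule_id}] {rule.message}. Why: {rule.why}")
    return report
```

When the explanation travels with the finding, a flagged line reads as a lesson rather than red tape.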

Tips to Choose the Right Tool: What Actually Matters

Plenty of tools claim to “automate review,” but real value comes from depth, adaptability, and ease of use.

| Feature | Why It Matters |

| Static Code Analysis | Detects quality issues, and complexity across your codebase. |

| IDE Plugins | Deliver immediate feedback during coding — not after a push. |

| Seamless Integration | Plug into your existing tools: GitHub, GitLab, Azure Pipelines, or Jenkins. |

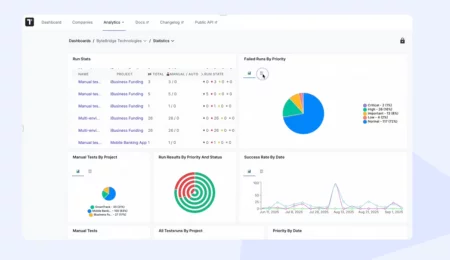

| Actionable Dashboards | Show metrics across repositories, track violations, and monitor improvements. |

| Configurable Quality Gates | Block merges if code changes don’t meet defined metrics (e.g., test coverage, duplication). |

| Minimal False Positives | Prioritize meaningful alerts. No developer wants to fight the tool. |

Tools for Automated Code Review

- ESLint + Prettier: Essential for JavaScript and TypeScript projects; ESLint flags problematic patterns, while Prettier handles code style cleanly and predictably.

- Codacy: Lightweight, flexible, solid JavaScript support, easy GitHub integration.

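- DeepSource: Clean UI, smart autofixes, focused on Python and Go.

- reviewdog: Posts findings from any linter as pull request review comments.

- Husky: Runs Git hooks, such as pre-commit linting, in JavaScript projects.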

- Testomat.io: A test management system that helps teams manage both automated and manual tests. It integrates with popular testing frameworks and CI/CD pipelines and can become an essential component of automated code review.

These tools work well across modern version control systems, offer rich configuration, and support most mainstream programming languages.

Automation + Human Review = Scalable Quality

The goal of automated code review isn’t to eliminate humans. It’s to elevate them. By automating the mechanical checks, you give your team time and space to focus on higher-order thinking: design, performance, scalability, and real collaboration. Done right, it becomes part of your software development process, not an obstacle to it.

Your delivery process enforces quality automatically. Your pull requests become cleaner. Your reviewers become more strategic. And your development teams ship faster, with fewer bugs and tighter security. That’s a tested process.

Automated code review doesn’t fix everything. But it fixes enough to change how you build. Start small. Choose a tool that fits your stack. Configure it to your standards. Run it on real code changes. Measure impact. Refine. The teams who do this don’t just move faster, they improve continuously. And today that’s the real competitive edge.

Frequently asked questions

What is an automated security code review?

An automated security code review uses tools to scan your source code for security vulnerabilities like SQL injection or insecure API use. Integrated into your CI/CD pipeline or IDE, it catches risks early, enforces best practices, and reduces manual errors, helping teams maintain strong software security consistently.

What is test automation code review?

Test automation code review checks the quality and effectiveness of your automated test scripts. It ensures tests are maintainable, stable, and meaningful. Platforms like Testomat.io integrate test review with your development workflow, linking test results and code changes to improve defect detection and streamline QA.

Is there any AI tool for code review?

Yes. AI-powered tools analyze code and tests using machine learning to spot bugs, style issues, and security flaws with fewer false positives. Testomat.io applies AI to test automation, helping teams prioritize failures and optimize coverage, integrating seamlessly with GitHub, JetBrains IDEs, and CI pipelines.

How does automated code review help detect vulnerabilities in GitHub projects?

When integrated into GitHub, automated review tools like Codacy or SonarQube run checks on each pull request. They scan for known vulnerabilities, unsafe dependencies, and poor security practices (e.g., hardcoded credentials or unsafe deserialization) before code is merged. This ensures security issues are caught early in the review process, not after deployment.

Why is automation in the IDE crucial for developer productivity?

Running automated reviews inside your IDE (like JetBrains, Eclipse, or VSCode) provides immediate feedback during coding, long before code reaches the PR stage. Developers fix issues when they’re cheapest to address, which improves turnaround, reduces review back-and-forth, and ensures fewer quality regressions make it to your CI pipelines.